tixiaoshan / lvi-sam

LVI-SAM: Tightly-coupled Lidar-Visual-Inertial Odometry via Smoothing and Mapping

Home Page: https://youtu.be/8CTl07D6Ibc

License: BSD 3-Clause "New" or "Revised" License

It's a nice SLAM!!

I would like to know which camera lens was used for the handheld dataset.

The paper only gives information about the camera itself.

Could you share the lens information?

Hello, I am running LVI-SAM on a Jetson NX development board. catkin_make completed, but when I tried roslaunch, the following nodes died:

[ INFO] [1636441314.154527795]: ----> Visual Odometry Estimator Started.

[ INFO] [1636441314.487589783]: ----> Visual Loop Detection Started.

[ INFO] [1636441314.585487422]: ----> Visual Feature Tracker Started.

[lvi_sam_visual_odometry-9] process has died [pid 13242, exit code -11, cmd /home/nvidia/catkin_sam/devel/lib/lvi_sam/lvi_sam_visual_odometry __name:=lvi_sam_visual_odometry __log:=/home/nvidia/.ros/log/e982fd3c-412a-11ec-ad02-48b02d3da899/lvi_sam_visual_odometry-9.log].

log file: /home/nvidia/.ros/log/e982fd3c-412a-11ec-ad02-48b02d3da899/lvi_sam_visual_odometry-9*.log

[lvi_sam_visual_odometry-9] restarting process

process[lvi_sam_visual_odometry-9]: started with pid [13528]

[ INFO] [1636441314.871099244]: ----> Visual Odometry Estimator Started.

[ INFO] [1636441314.993614341]: ----> Lidar IMU Preintegration Started.

[lvi_sam_visual_loop-10] process has died [pid 13249, exit code -11, cmd /home/nvidia/catkin_sam/devel/lib/lvi_sam/lvi_sam_visual_loop __name:=lvi_sam_visual_loop __log:=/home/nvidia/.ros/log/e982fd3c-412a-11ec-ad02-48b02d3da899/lvi_sam_visual_loop-10.log].

log file: /home/nvidia/.ros/log/e982fd3c-412a-11ec-ad02-48b02d3da899/lvi_sam_visual_loop-10*.log

[lvi_sam_visual_loop-10] restarting process

[ INFO] [1636441315.091523468]: ----> Lidar Cloud Deskew Started.

process[lvi_sam_visual_loop-10]: started with pid [13703]

[ INFO] [1636441315.169702089]: ----> Lidar Feature Extraction Started.

[lvi_sam_visual_feature-8] process has died [pid 13231, exit code -11, cmd /home/nvidia/catkin_sam/devel/lib/lvi_sam/lvi_sam_visual_feature __name:=lvi_sam_visual_feature __log:=/home/nvidia/.ros/log/e982fd3c-412a-11ec-ad02-48b02d3da899/lvi_sam_visual_feature-8.log].

log file: /home/nvidia/.ros/log/e982fd3c-412a-11ec-ad02-48b02d3da899/lvi_sam_visual_feature-8*.log

My environment is:

Ubuntu 18.04

ROS Melodic

GTSAM 4.0.2

Ceres 1.14.0

OpenCV 4.1.1

PCL 1.8

How can I solve the problem?

It would be great if you could provide datasets and configuration files.

Thanks.

I have already built this repo on Ubuntu 20.04 with PCL 1.10 and C++14.

But when I run rosbag play garden.bag, I get the following in the terminal:

[ INFO] [1619515515.441234729]: ----> Visual Odometry Estimator Started.

[ INFO] [1619515515.465279140]: ----> Lidar IMU Preintegration Started.

[ INFO] [1619515515.475755274]: ----> Lidar Cloud Deskew Started.

[ INFO] [1619515515.478325008]: ----> Lidar Feature Extraction Started.

[ INFO] [1619515515.482130955]: ----> Visual Loop Detection Started.

[ INFO] [1619515515.489539180]: ----> Visual Feature Tracker Started.

[ INFO] [1619515515.503315593]: ----> Lidar Map Optimization Started.

[lvi_sam_mapOptmization-7] process has died [pid 72715, exit code -11, cmd /home/bie/ros_ws/Lvi_Sam/devel/lib/lvi_sam/lvi_sam_mapOptmization __name:=lvi_sam_mapOptmization __log:=/home/bie/.ros/log/79586428-a73a-11eb-9e5c-244bfecec228/lvi_sam_mapOptmization-7.log].

log file: /home/bie/.ros/log/79586428-a73a-11eb-9e5c-244bfecec228/lvi_sam_mapOptmization-7*.log

[lvi_sam_mapOptmization-7] restarting process

process[lvi_sam_mapOptmization-7]: started with pid [72894]

[ INFO] [1619515533.629490956]: ----> Lidar Map Optimization Started.

I think it's because my PCL version differs from the one your repo was built against.

VINS-Mono can save a pose graph and reuse it for relocalization.

Can LVI-SAM save a pose graph as well?

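As of 2021/04/29 (Beijing time), the dataset on Google Drive cannot be downloaded. The page shows: "Downloading this file would exceed the download quota, so it cannot be downloaded at this time."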

Hello, if I am using a Logitech C930e instead of a fisheye camera, which parameters in the code should I modify? Thank you very much!

I think that each time a new odometry message arrives, imuIntegratorIMU should reset its optimized bias to improve the overall system's stability. Also, repropagation does not seem to be used except in the narrow case where the IMU and odometry run at specific frequencies.

Hello, I have a few questions:

I extracted the GPS and IMU data from the handheld dataset and found that the start point and the end point do not coincide. How should the accuracy of the method be evaluated?

The SLAM path is expressed in a relative frame. How do you align it with the ENU frame of the GPS path?

The GPS and SLAM paths have different sampling rates, and their timestamps are not aligned either. How is the RMSE w.r.t. GPS computed in that case?
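One common way to handle the last two questions is to resample the SLAM path at the GPS timestamps, rigidly align the two paths, and then compute the RMSE over the matched pairs. The following is a minimal sketch of that idea and not the authors' evaluation procedure. It assumes the GPS fixes have already been converted to local ENU meters, that both paths are sorted by time and share a clock, and that only the horizontal plane is aligned. The names `Stamped2D`, `interpolateAt`, and `alignedRmse` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One stamped horizontal position: time in seconds, x/y in meters.
struct Stamped2D { double t, x, y; };

// Linearly interpolate a time-sorted trajectory at time t.
// Returns false when t lies outside the trajectory's time span.
bool interpolateAt(const std::vector<Stamped2D>& traj, double t, Stamped2D* out) {
    assert(out != nullptr);
    if (traj.size() < 2 || t < traj.front().t || t > traj.back().t) return false;
    std::size_t i = 1;
    while (traj[i].t < t) ++i;  // first sample at or after t
    const Stamped2D& a = traj[i - 1];
    const Stamped2D& b = traj[i];
    const double r = (b.t > a.t) ? (t - a.t) / (b.t - a.t) : 0.0;
    *out = Stamped2D{t, a.x + r * (b.x - a.x), a.y + r * (b.y - a.y)};
    return true;
}

// Resample the SLAM path at the GPS timestamps, align it to the GPS path with
// the closed-form least-squares 2D rotation and translation, and return the
// RMSE in meters. Returns -1.0 if fewer than two GPS samples overlap in time.
double alignedRmse(const std::vector<Stamped2D>& slam, const std::vector<Stamped2D>& gps) {
    std::vector<Stamped2D> s, g;  // time-matched pairs
    for (const Stamped2D& p : gps) {
        Stamped2D q;
        if (interpolateAt(slam, p.t, &q)) { s.push_back(q); g.push_back(p); }
    }
    const std::size_t n = s.size();
    if (n < 2) return -1.0;

    double sx = 0, sy = 0, gx = 0, gy = 0;  // centroids of both point sets
    for (std::size_t i = 0; i < n; ++i) {
        sx += s[i].x; sy += s[i].y; gx += g[i].x; gy += g[i].y;
    }
    sx /= n; sy /= n; gx /= n; gy /= n;

    double dot = 0, cross = 0;  // accumulators that determine the optimal yaw
    for (std::size_t i = 0; i < n; ++i) {
        const double ax = s[i].x - sx, ay = s[i].y - sy;
        const double bx = g[i].x - gx, by = g[i].y - gy;
        dot += ax * bx + ay * by;
        cross += ax * by - ay * bx;
    }
    const double yaw = std::atan2(cross, dot);
    const double c = std::cos(yaw), sn = std::sin(yaw);

    double sse = 0;  // sum of squared residuals after alignment
    for (std::size_t i = 0; i < n; ++i) {
        const double ax = s[i].x - sx, ay = s[i].y - sy;
        const double ex = c * ax - sn * ay + gx - g[i].x;
        const double ey = sn * ax + c * ay + gy - g[i].y;
        sse += ex * ex + ey * ey;
    }
    return std::sqrt(sse / n);
}
```

GPS samples that fall outside the SLAM path's time span are skipped rather than extrapolated, so a constant clock offset between the two recordings would have to be removed before calling `alignedRmse`.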

params_camera.yaml:

lidar_to_cam_tx: 0.05

lidar_to_cam_ty: -0.07

lidar_to_cam_tz: -0.07

lidar_to_cam_rx: 0.0

lidar_to_cam_ry: 0.0

lidar_to_cam_rz: -0.04

The lidar's z-axis points up, while the camera's z-axis points forward.

But in the YAML, the rotation (roll, pitch, yaw) is 0, 0, -0.04,

which describes almost the same coordinate system for both sensors.

Thank you very much for your work. After reading your paper and code, I have the following questions:

malloc(): memory corruption

[lvi_sam_mapOptmization-6] process has died [pid 13598, exit code -6, cmd /home/mwy/lvisam/devel/lib/lvi_sam/lvi_sam_mapOptmization __name:=lvi_sam_mapOptmization __log:=/home/mwy/.ros/log/f460d3d2-9099-11ec-a978-9061ae86e6b5/lvi_sam_mapOptmization-6.log].

log file: /home/mwy/.ros/log/f460d3d2-9099-11ec-a978-9061ae86e6b5/lvi_sam_mapOptmization-6*.log

How can I solve this problem? I installed GTSAM following your instructions.

I am sure I used cmake -DGTSAM_BUILD_WITH_MARCH_NATIVE=OFF ..

My PCL version is 1.8.

I understand that you recorded your own dataset. How did you time-synchronize all of your sensors: in hardware or in software?

Hi. First, I really appreciate you sharing your great work.

I tested both LIO-SAM and LVI-SAM on a quadruped robot platform with a Velodyne LiDAR in Gazebo, and got quite unstable results from LVI-SAM, which were worse than those from LIO-SAM.

I suspect I am using the wrong parameters and would like to ask whether that is the case.

Could you please look over my parameters and the result video clip?

parameters: https://github.com/engcang/SLAM-application/tree/main/lvi-sam

video clip: https://youtu.be/RCY_q_d2Xm0

Thank you in advance.

Hello, I would like to know whether this work can be used to fuse only a camera and a lidar, or whether it can work with a 6-axis IMU.

Hello @TixiaoShan,

Thanks for your great work! I couldn't download the test data from Google Drive because it is larger than 10 GB. Could you consider uploading your data to Baidu Netdisk? That would be more convenient for people in China.

Hi guys,

How did you plot the trajectory on Google Maps?

Is there a problem in the LVI-SAM code? It suddenly stopped running, and I am quite worried!

catkin_ws/src/LVI-SAM/src/lidar_odometry/imuPreintegration.cpp:434: undefined reference to ‘tf::TransformBroadcaster::sendTransform(tf::StampedTransform const&)’

Hello, LVI-SAM ran normally the first few times, but recently I have been running into the following problem.

How can I solve it?

#############################################################################################

*** stack smashing detected **: /home/.../catkin_ws/devel/lib/lvi_sam/lvi_sam_mapOptmization terminated

[lvi_sam_mapOptmization-6] process has died [pid 23959, exit code -6, cmd /home/.../catkin_ws/devel/lib/lvi_sam/lvi_sam_mapOptmization __name:=lvi_sam_mapOptmization __log:=/home/.../.ros/log/7e9a70bc-4752-11ec-87cb-5405dbb6548a/lvi_sam_mapOptmization-6.log].

log file: /home/.../.ros/log/7e9a70bc-4752-11ec-87cb-5405dbb6548a/lvi_sam_mapOptmization-6.log

[lvi_sam_mapOptmization-6] restarting process

process[lvi_sam_mapOptmization-6]: started with pid [24765]

##############################################################################################

I have a solid-state lidar, a Livox Horizon. Can I use it to run LVI-SAM?

[lvi_sam_mapOptmization-6] process has died [pid 22275, exit code -6, cmd /home/reeman/wul/LIO-SAM/lvi-sam/devel/lib/lvi_sam/lvi_sam_mapOptmization __name:=lvi_sam_mapOptmization __log:=/home/reeman/.ros/log/64900b78-b201-11eb-ba29-353bc025c460/lvi_sam_mapOptmization-6.log].

log file: /home/reeman/.ros/log/64900b78-b201-11eb-ba29-353bc025c460/lvi_sam_mapOptmization-6*.log

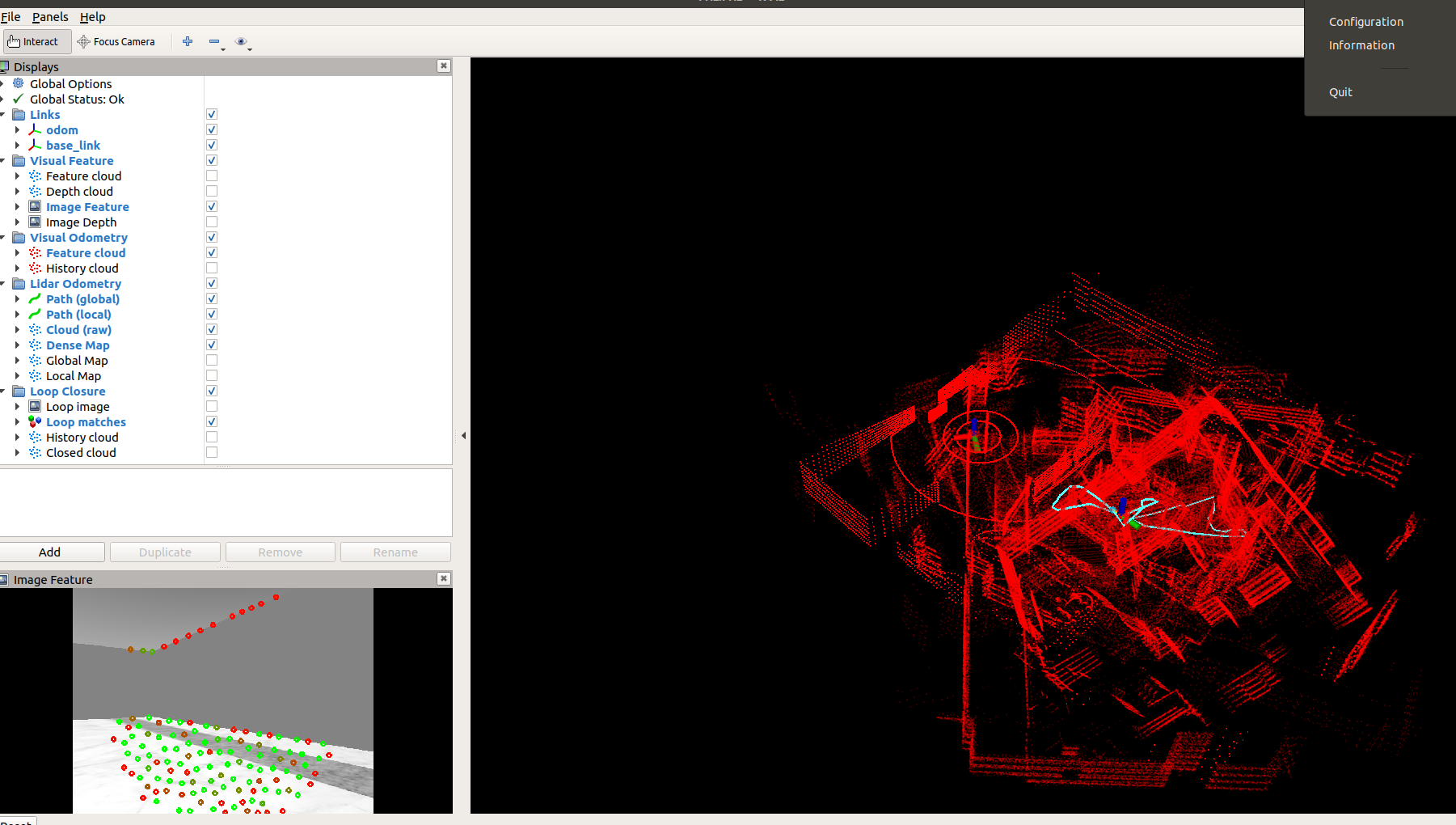

Hello, I cannot understand why the rviz visualization is wrong while the two position topics (/lvi_sam/lidar/mapping/odometry and /lvi_sam/vins/odometry/odometry) give me a correct position estimate.

I have tested the same dataset with LIO-SAM, and the resulting map is almost perfect (as is the position).

Can someone explain this problem, please?

@TixiaoShan, could you please explain this behavior?

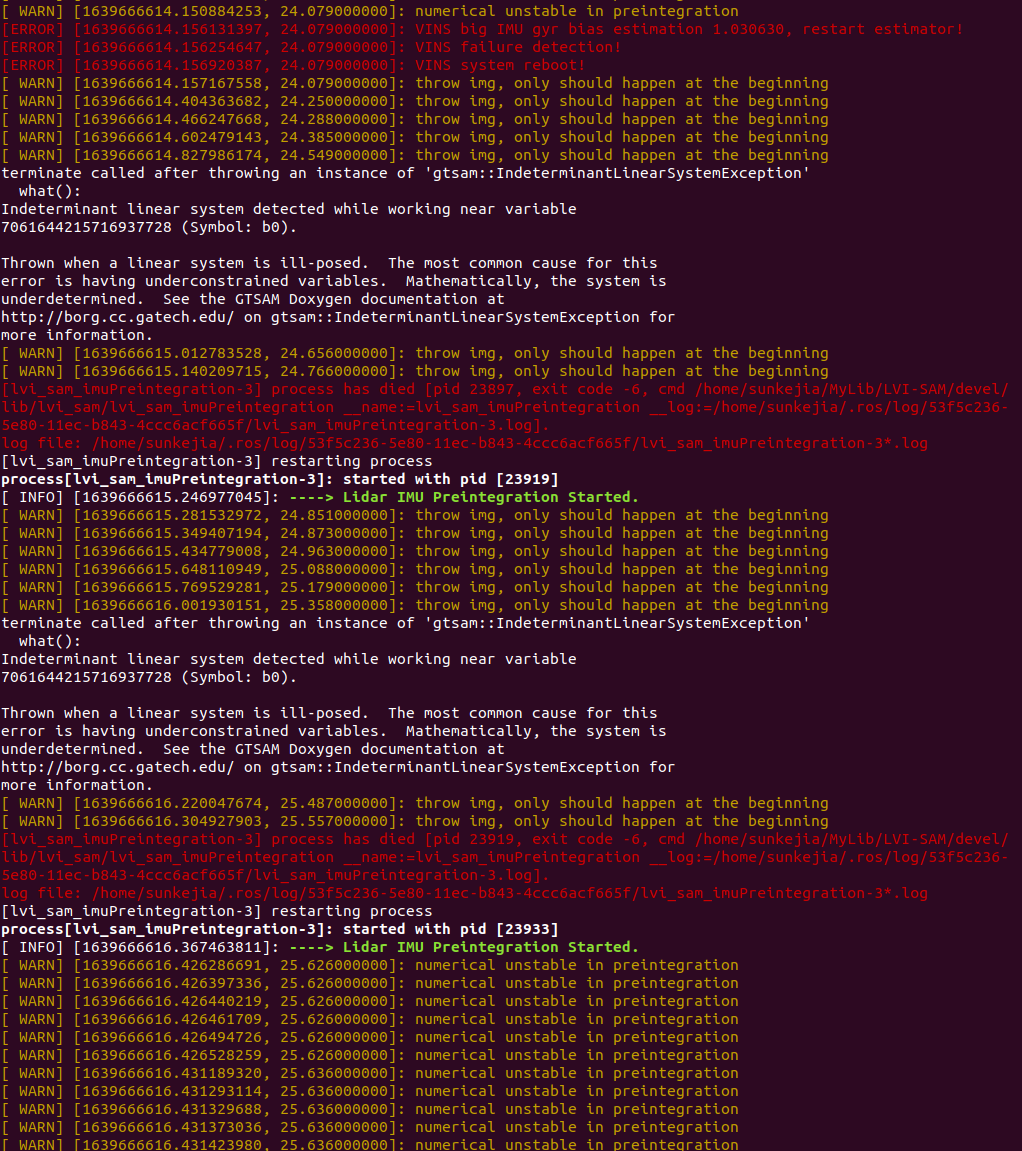

Hi developer, I am trying to use your project in my research,

but I keep receiving the error below:

"throw img, only should happen at the beginning"

I used rosbag to record imu_raw and RealSense images,

but the system keeps failing to initialize.

Can you help me out?

I can't get this code to run with the calibration parameters provided by the KITTI raw dataset. Has anyone else encountered this problem?

Hi, I noticed there are two different sets of IMU intrinsics (one in lidar_params.yaml and another in camera_params.yaml). Can you explain why?

@TixiaoShan

Thanks for your great work!

The LVI-SAM paper says the algorithm includes failure detection for the LIS (LiDAR-inertial system).

However, maybe because I'm a newbie, I couldn't find the corresponding part of the code.

Which part of the code implements the LIS failure detection?

Thanks,

How do I compute the transformation from lidar to camera when I know the transformation between the camera and the IMU and the transformation between the lidar and the camera?
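In general, an unknown extrinsic can be obtained by chaining and inverting the known rigid transforms. The sketch below is illustrative only and assumes, as one possible reading of the question, that the two known extrinsics are both expressed relative to the IMU (`T_imu_cam` and `T_imu_lidar`). The `Rigid` type and the function names are hypothetical and are not taken from the LVI-SAM code.

```cpp
// Rigid transform T_a_b: maps a point expressed in frame b into frame a.
struct Rigid {
    double R[3][3];  // rotation matrix, row-major
    double t[3];     // translation, in the units of the calibration
};

// compose(T_a_b, T_b_c) returns T_a_c.
Rigid compose(const Rigid& ab, const Rigid& bc) {
    Rigid ac{};  // zero-initialized accumulator
    for (int i = 0; i < 3; ++i) {
        ac.t[i] = ab.t[i];
        for (int j = 0; j < 3; ++j) {
            ac.t[i] += ab.R[i][j] * bc.t[j];
            for (int k = 0; k < 3; ++k) ac.R[i][j] += ab.R[i][k] * bc.R[k][j];
        }
    }
    return ac;
}

// inverse(T_a_b) returns T_b_a, i.e. rotation R^T and translation -R^T * t.
Rigid inverse(const Rigid& ab) {
    Rigid ba{};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            ba.R[i][j] = ab.R[j][i];
            ba.t[i] -= ab.R[j][i] * ab.t[j];
        }
    }
    return ba;
}

// Assumed setup: both known extrinsics are relative to the IMU, so
// T_cam_lidar = inverse(T_imu_cam) * T_imu_lidar.
Rigid lidarToCamera(const Rigid& T_imu_cam, const Rigid& T_imu_lidar) {
    return compose(inverse(T_imu_cam), T_imu_lidar);
}
```

The same two helpers cover the other cases: if the known pair is `T_cam_imu` and `T_cam_lidar`, then `compose(inverse(T_cam_imu), T_cam_lidar)` gives `T_imu_lidar`. Keeping the frame subscripts in the variable names makes it easy to check that adjacent frames cancel.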

When I run the code there's always "[ WARN] [1633796860.060372652]: Point cloud timestamp not available, deskew function disabled, system will drift significantly!"

The lvi_sam_imageProjection node then crashes and restarts over and over. I used gdb to check the error, and it points to the line "deskewFlag(0)".

Why is the ImageProjection constructor being run again and again?

Thank you for sharing this awesome work.

I can't reproduce the results with the shared dataset (https://drive.google.com/drive/folders/1q2NZnsgNmezFemoxhHnrDnp1JV_bqrgV?usp=sharing).

It works fine at the beginning. However, at several points warning messages are displayed and the trajectory drifts.

I also checked the library versions (gtsam-4.0.2, ceres-solver-1.14.0).

Could the PC specs have an impact?

I installed Linux on a MacBook Pro A1707.

Processor: 2.6 GHz Intel Core i7 (I7-6700HQ)

RAM: 16GB

GPU: Radeon Pro 450

Warning message:

Large bias, reset IMU-preintegration!

Large velocity, reset IMU-preintegration!

Hi everyone, has anyone tried running LVI-SAM with their own sensors? Could we exchange experiences?

Hi,

How did you get the extrinsics between the lidar and the camera?

Any ideas?

Hi Tixiao,

I am running some experiments with LVI-SAM on the NTU VIRAL public dataset (download page: https://ntu-aris.github.io/ntu_viral_dataset/), with the aim of adding LVI-SAM to the list of applicable methods on the NTU VIRAL website.

However, LVI-SAM diverges after a few minutes. This does not happen if the visual nodes are disabled. Could you please take a look at the configuration and suggest better settings?

The forked repository can be found here:

https://github.com/brytsknguyen/LVI-SAM

Working examples of LIO-SAM and VINS-Mono on the NTU VIRAL datasets can be found here:

https://github.com/brytsknguyen/LIO-SAM

https://github.com/brytsknguyen/VINS-Mono

How did you get the accelerometer and gyroscope bias parameters for your IMU?

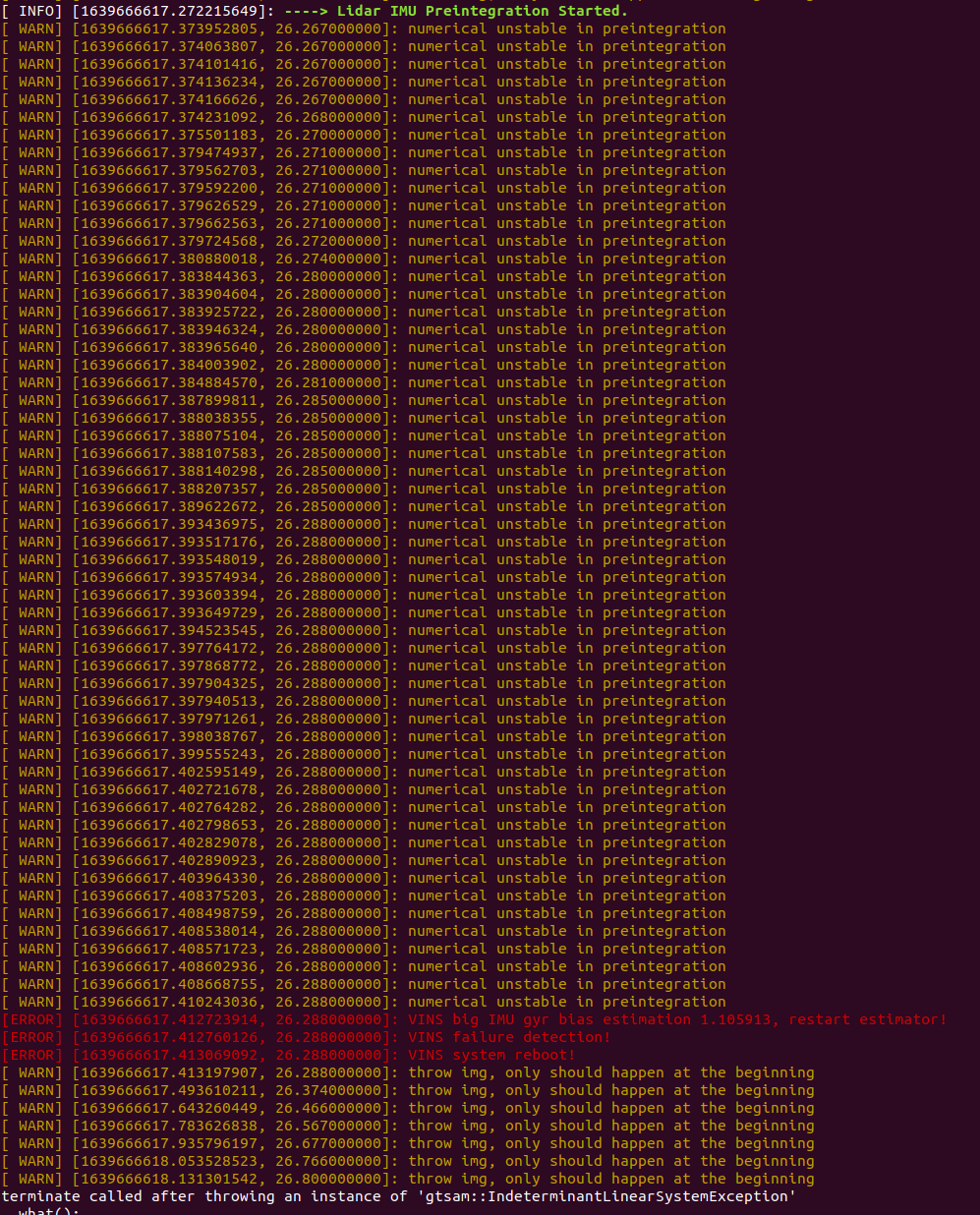

Hi. When I run LVI-SAM in a Gazebo simulation environment, the maps do not overlap.

Many WARN and ERROR messages are also printed in the terminal.

Here are my .xacro and params.yaml files:

https://github.com/AntarcticaSkj/file-about-lvi-sam

Hello, I extracted the GPS data from the handheld dataset and found that the start and end points do not coincide. How should the accuracy of the method be evaluated?

Hello! When I run roslaunch lvi-sam run.launch, I run into this problem:

/usr/include/boost/smart_ptr/shared_ptr.hpp:648: typename boost::detail::sp_member_access::type boost::shared_ptr::operator->() const [with T = const sensor_msgs::PointCloud_<std::allocator >; typename boost::detail::sp_member_access::type = const sensor_msgs::PointCloud_<std::allocator >*]: Assertion `px != 0' failed.

I am using gtsam-4.0.0, because 4.0.2 always fails with "error: static assertion failed: Error: GTSAM was built against a different version of Eigen." I tried adding set(GTSAM_USE_SYSTEM_EIGEN ON) to CMakeLists.txt, but that never worked, so I switched to gtsam-4.0.0, which built successfully.

How can I resolve this issue? Please help me. Thanks!

Sorry for the basic question, but I cannot work out which topic carries the position.

I understand that /lvi_sam/lidar/mapping/odometry comes from the LIDAR+IMU fusion (LIO-SAM output) and /lvi_sam/vins/odometry/odometry comes from the CAMERA+IMU fusion (VINS-Mono output).

But which topic gives the fusion of both algorithms?

How do I save the map?

Hello, I'm trying to test the code and evaluate its performance on my own rosbag, which contains data from an IMU, a single-channel lidar, and a monocular camera. How should I modify the code to make it compatible?

[ 34%] Building CXX object LVI-SAM/CMakeFiles/lvi_sam_visual_feature.dir/src/visual_odometry/visual_feature/camera_models/EquidistantCamera.cc.o

/home/wuxin/YZH/algorithm_learning/lvi_ws/src/LVI-SAM/src/visual_odometry/visual_feature/camera_models/EquidistantCamera.cc: In member function ‘virtual void camodocal::EquidistantCamera::readParameters(const std::vector<double, std::allocator >&)’:

/home/wuxin/YZH/algorithm_learning/lvi_ws/src/LVI-SAM/src/visual_odometry/visual_feature/camera_models/EquidistantCamera.cc:637:29: warning: comparison between signed and unsigned integer expressions [-Wsign-compare]

if (parameterVec.size() != parameterCount())

~~~~~~~~~~~~~~~~~~~~^~~~~~~~~~~~~~~~~~~

c++: internal compiler error: Killed (program cc1plus)

Please submit a full bug report,

with preprocessed source if appropriate.

See file:///usr/share/doc/gcc-7/README.Bugs for instructions.

LVI-SAM/CMakeFiles/lvi_sam_visual_feature.dir/build.make:62: recipe for target 'LVI-SAM/CMakeFiles/lvi_sam_visual_feature.dir/src/visual_odometry/visual_feature/feature_tracker.cpp.o' failed

make[2]: *** [LVI-SAM/CMakeFiles/lvi_sam_visual_feature.dir/src/visual_odometry/visual_feature/feature_tracker.cpp.o] Error 4

make[2]: *** Waiting for unfinished jobs....

Scanning dependencies of target lvi_sam_generate_messages

[ 34%] Built target lvi_sam_generate_messages

[ 35%] Building CXX object LVI-SAM/CMakeFiles/lvi_sam_visual_odometry.dir/src/visual_odometry/visual_estimator/feature_manager.cpp.o

c++: internal compiler error: Killed (program cc1plus)

Please submit a full bug report,

with preprocessed source if appropriate.

See file:///usr/share/doc/gcc-7/README.Bugs for instructions.

LVI-SAM/CMakeFiles/lvi_sam_visual_feature.dir/build.make:86: recipe for target 'LVI-SAM/CMakeFiles/lvi_sam_visual_feature.dir/src/visual_odometry/visual_feature/feature_tracker_node.cpp.o' failed

make[2]: *** [LVI-SAM/CMakeFiles/lvi_sam_visual_feature.dir/src/visual_odometry/visual_feature/feature_tracker_node.cpp.o] Error 4

[ 37%] Building CXX object LVI-SAM/CMakeFiles/lvi_sam_visual_odometry.dir/src/visual_odometry/visual_estimator/parameters.cpp.o

c++: internal compiler error: Killed (program cc1plus)

Please submit a full bug report,

with preprocessed source if appropriate.

See file:///usr/share/doc/gcc-7/README.Bugs for instructions.

LVI-SAM/CMakeFiles/lvi_sam_mapOptmization.dir/build.make:62: recipe for target 'LVI-SAM/CMakeFiles/lvi_sam_mapOptmization.dir/src/lidar_odometry/mapOptmization.cpp.o' failed

make[2]: *** [LVI-SAM/CMakeFiles/lvi_sam_mapOptmization.dir/src/lidar_odometry/mapOptmization.cpp.o] Error 4

CMakeFiles/Makefile2:1702: recipe for target 'LVI-SAM/CMakeFiles/lvi_sam_mapOptmization.dir/all' failed

make[1]: *** [LVI-SAM/CMakeFiles/lvi_sam_mapOptmization.dir/all] Error 2

make[1]: *** Waiting for unfinished jobs....

Hi, I am not able to run LVI-SAM. I am getting a segmentation fault (core dumped) in the MapOptimization node.

    void findPosition(double relTime, float *posXCur, float *posYCur, float *posZCur)
    {
        *posXCur = 0; *posYCur = 0; *posZCur = 0;

        // if (cloudInfo.odomAvailable == false || odomDeskewFlag == false)
        //     return;

        // float ratio = relTime / (timeScanNext - timeScanCur);

        // *posXCur = ratio * odomIncreX;
        // *posYCur = ratio * odomIncreY;
        // *posZCur = ratio * odomIncreZ;
    }

As far as I understand, this results in the point cloud being deskewed in rotation only. Is this correct?
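With the interpolation commented out as in the snippet above, findPosition always writes zero to all three outputs, so it contributes no translation. For reference, the disabled lines amount to a linear interpolation of the scan's total translation over the scan duration. The following is a standalone sketch of that computation only. The `Translation` type, the function name, and the explicit parameters are hypothetical stand-ins for the member variables in the original, and the early-return check on odometry availability is left out.

```cpp
#include <cassert>

// Translation offset of one lidar point relative to the start of the scan.
struct Translation { float x, y, z; };

// A point captured relTime seconds into a scan lasting scanDuration seconds
// receives the fraction relTime / scanDuration of the total translation
// (increX, increY, increZ) accumulated over that scan.
Translation interpolateTranslation(double relTime, double scanDuration,
                                   float increX, float increY, float increZ) {
    assert(scanDuration > 0.0);  // guard against division by zero
    const float ratio = static_cast<float>(relTime / scanDuration);
    return Translation{ratio * increX, ratio * increY, ratio * increZ};
}
```

For example, a point captured halfway through a scan during which the sensor moved 1 m along x would be assigned an x offset of 0.5 m.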

Thank you for your work. I have a question about the dataset:

I printed the timestamps of the dataset you provided and found something odd in the IMU data, as shown below. The IMU is not uniformly sampled. Are these timestamps correct, or is something wrong?

imu :1592423047.652287 imu :1592423047.653273 imu :1592423047.654281 imu :1592423047.658288 imu :1592423047.659220 imu :1592423047.660274 imu :1592423047.663302 imu :1592423047.664251 imu :1592423047.665274 imu :1592423047.669284 imu :1592423047.676231 imu :1592423047.677208 imu :1592423047.678205 imu :1592423047.679205 imu :1592423047.680207 imu :1592423047.682220 imu :1592423047.683204 imu :1592423047.684206 imu :1592423047.687218 imu :1592423047.688215 imu :1592423047.689263 imu :1592423047.693287 imu :1592423047.694272 imu :1592423047.695210 imu :1592423047.700286 imu :1592423047.701271 imu :1592423047.702214 imu :1592423047.705285 imu :1592423047.706218 imu :1592423047.707212 imu :1592423047.711227 imu :1592423047.712312 imu :1592423047.713274 imu :1592423047.717261 imu :1592423047.718269 imu :1592423047.719213 imu :1592423047.724327 imu :1592423047.725272 imu :1592423047.726282 imu :1592423047.729284 imu :1592423047.730273
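A quick way to quantify this kind of irregularity is to summarize the intervals between consecutive stamps. The sketch below is illustrative only; `IntervalStats`, `summarizeIntervals`, and the `maxExpectedDt` threshold are hypothetical names, and the threshold is something the user picks from the sensor's nominal rate.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Summary of the spacing between consecutive timestamps, in seconds.
struct IntervalStats {
    double minDt;
    double maxDt;
    double meanDt;
    std::size_t gaps;  // number of intervals longer than the threshold
};

// stamps must be sorted ascending and hold at least two entries.
// An interval counts as a gap when it exceeds maxExpectedDt.
IntervalStats summarizeIntervals(const std::vector<double>& stamps, double maxExpectedDt) {
    assert(stamps.size() >= 2);
    const std::size_t n = stamps.size() - 1;  // number of intervals
    IntervalStats s{stamps[1] - stamps[0], stamps[1] - stamps[0], 0.0, 0};
    double sum = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        const double dt = stamps[i + 1] - stamps[i];
        if (dt < s.minDt) s.minDt = dt;
        if (dt > s.maxDt) s.maxDt = dt;
        if (dt > maxExpectedDt) ++s.gaps;
        sum += dt;
    }
    s.meanDt = sum / static_cast<double>(n);
    return s;
}
```

Note that a double holding a Unix-epoch timestamp of this magnitude resolves only to about a quarter of a microsecond, which is still well below the millisecond-scale intervals listed above.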

Hi, thanks for your great work.

The code works well with your data, but the VINS part does not seem to work well with my data. I'm confused about what the comment “question about q_lidar_to_cam q_lidar_to_cam_eigen” in the code means. I hope to hear from you. Thanks again.

@TixiaoShan

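When running experiments with handheld.bag, I found that the result occasionally jumps. Are there parameters in the program that still need to be tuned for this dataset?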
