Wang Han [homepage]

- :🌱: currently working in Huawei Singapore (Todmind Program).

- 🎓 received Ph.D. in Nanyang Technological University.

- 🔨 specialize in computer vision and robotics.

Fast LOAM: Fast and Optimized Lidar Odometry And Mapping for indoor/outdoor localization IROS 2021

License: Other

Dear Doctor: Thanks for your wonderful modified version of LOAM algorithm. It's really great and helpful to us. However, I think the pointCloudBuf data in odomEstimationNode should be free ? Because it won't be used in odometry estimation. Am I right ?

Hi, I have struggled in the kitti translation error: In your readme, it shows that the final translation error is 0.51%, however in my test, it is about 1.2%,

I run the code on the Macbook-Pro with i7 and also in my desktop with i5, it gives me the same result ? Is it possible have some trouble with the CPU ?

floam/src/lidarOptimization.cpp

Line 33 in 9fdb2ca

Hi,

Why the size of jacobian is 1x7 rather than 1x6 ?

Can you please elaborate on how to generate Kitt output format (row aligned translational and rotational matrix) ?

I have test your floam, It is very good. I want to get the same colored pointlouded as your video. How can I got the pointcloud? Is the source codes is open?

Thank you very much!

Hi, I want to reproduce the results of seq05 with aloam and your bag, and I receive the results with the command "rosbag play -r 0.3 --clock $PATH_OF_BAG" and "rostopic echo /aft_mapped_to_init/pose/pose", but I get 2762 poses, which is one pose more than gt. How did you reproduce the aloam result? Really need your help !

Hello.

Thank you very much for the FLOAM code.

I am working with a UGV traveling at 10 km / h that has a Velodyne VLP16 and a Jetson Xavier AGX. I have tried other optimizers from the Ceres library.

I modified the code (odomEstimationClass) on line 63, changing ceres :: DENSE_QR to ceres :: SPARSE_NORMAL_CHOLESKY; and I had shorter times than with the previous optimizer.

Why does the loop on line 52 of the code (odomEstimationClass) start to decrease to only 2 iterations?

I have removed this and make the loop always do only 2.

Hi!

I have tried to modify the laser processing to be able to process data from the Realsense L515 lidar by adding the following lines in laserProcessingClass.cpp (in the featureExtraction() function):

else if (N_SCANS == 480)

{

scanID = int((angle-45)*480/55); // Assuming angles span from 45 to 100 degrees vertically

if (angle > 100 || angle < 45 || scanID > (N_SCANS - 1) || scanID < 0)

{

continue;

}

}

I assumed that by recording depth with 640x480 resolution I would get 480 scan lines. The L515 has a vertical FOV of 55, but Intel does not say anything about "absolute angular limits" such as what we have for velodyne lidars, so it is hard to tell exactly how I should sort it into scanIDs, but I did some print debugging and these limits I chose did not seem to exclude any measurements.

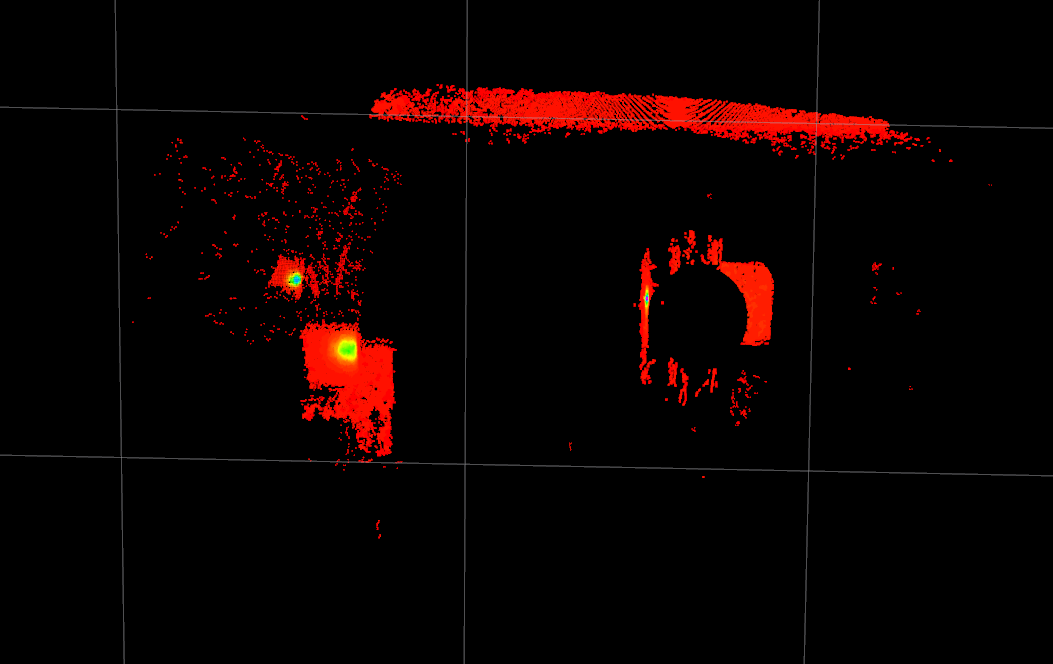

My main issue is that the filtered point clouds have circular "holes" in the middle of the FOV, see the attached screenshots. This Lidar is not recording in sweeps of 360 degrees, so this might be one of the reasons we are getting bad results. I could not understand from the code if/where assumptions about this are made.

I have been running the launch file with these parameters:

<param name="/use_sim_time" value="true" />

<param name="scan_period" value="1.0/30.0" />

<param name="vertical_angle" type="double" value="55.0" />

<param name="max_dis" type="double" value="9.0" />

<param name="min_dis" type="double" value="0.25" />

<param name="map_resolution" type="double" value="0.02" />

Again, I do not really know what to set the vertical angle to, but we have been streaming with 30 fps and the Lidar has a range from 0.25 to 9 meters. The entire rosbag I ran it with is around 8 GB and contains images as well as imu measurements, but I sent you an 18 second sample through WeTransfer. Let me know if I can provide you with any additional information.

Hello, thank you very much for providing such great work for the community. I learned a lot from your code.

However, when I tried to evaluate the sequence 00 in the kitti dataset, I can not achieve the same relative translational error. I got 1.12% instead of the reported 0.51% on the README.md page. I save the lidar odometry result and convert it to kitti gt format with the following code:

// read calib

Eigen::Matrix4d Tr;

Eigen::Matrix4d Tr_inv(Eigen::Matrix4d::Identity());

readCalib(calib_file, 0, Tr);

Tr_inv = Tr.inverse();

std::vector<Eigen::Matrix4d, Eigen::aligned_allocator<Eigen::Matrix4d>> lidar_poses;

readTraj(lidar_est_file, lidar_poses);

// transform the lidar traj to cam

std::vector<Eigen::Matrix4f, Eigen::aligned_allocator<Eigen::Matrix4f>> cam_poses;

auto first = Tr * lidar_poses.front().inverse();

for (auto& lidar_pose : lidar_poses) {

auto cam_pose = first * lidar_pose * Tr_inv;

cam_poses.push_back(cam_pose.cast<float>());

}

saveTraj(camera_seq_est_dir + seq + ".txt", cam_poses);Is there anything wrong I did or do I need to tune some parameters in the system? If so, could you share how you tune the system? Thank you in advance!

Hi,does this prompt affect floam execution?

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

I want to do pose graph optimization on floam, but when execute isam->update(), the OdomestimationNode is stucked and terminal has nothing prompt. Can you help me figure out what the problem is ? Thank you.

Thank you for your great work!

But I wonder that, What changes have you made to make your results on KITTI rank is 16th with translation error only 0.73%. Because the result which I get from your open-source code is much worse than 0.73%, which is about 2%.

What parameters should I change to get your KITTI results?

Best wishes!

Sincerely~

In the status of the map topic I get the following error:

Failed to transform from frame [/map] to frame [base_link]

This means that I a unable to see the map point cloud in Rviz. I am however able to see the raw data.

I am running on noetic on Ubuntu 20.04 LTS and have disabled the hector trajectory server (no support for noetic just yet). Here is the summary I get at the start of floam_mapping.launch

SUMMARY

========

PARAMETERS

* /map_resolution: 0.4

* /max_dis: 90.0

* /min_dis: 3.0

* /rosdistro: noetic

* /rosversion: 1.15.8

* /scan_line: 64

* /scan_period: 0.1

* /use_sim_time: True

* /vertical_angle: 2.0

NODES

/

floam_laser_mapping_node (floam/floam_laser_mapping_node)

floam_laser_processing_node (floam/floam_laser_processing_node)

floam_odom_estimation_node (floam/floam_odom_estimation_node)

rosbag_play (rosbag/play)

rviz (rviz/rviz)

word2map_tf (tf/static_transform_publisher)

I have play the VLP-16 lidar's rosbag file, At begin time used in ms is about 50ms, but as the bagfile is played, the time used can increased to 150ms. And the program used about 100% cpu, memory consumption is also increasing. When the rosbag file is played out, the odom estimation node is also running.

Hi,

Can you tell me that which line of code I needed to use this package for the ouster os1 64? I need to use this package with a rosbag recorded with point cloud and image topic. I need to use it for the automatic calibration package.i want to get dense point cloud in my rosbag so is the first setup is that to setup my sensor again and launch driver using floam launch file and then record again or I can just use rosbag file for this purpose?

Hi! Is floam able to work with tilted angle lidar sensor? What parameters needed to be changed?

Hi

I am testing FLOAM with the ouster 64 Lidar ROS dataset available from their website.

I have a 32 GB RAM, running the ROS melodic. The FLOAM is quickly filling up all the RAM within minutes of running the node.

Can you suggest something to solve this?

Thanks

The code of the iros2021 paper.

and when ?

thanks.

Hi. For the first, thank you for sharing ur excellent work to us.

By the way, I'm confused with some implementation.

As far as I know, LOAM algorithms uses 2 optimizations - 1 for lidar odometry, the other for lidar mapping.

but as I look through ur code, I see just 1 optimization in

'odomEstimationClass.cpp in OdomEstimationClass::updatePointsToMap' function.

and from the name of kdtree - kdtreeSurfMap, I guess it is for lidar-mapping algorithm. Where is the other lidar-odometry algorithm gone?

Am I missing something?

Another question :

in function 'updatePointsToMap' in odomEstimationClass.cpp, there is a variable odom_prediction.

Eigen::Isometry3d odom_prediction = odom * (last_odom.inverse() * odom);

What does this mean? is it predicting the next odometry by previous displacement?

is it in the original paper?

Thank you.

Hello, I was testing this package on my own rosbag files. I wanted to know 3 things:

It will help a lot if you response. Thanks in advance.

Hi, I am confused about the jacobian calculation in edge and surface cost function, is there any related paper or documents explaining that? Thanks!

CMake Error at /opt/ros/melodic/share/catkin/cmake/catkinConfig.cmake:83 (find_package):

Could not find a package configuration file provided by "grid_map_octomap"

with any of the following names:

grid_map_octomapConfig.cmake

grid_map_octomap-config.cmake

Add the installation prefix of "grid_map_octomap" to CMAKE_PREFIX_PATH or

set "grid_map_octomap_DIR" to a directory containing one of the above

files. If "grid_map_octomap" provides a separate development package or

SDK, be sure it has been installed.

first off - amazing work!

I wanted to ask before I got too far down the path - I've done a pretty major rewrite of the code over on my forked repository and wanted to see if you would accept a PR from me to bring these changes back into this original repo?

if not, then I'll probably just go ahead and release the floam package myself to ROS noetic since I think it could be really useful for other people.

let me know what you think! and looking forward to seeing if we can combine our efforts!

Hi!

Congratulations for your great work! I think you really make the loam algorithm easy to understand. However, I just tested this algorithm on my Velodyne 16 dataset. The result is not promising. Since your algorithm needs undistorted point clouds, I just preprocess my dataset and feed the undistorted point cloud. Even though the dataset is undistorted, the output is still not good. I think it must be something wrong with the config. Would you mind adding new launch files for vlp 16? Thank you very much!

Compared with Aloam, would you mind summarizing why your method is so fast? Which one do you think is most helpful for improve real-time performance? Thank you very much!

Dear author, I read your paper about floam . But, I can't find the linear interpolation. please why?

If I use 2D LiDAR, can I use this framework?

Hello Han,

Thanks for your great work and sharing with the community.

As is mentioned by you, the performance optimization is one of the highlights from FLOAM. Is there a configurable or easy way by tuning the parameters in order to improve accuracy if we don't expect it to be real-time (eg. change num of features, resolution, num of iterations in optimization, etc)?

Looking forward to your reply!

My best

Calvin

Great work! Would it be possible to provide the final pointcloud as a download? Maybe as one or multiple ply files?

Hi,when I run tthe package,it shows "*** Error in `/floam_odom_estimation_node': double free or corruption (out): 0x00007ffa102b4fe0 ***"

Do you know why?

thanks!

Hi,

I just installed floam and tried it with your bag (seq 05), however, I got same error like issue 5, "not enough points in map to associate, map error[ INFO]". Do you know how to solve this problem?

Hi!

Thanks for your great work. Making it easier to understand LOAM, I have learned a lot from this work. The project performed well on the KITTI dataset, but poorly on the data I collected indoor. There was a drift at the corner of the room.

Are there any paremeters or settings I could change to reduce the drift?

Looking forward to your reply!

My best

Afei

Hi!

Thanks for sharing the great work! However, there are some problems in the repository, the definitions of feature extraction in 'laserProcessingClass.h' are different from the realizations of feature extraction in 'laserProcessingClass.cpp'

Does the ground_truth you show refer to the real location of the vehicle? How did you get it?

FLOAM was working fine with the KITTI dataset for me. However, when I tested FLOAM with some of my VLP16 data (the data were first collected in pcap format and then converted to bag format) I received the following warnings:

[ WARN] [1615661815.747191914, 1615659482.642718329]: No transform between frames world and base_link available after 1615659482.642718 seconds of waiting. This warning only prints once.

[ WARN] [1615661815.751760228, 1615659482.647800395]: No transform between frames world and velodyne available after 1615659482.647800 seconds of waiting. This warning only prints once.

Nothing was showing in RViz. I have no idea how to fix this so any help is greatly appreciated!

@wh200720041 Just a reminder you haven't git add octoMappingNode.cpp and octoMappingClass.cpp after the last commit

I am a student studying based on the code you have suffered.

Thank you for releasing the code.

I have a question.

How can you bring the color of the point-like your kitti demo image?

Thank you for checking in advance.

Hi!

Just installed floam after trying out LOAM and went to try it out with the rosbag examples. After around 20 seconds, I get the error message:

not enough points in map to associate, map error

I tried changing the source code to skip the if condition that prompted this error message when false, and then got this error, which could explain why tracking was lost:

[pcl::KdTreeFLANN::setInputCloud] Cannot create a KDTree with an empty input cloud!

I also tried installing different versions of PCL (1.8.0, 1.9.1, 1.11.0) to see if this could be the issue, but it did not change the error.

Do you know what could be causing this? I'll attach some screenshots too of the results when running, both with and without mapping. Let me know if I can send any other information that could be of help.

floam/src/lidarOptimization.cpp

Line 83 in 4ba1492

Thanks for your great work!

But I have a question why your plus implement is different from the plus implement of the poses in VINS.

As far as I know, your pose is 7 dim global pose(rotation(x,y,z,w),trans(x,y,z)) , and VINS's pose is the same(trans(x,y,z),rotation(x,y,z,w)), and as a consequence, they should be equal to each other, but in fact, they are not.

I don't know the reason for this, can you tell me the reason for this?

Sincerely!

Thank you!

thanks for openning your code!!

when i learn your paper, i see there is a weight function of smoothness, but i can not find in code.

can you help me? thank you very much!

After I use kitti_to_rosbag to convert the 2011_09_26_0087 data set to bag format, when running this data set, the image cannot be displayed, as shown in the following picture.

But when running the data set you provided, the above problems did not occur.Just like this picture.

When using the rosbag info command to view the bag information, I found that the data set you provided has the topic of cam, but I did not convert it. I don’t know if there was a problem during the conversion process, but the conversion process went smoothly without errors. This makes me very puzzled. If you know how to solve this problem, I hope you can let me know.

I have one more question.

When the last three lines appear, the image in rviz stops. Is this an error or the end of the run?

A declarative, efficient, and flexible JavaScript library for building user interfaces.

🖖 Vue.js is a progressive, incrementally-adoptable JavaScript framework for building UI on the web.

TypeScript is a superset of JavaScript that compiles to clean JavaScript output.

An Open Source Machine Learning Framework for Everyone

The Web framework for perfectionists with deadlines.

A PHP framework for web artisans

Bring data to life with SVG, Canvas and HTML. 📊📈🎉

JavaScript (JS) is a lightweight interpreted programming language with first-class functions.

Some thing interesting about web. New door for the world.

A server is a program made to process requests and deliver data to clients.

Machine learning is a way of modeling and interpreting data that allows a piece of software to respond intelligently.

Some thing interesting about visualization, use data art

Some thing interesting about game, make everyone happy.

We are working to build community through open source technology. NB: members must have two-factor auth.

Open source projects and samples from Microsoft.

Google ❤️ Open Source for everyone.

Alibaba Open Source for everyone

Data-Driven Documents codes.

China tencent open source team.