tixiaoshan / lio-sam Goto Github PK

View Code? Open in Web Editor NEWLIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping

License: BSD 3-Clause "New" or "Revised" License

LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping

License: BSD 3-Clause "New" or "Revised" License

In the paper you are testing it in Amsterdam, but it doesn't seem to have been made public.

Are there any plans to release the Amsterdam dataset?

Hi, I edit params.yaml and change savePCD: true.

But it doesn't work .

the code:

ros::Rate rate(0.2);

while (ros::ok()){

rate.sleep();

publishGlobalMap();

}

if (savePCD == false)

return;

when ros::ok() wiil shun down?

Thanks!

Hi, @TixiaoShan, thanks for your excellent work !

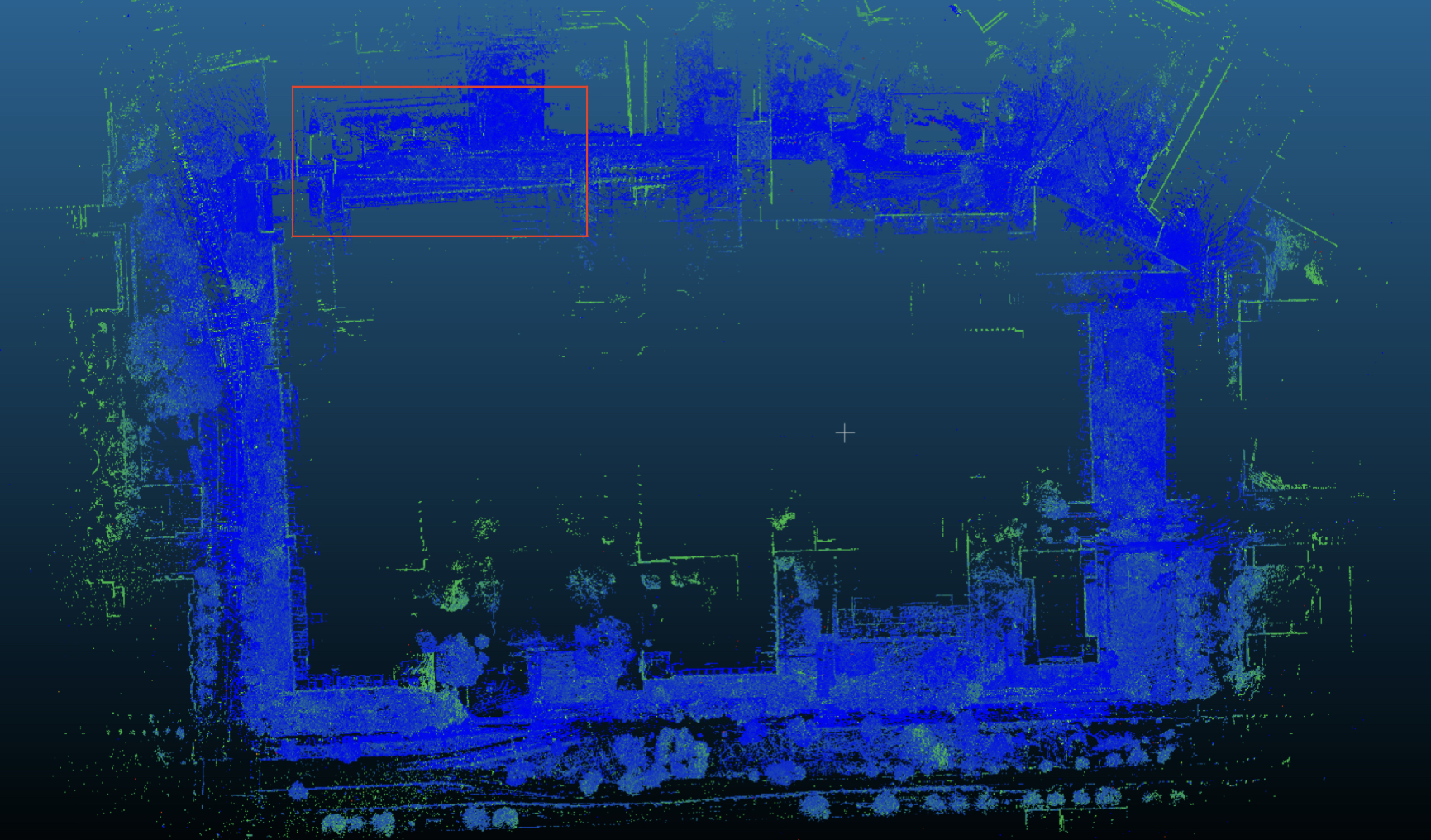

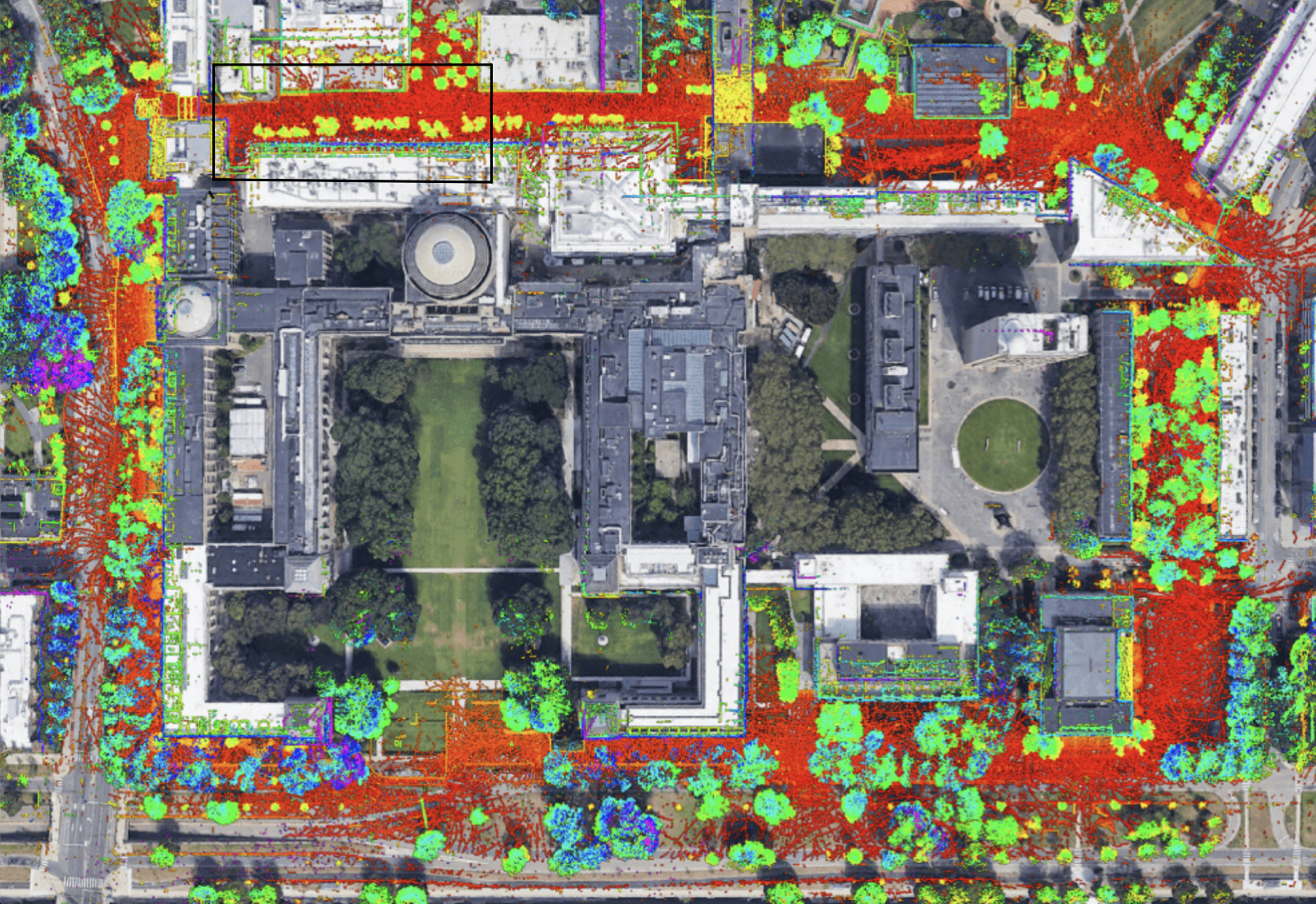

There are some questions for Campus dataset (large) dataset. I have changed the imuTopic to imu_correct and extrinsicRot, extrinsicRPY to Identity matrix in config/params.yaml.

And the rosbag plays at normal speed:rosbag play big_loop.bag

But the final result is not as expected. There is a big shift in the global map.

Here is the result in my computer.

And this is the demo result in LIO-SAM Paper.

The red and black block is the different area.

The detail is shown as:

How can I get the correct result?

Thanks very much!

Thanks for your great job. It's really awesome.

I have googled that 3DM-GX5-25 costs about $1600, which is a high performance IMU. So, I'm curious about the importance of the inertial measurement accuracy in your algorithm, will a consumer grade IMU do the same job ?

Hi @TixiaoShan, thanks for your excellent work !

when reading about extrinsicRPY in doc and imuConverter() function, i don't understand this param's meaning.

Imu's orientation is q_b0_bk, which b0 is the imu's world frame, b0 imu frame in its world frame in time stamp k.

extrinsicRot is q_l2b, different from extrinsicRPY. What's the meaning of q_b0_bk * extQRPY?

It isn't q_b0_lk = q_b0_bk * q_l2b.traspose(), nor q_l0_lk = q_l2b * q_b0_bk * q_l2b.traspose(), right?

Thank your for your great job! Since LIO-SAM and LIO-mapping both use lidar and imu data to run slam. I tested your code with dataset(slow2.bag) provided by LIO-mapping and tested LIO-mapping with your dataset(casual_walk.bag). Though I modified extrinsic rotation and translation, both of two tests failed(generating meaningless map). What's the problem? Is it because your imu data is used in a different way?

Hi, Tixiao.

From the picture about lidar and imu coordinates from readme, it is shown that the translation bewtween the origin of lidar and imu is a few centimeters. My question is why you set [0,0,0] for the parameter extrinsicTrans.

Second question is about the formula which transform imu pose to lidar pose, where in imuPreintegration.cpp line 74,75:

gtsam::Pose3 lidar2Imu = gtsam::Pose3(gtsam::Rot3(1, 0, 0, 0), gtsam::Point3(extTrans.x(), extTrans.y(), extTrans.z()));; In your code, you firstly use imuConverter() to align the axis of the two coordinates, and then use lidar2Imu or imu2Lidar to get that. I use this formula to transform imupose to lidarpose, where the rotation matrix of T_imu_lidar is identity matrix.

T_lidar0_lidarn = T_lidar_imu * T_imu0_imun * T_imu_lidar

And this is different from the imu2Lidar in your code. Please give me some advice! Thank you.

Hi,@TixiaoShan,Thank you for your great work! I don't know why you have to use a 9-axis IMU,and the magnetometer in the IMU is not used in your code,Can you tell me the reason for using a 9-axis IMU instead of a 6-axis IMU?

Thank you very much!

Hi,

I want to use the gps provided by your data sets as the ground truth for evaluation, but its coordinate system and frequency are different from the system output, could you tell me how do you solve this? Thank you very much!

Currently class definitions, ROS node instantiation and execution are embedded in the same source files.

This issue proposes decoupling both class definition from node instantiation.

I propose a convention where we could name classes with CamelCase.h / cpp, and nodes in low case, with the suffix node at the end (e.g. camel_case_node.cpp)

I have noticed that there are so many surface points in the extracted features. Is that a bug ?

Currently the package has a fixed build_type flag:

set(CMAKE_BUILD_TYPE "Release")

set(CMAKE_CXX_FLAGS "-std=c++11")

set(CMAKE_CXX_FLAGS_RELEASE "-O3 -Wall -g -pthread")

This is not ideal. Release with -O3 introduces lots of optimizations, which is great for real-time operation. But it doesn't allow external users to build the package with other flags (for example, 'RelWithDebInfo', which combines debug symbols with release optimizations).

I propose removing the release flag, and rather document in the wiki that for optimal performance the package should be build in release mode.

Please let me know what you think about this proposal :)

Best,

Yoshua

Thank you for your work again! When I use the dataset in https://github.com/TixiaoShan/Stevens-VLP16-Dataset or my own dataset, the map will be in a chaotic state shoortly after the beginning. Just as the picture shows from the bag(2018-0518-14-49-12_0.bag)

I have checked the output of odometry and found that the data is wrong. And for my own data, I have known the relative position between imu and lidar, but after modifying the extrinsicRot and extrinsicRPY following the introduction in Readme, I still can not make LIO-SAM run properly. How does the following sentence mean ? "The transformation of attitude readings is slightly different. We rotate the attitude measurements by -90 degrees around "lidar-z" axis and get the corresponding roll, pitch, and yaw readings in the lidar frame."

Thanks for your great job. It's so amazing

I use the original parameter in your params.yaml, and run the park.bag file. The rviz always show me some error on base_link. AS following the figure1. The ros system shows me error message as following figure2.

Do you know in which steps I have a problem?

Bless you have the good day.

Hi,

Thanks again for your great work.

Can you explain how did you obtain extrinsic parameters between Lidar and IMU?

I need to obtain extrisics between Lidar and IMU for a ground vehicle such as car. Lidar is placed at the top of the car and IMU is placed at the luggage of the car. It is not possible to move the car arround all three axes and along all three axes (the motion is approximately planar). So, hand-eye calibration fails for the height between lidar and IMU. Can you recommend any tool or code that provides extrinsic calibration between IMU and Lidar ?

Thanks in advance.

Hi Tixiao,

By means of this issue I want to suggest supporting clang format and clang tidy to:

Please let me know what you think.

Best,

Yoshua

Hi:

Thanks for sharing you work!

I am confused with the part of Extrinsics in params.yaml, the Extrinsics represent transform from lidar to imu that meas transform measurement in lidar coordinate to imu coordinate. However, in utility.h, function imuConverter,

acc = extRot * acc; and gyr = extRot * gyr;

I think it is used to transform the measurement in imu coordinate to lidar coordinate, is it inconsistent with the definition in yaml file?

Look forward to your reply!

Hello, I'm studying LIO-SAM and I intensively read that code.

Under is about my question.

Through publishClouds in imageProjection.cpp, cloudInfo, i.e., deskew point, is passed to featurExtraction.cpp. At this time, the cloudExtraction function gives the rangeMat.at(i,j) value to cloudInfo.pointRange, which is the range value measured before removing the distortion of the point in the projectPointCloud function. After giving the range value, thisPoint is removed distortion using deskewPoint.

In featureExtraction.cpp, smoothness is calculated using an distorted range value(cloudInfo.pointRange), and I want to know why diff is measured using distorted range instead of undistorted range.

In closed-loop correction, use isam->update() 5 times. Generally, we only use it once. Do you think it will be better to use it multiple times? But is it more time-consuming?

I noticed that a base_link with an offset from the lidar frame leads to erroneous behavior. In that case, the pose of the lidar is not tracked correctly. I first noticed this because the deskewed point cloud and the original point cloud don't match up. While the deskewed cloud remains in place, the original cloud just moved all over the place.

From what I can tell this happens because the transform from the base_link to the lidar is never taken into account. As a result, the pose of the base_link is tracked when the pose of the lidar frame should be tracked instead. Basically: the base_link takes the path that the lidar frame should take.

I hope it became clear what I mean. The error should be easy to reproduce by adding a large offset between the base_link and the lidar frame. It should be immediately apparent when looking at the frames and point clouds in rviz.

I tried LIO-SAM on 2011_09_30_drive_0016 (1.1 GB) Kitti raw data. Using the generated rosbag as input, I got the following warning: Point cloud timestamp not available, deskew function disabled, system will drift significantly!.

I noticed the pointRange is computed in by float pointDistance which is an expensive operation as it requires computing a square root for each point (and there may be over 2 million points per second with Ouster OS1-128).

But the raw data from the lidar already has the range, so it is not necessary to recompute it again.

The PointOS1 defined at https://github.com/ouster-lidar/ouster_example/blob/master/ouster_ros/include/ouster_ros/point_os1.h contains a useful range field which contains the raw range reading.

I suggest making the PointType a template parameter for pointDistance, and do a template specialization for PointOS1 to use the raw range reading directly without recomputing it.

Likewise the horizon angle is redundantly computed by an expensive call to atan2, which is not necessary, because the raw data from the lidar contains this information already in the raw UDP packets, both for Velodyne and Ouster lidars. Unfortunately using pcl::PointXYZI causes this information to be lost, requiring it to be recomputed. For Ouster lidars specifically, the horizon angle does not change between frames, and the driver always outputs all the points (even points without valid range readings), so it is possible to compute the horizon angle from the index of the point. However, the angle is not stored in PointOS1 so it may be more difficult to get the data from raw lidar data. But then atan2 is much slower than sqrt so it may be worth it.

atan2 typically costs 100 cycles, so high resolution lidars producing 2.6 million points per second (e.g. Ouster OS1-128 in 2048 mode) will be starting to be a significant performance penalty.

Could you describe how did you sync the REACH M GPS mentioned in your Paper with the Ouster Unit?

shouldn't the covariance of the odometry growing? I have noticed that the covariance of the odometry in the code doesn't growing, just fluctating.

This is somehow related to #22, which is closed now. Therefore asking here.

I tried the package on Campus dataset(small).

When not using GPS, the package performs very well.

Some results:

On comparing the GPS odometry with the optimized path, both look quite similar. The initial rotation seems to be the only difference between the two.

But when using GPS, there are some serious artifacts in the output PCD.

Some results:

Now I have the following questions:

Dear Tixiao,

This work is really awesome. Thank you for sharing it with the community.

By means of this issue I would like to suggest introducing headers for each class, and adding doxygen strings to the methods.

Please let me know what you think. I would be happy to submit pull requests for one module at a time, if possible with no changes to the underlying functionality.

Best,

Yoshua

Currently, the cmake file specifies include directories as follows:

include_directories(

include

${catkin_INCLUDE_DIRS}

${PCL_INCLUDE_DIRS}

${OpenCV_INCLUDE_DIRS}

${GTSAM_INCLUDE_DIR}

)However, there is no distinction between internal and external source files (e.g. PCL, OpenCV). I propose that we add the CMake include dirs SYSTEM flag so that the compiler only outputs warnings for internal source files.

Example:

include_directories(

include

SYSTEM

${catkin_INCLUDE_DIRS}

${PCL_INCLUDE_DIRS}

${OpenCV_INCLUDE_DIRS}

${GTSAM_INCLUDE_DIR}

)Hi,

Thank you sharing your work.

Is it possible to evaluate LIO-SAM on KITTI Odometry Dataset? Can you explain how to make it possible ?

Thanks in advance.

Hello!

Thank you for a great job.

I was looking at the LIO-SAM code, and the following commented out section caught my attention.

https://github.com/TixiaoShan/LIO-SAM/blob/master/src/imageProjection.cpp#L450-L459

// If the sensor moves relatively slow, like walking speed, positional deskew seems to have little benefits. Thus code below is commented.

// if (cloudInfo.odomAvailable == false || odomDeskewFlag == false)

// return;

// float ratio = relTime / (timeScanNext - timeScanCur);

// *posXCur = ratio * odomIncreX;

// *posYCur = ratio * odomIncreY;

// *posZCur = ratio * odomIncreZ;

What were the results when sensors are relatively fast and you uncommented above?

Also, is there a dataset that I can try?

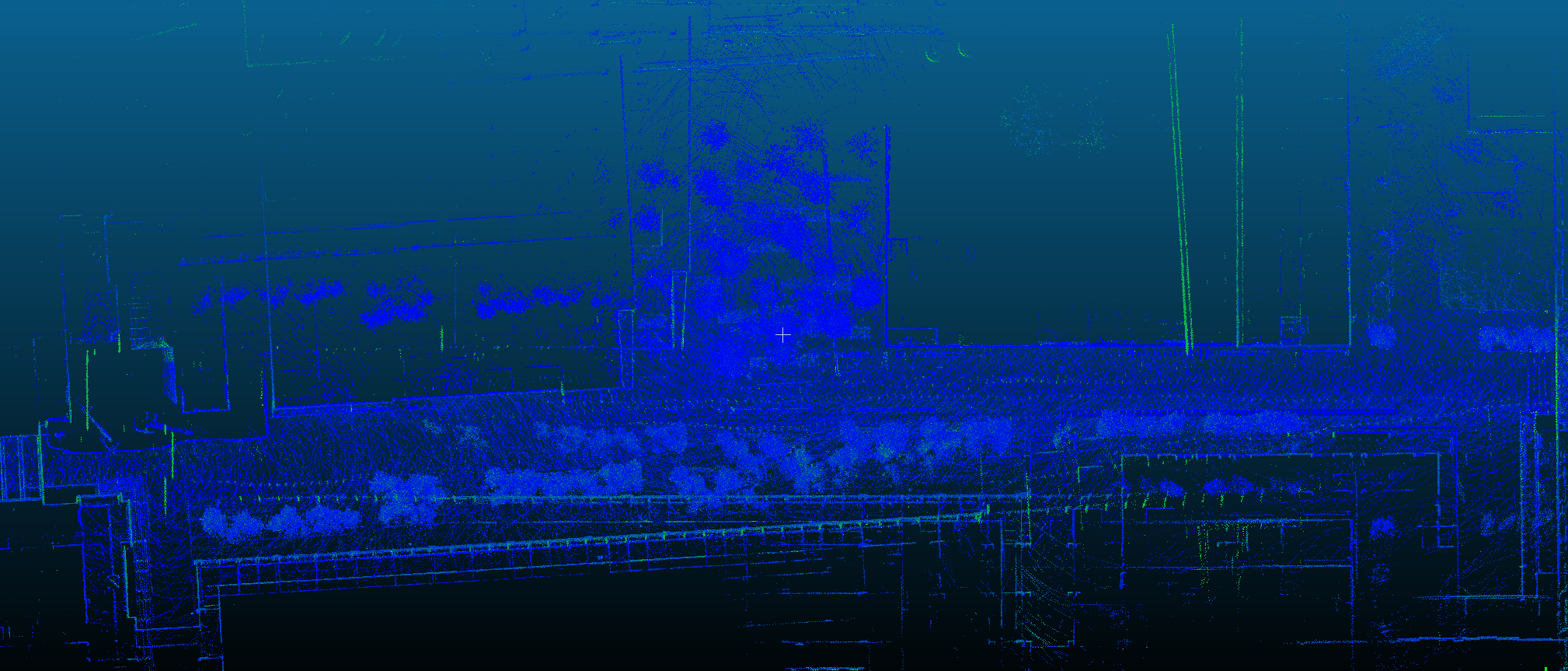

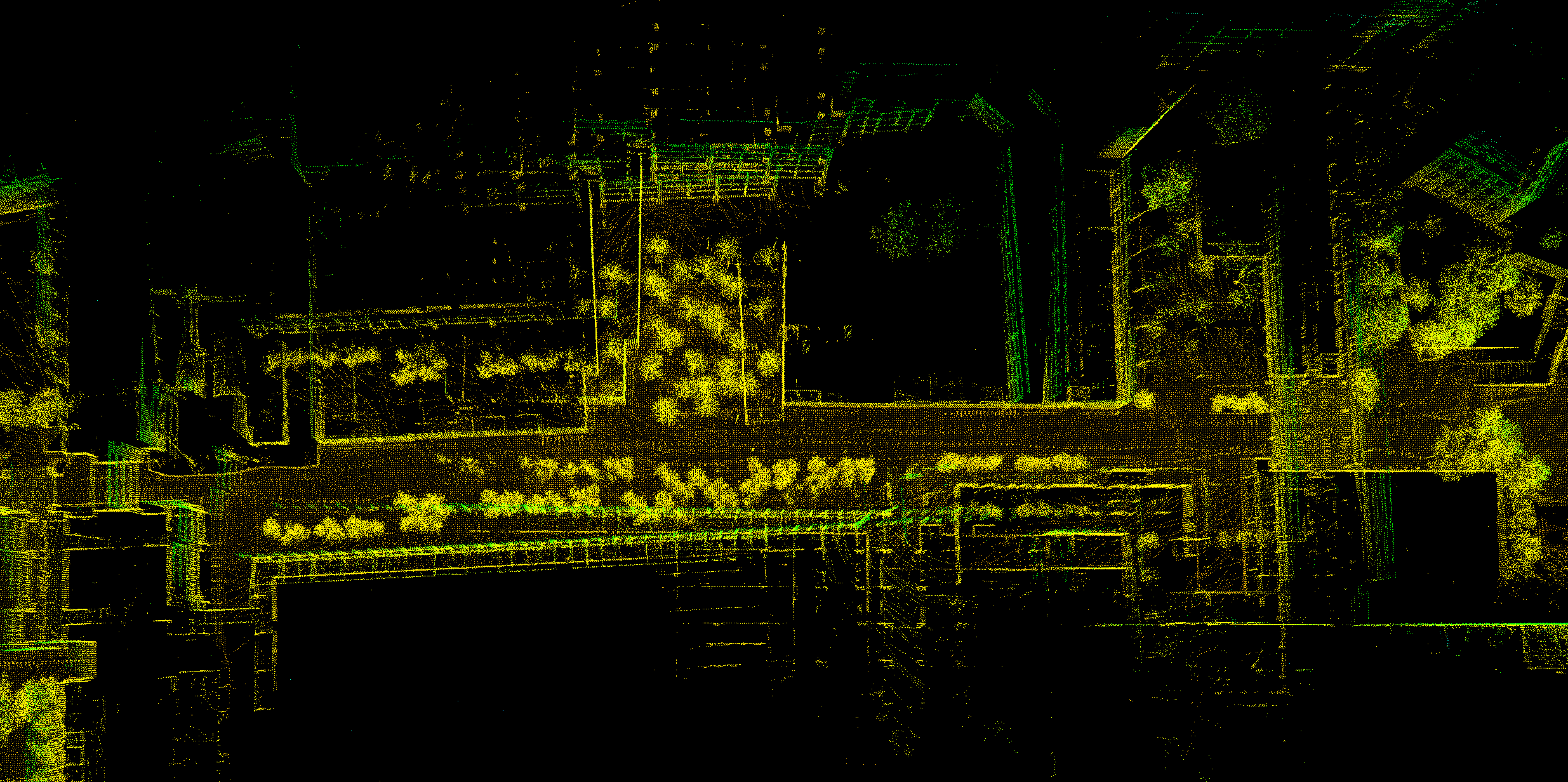

Hi,@TixiaoShan,I run big-loop.bag dataset, the results of loop closure is different,and Sometimes the results was good, sometimes it was bad! (Three times out of ten the results were good) Did I miss some key steps? Thanks!

The results are as follows:

Hi Tixiao,

The results from this package look very impressive.

By means of this issue I would like to ask you the following three questions:

What's the expected accuracy of the LIDAR->IMU transformation?

How do you estimate it? Do you use CAD or an online estimation package?

Can this package improve upon an inaccurate calibration over time?

Thank you in advance.

Best,

Yoshua Nava

I am interested in using this with Ouster lidar sensor.

Could I directly plug the Ouster sensor or

are there any issues to consider?

Thanks for this great framework!

I want to ask a question that is not relative to LIO-SAM. How to project the SLAM map onto Google map?

Thank you in advance!

Shouldn't the covariance of the pose in the Mapping odometry increase constantly. I noticed that the poseCovariance sometimes just fluctuated.

Dear Tixiao,

This work is really awesome. Thank you for sharing it !

I have noticed that LIO-SAM performs great with the rotation dataset, while LIOM is not able to initialize properly using this dataset. So I want to ask what kind of initialization method did LIO-SAM use and what's the difference compared to LIOM.

Looking forward to your answer~

Hello,Dr. Shan. Excellent Work! With respect to imu preintegration, you use gtsam's implementation. The question is that where can we offer the imu rate for calculating discrete-time noise parameter? I didn't find the imu rate setup in your code. And I assume the imu nosie parameter in params.yaml is in continuous-time. Am I right? Thank you very much

I have noticed that in your LOOP_Closure you use the ICP method to calculate the transforamtion and in scan2MapOptimization() functionyou use method of point matching against line or surface. So I want to ask can the ICP in the LOOP_Closure be replaced by the method in scan2MapOptimization() ?

Hi !

I have read through your paper and code, and I find there are two factor graph in the project, so several questions have arisen:

Q1. the result of imu preintegration seems only used to provide a initial estimate for map optimization, even not be used to deskew pointcloud when sensor move slowly, right?

Q2. why not combine imu preintegration factor with the factors in map optimization, just like what VINS-MONO does?(use all the constraints to joint optimization simultaneously)

and I also have the question about Fig 1 in your paper:

Q3. in the figure, we see the robot state node is associated with keyframe, and imu factor is provide a constraint between two successive nodes, however I find in the code, imu factor is added when receiving the pose of lidar frame(rather than keyframe), so does this figure not well match the code, or I don't understand correctly?

Looking forward to your reply !

Thank you !

Hi, @TixiaoShan , thanks for your excellent work !

In imageProjection.cpp/imuDeskewInfo() function, the euler integration is written as:

// get angular velocity

double angular_x, angular_y, angular_z;

imuAngular2rosAngular(&thisImuMsg, &angular_x, &angular_y, &angular_z);

// integrate rotation

double timeDiff = currentImuTime - imuTime[imuPointerCur-1];

imuRotX[imuPointerCur] = imuRotX[imuPointerCur-1] + angular_x * timeDiff;

imuRotY[imuPointerCur] = imuRotY[imuPointerCur-1] + angular_y * timeDiff;

imuRotZ[imuPointerCur] = imuRotZ[imuPointerCur-1] + angular_z * timeDiff;

imuTime[imuPointerCur] = currentImuTime;

The angular_x, angular_y, angular_z is the angular velocity in IMU message.

We can get the angle integration by last angles and current angular velocity and time.

But the euler angle can't be added directly.

Is it better to write using quaternion?

The quaternion for two rotation could be multiply directly, such as:

// get angular velocity

double angular_x, angular_y, angular_z;

imuAngular2rosAngular(&thisImuMsg, &angular_x, &angular_y, &angular_z);

// integrate rotation

double timeDiff = currentImuTime - imuTime[imuPointerCur-1];

// using quaternion

tf::Quaternion last_orientation;

last_orientation = tf::createQuaternionFromRPY(imuRotX[imuPointerCur-1], imuRotY[imuPointerCur-1], imuRotZ[imuPointerCur-1]);

tf::Quaternion delta_orientation;

delta_orientation = tf::createQuaternionFromRPY(angular_x * timeDiff, angular_y * timeDiff, angular_z * timeDiff);

tf::Quaternion curr_orientation = last_orientation * delta_orientation;

double imuRoll, imuPitch, imuYaw;

tf::Matrix3x3(curr_orientation).getRPY(imuRoll, imuPitch, imuYaw);

imuRotX[imuPointerCur] = imuRoll;

imuRotY[imuPointerCur] = imuPitch;

imuRotZ[imuPointerCur] = imuYaw;

imuTime[imuPointerCur] = currentImuTime;

Hi Tixiao,

By means of this issue I propose documenting the software interfaces. Currently the package uses ROS1 as middle-ware, and most interfaces are topics/services. Tf is also used I think, and here it would be interesting to describe the structure of the Tf tree.

I would suggest diving the documentation into:

Please let me know what you think about this proposal. If you're ok with it I'll gradually prepare a PR 👍

Best,

Yoshua

Hi @TixiaoShan,

When get the current laser's initialize pose in map, i debug this code in updateInitialGuess() function in mapOptmization.cpp:

if (cloudInfo.imuAvailable == true)

{

//this line is me added

ROS_WARN("odom = %d, cloud = %d, self = %d ", cloudInfo.odomAvailable, cloudInfo.imuPreintegrationResetId , imuPreintegrationResetId);

Eigen::Affine3f transBack = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit);

Eigen::Affine3f transIncre = lastImuTransformation.inverse() * transBack;

Eigen::Affine3f transTobe = trans2Affine3f(transformTobeMapped);

Eigen::Affine3f transFinal = transTobe * transIncre;

pcl::getTranslationAndEulerAngles(transFinal, transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5],

transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);

lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;

return;

}

The output is:

process[lio_sam_rviz-8]: started with pid [14288]

[ INFO] [1593869689.438855893]: ----> IMU Preintegration Started.

[ INFO] [1593869689.471981403]: ----> Image Projection Started.

[ INFO] [1593869689.498746662]: ----> Feature Extraction Started.

[ INFO] [1593869689.507955480]: ----> Map Optimization Started.

[ WARN] [1593869693.421098667]: odom = 0, cloud = 0, self = 0

[ WARN] [1593869693.626720210]: odom = 0, cloud = 0, self = 0

[ WARN] [1593869869.932765664]: odom = 1, cloud = 0, self = 1

[ WARN] [1593869870.131129145]: odom = 0, cloud = 0, self = 1

[ WARN] [1593869874.965915153]: odom = 1, cloud = 1, self = 2

[ WARN] [1593869875.179717063]: odom = 0, cloud = 1, self = 2

[ WARN] [1593869875.376471333]: odom = 0, cloud = 1, self = 2

[ WARN] [1593869905.031495240]: odom = 1, cloud = 2, self = 3

[ WARN] [1593869905.231531192]: odom = 0, cloud = 2, self = 3

[ WARN] [1593869909.896946401]: odom = 1, cloud = 3, self = 4

[ WARN] [1593869910.136724098]: odom = 1, cloud = 3, self = 4

[ WARN] [1593869910.275975347]: odom = 0, cloud = 3, self = 4

[ WARN] [1593869919.954947659]: odom = 1, cloud = 4, self = 5

[ WARN] [1593869920.157889474]: odom = 0, cloud = 4, self = 5

[ WARN] [1593869925.202043337]: odom = 1, cloud = 5, self = 6

[ WARN] [1593869925.428638865]: odom = 0, cloud = 5, self = 6

[ WARN] [1593869930.039206332]: odom = 1, cloud = 6, self = 7

[ WARN] [1593869930.244444627]: odom = 1, cloud = 6, self = 7

[ WARN] [1593869935.081837640]: odom = 1, cloud = 7, self = 8

[ WARN] [1593869935.286080622]: odom = 0, cloud = 7, self = 8

[ WARN] [1593869944.967022261]: odom = 1, cloud = 8, self = 9

[ WARN] [1593869945.161093601]: odom = 0, cloud = 8, self = 9

[ WARN] [1593869950.010743418]: odom = 1, cloud = 9, self = 10

[ WARN] [1593869950.215691434]: odom = 1, cloud = 9, self = 10

[ WARN] [1593870259.647486738]: odom = 0, cloud = 10, self = 10

[ WARN] [1593870325.011413956]: odom = 1, cloud = 10, self = 11

[ WARN] [1593870325.214021085]: odom = 0, cloud = 10, self = 11

[ WARN] [1593870329.867247998]: odom = 1, cloud = 11, self = 12

[ WARN] [1593870330.052590294]: odom = 0, cloud = 11, self = 12

[ WARN] [1593870330.254257450]: odom = 0, cloud = 11, self = 12

[ WARN] [1593870335.117750271]: odom = 1, cloud = 12, self = 13

[ WARN] [1593870335.295067667]: odom = 0, cloud = 12, self = 13

[ WARN] [1593870335.505050773]: odom = 0, cloud = 12, self = 13

[ WARN] [1593870344.980313780]: odom = 1, cloud = 13, self = 14

[ WARN] [1593870345.181445314]: odom = 0, cloud = 13, self = 14

[ WARN] [1593870345.382959890]: odom = 0, cloud = 13, self = 14

^C[lio_sam_rviz-8] killing on exit

[navsat-7] killing on exit

[ekf_gps-6] killing on exit

[lio_sam_mapOptmization-5] killing on exit

[lio_sam_featureExtraction-4] killing on exit

[lio_sam_imageProjection-3] killing on exit

[lio_sam_imuPreintegration-2] killing on exit

****************************************************

Saving map to pcd files ...

Processing feature cloud 956 of 957 ...****************************************************

Saving map to pcd files completed

[rosout-1] killing on exit

[master] killing on exit

shutting down processing monitor...

... shutting down processing monitor complete

done

The cloudInfo.odomAvailable in each msg is not always true when after initialized, is it normal?

Thanks for your help!

Hi,

if someone has same error as me,

Use under to build gtsam:

cmake -DGTSAM_BUILD_WITH_MARCH_NATIVE=OFF .. -DGTSAM_USE_SYSTEM_EIGEN=ON

It is mainly due to system eigen version is 3.2.9, while we may install other eigen versions and if you comile gtsam not 3.2.9 and when you compile LIO-SAM, you will find this error.

BR

Hello, I want to ask, I run the kitti data set, only x, y, z, intensity and ring, how can I add time to it.

First, I really appreciate your code! It is really good to understand recent lidar-based SLAM.

My question is, What is the reason for two extrinsic rotation? extrinsicRot and extrinsicRPY.

extrinsicRPY seems to be used for orientation, and transformed rotation is used in initialization.

Can we just use extrinsicRot (which used to transform imu info to lidar frame) for all process?

A declarative, efficient, and flexible JavaScript library for building user interfaces.

🖖 Vue.js is a progressive, incrementally-adoptable JavaScript framework for building UI on the web.

TypeScript is a superset of JavaScript that compiles to clean JavaScript output.

An Open Source Machine Learning Framework for Everyone

The Web framework for perfectionists with deadlines.

A PHP framework for web artisans

Bring data to life with SVG, Canvas and HTML. 📊📈🎉

JavaScript (JS) is a lightweight interpreted programming language with first-class functions.

Some thing interesting about web. New door for the world.

A server is a program made to process requests and deliver data to clients.

Machine learning is a way of modeling and interpreting data that allows a piece of software to respond intelligently.

Some thing interesting about visualization, use data art

Some thing interesting about game, make everyone happy.

We are working to build community through open source technology. NB: members must have two-factor auth.

Open source projects and samples from Microsoft.

Google ❤️ Open Source for everyone.

Alibaba Open Source for everyone

Data-Driven Documents codes.

China tencent open source team.