Toolkit for ApolloScape Dataset

Welcome to Apolloscape's GitHub page!

Apollo is a high performance, flexible architecture which accelerates the development, testing, and deployment of Autonomous Vehicles. ApolloScape, part of the Apollo project for autonomous driving, is a research-oriented dataset and toolkit to foster innovations in all aspects of autonomous driving, from perception, navigation, control, to simulation.

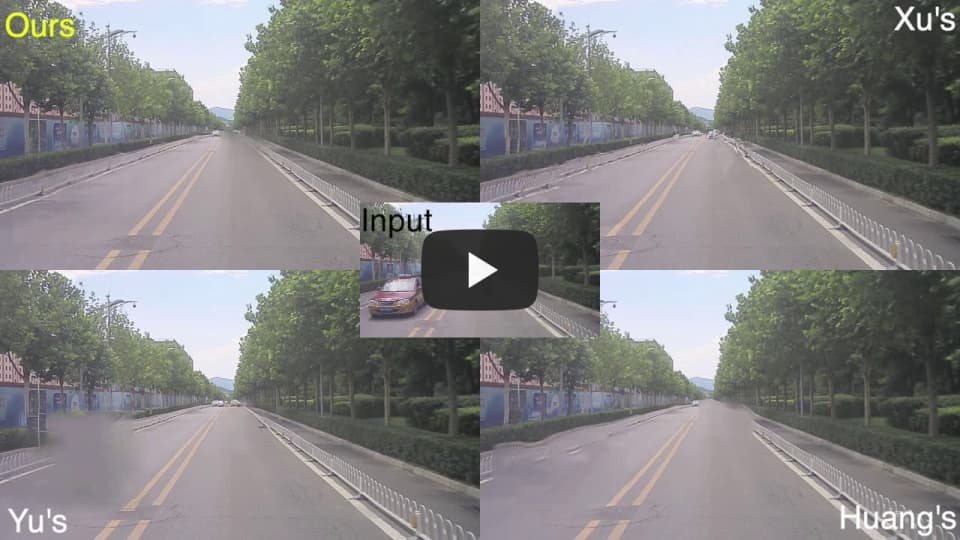

This is a repo of toolkit for ApolloScape Dataset, CVPR 2019 Workshop on Autonomous Driving Challenge and ECCV 2018 challenge. It includes Trajectory Prediction, 3D Lidar Object Detection and Tracking, Scene Parsing, Lane Segmentation, Self Localization, 3D Car Instance, Stereo, and Inpainting Dataset. Some example videos and images are shown below:

Full download links are in each folder.

wget https://ad-apolloscape.cdn.bcebos.com/road01_ins.tar.gz

or

wget https://ad-apolloscape.bj.bcebos.com/road01_ins.tar.gz

wget https://ad-apolloscape.cdn.bcebos.com/trajectory/prediction_train.zip

Run

pip install -r requirements.txt

source source.rcto include necessary packages and current path in to PYTHONPATH to use several util functions.

Please goto each subfolder for detailed information about the data structure, evaluation criterias and some demo code to visualize the dataset.

DVI: Depth Guided Video Inpainting for Autonomous Driving.

Miao Liao, Feixiang Lu, Dingfu Zhou, Sibo Zhang, Wei Li, Ruigang Yang. ECCV 2020. PDF, Webpage, Inpainting Dataset, Video, Presentation

@inproceedings{liao2020dvi,

title={DVI: Depth Guided Video Inpainting for Autonomous Driving},

author={Liao, Miao and Lu, Feixiang and Zhou, Dingfu and Zhang, Sibo and Li, Wei and Yang, Ruigang},

booktitle={European Conference on Computer Vision},

pages={1--17},

year={2020},

organization={Springer}

}

TrafficPredict: Trajectory Prediction for Heterogeneous Traffic-Agents. PDF, Webpage, Trajectory Dataset, 3D Perception Dataset, Video

Yuexin Ma, Xinge Zhu, Sibo Zhang, Ruigang Yang, Wenping Wang, and Dinesh Manocha. AAAI(oral), 2019

@inproceedings{ma2019trafficpredict,

title={Trafficpredict: Trajectory prediction for heterogeneous traffic-agents},

author={Ma, Yuexin and Zhu, Xinge and Zhang, Sibo and Yang, Ruigang and Wang, Wenping and Manocha, Dinesh},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={33},

pages={6120--6127},

year={2019}

}

The apolloscape open dataset for autonomous driving and its application. PDF

Huang, Xinyu and Wang, Peng and Cheng, Xinjing and Zhou, Dingfu and Geng, Qichuan and Yang, Ruigang

@article{wang2019apolloscape,

title={The apolloscape open dataset for autonomous driving and its application},

author={Wang, Peng and Huang, Xinyu and Cheng, Xinjing and Zhou, Dingfu and Geng, Qichuan and Yang, Ruigang},

journal={IEEE transactions on pattern analysis and machine intelligence},

year={2019},

publisher={IEEE}

}

CVPR 2019 WAD Challenge on Trajectory Prediction and 3D Perception. PDF, Website

@article{zhang2020cvpr,

title={CVPR 2019 WAD Challenge on Trajectory Prediction and 3D Perception},

author={Zhang, Sibo and Ma, Yuexin and Yang, Ruigang},

journal={arXiv preprint arXiv:2004.05966},

year={2020}

}