Searching Efficient 3D Architectures with Sparse Point-Voxel Convolution

Haotian Tang*, Zhijian Liu*, Shengyu Zhao, Yujun Lin, Ji Lin, Hanrui Wang, Song Han

ECCV 2020

SPVNAS achieves state-of-the-art performance on the SemanticKITTI leaderboard (as of July 2020) and outperforms MinkowskiNet with a 3x speedup and an 8x reduction in MACs.

[2020-09] We release the baseline training code for SPVCNN and MinkowskiNet.

[2020-08] Please check out our ECCV 2020 tutorial on AutoML for Efficient 3D Deep Learning, which summarizes the algorithm in this codebase.

[2020-07] Our paper is accepted to ECCV 2020.

The code is built with the following libraries:

For easy installation, use conda:

conda create -n torch python=3.7

conda activate torch

conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

conda install numba opencv

pip install torchpack

pip install --upgrade git+https://github.com/mit-han-lab/torchsparse.git

Please follow the instructions from here to download the SemanticKITTI dataset (both the KITTI Odometry dataset and the SemanticKITTI labels) and extract all the files in the sequences folder to /dataset/semantic-kitti. You should see 22 folders 00, 01, …, 21, each with subfolders named velodyne and labels.
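As a quick sanity check of the extracted layout, something like the following could be run before training or evaluation. It is only a sketch: the helper name `missing_sequence_dirs` is hypothetical and not part of this codebase, and it simply reports which of the expected subfolders are absent without deciding whether that is an error.

```python
from pathlib import Path


def missing_sequence_dirs(root, num_sequences=22, subfolders=("velodyne", "labels")):
    """Return the expected '<sequence>/<subfolder>' entries that do not exist under root.

    Hypothetical helper: it only mirrors the layout described in this README
    (folders 00 ... 21, each with 'velodyne' and 'labels').
    """
    root = Path(root)
    missing = []
    for index in range(num_sequences):
        sequence = f"{index:02d}"  # zero-padded folder name, e.g. "08"
        for sub in subfolders:
            if not (root / sequence / sub).is_dir():
                missing.append(f"{sequence}/{sub}")
    return missing


if __name__ == "__main__":
    # Path taken from the instructions above; adjust it if you extracted elsewhere.
    for entry in missing_sequence_dirs("/dataset/semantic-kitti"):
        print("missing:", entry)
```

An empty result means every expected folder was found.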

We share the pretrained models for MinkowskiNets, our manually designed SPVCNN models, and the SPVNAS models found by our 3D-NAS pipeline. All the pretrained models are available in the model zoo. Currently, we release the models trained on sequences 00-07 and 09-10 and evaluated on sequence 08.

| Model | #Params (M) | MACs (G) | mIoU (paper) | mIoU (reprod.) |
|---|---|---|---|---|
| SemanticKITTI_val_MinkUNet@29GMACs | 5.5 | 28.5 | 58.9 | 59.3 |
| SemanticKITTI_val_SPVCNN@30GMACs | 5.5 | 30.0 | 60.7 | 60.8 ± 0.5 |
| SemanticKITTI_val_SPVNAS@20GMACs | 3.3 | 20.0 | 61.5 | - |
| SemanticKITTI_val_SPVNAS@25GMACs | 4.5 | 24.6 | 62.9 | - |
| SemanticKITTI_val_MinkUNet@46GMACs | 8.8 | 45.9 | 60.3 | 60.0 |
| SemanticKITTI_val_SPVCNN@47GMACs | 8.8 | 47.4 | 61.4 | 61.5 ± 0.2 |
| SemanticKITTI_val_SPVNAS@35GMACs | 7.0 | 34.7 | 63.5 | - |
| SemanticKITTI_val_MinkUNet@114GMACs | 21.7 | 113.9 | 61.1 | 61.9 |
| SemanticKITTI_val_SPVCNN@119GMACs | 21.8 | 118.6 | 63.8 | 63.7 ± 0.4 |
| SemanticKITTI_val_SPVNAS@65GMACs | 10.8 | 64.5 | 64.7 | - |
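For readers unfamiliar with the metric, the mIoU columns report mean intersection-over-union across semantic classes, shown as percentages. The sketch below illustrates the standard definition on per-point class indices. It is not the code used by evaluate.py: the function name and the `ignore_label` parameter are assumptions made for illustration, and the real evaluation may treat ignored or absent classes differently.

```python
def mean_iou(predictions, labels, num_classes, ignore_label=None):
    """Mean intersection-over-union over classes, as a fraction in [0, 1].

    Illustrative only: `predictions` and `labels` are equal-length sequences
    of integer class indices, one per point.
    """
    true_pos = [0] * num_classes   # predicted c, ground truth c
    false_pos = [0] * num_classes  # predicted c, ground truth something else
    false_neg = [0] * num_classes  # ground truth c, predicted something else

    for pred, truth in zip(predictions, labels):
        if truth == ignore_label:
            continue  # unlabeled points do not count toward any class
        if pred == truth:
            true_pos[truth] += 1
        else:
            false_pos[pred] += 1
            false_neg[truth] += 1

    ious = []
    for c in range(num_classes):
        union = true_pos[c] + false_pos[c] + false_neg[c]
        if union > 0:  # skip classes absent from both prediction and ground truth
            ious.append(true_pos[c] / union)
    return sum(ious) / len(ious) if ious else 0.0
```

Multiplying the returned value by 100 gives a number on the same scale as the table.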

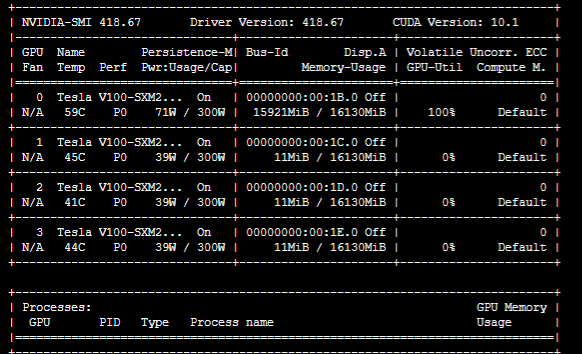

Here, the results are reproduced using 8 NVIDIA RTX 2080Ti GPUs. The run-to-run variation for a single model comes from floating-point atomic additions in our TorchSparse CUDA backend.
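The reason atomic additions lead to such variation is that floating-point addition is not associative, and atomics accumulate values in whatever order the threads happen to arrive. The toy example below reproduces the effect on the CPU with plain Python floats. It is only an illustration of the arithmetic, not of the TorchSparse kernels themselves.

```python
def accumulate(values, order):
    """Sum `values` in the given index order, like threads arriving one by one."""
    total = 0.0
    for index in order:
        total += values[index]
    return total


values = [0.1, 0.2, 0.3]
sum_a = accumulate(values, [0, 1, 2])  # (0.1 + 0.2) + 0.3
sum_b = accumulate(values, [1, 2, 0])  # (0.2 + 0.3) + 0.1

# Same inputs, different order: the results differ in the last bit.
print(sum_a == sum_b)  # False
```

Across millions of accumulations per forward pass, such last-bit differences can add up to small shifts in the final mIoU.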

You can run the following command to test the performance of SPVNAS / SPVCNN / MinkUNet models.

torchpack dist-run -np [num_of_gpus] python evaluate.py configs/semantic_kitti/default.yaml --name [num_of_net]

For example, to test the model SemanticKITTI_val_SPVNAS@65GMACs on one GPU, you may run

torchpack dist-run -np 1 python evaluate.py configs/semantic_kitti/default.yaml --name SemanticKITTI_val_SPVNAS@65GMACs

You can run the following command (on a headless server) to visualize the predictions of SPVNAS / SPVCNN / MinkUNet models:

xvfb-run --server-args="-screen 0 1024x768x24" python visualize.py

If you are running the code on a computer with a monitor, you may also directly run

python visualize.py

The visualizations will be generated in assets/.
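To evaluate several model zoo entries in one go, the evaluation command shown earlier can be assembled programmatically. The helper below is a hypothetical convenience wrapper, not part of this repository. It uses only the arguments that appear in the command above and prints the resulting command lines rather than executing them.

```python
import shlex


def build_eval_command(model_name, num_gpus=1,
                       config="configs/semantic_kitti/default.yaml"):
    """Return the evaluation command as an argument list (hypothetical helper)."""
    return [
        "torchpack", "dist-run", "-np", str(num_gpus),
        "python", "evaluate.py", config,
        "--name", model_name,
    ]


if __name__ == "__main__":
    # A few names copied from the model zoo table.
    model_names = [
        "SemanticKITTI_val_MinkUNet@29GMACs",
        "SemanticKITTI_val_SPVCNN@30GMACs",
        "SemanticKITTI_val_SPVNAS@65GMACs",
    ]
    for name in model_names:
        command = build_eval_command(name)
        # Quote each argument so the printed line can be pasted into a shell.
        print(" ".join(shlex.quote(arg) for arg in command))
```

The argument list can also be passed to `subprocess.run` if you prefer to launch the runs from Python.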

We currently release the training code for manually-designed baseline models (SPVCNN and MinkowskiNets). You may run the following code to train the model from scratch:

torchpack dist-run -np [num_of_gpus] python train.py configs/semantic_kitti/[model name]/[config name].yaml

For example, to train the model SemanticKITTI_val_SPVCNN@30GMACs, you may run

torchpack dist-run -np [num_of_gpus] python train.py configs/semantic_kitti/spvcnn/cr0p5.yaml

To train the model in a non-distributed environment without MPI, i.e. on a single GPU, you may directly call train.py with the --distributed False argument:

python train.py configs/semantic_kitti/spvcnn/cr0p5.yaml --distributed False

The code related to architecture search will be coming soon!

If you use this code for your research, please cite our paper.

@inproceedings{tang2020searching,
  title = {Searching Efficient 3D Architectures with Sparse Point-Voxel Convolution},
  author = {Tang, Haotian* and Liu, Zhijian* and Zhao, Shengyu and Lin, Yujun and Lin, Ji and Wang, Hanrui and Han, Song},
  booktitle = {European Conference on Computer Vision},
  year = {2020}
}