I am trying to build the PyTorch extensions.

I've tried changing the CUDA and PyTorch versions several times, but the extension still fails to build.

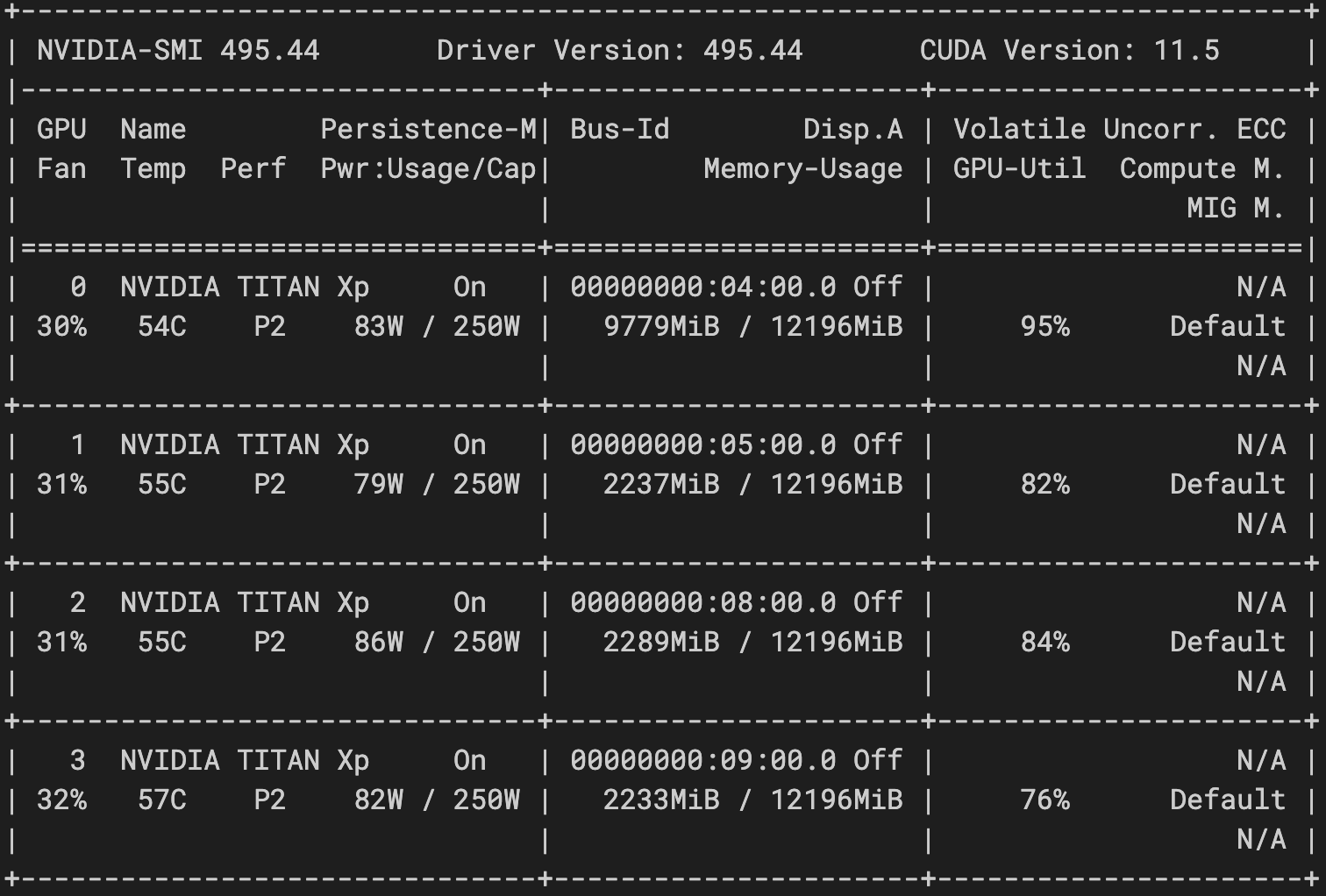

My environment is PyTorch 1.7.0, CUDA 11.0, and GCC 6.3.0.
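For reference, the versions above can be double-checked from inside the conda environment with a small script like the one below. This is only a sketch: the function name `collect_build_env` and the dictionary keys are made up for illustration, and it assumes `nvcc` is on `PATH` (otherwise that entry is reported as `None`).

```python
import shutil
import subprocess


def collect_build_env():
    """Gather version facts that matter when building a CUDA extension.

    Hypothetical helper for illustration; the key names are arbitrary.
    """
    info = {}
    try:
        import torch

        info["torch"] = torch.__version__
        # CUDA version PyTorch itself was built against (None for CPU-only builds).
        info["torch_cuda"] = torch.version.cuda
        if torch.cuda.is_available():
            major, minor = torch.cuda.get_device_capability(0)
            info["gpu_capability"] = f"sm_{major}{minor}"
    except ImportError:
        info["torch"] = None

    # Locate the CUDA compiler that the shell would pick up first.
    nvcc = shutil.which("nvcc")
    info["nvcc_path"] = nvcc
    if nvcc:
        result = subprocess.run([nvcc, "--version"], capture_output=True, text=True)
        lines = result.stdout.strip().splitlines()
        # Keep only the last line of the banner, which normally carries the build/release string.
        info["nvcc_version"] = lines[-1] if lines else None
    return info


for key, value in collect_build_env().items():
    print(f"{key}: {value}")
```

Comparing `torch_cuda`, `nvcc_version`, and `gpu_capability` side by side should show whether the toolkit and the GPU agree with each other.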

Thanks for checking my issue.

The errors are as follows:

(grnet_latest) C:\Users\USER\Desktop\GRNet\extensions\chamfer_dist>python setup.py install --user

running install

C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\install.py:34: SetuptoolsDeprecationWarning: setup.py install is deprecated. Use build and pip and other standards-based tools.

warnings.warn(

C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\easy_install.py:156: EasyInstallDeprecationWarning: easy_install command is deprecated. Use build and pip and other standards-based tools.

warnings.warn(

running bdist_egg

running egg_info

writing chamfer.egg-info\PKG-INFO

writing dependency_links to chamfer.egg-info\dependency_links.txt

writing top-level names to chamfer.egg-info\top_level.txt

reading manifest file 'chamfer.egg-info\SOURCES.txt'

writing manifest file 'chamfer.egg-info\SOURCES.txt'

installing library code to build\bdist.win-amd64\egg

running install_lib

running build_ext

C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\utils\cpp_extension.py:274: UserWarning: Error checking compiler version for cl: [WinError 2] The system cannot find the file specified

warnings.warn('Error checking compiler version for {}: {}'.format(compiler, error))

building 'chamfer' extension

Emitting ninja build file C:\Users\USER\Desktop\GRNet\extensions\chamfer_dist\build\temp.win-amd64-3.8\Release\build.ninja...

Compiling objects...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

[1/1] C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\bin\nvcc -Xcompiler /MD -Xcompiler /wd4819 -Xcompiler /wd4251 -Xcompiler /wd4244 -Xcompiler /wd4267 -Xcompiler /wd4275 -Xcompiler /wd4018 -Xcompiler /wd4190 -Xcompiler /EHsc -Xcudafe --diag_suppress=base_class_has_different_dll_interface -Xcudafe --diag_suppress=field_without_dll_interface -Xcudafe --diag_suppress=dll_interface_conflict_none_assumed -Xcudafe --diag_suppress=dll_interface_conflict_dllexport_assumed -IC:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\include -IC:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\include\torch\csrc\api\include -IC:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\include\TH -IC:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\include\THC "-IC:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\include" -IC:\Users\USER\anaconda3\envs\grnet_latest\include -IC:\Users\USER\anaconda3\envs\grnet_latest\include "-IC:\Program Files (x86)\Microsoft Visual Studio\2019\Professional\VC\Tools\MSVC\14.29.30133\ATLMFC\include" "-IC:\Program Files (x86)\Microsoft Visual Studio\2019\Professional\VC\Tools\MSVC\14.29.30133\include" "-IC:\Program Files (x86)\Windows Kits\NETFXSDK\4.8\include\um" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\ucrt" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\shared" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\um" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\winrt" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\cppwinrt" -c C:\Users\USER\Desktop\GRNet\extensions\chamfer_dist\chamfer.cu -o C:\Users\USER\Desktop\GRNet\extensions\chamfer_dist\build\temp.win-amd64-3.8\Release\chamfer.obj -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr -DTORCH_API_INCLUDE_EXTENSION_H -DTORCH_EXTENSION_NAME=chamfer -D_GLIBCXX_USE_CXX11_ABI=0 
-gencode=arch=compute_86,code=sm_86

FAILED: C:/Users/USER/Desktop/GRNet/extensions/chamfer_dist/build/temp.win-amd64-3.8/Release/chamfer.obj

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\bin\nvcc -Xcompiler /MD -Xcompiler /wd4819 -Xcompiler /wd4251 -Xcompiler /wd4244 -Xcompiler /wd4267 -Xcompiler /wd4275 -Xcompiler /wd4018 -Xcompiler /wd4190 -Xcompiler /EHsc -Xcudafe --diag_suppress=base_class_has_different_dll_interface -Xcudafe --diag_suppress=field_without_dll_interface -Xcudafe --diag_suppress=dll_interface_conflict_none_assumed -Xcudafe --diag_suppress=dll_interface_conflict_dllexport_assumed -IC:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\include -IC:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\include\torch\csrc\api\include -IC:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\include\TH -IC:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\include\THC "-IC:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\include" -IC:\Users\USER\anaconda3\envs\grnet_latest\include -IC:\Users\USER\anaconda3\envs\grnet_latest\include "-IC:\Program Files (x86)\Microsoft Visual Studio\2019\Professional\VC\Tools\MSVC\14.29.30133\ATLMFC\include" "-IC:\Program Files (x86)\Microsoft Visual Studio\2019\Professional\VC\Tools\MSVC\14.29.30133\include" "-IC:\Program Files (x86)\Windows Kits\NETFXSDK\4.8\include\um" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\ucrt" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\shared" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\um" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\winrt" "-IC:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\cppwinrt" -c C:\Users\USER\Desktop\GRNet\extensions\chamfer_dist\chamfer.cu -o C:\Users\USER\Desktop\GRNet\extensions\chamfer_dist\build\temp.win-amd64-3.8\Release\chamfer.obj -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr -DTORCH_API_INCLUDE_EXTENSION_H -DTORCH_EXTENSION_NAME=chamfer -D_GLIBCXX_USE_CXX11_ABI=0 
-gencode=arch=compute_86,code=sm_86

nvcc fatal : Unsupported gpu architecture 'compute_86'

ninja: build stopped: subcommand failed.

Traceback (most recent call last):

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\utils\cpp_extension.py", line 1516, in _run_ninja_build

subprocess.run(

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\subprocess.py", line 516, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status 1.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "setup.py", line 11, in <module>

setup(name='chamfer',

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\__init__.py", line 153, in setup

return distutils.core.setup(**attrs)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\core.py", line 148, in setup

dist.run_commands()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\dist.py", line 966, in run_commands

self.run_command(cmd)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\dist.py", line 985, in run_command

cmd_obj.run()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\install.py", line 74, in run

self.do_egg_install()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\install.py", line 116, in do_egg_install

self.run_command('bdist_egg')

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\dist.py", line 985, in run_command

cmd_obj.run()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\bdist_egg.py", line 164, in run

cmd = self.call_command('install_lib', warn_dir=0)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\bdist_egg.py", line 150, in call_command

self.run_command(cmdname)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\dist.py", line 985, in run_command

cmd_obj.run()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\install_lib.py", line 11, in run

self.build()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\command\install_lib.py", line 107, in build

self.run_command('build_ext')

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\dist.py", line 985, in run_command

cmd_obj.run()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\build_ext.py", line 79, in run

_build_ext.run(self)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\command\build_ext.py", line 340, in run

self.build_extensions()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\utils\cpp_extension.py", line 653, in build_extensions

build_ext.build_extensions(self)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\command\build_ext.py", line 449, in build_extensions

self._build_extensions_serial()

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\command\build_ext.py", line 474, in _build_extensions_serial

self.build_extension(ext)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\setuptools\command\build_ext.py", line 202, in build_extension

_build_ext.build_extension(self, ext)

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\distutils\command\build_ext.py", line 528, in build_extension

objects = self.compiler.compile(sources,

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\utils\cpp_extension.py", line 626, in win_wrap_ninja_compile

_write_ninja_file_and_compile_objects(

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\utils\cpp_extension.py", line 1233, in _write_ninja_file_and_compile_objects

_run_ninja_build(

File "C:\Users\USER\anaconda3\envs\grnet_latest\lib\site-packages\torch\utils\cpp_extension.py", line 1538, in _run_ninja_build

raise RuntimeError(message) from e

RuntimeError: Error compiling objects for extension
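To narrow down which step fails, here is a rough sketch that pulls the requested GPU architecture and the `nvcc` complaint out of a log like the one above. The function name `summarize_nvcc_failure` and the regular expressions are my own assumptions, written against the exact wording of the lines in this log, not against any documented format.

```python
import re

# Patterns modelled on the lines in the log above (assumed, not an official format).
GENCODE_RE = re.compile(r"-gencode=arch=(compute_\d+),code=(sm_\d+)")
FATAL_RE = re.compile(r"nvcc fatal\s*:\s*Unsupported gpu architecture '([^']+)'")


def summarize_nvcc_failure(log_text):
    """Return the architectures nvcc was asked to build and the ones it rejected."""
    requested = sorted({m.group(1) for m in GENCODE_RE.finditer(log_text)})
    rejected = sorted({m.group(1) for m in FATAL_RE.finditer(log_text)})
    return {"requested": requested, "rejected": rejected}


# Shortened excerpt of the log above; the long include list is omitted here.
sample_log = """-DTORCH_EXTENSION_NAME=chamfer -D_GLIBCXX_USE_CXX11_ABI=0
-gencode=arch=compute_86,code=sm_86
nvcc fatal : Unsupported gpu architecture 'compute_86'
ninja: build stopped: subcommand failed.
"""

print(summarize_nvcc_failure(sample_log))
# prints {'requested': ['compute_86'], 'rejected': ['compute_86']}
```

In this log the only architecture requested is `compute_86`, and it is the same one that the `nvcc` from CUDA v11.0 rejects, so the failure happens before any of `chamfer.cu` is actually compiled.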