
yuliangxiu / econ

[CVPR'23, Highlight] ECON: Explicit Clothed humans Optimized via Normal integration

Home Page: https://xiuyuliang.cn/econ

License: Other


Hi,

How can I run this code on a video file as input?
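Since ECON's inference script takes a folder of images through `-in_dir`, one workable approach is to split the video into frames first and point `-in_dir` at that folder. The sketch below assumes `opencv-python` is installed; `frame_filenames` and `extract_frames` are hypothetical helper names, not part of ECON. Each frame is then reconstructed as an independent image, so nothing here adds temporal consistency between frames.

```python
import os
from typing import List


def frame_filenames(num_frames: int, stride: int = 1, prefix: str = "frame") -> List[str]:
    """Return the file names extract_frames() writes for every `stride`-th frame."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    return [f"{prefix}{i:05d}.png" for i in range(0, num_frames, stride)]


def extract_frames(video_path: str, out_dir: str, stride: int = 1) -> int:
    """Write every `stride`-th frame of `video_path` to `out_dir` as PNG.

    Returns the number of frames written. Requires opencv-python.
    """
    import cv2  # imported lazily so frame_filenames() works without OpenCV

    if stride < 1:
        raise ValueError("stride must be >= 1")
    os.makedirs(out_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"cannot open {video_path}")
    index, written = 0, 0
    while True:
        ok, frame = cap.read()  # frame is an 8-bit BGR array
        if not ok:
            break
        if index % stride == 0:
            cv2.imwrite(os.path.join(out_dir, f"frame{index:05d}.png"), frame)
            written += 1
        index += 1
    cap.release()
    return written
```

For example, `extract_frames("input.mp4", "./frames", stride=5)` followed by `python -m apps.infer -cfg ./configs/econ.yaml -in_dir ./frames -out_dir ./results`. A stride above 1 keeps long videos manageable, since every frame goes through the full per-image optimization.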

Hey, your work is awesome!

Due to some requirements, I need to generate a human model whose head has its own texture while the body does not (I mean, one global texture is used for everyone's body). So I've used ECON to generate a body without a head, and generated the head by other means. But there is a problem: how can I splice the head and the body together?

This is a weird question, I know. To be honest, I'm a novice in this area and don't know much about it. Is there any way to achieve this?
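The basic operation behind splicing is merging two triangle meshes into one vertex/face array pair, which the NumPy sketch below shows. It assumes both meshes are already in the same coordinate frame and scale, and it only translates the head so that a hypothetical anchor point (for example, a neck vertex you pick on each mesh) lines up; rotation, scaling, and stitching the seam closed are left out. `concatenate_meshes` and `align_by_anchor` are illustrative names, not ECON functions.

```python
import numpy as np


def concatenate_meshes(verts_a, faces_a, verts_b, faces_b):
    """Merge two triangle meshes into one vertex/face array pair.

    The faces of the second mesh are shifted by the number of vertices in
    the first, so they keep pointing at their own vertices. The parts are
    NOT welded: the seam stays open unless it is closed separately.
    """
    verts_a = np.asarray(verts_a, dtype=np.float64)
    verts_b = np.asarray(verts_b, dtype=np.float64)
    faces_a = np.asarray(faces_a, dtype=np.int64)
    faces_b = np.asarray(faces_b, dtype=np.int64) + len(verts_a)
    return np.vstack([verts_a, verts_b]), np.vstack([faces_a, faces_b])


def align_by_anchor(verts, src_anchor, dst_anchor):
    """Translate `verts` so that the point `src_anchor` lands on `dst_anchor`."""
    offset = np.asarray(dst_anchor, dtype=np.float64) - np.asarray(src_anchor, dtype=np.float64)
    return np.asarray(verts, dtype=np.float64) + offset
```

The merged arrays can be written out with any mesh library, e.g. `trimesh.Trimesh(vertices=v, faces=f).export("merged.obj")`. Because the seam is not welded, a visible gap or overlap at the neck is expected; cutting both meshes at matching boundary loops and bridging them would be the next step.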

(base) H:\ECON-master>python -m apps.infer -cfg ./configs/econ.yaml -in_dir ./examples -out_dir ./results

Traceback (most recent call last):

File "d:\tools\anaconda3\lib\runpy.py", line 197, in _run_module_as_main

return _run_code(code, main_globals, None,

File "d:\tools\anaconda3\lib\runpy.py", line 87, in _run_code

exec(code, run_globals)

File "H:\ECON-master\apps\infer.py", line 32, in <module>

from apps.Normal import Normal

File "H:\ECON-master\apps\Normal.py", line 2, in <module>

from lib.common.train_util import batch_mean

File "H:\ECON-master\lib\common\train_util.py", line 18, in <module>

from ..dataset.mesh_util import *

File "H:\ECON-master\lib\dataset\mesh_util.py", line 162, in <module>

model_path=SMPLX().model_dir,

File "H:\ECON-master\lib\dataset\mesh_util.py", line 92, in __init__

self.smplx_front_flame_vid = self.smplx_flame_vid[np.load(self.front_flame_path)]

IndexError: index 5484 is out of bounds for axis 0 with size 5023

I managed to install ECON on Windows and was getting all the data ready to share, but I have to stop to deal with issues in other projects. So, to have something available, I'm sharing here the notes I took that might help others who have problems installing ECON.

Probably the biggest problem is installing PyTorch3D.

Doing that is actually simple if you follow good directions, like the ones in this link:

https://stackoverflow.com/a/74913303/9473295

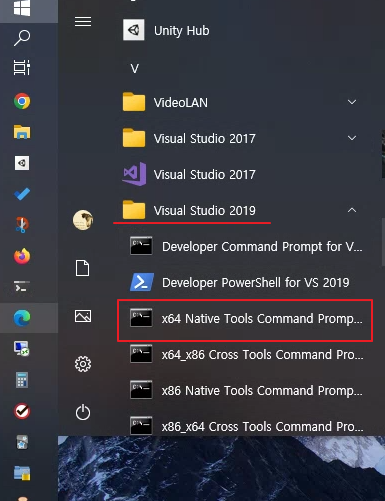

In summary, you need to install Visual Studio Community 2019 with the C++ libraries.

Using conda, create your environment with Python 3.8 (I think I had problems with Python 3.9).

It could be something like this:

conda create -n econ python=3.8

Then activate it:

conda activate econ

Then install these:

conda install pytorch torchvision torchaudio pytorch-cuda=11.6 -c pytorch -c nvidia

and

conda install -c fvcore -c iopath -c conda-forge fvcore iopath

I think you don't need to load the VS native command-line tools,

but if you do, they must be loaded after you activate the econ environment (with the previous packages already installed).

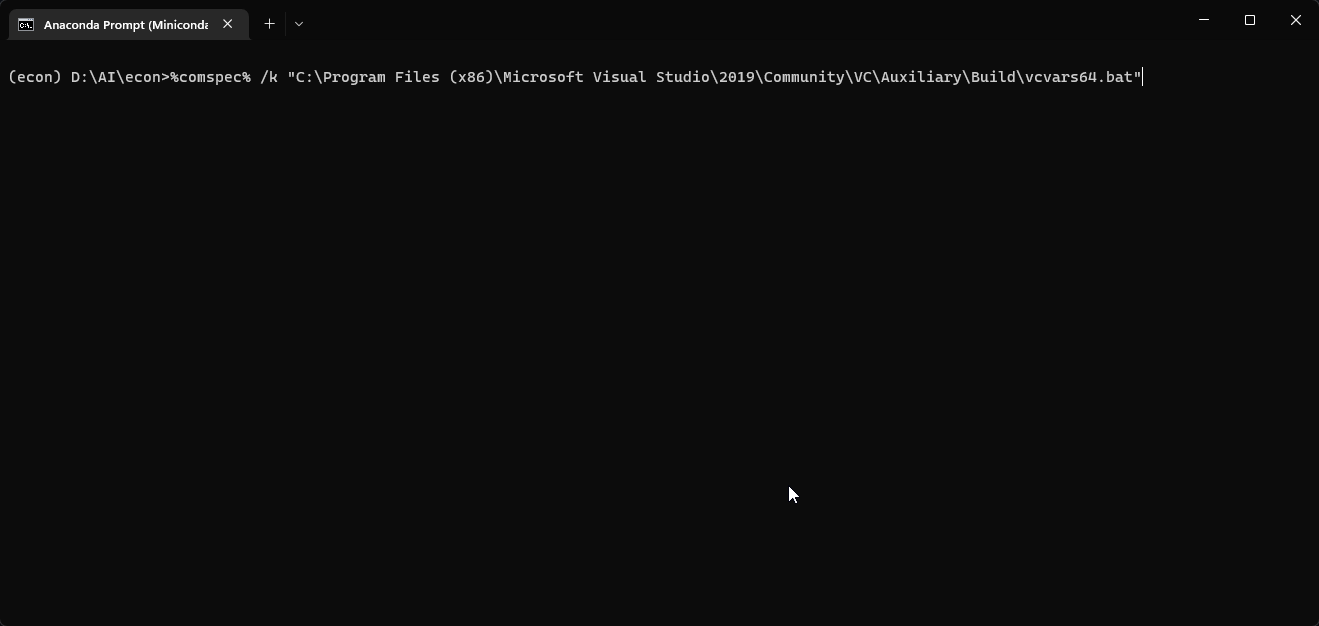

To do that, you can execute this command in a CMD prompt:

%comspec% /k "C:\Program Files (x86)\Microsoft Visual Studio\2019\Community\VC\Auxiliary\Build\vcvars64.bat"

Before you press Enter, it will look something like this:

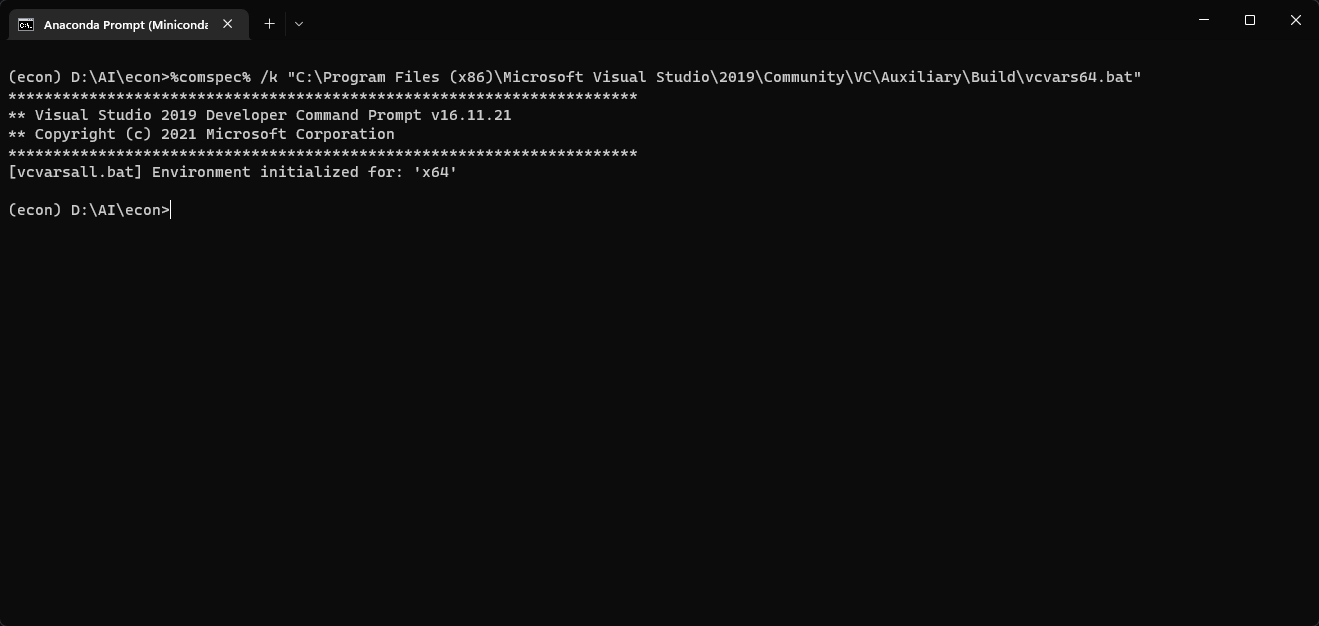

After pressing Enter, it might look like this:

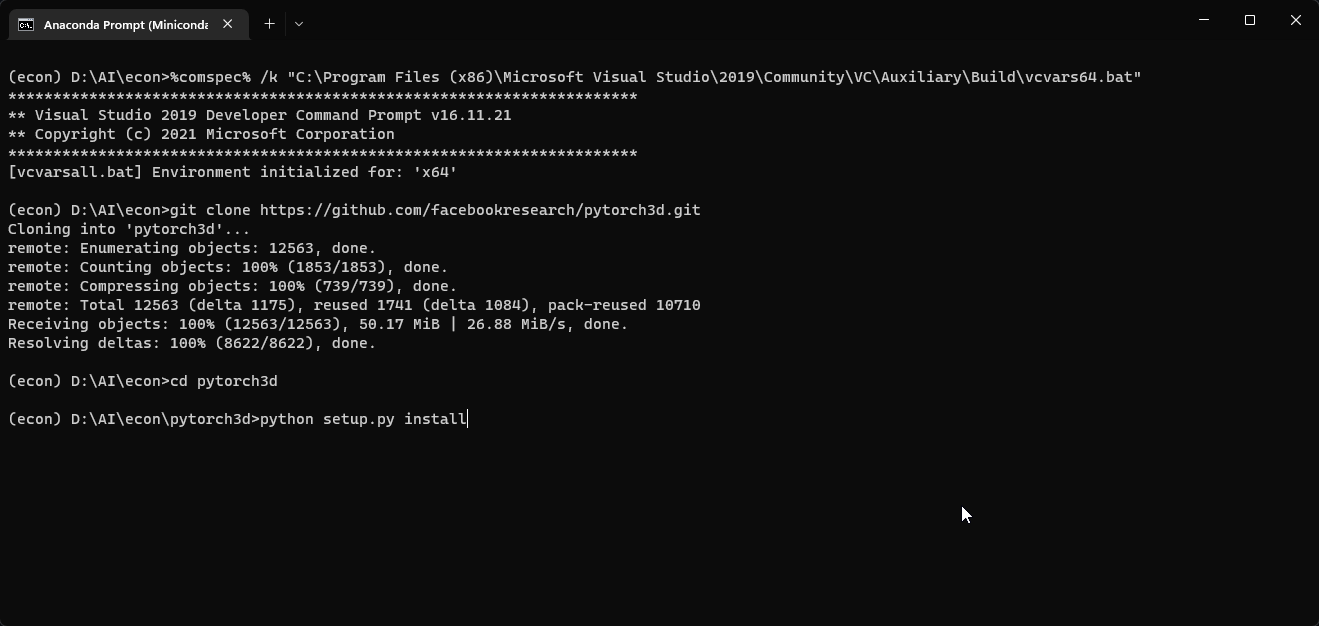

Then get the pytorch3d source code using:

git clone https://github.com/facebookresearch/pytorch3d.git

Enter the pytorch3d folder:

cd pytorch3d

python setup.py install

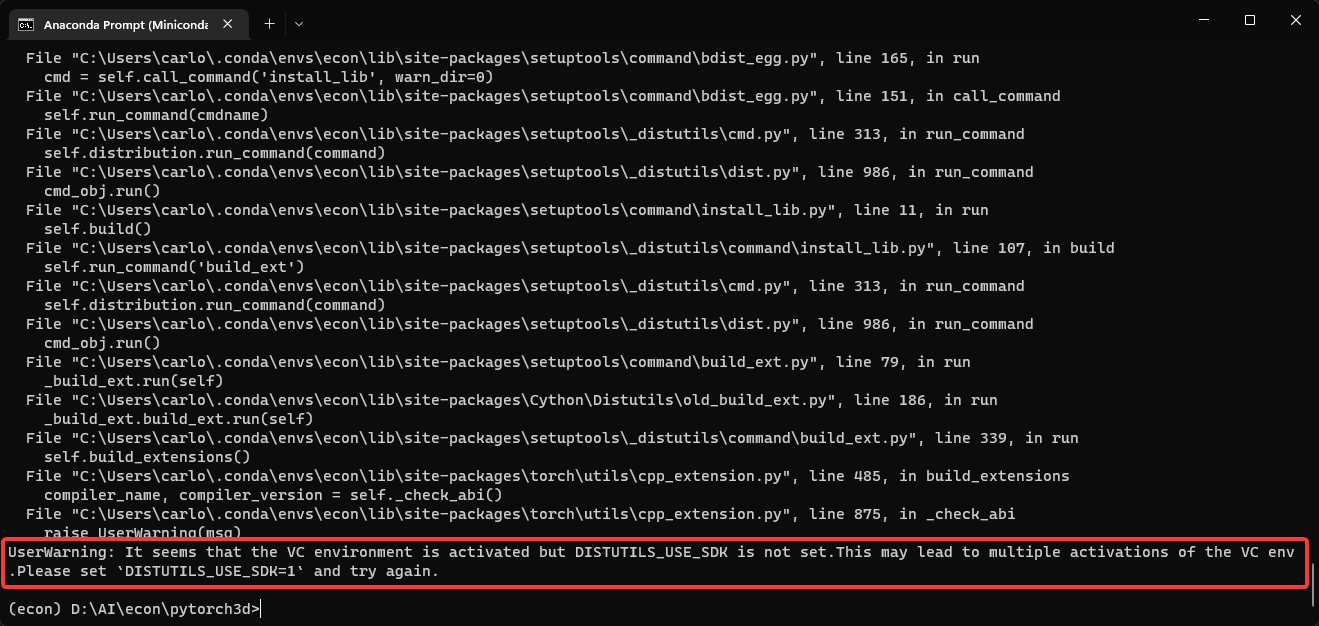

You will probably receive this error. To fix it, just enter this command:

set DISTUTILS_USE_SDK=1

and run again:

python setup.py install

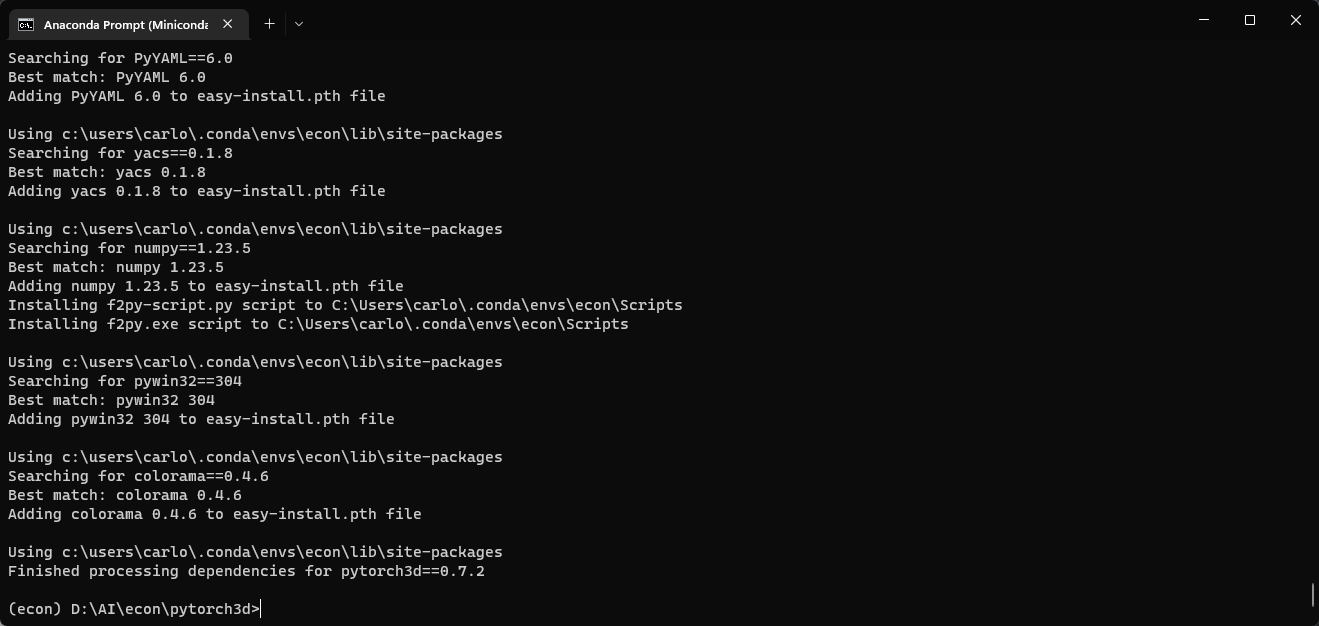

It will take A LOT OF TIME to finish, and it will show something like this:

After it has finished, you can install the rest of python packages.

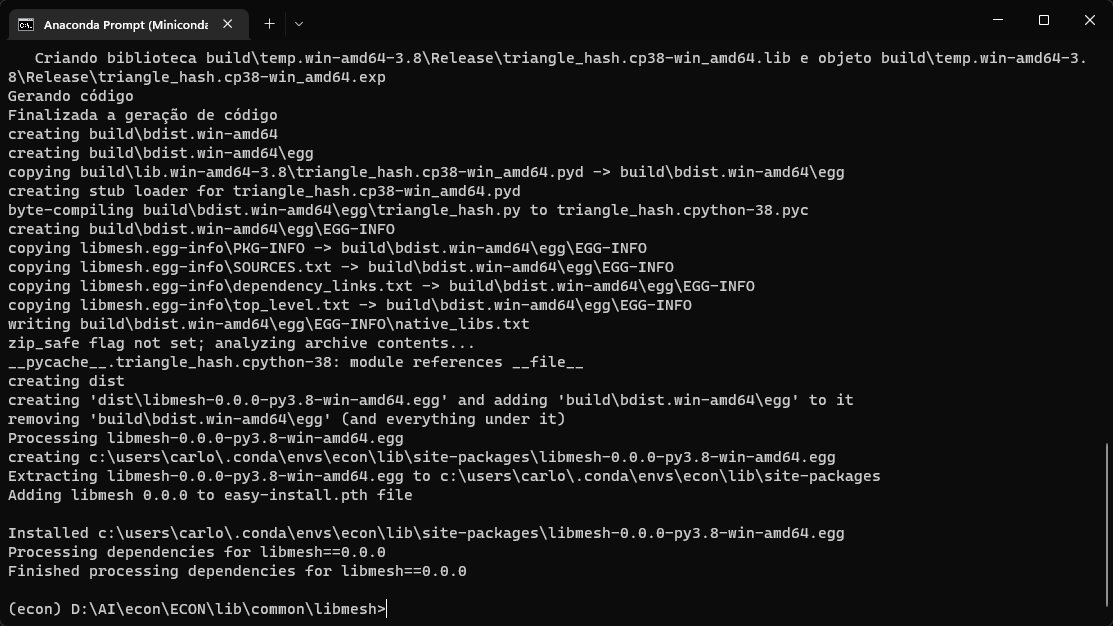

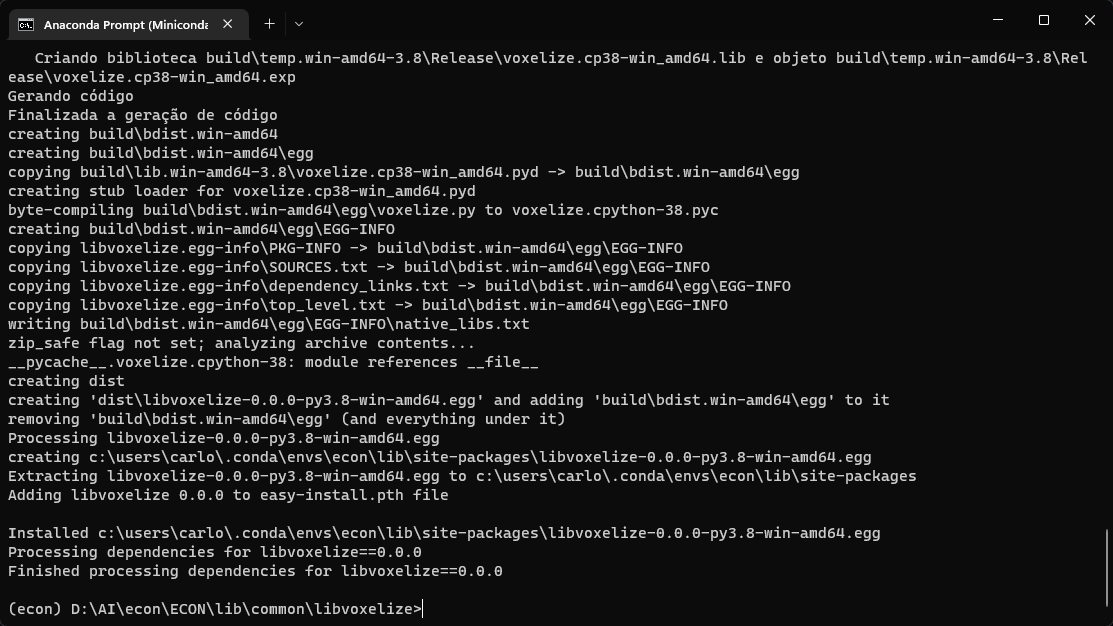

There are two other packages you need to install from inside the ECON folder. In my setup, they are at

D:\AI\econ\ECON\lib\common\libmesh

and

D:\AI\econ\ECON\lib\common\libvoxelize

Go into each of those two folders and run

python setup.py install

After executing python setup.py install for libmesh

After executing python setup.py install for libvoxelize

I think those were the most problematic parts of the installation (it used to be a pain to install pypoisson, but luckily the amazing author switched to another solution, so we don't have to suffer with that 😀).
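After the steps above, a quick way to confirm the environment is complete is to check that the key packages import and that PyTorch sees the GPU. This is a minimal sketch; the module list is an assumption drawn from the packages installed above, and you may want to add the two local extensions under whatever module names their builds produce.

```python
import importlib.util
from typing import Dict, Iterable

# Packages installed in the steps above (assumed top-level module names).
DEFAULT_MODULES = ("torch", "torchvision", "pytorch3d", "fvcore", "iopath")


def check_modules(names: Iterable[str] = DEFAULT_MODULES) -> Dict[str, bool]:
    """Report which top-level modules are importable in this environment."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def torch_cuda_summary() -> str:
    """One-line summary of the installed PyTorch build and GPU visibility."""
    try:
        import torch
    except ImportError:
        return "torch not installed"
    return (f"torch {torch.__version__}, CUDA build {torch.version.cuda}, "
            f"GPU available: {torch.cuda.is_available()}")
```

Run it inside the activated `econ` environment, e.g. `print(check_modules())` and `print(torch_cuda_summary())`; a `False` entry or `GPU available: False` points at the step to revisit.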

Missing file

Running python -m apps.avatarizer -n gets "Killed" at line 163.

I'm sorry to bother you, but I ran into a problem when replacing the hand pose parameters. I noticed that your hand pose parameters are a tensor of shape [1, 15, 3, 3]. My hand pose parameters are a [1, 15, 3] tensor, which I converted with angle_axis_to_rotation_matrix() from kornia (https://github.com/kornia/kornia):

import torch

def angle_axis_to_rotation_matrix(angle_axis):
    # angle_axis: Nx3 axis-angle vectors -> Nx3x3 rotation matrices

    def _compute_rotation_matrix(angle_axis, theta2, eps=1e-6):
        # We want to be careful to only evaluate the square root if the
        # norm of the angle_axis vector is greater than zero. Otherwise
        # we get a division by zero.
        k_one = 1.0
        theta = torch.sqrt(theta2)
        wxyz = angle_axis / (theta + eps)
        wx, wy, wz = torch.chunk(wxyz, 3, dim=1)
        cos_theta = torch.cos(theta)
        sin_theta = torch.sin(theta)
        r00 = cos_theta + wx * wx * (k_one - cos_theta)
        r10 = wz * sin_theta + wx * wy * (k_one - cos_theta)
        r20 = -wy * sin_theta + wx * wz * (k_one - cos_theta)
        r01 = wx * wy * (k_one - cos_theta) - wz * sin_theta
        r11 = cos_theta + wy * wy * (k_one - cos_theta)
        r21 = wx * sin_theta + wy * wz * (k_one - cos_theta)
        r02 = wy * sin_theta + wx * wz * (k_one - cos_theta)
        r12 = -wx * sin_theta + wy * wz * (k_one - cos_theta)
        r22 = cos_theta + wz * wz * (k_one - cos_theta)
        rotation_matrix = torch.cat([r00, r01, r02, r10, r11, r12, r20, r21, r22], dim=1)
        return rotation_matrix.view(-1, 3, 3)

    def _compute_rotation_matrix_taylor(angle_axis):
        # first-order approximation for near-zero rotations
        rx, ry, rz = torch.chunk(angle_axis, 3, dim=1)
        k_one = torch.ones_like(rx)
        rotation_matrix = torch.cat([k_one, -rz, ry, rz, k_one, -rx, -ry, rx, k_one], dim=1)
        return rotation_matrix.view(-1, 3, 3)

    # stolen from ceres/rotation.h
    _angle_axis = torch.unsqueeze(angle_axis, dim=1)
    theta2 = torch.matmul(_angle_axis, _angle_axis.transpose(1, 2))
    theta2 = torch.squeeze(theta2, dim=1)

    # compute rotation matrices
    rotation_matrix_normal = _compute_rotation_matrix(angle_axis, theta2)
    rotation_matrix_taylor = _compute_rotation_matrix_taylor(angle_axis)

    # create mask to handle both cases
    eps = 1e-6
    mask = (theta2 > eps).view(-1, 1, 1).to(theta2.device)
    mask_pos = (mask).type_as(theta2)
    mask_neg = (~mask).type_as(theta2)

    # create output pose matrix (batch of 3x3 identities)
    rotation_matrix = torch.eye(3, device=angle_axis.device, dtype=angle_axis.dtype).repeat(angle_axis.shape[0], 1, 1)

    # fill output matrix with masked values
    rotation_matrix[..., :3, :3] = mask_pos * rotation_matrix_normal + mask_neg * rotation_matrix_taylor
    return rotation_matrix  # Nx3x3

My approach was to directly replace the hand parameters in the data with the converted ones, but the final result is very bad. What should we pay attention to when replacing the hand pose parameters?

pbar = tqdm(dataset)
for data in pbar:
    print(data)
    data['left_hand_pose'] = left_hand_pose_apeng
    data['right_hand_pose'] = right_hand_pose_apeng
    .......
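One thing worth checking when swapping in hand poses is shape handling: the kornia function above expects an N×3 input, so a [1, 15, 3] tensor has to be reshaped to [15, 3] first and the result viewed back as [1, 15, 3, 3]. The NumPy sketch below implements Rodrigues' formula directly on a (..., 3) array, so the [1, 15, 3] → [1, 15, 3, 3] conversion needs no manual reshaping. It is an illustration, not ECON's code. Shape handling is only one possible cause of bad results: if the hand poses come from a different model (for example, a separately fitted MANO hand), the joint order and coordinate conventions may also differ, and reshaping does not fix that.

```python
import numpy as np


def axis_angle_to_matrix(aa, eps: float = 1e-8) -> np.ndarray:
    """Rodrigues' formula for an (..., 3) array of axis-angle vectors.

    Returns an (..., 3, 3) array of rotation matrices.
    """
    aa = np.asarray(aa, dtype=np.float64)
    theta = np.linalg.norm(aa, axis=-1, keepdims=True)    # (..., 1) rotation angle
    axis = aa / np.maximum(theta, eps)                     # unit axis (zero for zero rotations)
    x, y, z = axis[..., 0], axis[..., 1], axis[..., 2]
    zeros = np.zeros_like(x)
    # Skew-symmetric cross-product matrix K of the unit axis
    K = np.stack([zeros, -z, y,
                  z, zeros, -x,
                  -y, x, zeros], axis=-1).reshape(aa.shape[:-1] + (3, 3))
    sin = np.sin(theta)[..., None]                         # (..., 1, 1)
    cos = np.cos(theta)[..., None]
    eye = np.broadcast_to(np.eye(3), K.shape)
    # R = I + sin(theta) K + (1 - cos(theta)) K^2
    return eye + sin * K + (1.0 - cos) * (K @ K)
```

The result can be handed to PyTorch with `torch.from_numpy(axis_angle_to_matrix(hand_aa)).float()` and moved to the same device as the other SMPL-X parameters.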

May I ask when the author will publish the training code?

Hi, I was wondering if you could provide the testing and training code so that we can test and train on other datasets!

Thanks very much!

Hello,

Firstly, I would like to thank you for sharing your code on Github. It's been very helpful.

I was wondering if you could provide some guidance on how to obtain the normal map (per-pixel normal vectors) of an image using your code. Any help or suggestions would be greatly appreciated.

Thank you.
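ECON predicts front and back normal maps from the input image. If what you need is to save such a map as an ordinary image (or read one back), a common convention is to map each unit-normal component from [-1, 1] to [0, 255]. The sketch below assumes the normals are an (H, W, 3) NumPy array of unit vectors; ECON's own saving code may use a different channel order or background color, so check against its outputs before relying on this.

```python
import numpy as np


def normals_to_rgb(normals, mask=None) -> np.ndarray:
    """Encode unit normals in [-1, 1] as an 8-bit RGB image via (n + 1) / 2 * 255."""
    n = np.asarray(normals, dtype=np.float64)
    rgb = np.clip((n + 1.0) * 0.5 * 255.0, 0, 255).round().astype(np.uint8)
    if mask is not None:
        rgb[~np.asarray(mask, dtype=bool)] = 0  # background pixels set to black
    return rgb


def rgb_to_normals(rgb) -> np.ndarray:
    """Inverse mapping; re-normalizes to undo 8-bit quantization error."""
    n = np.asarray(rgb, dtype=np.float64) / 255.0 * 2.0 - 1.0
    norm = np.linalg.norm(n, axis=-1, keepdims=True)
    return n / np.maximum(norm, 1e-8)
```

With this encoding a normal pointing straight at the camera, (0, 0, 1), becomes the familiar light-blue (128, 128, 255).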

Would it be possible, given multi-view input (even 2-3 views or poses), to have the model optimize for a 'consensus' mesh and texture? With a single point of view there are many blind spots due to occlusion, so the confidence for different parts of the texture should vary a lot.
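A generic way to get per-region confidence from several views is to weight each view's color by how directly that view faces the surface, e.g. the clamped cosine between the vertex normal and the direction toward the camera. This is not an ECON feature as far as this page shows; it is a minimal sketch that assumes you already have, for every view, the color sampled at each vertex and a known camera direction (one direction per view, i.e. an orthographic approximation). Occlusion is ignored, so a vertex hidden behind an arm still receives color from a view that faces it.

```python
import numpy as np


def blend_view_colors(colors, normals, view_dirs, power: float = 2.0, eps: float = 1e-8):
    """Confidence-weighted average of per-view vertex colors.

    colors:    (V, N, 3) color of each of N vertices as sampled in each of V views
    normals:   (N, 3) unit vertex normals
    view_dirs: (V, 3) unit vectors pointing from the surface toward each camera
    A view's weight is max(0, n . d) ** power: head-on views dominate and
    back-facing views contribute nothing. Occlusion is not modeled.
    """
    colors = np.asarray(colors, dtype=np.float64)
    cos = np.asarray(view_dirs, dtype=np.float64) @ np.asarray(normals, dtype=np.float64).T  # (V, N)
    w = np.clip(cos, 0.0, None) ** power
    total = w.sum(axis=0)                                                  # (N,)
    blended = (w[..., None] * colors).sum(axis=0) / np.maximum(total, eps)[:, None]
    return blended, total  # total == 0 marks vertices that no view sees
```

Vertices with `total == 0` are seen by no view and still need inpainting or another fallback. Raising `power` favors head-on views more strongly.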

Amazing results. I want to feed a hand image into a neural network and get the hand as an OBJ mesh, then fit it into ECON's result. What should I do? Can I achieve this by changing the hand replacement in infer.py?

Features like animation (bone rig, skinning, BVH driver, deformation), UV maps, and material extraction.

Hello, the paper is great! May I ask when are you going to release the training code?

I recently tried out the newly added TEXTure feature, expecting it to complete the texture based on the input image as shown in the TEXTure Paper. However, the code from YuliangXiu/TEXTure seems to be determined by the prompt in the TEXTure/configs/text_guided/avatar.yaml file, specifically on line 5: text: "A gorgeously composed, high-resolution photograph of a 30 years old man wearing red shirt, blue pants, brown shoes, {} view to the camera".

Is it currently impossible to generate a texture that matches the original image provided as input to ECON?

I've come to realize that this issue stems from the original https://github.com/TEXTurePaper/TEXTurePaper code not providing this functionality yet. Are there any plans to implement this feature directly in the future, or to integrate a similar functionality using another open-source texture generator?
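For reference, the `{}` in the quoted prompt is a placeholder for a view description, presumably filled in per rendered viewpoint. Until image-conditioned texturing is available, one workaround is to rewrite the clothing description in the template so that the text at least matches the input photo. The sketch below shows the substitution; the view names and `make_template` are assumptions, not necessarily what TEXTure uses, and editing the text only guides the generator: it does not make the texture follow the pixels of the input image.

```python
from typing import Dict, Iterable

# Prompt template as quoted from TEXTure/configs/text_guided/avatar.yaml
TEMPLATE = ("A gorgeously composed, high-resolution photograph of a 30 years old man "
            "wearing red shirt, blue pants, brown shoes, {} view to the camera")


def make_template(description: str) -> str:
    """Hypothetical helper: same wording, with the subject/clothing description swapped."""
    return "A gorgeously composed, high-resolution photograph of " + description + ", {} view to the camera"


def view_prompts(template: str,
                 views: Iterable[str] = ("front", "left side", "back", "right side")) -> Dict[str, str]:
    """Fill the `{}` slot of a prompt template with each (assumed) view name."""
    return {view: template.format(view) for view in views}
```

For instance, `view_prompts(make_template("a woman wearing a green dress"))` yields one prompt per view, each ending in "… view to the camera".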

Thanks for your appealing work, sir. The generated character model is beyond what I initially imagined, better than other models I've used before. It would certainly be even better if I could color it.

**So I want to ask: is there any convenient way to color my own generated character model, or to get the model's texture and apply it?** I would be very grateful if you could answer. Thanks
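One simple way to color the reconstruction is to give each vertex the color of the input-image pixel it projects onto. The sketch assumes an orthographic camera in which the mesh's x and y coordinates are normalized to [-1, 1] with y pointing up; that camera convention is an assumption, so verify how ECON's output meshes line up with the input image before using it. `sample_vertex_colors` is a hypothetical helper.

```python
import numpy as np


def sample_vertex_colors(verts, image) -> np.ndarray:
    """Give each vertex the color of the pixel it projects onto.

    verts: (N, 3) vertices with x, y in [-1, 1] (orthographic, y pointing up)
    image: (H, W, 3) uint8 image
    Returns (N, 3) colors. Back-facing and occluded vertices receive the same
    front-view color, so the back of the model mirrors the front.
    """
    verts = np.asarray(verts, dtype=np.float64)
    h, w = image.shape[:2]
    # Map [-1, 1] onto pixel indices; flip y because image rows grow downward
    cols = np.clip(np.round((verts[:, 0] + 1.0) * 0.5 * (w - 1)), 0, w - 1).astype(int)
    rows = np.clip(np.round((1.0 - verts[:, 1]) * 0.5 * (h - 1)), 0, h - 1).astype(int)
    return image[rows, cols]
```

With trimesh, `trimesh.Trimesh(vertices=v, faces=f, vertex_colors=colors).export("colored.ply")` writes the result, and PLY keeps per-vertex colors. Because this is a straight front projection, the unseen back still needs a separate texture source.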

Hello, can ECON's output be used with SCANimate? Their outputs look the same.

Hi Authors,

I hope you are doing great. I am trying to run this repository on my machine in a conda environment with the following specifications:

Ubuntu : 20.04

Cuda: 11.7

Python : 3.8.15

GPU: Tesla V100 16GB

I followed the step-by-step instructions in the installation guide to set up the environment, and there were no errors.

Now, when I try to run inference using this command

python -m apps.infer -cfg ./configs/econ.yaml -in_dir ./examples -out_dir ./results

I get the following output with an error:

resume Normal weights from ./data/ckpt/normal.ckpt

Resume MLP weights from ./data/ckpt/ifnet.ckpt

Use IF-Nets (Implicit)+ for completion

Use PIXIE to estimate human pose and shape

Dataset Size: 1

0%| | 0/1 [00:00<?, ?it/s]INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

304e9c4798a8c3967de7c74c24ef2e38: 0%| | 0/1 [00:07<?, ?it/s]

terminate called after throwing an instance of 'std::bad_alloc'

what(): std::bad_alloc

Aborted (core dumped)

Can you please suggest a possible solution?

Traceback (most recent call last):

File "/home/td/anaconda3/envs/econ/lib/python3.8/runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File "/home/td/anaconda3/envs/econ/lib/python3.8/runpy.py", line 87, in _run_code

exec(code, run_globals)

File "/home/td/Project/ECON/apps/infer.py", line 116, in <module>

for data in pbar:

File "/home/td/anaconda3/envs/econ/lib/python3.8/site-packages/tqdm/std.py", line 1195, in __iter__

for obj in iterable:

File "/home/td/Project/ECON/lib/dataset/TestDataset.py", line 178, in __getitem__

arr_dict = process_image(img_path, self.hps_type, self.single, 512, self.detector)

File "/home/td/Project/ECON/lib/common/imutils.py", line 152, in process_image

img_raw, (in_height, in_width) = load_img(img_file)

File "/home/td/Project/ECON/lib/common/imutils.py", line 60, in load_img

return torch.tensor(img).permute(2, 0, 1).unsqueeze(0).float(), img.shape[:2]

TypeError: can't convert np.ndarray of type numpy.uint16. The only supported types are: float64, float32, float16, complex64, complex128, int64, int32, int16, int8, uint8, and bool.

The first error I hit is shown above.

So I changed the code (/lib/common/imutils.py, line 60) from

return torch.tensor(img).permute(2, 0, 1).unsqueeze(0).float(), img.shape[:2]

->

return torch.tensor(img.astype(np.int32)).permute(2, 0, 1).unsqueeze(0).float(), img.shape[:2]
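Casting to `np.int32` removes the TypeError, but the pixel values stay in the 16-bit range 0-65535, while the rest of the pipeline presumably expects 8-bit values in 0-255 (an assumption, not verified against the code). Rescaling to 8-bit keeps the images in the usual range. The sketch below is a hypothetical helper; whether this also explains the later error is not established.

```python
import numpy as np


def to_uint8(img: np.ndarray) -> np.ndarray:
    """Convert a 16-bit (or already 8-bit) image to 8-bit.

    65535 / 257 == 255 exactly, so dividing by 257 maps the full 16-bit
    range onto the full 8-bit range while preserving relative intensity.
    """
    if img.dtype == np.uint8:
        return img
    if img.dtype == np.uint16:
        return np.round(img.astype(np.float64) / 257.0).astype(np.uint8)
    raise TypeError(f"unsupported dtype {img.dtype}")
```

In `load_img`, one would call `img = to_uint8(img)` before `torch.tensor(img)`. Alternatively, re-extracting the frames as 8-bit PNGs avoids the issue at the source.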

Then I ran inference on PNG images of size (3840, 2160) extracted from an MP4 video, but I got an error.

(econ) td@td-AI-server:~/Project/ECON$ CUDA_VISIBLE_DEVICES=1 python -m apps.infer -cfg ./configs/econ.yaml -in_dir /data/ECON/frames/ -out_dir /data/ECON/result

Resume Normal Estimator from ./data/ckpt/normal.ckpt

Complete with SMPL-X (Explicit)

SMPL-X estimate with PIXIE

Dataset Size: 2763

0%| | 0/2763 [00:00<?, ?it/s]

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

Body Fitting -- normal: 0.000 | silhouette: 0.076 | joint: 0.000 | Total: 0.076| loose:1, occluded:0: 100%|████████████████████████████| 50/50 [00:27<00:00, 1.80it/s]

frame00001: 0%| | 0/2763 [05:01<?, ?it/s]

Traceback (most recent call last):

File "/home/td/anaconda3/envs/econ/lib/python3.8/runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File "/home/td/anaconda3/envs/econ/lib/python3.8/runpy.py", line 87, in _run_code

exec(code, run_globals)

File "/home/td/Project/ECON/apps/infer.py", line 433, in <module>

BNI_object.extract_surface(False)

File "/home/td/Project/ECON/lib/common/BNI.py", line 45, in extract_surface

bni_result = double_side_bilateral_normal_integration(

File "/home/td/Project/ECON/lib/common/BNI_utils.py", line 608, in double_side_bilateral_normal_integration

vertices_front, faces_front = remove_stretched_faces(vertices_front, faces_front)

File "/home/td/Project/ECON/lib/common/BNI_utils.py", line 119, in remove_stretched_faces

faces_cam_angles = np.dot(mesh.face_normals, camera_ray)

File "/home/td/anaconda3/envs/econ/lib/python3.8/site-packages/trimesh/base.py", line 340, in face_normals

triangles=self.triangles,

File "/home/td/anaconda3/envs/econ/lib/python3.8/site-packages/trimesh/caching.py", line 139, in get_cached

value = function(*args, **kwargs)

File "/home/td/anaconda3/envs/econ/lib/python3.8/site-packages/trimesh/base.py", line 782, in triangles

triangles = self.vertices.view(np.ndarray)[self.faces]

IndexError: index 0 is out of bounds for axis 0 with size 0

Here is my environment:

Ubuntu 20.04.5 LTS

Ryzen 5900X

NVIDIA RTX A6000 48GB

CUDA 11.6

and the other libraries installed following your installation script.

Did I miss something?
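The traceback shows `remove_stretched_faces` receiving faces that index into an empty vertex array, i.e. normal integration produced no front-surface vertices for this frame; why that happens is not established here. For long frame sequences, a guard that detects such results lets a batch run skip the frame instead of crashing. `has_valid_mesh` is a hypothetical helper, and calling it just before `remove_stretched_faces` is an assumption about how you might patch your copy.

```python
import numpy as np


def has_valid_mesh(vertices, faces) -> bool:
    """True if there is at least one face and every face index points at a vertex."""
    vertices = np.asarray(vertices)
    faces = np.asarray(faces)
    if len(vertices) == 0 or faces.size == 0:
        return False
    return int(faces.min()) >= 0 and int(faces.max()) < len(vertices)
```

In a per-frame loop, a `False` result would be logged and the frame skipped, so one bad frame does not abort the remaining frames.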

The last 3 lines of the file look like this. I copied them exactly. You tell me what is wrong:

open3d

tinyobjloader==2.0.0rc7

git+https://github.com/facebookresearch/pytorch3d.git

git+https://github.com

Please respect Windows users.

Hi, I see that you trained ECON and ICON on THuman2 in the experiments. However, when I use THuman2 to train the back normal network in ICON, I get bad results. Could you tell me how you trained ICON on THuman2? What are the training details?

Congrats to ECON! 🎉🎉

It is just a kind reminder that an updated version of PyMAF-X is now available for better face and hand mesh regression.

i) the updated PyMAF-X uses the same version of SMPL-X (v2020) as PIXIE;

ii) the face and hand inputs of PyMAF-X are simplified (no need to provide local transformation information);

iii) the face regression performance should be on par with PIXIE; see Fig. 7 and Table 4 of the updated paper;

Please check the updated PyMAF-X for more details :)

Thank you very much for publishing your work. I tried running it on a subset of the People Snapshot Dataset (you can find it in the zip file) and got the following error. Do you have an idea how to fix it?

Body Fitting -- normal: 0.000 | silhouette: 0.143 | joint: 0.409 | Total: 0.552| loose:1, occluded:0: 100%|██████████| 50/50 [00:07<00:00, 6.69it/s]

0: 0%| | 0/130 [04:28<?, ?it/s]: 0.143 | joint: 0.409 | Total: 0.552| loose:1, occluded:0: 100%|██████████| 50/50 [00:07<00:00, 6.46it/s]

Traceback (most recent call last):

File ".../Miniconda3/envs/econ/lib/python3.8/runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File ".../Miniconda3/envs/econ/lib/python3.8/runpy.py", line 87, in _run_code

exec(code, run_globals)

File ".../Projects/ECON/apps/infer.py", line 478, in <module>

BNI_object.extract_surface(False)

File ".../Projects/ECON/lib/common/BNI.py", line 45, in extract_surface

bni_result = double_side_bilateral_normal_integration(

File ".../Projects/ECON/lib/common/BNI_utils.py", line 640, in double_side_bilateral_normal_integration

vertices_front, faces_front = remove_stretched_faces(vertices_front, faces_front)

File ".../Projects/ECON/lib/common/BNI_utils.py", line 124, in remove_stretched_faces

faces_cam_angles = np.dot(mesh.face_normals, camera_ray)

File ".../Miniconda3/envs/econ/lib/python3.8/site-packages/trimesh/base.py", line 340, in face_normals

triangles=self.triangles,

File ".../Miniconda3/envs/econ/lib/python3.8/site-packages/trimesh/caching.py", line 139, in get_cached

value = function(*args, **kwargs)

File ".../Miniconda3/envs/econ/lib/python3.8/site-packages/trimesh/base.py", line 783, in triangles

triangles = self.vertices.view(np.ndarray)[self.faces]

IndexError: index 0 is out of bounds for axis 0 with size 0

Thank you for sharing your awesome work.

I want to use part of your work (apps/benchmark.py) for mesh evaluation on another dataset.

In your code, you evaluate meshes using a different scale for each dataset. How did you determine the scale? (In your code, CAPE is 100.0 and RenderPeople is 1.0.)

Thank you !
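The factors themselves are best confirmed by the authors. One plausible reading is unit conversion: if CAPE meshes are stored in meters and RenderPeople meshes in centimeters, multiplying by 100.0 and 1.0 respectively puts both in centimeters so that distance metrics are comparable. Under that assumption, the scale for another dataset (THuman included) could be estimated from the height of a scan, as in the heuristic sketch below; it assumes the y axis is vertical and a person is roughly 0.5 to 2.5 m tall.

```python
import numpy as np


def guess_unit_scale(vertices, target_height_cm=(50.0, 250.0)) -> float:
    """Guess a factor that converts a human scan to centimeters.

    Tries centimeters (factor 1), meters (factor 100), then millimeters
    (factor 0.1) and returns the first factor that puts the scan's vertical
    extent (y axis) inside a plausible human height range.
    """
    v = np.asarray(vertices, dtype=np.float64)
    height = v[:, 1].max() - v[:, 1].min()
    lo, hi = target_height_cm
    for factor in (1.0, 100.0, 0.1):
        if lo <= height * factor <= hi:
            return factor
    raise ValueError(f"cannot infer units from vertical extent {height:.4g}")
```

For a scan whose vertical extent is about 1.7 the function returns 100.0; for about 170 it returns 1.0. If `benchmark.py` scales for another reason (for example normalization to a canonical size), this heuristic does not apply.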

All 6 prior steps executed correctly without issue.

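Hi Yuliang, thank you for your amazing work. I have a question about multi-camera input; I hope you can answer or give me some suggestions. Thank you very much.

Currently, the ECON model takes one image as input. But in my project I can provide 4 (or more) images. How can I use 4 images (front, back, left side, right side) to reconstruct one clothed body?

Currently ECON takes a single-view RGB image as input. In my project I will have 4 cameras photographing the four sides of a person simultaneously (front, back, left side, right side), and I hope to feed these 4 images into the network together to get a 3D human model with better texture. What should I do? I hope Yuliang can point me in the right direction. Thank you very much!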

Great work that you are doing, congrats on that.

I'm testing ECON on Windows. I struggled a lot to build pytorch3d and, above all, pypoisson.

I ended up building them successfully, but when running inference I get this error:

TypeError: 'NoneType' object is not subscriptable

The full error:

(econ) D:\AI\econ\ECON>python -m apps.infer -cfg ./configs/econ.yaml -in_dir ./examples -out_dir ./results

Resume Normal weights from ./data/ckpt/normal.ckpt

Complete with IF-Nets+ (Implicit)

SMPL-X estimate with PIXIE

Dataset Size: 1

0%| | 0/1 [00:00<?, ?it/s]INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

Body Fitting --- normal: 0.023 | silhouette: 0.053 | joint: 0.059 | Total: 0.135| loose:0, occluded:0: 100%|█| 50/50 [0

examples\304e9c4798a8c3967de7c74c24ef2e38: 0%| | 0/1 [01:25<?, ?it/s]

Traceback (most recent call last):

File "C:\Users\carlo\.conda\envs\econ\lib\runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File "C:\Users\carlo\.conda\envs\econ\lib\runpy.py", line 87, in _run_code

exec(code, run_globals)

File "D:\AI\econ\ECON\apps\infer.py", line 422, in <module>

verts_IF, faces_IF = ifnet_model.reconEngine.export_mesh(sdf)

File "C:\Users\carlo\.conda\envs\econ\lib\site-packages\torch\autograd\grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "D:\AI\econ\ECON\lib\common\seg3d_lossless.py", line 619, in export_mesh

final = occupancys[1:, 1:, 1:].contiguous()

TypeError: 'NoneType' object is not subscriptable

Do you have an idea how to solve this error?

Thanks a lot.

I observe that ECON performs well for SMPL-like human. However, it cannot perform well for the case other than SMPL-like figure, such as a kid, with the default settings. Can these cases be addressed with some other settings?

Thank you so much for your awesome work,sir!

But something strange happens in the exported OBJ file, like this:

The input is a standard T-pose photo, but the output is skewed (including the arms and legs). How could this happen? Maybe my picture is not suitable for the model. Are there certain requirements or things to pay attention to for input images?

Thank you again!

If I want to test on THuman, what scale should I set?

Thanks very much!!

The image of geometry in your paper looks smooth and clean. Can you share the method of rendering and its detailed parameters?

I tried rendering with MeshLab, but my results look transparent in many regions of the body and don't look great, as shown in the second picture.

Thanks for your help!

Excuse me, the paper states that "$Z^{*}_{b}$ is the front or back coarse body depth image rendered from the SMPL-X mesh". Where is this part implemented in the code?

Great work on ECON! Is there a way to store the UVs in SMPLx UV map format?

Thanks for your great work!

I was wondering whether the CAPE-NFP set corresponds to pose.txt in the downloaded CAPE data. I found that pose.txt happens to contain 100 scan names. Did you use that for testing? There is also a file called test150.txt; is it also used for testing?

Thanks very much!

Hi Yuliang,

Amazing work!

avatarizer.py fails when hps_type is set to pymafx, with "RuntimeError: einsum(): subscript l has size 250 for operand 1 which does not broadcast with previously seen size 20".

Traceback (most recent call last):

D:\anaconda3\lib\runpy.py:194 in _run_module_as_main

return _run_code(code, main_globals, None,

D:\anaconda3\lib\runpy.py:87 in _run_code

exec(code, run_globals)

D:\xkk\human\ECON-master\ECON-master\apps\avatarizer.py:69 in <module>

smpl_model(

D:\anaconda3\lib\site-packages\torch\nn\modules\module.py:1194 in _call_impl

return forward_call(*input, **kwargs)

D:\xkk\human\ECON-master\ECON-master\lib\smplx\body_models.py:1316 in forward

vertices, joints, joint_transformation, vertex_transformation = lbs(

D:\xkk\human\ECON-master\ECON-master\lib\smplx\lbs.py:194 in lbs

v_shaped = v_template + blend_shapes(betas, shapedirs)

D:\xkk\human\ECON-master\ECON-master\lib\smplx\lbs.py:366 in blend_shapes

blend_shape = torch.einsum("bl,mkl->bmk", [betas, shape_disps])

D:\anaconda3\lib\site-packages\torch\functional.py:373 in einsum

return einsum(equation, *_operands)

D:\anaconda3\lib\site-packages\torch\functional.py:378 in einsum

return _VF.einsum(equation, operands) # type: ignore[attr-defined]
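The two sizes in the error meet in `blend_shapes`, where the einsum contracts the shape-plus-expression coefficients (20 here) against the SMPL-X model's shape-plus-expression directions (250 here). So the SMPL-X model built in `avatarizer.py` expects more coefficients than the PyMAF-X estimate provides. One fix is to zero-pad the coefficients to the model's sizes; another is to construct the model with `num_betas` and `num_expression_coeffs` matching PyMAF-X, assuming ECON's vendored `lib/smplx` keeps the upstream smplx constructor arguments. The sketch shows padding in NumPy; `pad_coeffs` is a hypothetical helper.

```python
import numpy as np


def pad_coeffs(coeffs, target_dim: int) -> np.ndarray:
    """Zero-pad a (B, D) coefficient array to (B, target_dim).

    Zero-padding is exact for linear blend shapes: the extra components get
    weight 0 and add no displacement. It assumes the first D model components
    are the same basis the coefficients were estimated in (same SMPL-X version).
    """
    coeffs = np.asarray(coeffs, dtype=np.float64)
    b, d = coeffs.shape
    if d > target_dim:
        raise ValueError(f"{d} coefficients do not fit a model with {target_dim}")
    return np.concatenate([coeffs, np.zeros((b, target_dim - d))], axis=1)
```

In PyTorch the equivalent is `torch.nn.functional.pad(betas, (0, target_dim - betas.shape[1]))`, applied separately to the betas and to the expression coefficients, each with its own target size.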

The version of pytorch3d in the requirements is 0.7.1,

but that version only supports PyTorch 1.12.1,

not PyTorch 1.13.0.

I am not sure whether that is why the local installation failed,

so I used conda to install pytorch3d 0.7.4,

but the result is not as good as yours.

Your result looks so well-fitted and smooth.

Is that a pytorch3d problem, or do I need to do some post-processing?

How can I get results like yours?

I don't want my results to have a colored back with the picture on the front and a gray interface.

Trying to install ECON on my workstation: Ryzen 7950X and RTX 4090, Ubuntu 20.04 (WSL2 on Windows 11).

Installing the requirements fails with:

ERROR: Failed building wheel for pytorch3d

ValueError: Unknown CUDA arch (8.9) or GPU not supported
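PyTorch's C++/CUDA extension builder checks the GPU's compute capability against the architectures it knows, and older PyTorch releases do not list 8.9. Setting the `TORCH_CUDA_ARCH_LIST` environment variable makes the build compile for the listed architectures instead of the detected one. Since binaries compiled for compute capability 8.6 also run on 8.x GPUs with a higher minor version, `8.6+PTX` is one reasonable choice. The sketch below is an assumption about how you might script it; the pip target is the pytorch3d line from the requirements file. Moving to a newer PyTorch release whose extension builder knows 8.9 is the other route.

```python
import os
import subprocess
import sys


def build_env(arch_list: str = "8.6+PTX") -> dict:
    """Copy of the current environment with TORCH_CUDA_ARCH_LIST set.

    torch.utils.cpp_extension uses TORCH_CUDA_ARCH_LIST instead of
    auto-detecting the GPU, which sidesteps "Unknown CUDA arch (8.9)".
    os.environ itself is left unchanged.
    """
    env = dict(os.environ)
    env["TORCH_CUDA_ARCH_LIST"] = arch_list
    return env


def install_pytorch3d(arch_list: str = "8.6+PTX") -> int:
    """Build pytorch3d from source with the given arch list; returns pip's exit code."""
    cmd = [sys.executable, "-m", "pip", "install",
           "git+https://github.com/facebookresearch/pytorch3d.git"]
    return subprocess.call(cmd, env=build_env(arch_list))
```

The shell equivalent inside WSL2 is `TORCH_CUDA_ARCH_LIST="8.6+PTX" pip install git+https://github.com/facebookresearch/pytorch3d.git`.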

Hi, thanks for your excellent work. The paper says, "the positional embedding (N freqs = 6) of query points is concatenated with multi-scale deep features to account for high-frequency details." I can't find the positional embedding in the code. Where is this part implemented?

Thanks!
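For orientation, the sketch below is a generic NeRF-style positional encoding with 6 frequencies, matching the "N freqs = 6" in the quoted sentence; it is not ECON's implementation. Implementations differ in details such as whether the frequencies include a factor of π and whether the raw input is kept, and which choices ECON makes is not confirmed here; both are exposed as comments or parameters.

```python
import numpy as np


def positional_encoding(x, n_freqs: int = 6, include_input: bool = True) -> np.ndarray:
    """Encode (N, D) points as [x, sin(2^k * pi * x), cos(2^k * pi * x)] for k < n_freqs.

    Output shape: (N, D * (include_input + 2 * n_freqs)).
    """
    x = np.asarray(x, dtype=np.float64)
    freqs = (2.0 ** np.arange(n_freqs)) * np.pi                 # (F,); some codebases omit pi
    scaled = x[..., None] * freqs                                # (N, D, F)
    enc = np.concatenate([np.sin(scaled), np.cos(scaled)], axis=-1).reshape(x.shape[0], -1)
    return np.concatenate([x, enc], axis=-1) if include_input else enc
```

For 3D query points, `positional_encoding(points)` returns 3 + 3·2·6 = 39 features per point, which would then be concatenated with the sampled deep features.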
