multihash implementation in Rust.

First, add this to your Cargo.toml:

    [dependencies]
    multihash = "*"

Then run cargo build.

The minimum supported Rust version for this library is 1.64.0.

This is only guaranteed when no additional features are enabled.

The multihash crate exposes a basic data structure for encoding and decoding multihashes.

It does not provide any hashing functionality itself.
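To make "encoding and decoding" concrete, here is a minimal standalone sketch of the multihash byte layout: an unsigned varint hash code, an unsigned varint digest length, then the digest bytes. It uses only the standard library and is not the crate's implementation; the helper names `write_varint`, `read_varint`, `encode` and `decode` are hypothetical and chosen for illustration.

```rust
/// Append `value` to `out` as an unsigned varint (7 payload bits per byte,
/// high bit set on every byte except the last).
fn write_varint(mut value: u64, out: &mut Vec<u8>) {
    while value >= 0x80 {
        out.push((value as u8 & 0x7f) | 0x80);
        value >>= 7;
    }
    out.push(value as u8);
}

/// Read an unsigned varint from the front of `input`.
/// Returns the value and the remaining bytes, or `None` if the input is
/// truncated or longer than 9 bytes.
fn read_varint(input: &[u8]) -> Option<(u64, &[u8])> {
    let mut value = 0u64;
    for (i, &byte) in input.iter().enumerate().take(9) {
        value |= u64::from(byte & 0x7f) << (7 * i);
        if byte & 0x80 == 0 {
            return Some((value, &input[i + 1..]));
        }
    }
    None
}

/// Serialize a hash code and an already-computed digest as multihash bytes.
fn encode(code: u64, digest: &[u8]) -> Vec<u8> {
    let mut out = Vec::with_capacity(digest.len() + 2);
    write_varint(code, &mut out);
    write_varint(digest.len() as u64, &mut out);
    out.extend_from_slice(digest);
    out
}

/// Parse multihash bytes back into `(code, digest)`.
/// Returns `None` if the declared length does not match the payload.
fn decode(bytes: &[u8]) -> Option<(u64, &[u8])> {
    let (code, rest) = read_varint(bytes)?;
    let (len, rest) = read_varint(rest)?;
    if rest.len() as u64 != len {
        return None;
    }
    Some((code, rest))
}

fn main() {
    // 0x12 is the multicodec code for SHA2-256; the "digest" here is a
    // placeholder, since no hashing is performed.
    let bytes = encode(0x12, b"my digest");
    println!("{:02x?}", bytes);

    let (code, digest) = decode(&bytes).expect("well-formed multihash");
    println!("code = {:#x}, digest length = {}", code, digest.len());
}
```

As with the crate itself, the sketch only wraps a digest that was computed elsewhere; it never hashes anything.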

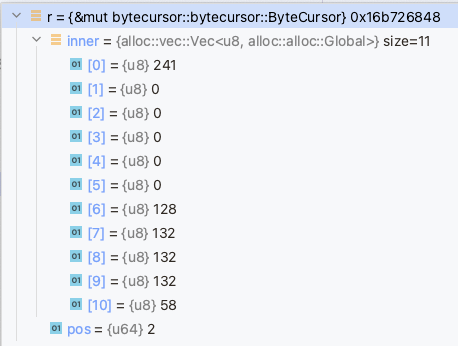

Multihash uses const-generics to define the internal buffer size.

You should set this to the maximum size of the digest you want to support.
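The role of the size parameter can be illustrated with a standalone sketch of a const-generic, fixed-capacity digest container. `FixedDigest` is a hypothetical type written for this example and is not the crate's `Multihash`; it only shows the general idea that the capacity `S` is fixed at compile time and that a digest longer than `S` has to be rejected.

```rust
/// Hypothetical stand-in for a digest container whose capacity `S`
/// is chosen at compile time.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct FixedDigest<const S: usize> {
    len: usize,
    bytes: [u8; S],
}

impl<const S: usize> FixedDigest<S> {
    /// Copy `digest` into the inline buffer, or fail if it does not fit.
    fn wrap(digest: &[u8]) -> Result<Self, String> {
        if digest.len() > S {
            return Err(format!(
                "digest of {} bytes exceeds capacity of {} bytes",
                digest.len(),
                S
            ));
        }
        let mut bytes = [0u8; S];
        bytes[..digest.len()].copy_from_slice(digest);
        Ok(Self { len: digest.len(), bytes })
    }

    /// The stored digest, without the unused tail of the buffer.
    fn digest(&self) -> &[u8] {
        &self.bytes[..self.len]
    }
}

fn main() {
    let sha256_sized = [0xab_u8; 32]; // same length as a SHA2-256 digest
    let sha512_sized = [0xcd_u8; 64]; // same length as a SHA2-512 digest

    // A 64-byte buffer can hold either digest.
    println!("{:?}", FixedDigest::<64>::wrap(&sha256_sized).map(|d| d.digest().len()));
    println!("{:?}", FixedDigest::<64>::wrap(&sha512_sized).map(|d| d.digest().len()));

    // A 32-byte buffer cannot hold the 64-byte digest.
    println!("{:?}", FixedDigest::<32>::wrap(&sha512_sized).map(|d| d.digest().len()));
}
```

The trade-off is that every value occupies the full `S` bytes regardless of the digest it holds, which is why the size should match the largest digest you actually need.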

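    use multihash::Multihash;

    // Multicodec code for SHA2-256.
    const SHA2_256: u64 = 0x12;

    fn main() {
        // `wrap` returns a `Result`: it fails if the digest does not fit
        // into the 64-byte buffer.
        let hash = Multihash::<64>::wrap(SHA2_256, b"my digest");
        println!("{:?}", hash);
    }

You can derive your own application-specific code table using the multihash-derive crate.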

The multihash-codetable provides predefined hasher implementations if you don't want to implement your own.

    use multihash_derive::MultihashDigest;

    #[derive(Clone, Copy, Debug, Eq, MultihashDigest, PartialEq)]
    #[mh(alloc_size = 64)]
    pub enum Code {
        #[mh(code = 0x01, hasher = multihash_codetable::Sha2_256)]
        Foo,
        #[mh(code = 0x02, hasher = multihash_codetable::Sha2_512)]
        Bar,
    }

    fn main() {
        let hash = Code::Foo.digest(b"my hash");
        println!("{:02x?}", hash);
    }

Supported hash types:

- SHA1
- SHA2-256
- SHA2-512
- SHA3/Keccak
- Blake2b-256/Blake2b-512/Blake2s-128/Blake2s-256
- Blake3
- Strobe
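Each hash type is identified on the wire by a numeric code. The following standalone sketch shows what a small code table could look like as a plain Rust enum with conversions to and from `u64`. `HashCode` is a hypothetical type covering only a handful of the hashes above, with code values taken from the multicodec table; it is not the table generated by multihash-derive or shipped in multihash-codetable.

```rust
use std::convert::TryFrom;

/// Hypothetical, hand-written code table for a few hash types.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum HashCode {
    Sha1,
    Sha2_256,
    Sha2_512,
    Sha3_256,
    Blake3,
}

impl From<HashCode> for u64 {
    /// Map a table entry to its multicodec code.
    fn from(code: HashCode) -> u64 {
        match code {
            HashCode::Sha1 => 0x11,
            HashCode::Sha2_256 => 0x12,
            HashCode::Sha2_512 => 0x13,
            HashCode::Sha3_256 => 0x16,
            HashCode::Blake3 => 0x1e,
        }
    }
}

impl TryFrom<u64> for HashCode {
    /// The unrecognized code is handed back to the caller.
    type Error = u64;

    /// Look up a numeric code; codes outside this small table are errors.
    fn try_from(raw: u64) -> Result<Self, Self::Error> {
        match raw {
            0x11 => Ok(HashCode::Sha1),
            0x12 => Ok(HashCode::Sha2_256),
            0x13 => Ok(HashCode::Sha2_512),
            0x16 => Ok(HashCode::Sha3_256),
            0x1e => Ok(HashCode::Blake3),
            other => Err(other),
        }
    }
}

fn main() {
    let code = u64::from(HashCode::Sha2_256);
    println!("SHA2-256 is registered as {:#x}", code);

    match HashCode::try_from(0x1e_u64) {
        Ok(hash) => println!("0x1e maps to {:?}", hash),
        Err(unknown) => println!("unknown code {:#x}", unknown),
    }
}
```

Rejecting unknown codes at the conversion boundary keeps the rest of an application working with a closed set of hash types.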

Captain: @dignifiedquire.

Contributions welcome. Please check out the issues.

Check out our contributing document for more information on how we work, and about contributing in general. Please be aware that all interactions related to multiformats are subject to the IPFS Code of Conduct.

Small note: If editing the README, please conform to the standard-readme specification.