Implementation and Evaluation of Deep Learning Approaches for Real-time 3D Robot Localization in an Augmented Reality Use Case

- The manual 6D object pose annotation tool - http://annotate.photo/

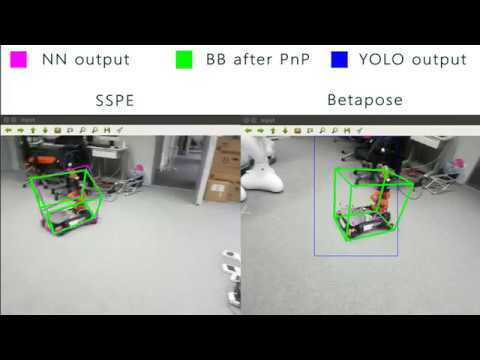

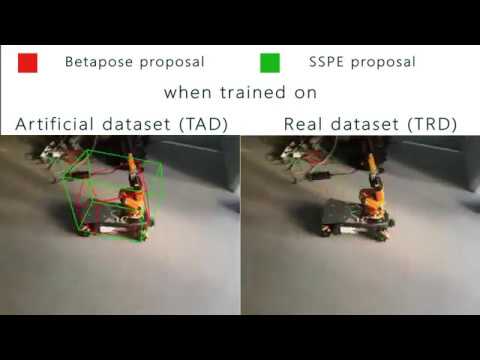

- Single Shot Pose Estimation (SSPE)

- Betapose

- Gabriel

- The 5 datasets used for training and evaluation - Google Drive

- The weights for SSPE and Betapose - Google Drive

- Presentation slides and documentation

This project is a fusion of SSPE, Betapose and Gabriel. The betapose and sspe directories are used for training and evaluation. The tools directory contains useful tools such as projectors, dataset converters and augmentors, as well as PLY manipulators, which are mainly useful for data preparation. The gabriel directory contains the Gabriel code base with 2 new proxies (cognitive engines) for Betapose and SSPE.

In many subdirectories there is a commands.txt file which contains the actual commands that were used during the project. They can be used for reference.

The programs were run with NVIDIA driver 418.87 and CUDA 10.0. Betapose and SSPE (including their Gabriel proxies) were run on Python 3.6.8, while the 2 other Gabriel servers were run on Python 2.7.16.

If you are going to use the existing weights, you can skip the training step.

The betapose and sspe folders are used for training. To train either method, first convert the datasets into the format that method needs, using the tools provided in this repository under tools/converters/. Once your dataset is ready, follow the README.md file of each method, which explains the training in more detail.

Notice: Training SSPE requires a GPU with at least 8 GB of memory.

To evaluate SSPE just run the SSPE evaluation script.

To evaluate Betapose with the same metrics as the ones used by SSPE do the following:

- Run `betapose_evaluate.py` with the `save` option enabled.

- Take the output file `Betapose-results.json` and move it into the directory of the evaluation script. Also move the SSPE label files for the same images into that directory, then run the evaluation script.
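The file staging in the steps above could be scripted roughly as follows. This is only a sketch: the function name and all three paths are hypothetical, the label files are assumed to be `*.txt` files, and the files are copied rather than moved so the originals stay in place.

```python
import shutil
from pathlib import Path


def stage_evaluation_inputs(results_json, label_dir, eval_dir):
    """Copy the Betapose results file and the SSPE label files into eval_dir.

    All paths are placeholders chosen by the caller. Label files are
    assumed to use the .txt extension. Returns the copied file paths.
    """
    results_json = Path(results_json)
    label_dir = Path(label_dir)
    eval_dir = Path(eval_dir)
    if not results_json.is_file():
        raise FileNotFoundError("missing results file: {}".format(results_json))
    eval_dir.mkdir(parents=True, exist_ok=True)

    # The results file goes first, then every label file in sorted order.
    copied = [Path(shutil.copy2(str(results_json), str(eval_dir)))]
    for label in sorted(label_dir.glob("*.txt")):
        copied.append(Path(shutil.copy2(str(label), str(eval_dir))))
    return copied
```

After staging, the evaluation script is run from `eval_dir` as described above.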

You have to run the Gabriel servers locally. If you get stuck with these instructions, see the Gabriel README for more information.

It is advised to have 3 Anaconda environments: one for Betapose (Python 3.6), one for SSPE (Python 3.6) and one for Gabriel (Python 2.7), matching the versions listed above. After installing all needed libraries in the corresponding environments, you can proceed with running the servers:

- Connect the computer and the AR-enabled device to the same WiFi network. It would be easiest if you make one of the devices a hotspot.

- (Gabriel Environment) Run the control server from the `gabriel/server/bin` directory:

  `python gabriel-control -n wlp3s0 -l`

- (Gabriel Environment) Run the ucomm server from the `gabriel/server/bin` directory:

  `python gabriel-ucomm -n wlp3s0`
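If you restart the two core servers often, a small launcher can save some typing. The sketch below only rebuilds the two commands shown above with the interface name as a parameter; the function names are hypothetical, it does not wait for the control server to be ready before starting ucomm, and it avoids Python 3 only syntax so that it can run inside the Gabriel environment.

```python
import subprocess

GABRIEL_BIN_DIR = "gabriel/server/bin"  # directory named in the steps above


def gabriel_core_commands(interface, python="python"):
    """Return the argv lists for the control and ucomm servers."""
    control = [python, "gabriel-control", "-n", interface, "-l"]
    ucomm = [python, "gabriel-ucomm", "-n", interface]
    return control, ucomm


def start_gabriel_core(interface, bin_dir=GABRIEL_BIN_DIR):
    """Start both servers as child processes and return their handles."""
    commands = gabriel_core_commands(interface)
    return [subprocess.Popen(cmd, cwd=bin_dir) for cmd in commands]
```

Calling `start_gabriel_core("wlp3s0")` from the repository root would then correspond to the two manual commands.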

Now we have the core of Gabriel running with no cognitive engines. To start the SSPE cognitive engine, do the following:

- Go to the `gabriel/server/bin/example-proxies/gabriel-proxy-sspe` directory

- Put the PLY 3D model in the `./sspd/3d_models` directory

- Put the configuration file in the `./sspd/cfg` directory

- Put the weights in the `./sspd/backup/kuka` directory

- Run the following command (it might change according to the names you've used):

  `python proxy.py ./sspd/cfg/kuka.data ./sspd/cfg/yolo-pose-noanchor.cfg ./sspd/backup/kuka/model.weights 0.0.0.0:8021`
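Because the file names in that command depend on what you called your files, it can help to check the layout before launching the proxy. The following is a minimal sketch under stated assumptions: the function name is hypothetical, the default file names are simply the ones from the command above, and any `*.ply` file in `3d_models` is accepted because the steps do not name the model file.

```python
from pathlib import Path


def sspe_proxy_command(proxy_dir=".", data_cfg="kuka.data",
                       model_cfg="yolo-pose-noanchor.cfg",
                       weights="model.weights", address="0.0.0.0:8021"):
    """Return (argv, missing) for the SSPE proxy.

    argv mirrors the command from the steps above; missing lists the
    expected inputs that were not found under proxy_dir.
    """
    relative = [
        "./sspd/cfg/" + data_cfg,
        "./sspd/cfg/" + model_cfg,
        "./sspd/backup/kuka/" + weights,
    ]
    root = Path(proxy_dir)
    missing = [rel for rel in relative if not (root / rel).is_file()]

    # Any PLY file counts as the 3D model here (an assumption).
    models_dir = root / "sspd" / "3d_models"
    if not (models_dir.is_dir() and any(models_dir.glob("*.ply"))):
        missing.append("./sspd/3d_models/*.ply")

    argv = ["python", "proxy.py"] + relative + [address]
    return argv, missing
```

If `missing` is empty, `argv` can be passed to `subprocess.call` from inside the proxy directory; otherwise the list shows which of the files from the steps above still need to be put in place.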

To start the Betapose cognitive engine, do the following:

- Go to the `gabriel/server/bin/example-proxies/gabriel-proxy-betapose` directory

- Put the PLY 3D object model in the `./betapose/models/models` directory

- Put the PLY 3D key points model in the `./betapose/models/kpmodels` directory

- Put the KPD weights named `kpd.pkl` in the `./betapose/weights` directory

- Put the YOLO weights named `yolo.weights` in the `./betapose/weights` directory

- Run the following command:

  `python proxy.py --control_server 0.0.0.0:8021`
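The Betapose proxy takes no file arguments on the command line, so a misplaced file only shows up once the proxy starts. A quick check of the layout listed above might look like this; the helper name and constants are hypothetical, and the PLY file names are not fixed by the steps, so any `*.ply` file is accepted.

```python
from pathlib import Path

# Layout from the steps above, relative to the gabriel-proxy-betapose directory.
BETAPOSE_FILES = ["betapose/weights/kpd.pkl", "betapose/weights/yolo.weights"]
BETAPOSE_PLY_DIRS = ["betapose/models/models", "betapose/models/kpmodels"]


def missing_betapose_inputs(proxy_dir="."):
    """Return the expected Betapose inputs that are not present under proxy_dir."""
    root = Path(proxy_dir)
    missing = [name for name in BETAPOSE_FILES if not (root / name).is_file()]
    for name in BETAPOSE_PLY_DIRS:
        folder = root / name
        # Each model directory needs at least one PLY file.
        if not (folder.is_dir() and any(folder.glob("*.ply"))):
            missing.append(name + "/*.ply")
    return missing
```

An empty return value means every file named in the steps is in place and the proxy command can be run.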