Project Page | Paper | Video

Official implementation of HumanNorm, a method for generating high-quality and realistic 3D humans from text prompts.

Xin Huang1*, Ruizhi Shao2*, Qi Zhang1, Hongwen Zhang2, Ying Feng1, Yebin Liu2, Qing Wang1

1Northwestern Polytechnical University, 2Tsinghua University, *Equal Contribution


The installation is the same as for the original threestudio. Skip this part if you have already set up that environment.

See installation.md for additional information, including installation via Docker.

- You must have an NVIDIA graphics card with at least 20GB VRAM and have CUDA installed.

- Install Python >= 3.8.

- (Optional, Recommended) Create a virtual environment:

pip3 install virtualenv # if virtualenv is installed, skip it
python3 -m virtualenv venv
. venv/bin/activate

# Newer pip versions, e.g. pip-23.x, can be much faster than old versions, e.g. pip-20.x.
# For instance, it caches the wheels of git packages to avoid unnecessarily rebuilding them later.
python3 -m pip install --upgrade pip

- Install PyTorch >= 1.12. We have tested on torch1.12.1+cu113 and torch2.0.0+cu118, but other versions should also work fine.

# torch1.12.1+cu113
pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu113

# or torch2.0.0+cu118
pip install torch torchvision --index-url https://download.pytorch.org/whl/cu118

- (Optional, Recommended) Install ninja to speed up the compilation of CUDA extensions:

pip install ninja

- Install dependencies:

pip install -r requirements.txt

- (Optional) tiny-cuda-nn installation might require downgrading pip to 23.0.1.
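Before moving on, it can help to confirm that the environment meets the requirements listed above (Python >= 3.8, PyTorch >= 1.12, CUDA, roughly 20GB of VRAM). The following is a minimal sketch and not part of the repository: the names `check_environment`, `parse_version` and `probe` are hypothetical, the thresholds are copied from the list above, and the PyTorch query is skipped when `torch` cannot be imported.

```python
import sys

MIN_PYTHON = (3, 8)   # from the requirement "Python >= 3.8"
MIN_TORCH = (1, 12)   # from the requirement "PyTorch >= 1.12"
MIN_VRAM_GB = 20      # from the requirement "at least 20GB VRAM"


def parse_version(text):
    """Turn '1.12.1+cu113' into (1, 12) for a coarse major.minor comparison."""
    core = text.split("+")[0]
    major, minor = core.split(".")[:2]
    return int(major), int(minor)


def check_environment(python_version, torch_version, cuda_available, vram_gb):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append("Python >= 3.8 is required")
    if torch_version is None:
        problems.append("PyTorch is not installed")
    elif parse_version(torch_version) < MIN_TORCH:
        problems.append("PyTorch >= 1.12 is required")
    if not cuda_available:
        problems.append("CUDA is not available")
    elif vram_gb < MIN_VRAM_GB:
        problems.append("at least 20GB of VRAM is required")
    return problems


def probe():
    """Collect the values from the running interpreter (torch is optional)."""
    try:
        import torch
    except ImportError:
        return sys.version_info, None, False, 0.0
    cuda = torch.cuda.is_available()
    vram = 0.0
    if cuda:
        # Memory of the first visible GPU, converted from bytes to GiB.
        vram = torch.cuda.get_device_properties(0).total_memory / 1024 ** 3
    return sys.version_info, torch.__version__, cuda, vram


if __name__ == "__main__":
    for line in check_environment(*probe()) or ["environment looks OK"]:
        print(line)
```

The memory reported by the driver is usually slightly below the nominal size of the card, so a VRAM warning on a card sold as 20GB should be read as a rough guide rather than a hard failure.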

You can download our fine-tuned models on HuggingFace: Normal-adapted-model, Depth-adapted-model, Normal-aligned-model and ControlNet. We provide a script to download these models:

./download_models.sh

After downloading, the pretrained_models/ directory is structured like this:

./pretrained_models
├── normal-adapted-sd1.5/
├── depth-adapted-sd1.5/
├── normal-aligned-sd1.5/
└── controlnet-normal-sd1.5/
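A quick way to confirm that the download finished is to compare the directory against the tree above. This is a small sketch under the assumption that the four sub-directory names are exactly those shown; `missing_model_dirs` is a hypothetical helper, not something shipped with the repository.

```python
from pathlib import Path

# Sub-directories listed in the tree above.
EXPECTED_MODEL_DIRS = (
    "normal-adapted-sd1.5",
    "depth-adapted-sd1.5",
    "normal-aligned-sd1.5",
    "controlnet-normal-sd1.5",
)


def missing_model_dirs(root="./pretrained_models"):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [name for name in EXPECTED_MODEL_DIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_model_dirs()
    if missing:
        print("Missing model directories:", ", ".join(missing))
    else:
        print("All expected model directories are present.")
```

The check only looks at directory names; it does not validate the files inside each model folder.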

You can download the predefined tetrahedra for DMTet by running:

sudo apt-get install git-lfs # install git-lfs
cd load/
sudo chmod +x download.sh
./download.sh

After downloading, the load/ directory is structured like this:

./load
├── lights/
├── shapes/
└── tets
    ├── ...
    ├── 128_tets.npz
    ├── 256_tets.npz
    ├── 512_tets.npz
    └── ...
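To see which tetrahedral grids were actually fetched, the file names in load/tets can be scanned. The sketch below assumes the naming pattern `<N>_tets.npz` seen in the tree above and treats the numeric prefix as the grid resolution, which is an assumption on our part; `available_tet_resolutions` is a hypothetical helper.

```python
import re
from pathlib import Path

# Matches names such as "128_tets.npz" and captures the numeric prefix.
TETS_PATTERN = re.compile(r"^(\d+)_tets\.npz$")


def available_tet_resolutions(tets_dir="./load/tets"):
    """Return the sorted numeric prefixes of the <N>_tets.npz files found."""
    tets_dir = Path(tets_dir)
    if not tets_dir.is_dir():
        return []
    resolutions = []
    for entry in tets_dir.iterdir():
        match = TETS_PATTERN.match(entry.name)
        if match and entry.is_file():
            resolutions.append(int(match.group(1)))
    return sorted(resolutions)


if __name__ == "__main__":
    print("Tetrahedral grids found:", available_tet_resolutions())
```

An empty list usually means that download.sh has not been run yet or that git-lfs did not pull the files.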

The directory scripts contains the scripts for full-body, half-body, and head-only human generation. The directory configs contains the parameter settings for each of these generation modes.

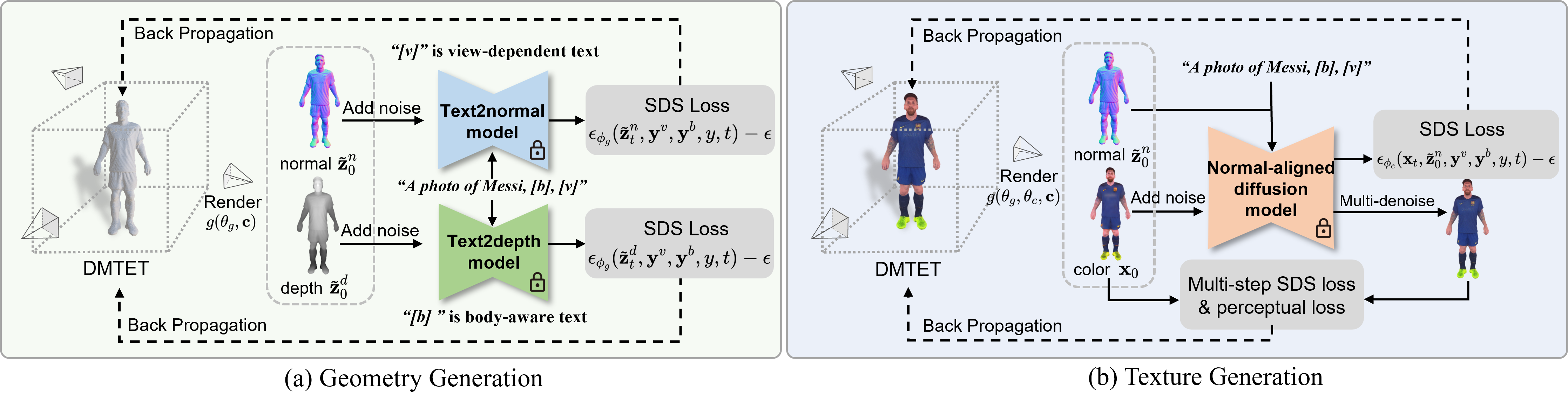

HumanNorm generates 3D humans in three steps: geometry generation, coarse texture generation, and fine texture generation. You can run all three steps directly with these scripts. For example:

./scripts/run_generation_full_body.sh

After generation, you can get the result for each step.

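How the three steps hand their results to one another can be read off run_generation_full_body.sh, which is reproduced below: each stage writes to `$exp_root_dir/$exp_name/$tag$timestamp`, stages 2 and 3 load the stage 1 checkpoint as geometry, and stage 3 resumes from the stage 2 checkpoint. The following sketch only restates that wiring in Python. `plan_stages` and `trial_dir` are hypothetical helpers, and the `ckpts/last.ckpt` location is taken from the script rather than verified against the training code.

```python
import posixpath


def trial_dir(exp_root_dir, exp_name, tag, timestamp):
    """Mirror the script's "$exp_root_dir/$exp_name/$tag$timestamp" layout."""
    return posixpath.join(exp_root_dir, exp_name, f"{tag}{timestamp}")


def plan_stages(prompt, tag, timestamp, exp_root_dir="./outputs"):
    """Describe the prompts and input checkpoints of the three stages."""
    geometry_ckpt = posixpath.join(
        trial_dir(exp_root_dir, "stage1-geometry", tag, timestamp),
        "ckpts", "last.ckpt")
    coarse_ckpt = posixpath.join(
        trial_dir(exp_root_dir, "stage2-coarse-texture", tag, timestamp),
        "ckpts", "last.ckpt")
    return [
        {   # Stage 1 uses two prompts: one for normal maps, one for depth maps.
            "name": "stage1-geometry",
            "prompts": [f"{prompt}, black background, normal map",
                        f"{prompt}, black background, depth map"],
            "geometry_convert_from": None,
            "resume": None,
        },
        {   # Stage 2 textures the geometry produced by stage 1.
            "name": "stage2-coarse-texture",
            "prompts": [prompt],
            "geometry_convert_from": geometry_ckpt,
            "resume": None,
        },
        {   # Stage 3 keeps the same geometry and resumes from stage 2.
            "name": "stage3-fine-texture",
            "prompts": [prompt],
            "geometry_convert_from": geometry_ckpt,
            "resume": coarse_ckpt,
        },
    ]


if __name__ == "__main__":
    for stage in plan_stages("a DSLR photo of Stephen Curry", "curry", "_20231223"):
        print(stage["name"], "<-", stage["geometry_convert_from"], stage["resume"])
```

Because the tag and timestamp are part of every path, changing only the prompt without changing the tag would point a new run at the directories of the previous one.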

You can also modify the prompt in run_generation_full_body.sh to generate other models. The script looks like this:

#!/bin/bash
exp_root_dir="./outputs"
test_save_path="./outputs/rgb_cache"
timestamp="_20231223"
tag="curry"
prompt="a DSLR photo of Stephen Curry"

# Stage1: geometry generation
exp_name="stage1-geometry"
python launch.py \
    --config configs/humannorm-geometry-full.yaml \
    --train \
    timestamp=$timestamp \
    tag=$tag \
    name=$exp_name \
    exp_root_dir=$exp_root_dir \
    data.sampling_type="full_body" \
    system.prompt_processor.prompt="$prompt, black background, normal map" \
    system.prompt_processor_add.prompt="$prompt, black background, depth map" \
    system.prompt_processor.human_part_prompt=false \
    system.geometry.shape_init="mesh:./load/shapes/full_body.obj"

# Stage2: coarse texture generation
geometry_convert_from="$exp_root_dir/$exp_name/$tag$timestamp/ckpts/last.ckpt"
exp_name="stage2-coarse-texture"
root_path="./outputs/$exp_name"
python launch.py \
    --config configs/humannorm-texture-coarse.yaml \
    --train \
    timestamp=$timestamp \
    tag=$tag \
    name=$exp_name \
    exp_root_dir=$exp_root_dir \
    system.geometry_convert_from=$geometry_convert_from \
    data.sampling_type="full_body" \
    data.test_save_path=$test_save_path \
    system.prompt_processor.prompt="$prompt" \
    system.prompt_processor.human_part_prompt=false

# Stage3: fine texture generation
ckpt_name="last.ckpt"
exp_name="stage3-fine-texture"
python launch.py \
    --config configs/humannorm-texture-fine.yaml \
    --train \
    system.geometry_convert_from=$geometry_convert_from \
    data.dataroot=$test_save_path \
    timestamp=$timestamp \
    tag=$tag \
    name=$exp_name \
    exp_root_dir=$exp_root_dir \
    resume="$root_path/$tag$timestamp/ckpts/$ckpt_name" \
    system.prompt_processor.prompt="$prompt" \
    system.prompt_processor.human_part_prompt=false

- Release the reorganized code.

- Improve the quality of texture generation.

- Release the finetuning code.

If you find our work useful in your research, please cite:

@article{huang2023humannorm,
  title={Humannorm: Learning normal diffusion model for high-quality and realistic 3d human generation},
  author={Huang, Xin and Shao, Ruizhi and Zhang, Qi and Zhang, Hongwen and Feng, Ying and Liu, Yebin and Wang, Qing},
  journal={arXiv preprint arXiv:2310.01406},
  year={2023}
}

Our project benefits from the amazing open-source projects:

We are grateful for their contribution.