Encrypt/decrypt anything in the browser using streams on background threads.

Quickly and efficiently decrypt remote resources in the browser. Display the files in the DOM, or download them with conflux.

| | .decrypt | .encrypt | .saveZip |
|---|---|---|---|
| Chrome | ✅ | ✅ | ✅ |
| Edge >18 | ✅ | ✅ | ✅ |
| Safari ≥14.1 | ✅ | ✅ | ✅ |
| Firefox ≥102 | ✅ | ✅ | ✅ |
| Safari <14.1 | 🐢 | 🐢 | 🟡 |
| Firefox <102 | 🐢 | 🐢 | 🟡 |
| Edge 18 | ❌ | ❌ | ❌ |

✅ = Full support with workers

🐢 = Uses main thread (lacks native WritableStream support)

🟡 = 32 MiB limit

❌ = No support
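
The 32 MiB limit matters when deciding up front whether a batch of files can be saved on a size-limited browser. The following is a minimal sketch, assuming each descriptor carries the optional `size` field (in bytes) used by the resource descriptors in this document. The helper name `fitsSizeLimit` is hypothetical and not part of Penumbra, and this document does not say whether the limit applies per file or to the whole download, so the sketch assumes the total.

```javascript
// Hypothetical helper, not part of the Penumbra API.
const SIZE_LIMIT_BYTES = 32 * 1024 * 1024; // 32 MiB, from the legend above

function fitsSizeLimit(resources, limit = SIZE_LIMIT_BYTES) {
  let total = 0;
  for (const { size } of resources) {
    // An unknown size makes the answer undecidable, so report that explicitly.
    if (typeof size !== 'number') return null;
    total += size;
  }
  return total <= limit;
}
```

Returning `null` for unknown sizes keeps "too large" and "cannot tell" apart, so the caller can decide how cautious to be.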

yarn add @transcend-io/penumbra

# or

npm install --save @transcend-io/penumbra

import { penumbra } from '@transcend-io/penumbra';

penumbra.get(...files).then(penumbra.save);

<script src="lib/main.penumbra.js"></script>

<script>

penumbra

.get(...files)

.then(penumbra.getTextOrURI)

.then(displayInDOM);

</script>

Check out the asynchronous loading example later in this document.

penumbra.get() uses RemoteResource descriptors to specify where to request resources and their various decryption parameters.
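
As a rough illustration, descriptors for several files can be built programmatically instead of written out by hand. The sketch below uses only fields that appear in this document (`url`, `mimetype`, `filePrefix`, `decryptionOptions` with `key`, `iv`, `authTag`). The helper `toRemoteResources`, its input shape, and the assumption that all files share one key are illustrative only.

```javascript
// Hypothetical helper: build descriptors for files that share one key.
function toRemoteResources(baseUrl, entries, key) {
  return entries.map(({ file, mimetype, iv, authTag }) => ({
    url: new URL(file, baseUrl).href,
    mimetype,
    // Strip ".enc" and then the final extension: "NYT.txt.enc" -> "NYT"
    filePrefix: file.replace(/\.enc$/, '').replace(/\.[^.]+$/, ''),
    // Only entries with an iv and authTag get decryption parameters.
    ...(iv && authTag ? { decryptionOptions: { key, iv, authTag } } : {}),
  }));
}
```

The resulting array could then be spread into `penumbra.get(...resources)`.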

/**

* A file to download from a remote resource, that is optionally encrypted

*/

type RemoteResource = {

/** The URL to fetch the encrypted or unencrypted file from */

url: string;

/** The mimetype of the resulting file */

mimetype?: string;

/** The name of the underlying file without the extension */

filePrefix?: string;

/** If the file is encrypted, these are the required params */

decryptionOptions?: PenumbraDecryptionInfo;

/** Relative file path (needed for zipping) */

path?: string;

/** Fetch options */

requestInit?: RequestInit;

/** Last modified date */

lastModified?: Date;

/** Expected file size */

size?: number;

};

Fetch and decrypt remote files.

penumbra.get(...resources: RemoteResource[]): Promise<PenumbraFile[]>

Encrypt files.

penumbra.encrypt(options: PenumbraEncryptionOptions, ...files: PenumbraFile[]): Promise<PenumbraEncryptedFile[]>

/**

* penumbra.encrypt() encryption options config (buffers or base64-encoded strings)

*/

type PenumbraEncryptionOptions = {

/** Encryption key */

key: string | Buffer;

};

Encrypt an empty stream:

size = 4096 * 128;

addEventListener('penumbra-progress', (e) => console.log(e.type, e.detail));

addEventListener('penumbra-complete', (e) => console.log(e.type, e.detail));

file = penumbra.encrypt(null, {

stream: new Response(new Uint8Array(size)).body,

size,

});

data = [];

file.then(async ([encrypted]) => {

console.log('encryption complete');

data.push(new Uint8Array(await new Response(encrypted.stream).arrayBuffer()));

});

Encrypt and decrypt text:

const te = new self.TextEncoder();

const td = new self.TextDecoder();

const input = '[test string]';

const buffer = te.encode(input);

const { byteLength: size } = buffer;

const stream = new Response(buffer).body;

const options = null;

const file = {

stream,

size,

};

const [encrypted] = await penumbra.encrypt(options, file);

const decryptionInfo = await penumbra.getDecryptionInfo(encrypted);

const [decrypted] = await penumbra.decrypt(decryptionInfo, encrypted);

const decryptedData = await new Response(decrypted.stream).arrayBuffer();

const decryptedText = td.decode(decryptedData);

console.log('decrypted text:', decryptedText);

Get decryption info for a file, including the iv, authTag, and key. This may only be called on files that have finished being encrypted.
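
To get a feel for these three parameters, the sketch below decodes the base64 values used in this document's example descriptors and reports their byte lengths. The helper `base64ByteLength` is hypothetical. The resulting lengths (a 256-bit key, a 96-bit iv, a 128-bit authTag) are typical of AES-GCM, though this document does not name the cipher.

```javascript
// Count the bytes encoded by a base64 string (works in browsers and Node).
function base64ByteLength(b64) {
  return Uint8Array.from(atob(b64), (c) => c.charCodeAt(0)).length;
}

// Values copied from the tortoise.jpg.enc example in this document.
const exampleInfo = {
  key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',
  iv: '6lNU+2vxJw6SFgse',
  authTag: 'ELry8dZ3djg8BRB+7TyXZA==',
};

const lengths = Object.fromEntries(
  Object.entries(exampleInfo).map(([name, value]) => [name, base64ByteLength(value)]),
);
console.log(lengths); // { key: 32, iv: 12, authTag: 16 }
```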

penumbra.getDecryptionInfo(file: PenumbraFile): Promise<PenumbraDecryptionInfo>

Decrypt files.

penumbra.decrypt(options: PenumbraDecryptionInfo, ...files: PenumbraEncryptedFile[]): Promise<PenumbraFile[]>

const te = new TextEncoder();

const td = new TextDecoder();

const data = te.encode('test');

const { byteLength: size } = data;

const [encrypted] = await penumbra.encrypt(null, {

stream: data,

size,

});

const options = await penumbra.getDecryptionInfo(encrypted);

const [decrypted] = await penumbra.decrypt(options, encrypted);

const decryptedData = await new Response(decrypted.stream).arrayBuffer();

// Logs whether the round trip preserved the text
console.log(td.decode(decryptedData) === 'test');

Save files retrieved by Penumbra. Downloads a .zip if there are multiple files. Returns an AbortController that can be used to cancel an in-progress save stream.

penumbra.save(data: PenumbraFile[], fileName?: string): AbortController

Load files retrieved by Penumbra into memory as a Blob.

penumbra.getBlob(data: PenumbraFile[] | PenumbraFile | ReadableStream, type?: string): Promise<Blob>

Get file text (if content is text) or URI (if content is not viewable).
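
A consumer of these results will usually branch on whether it received text or a URI. The sketch below is one possible way to do that, assuming result objects with `type`, `data`, and `mimetype` fields as in the `getTextOrURI` signature. The helper `toDisplayDescriptor` and the descriptor shape it returns are hypothetical, and it returns a plain object rather than touching the DOM so it can run anywhere.

```javascript
// Hypothetical helper: describe how one getTextOrURI() result could be shown.
function toDisplayDescriptor({ type, data, mimetype }) {
  // Text content can be written straight into a text element.
  if (type === 'text') return { tag: 'p', prop: 'innerText', value: data };
  // Non-text content arrives as a URI; images can be displayed directly.
  if (mimetype.startsWith('image/')) return { tag: 'img', prop: 'src', value: data };
  // Anything else is offered as a link.
  return { tag: 'a', prop: 'href', value: data };
}
```

A caller could then create the element named by `tag` and assign `value` to the property named by `prop`.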

penumbra.getTextOrURI(data: PenumbraFile[]): Promise<{ type: 'text'|'uri', data: string, mimetype: string }[]>

Save a zip containing files retrieved by Penumbra.

type ZipOptions = {

/** Filename to save to (.zip is optional) */

name?: string;

/** Total size of archive in bytes (if known ahead of time, for 'store' compression level) */

size?: number;

/** PenumbraFile[] to add to zip archive */

files?: PenumbraFile[];

/** Abort controller for cancelling zip generation and saving */

controller?: AbortController;

/** Allow & auto-rename duplicate files sent to writer. Defaults to on */

allowDuplicates?: boolean;

/** Zip archive compression level */

compressionLevel?: number;

/** Store a copy of the resultant zip file in-memory for inspection & testing */

saveBuffer?: boolean;

/**

* Auto-registered `'progress'` event listener. This is equivalent to calling

* `PenumbraZipWriter.addEventListener('progress', onProgress)`

*/

onProgress?(event: CustomEvent<ZipProgressDetails>): void;

/**

* Auto-registered `'complete'` event listener. This is equivalent to calling

* `PenumbraZipWriter.addEventListener('complete', onComplete)`

*/

onComplete?(event: CustomEvent<{}>): void;

};

penumbra.saveZip(options?: ZipOptions): PenumbraZipWriter;

interface PenumbraZipWriter extends EventTarget {

/**

* Add decrypted PenumbraFiles to zip

*

* @param files - Decrypted PenumbraFile[] to add to zip

* @returns Total observed size of write call in bytes

*/

write(...files: PenumbraFile[]): Promise<number>;

/**

* Enqueue closing of the Penumbra zip writer (after pending writes finish)

*

* @returns Total observed zip size in bytes after close completes

*/

close(): Promise<number>;

/** Cancel Penumbra zip writer */

abort(): void;

/** Get buffered output (requires saveBuffer mode) */

getBuffer(): Promise<ArrayBuffer>;

/** Get all written & pending file paths */

getFiles(): string[];

/**

* Get observed zip size after all pending writes are resolved

*/

getSize(): Promise<number>;

}
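
The writer's `'progress'` events carry a details object with `percent`, `written`, and `size`, where `null` marks an unknown value. The sketch below shows one way a listener might turn those details into a status string. The function `formatZipProgress` is hypothetical and only assumes the three fields named in this section.

```javascript
// Hypothetical formatter for a ZipProgressDetails-shaped object.
function formatZipProgress({ percent, written, size }) {
  // `null` means the total is not known yet, so only report what was written.
  if (percent === null || size === null) {
    return `${written} written (total unknown)`;
  }
  return `${percent}% (${written} of ${size})`;
}
```

It could be wired up through the `onProgress` option, for example `penumbra.saveZip({ onProgress: (event) => console.log(formatZipProgress(event.detail)) })`.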

type ZipProgressDetails = {

/** Percentage completed. `null` indicates indetermination */

percent: number | null;

/** The number of bytes or items written so far */

written: number;

/** The total number of bytes or items to write. `null` indicates indetermination */

size: number | null;

};

Example:

const files = [

{

url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/tortoise.jpg.enc',

name: 'tortoise.jpg',

mimetype: 'image/jpeg',

decryptionOptions: {

key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',

iv: '6lNU+2vxJw6SFgse',

authTag: 'ELry8dZ3djg8BRB+7TyXZA==',

},

},

];

const writer = penumbra.saveZip();

await writer.write(...(await penumbra.get(...files)));

await writer.close();

Configure the location of Penumbra's worker threads.

penumbra.setWorkerLocation(location: WorkerLocationOptions | string): Promise<void>

const decryptedText = await penumbra

.get({

url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/NYT.txt.enc',

mimetype: 'text/plain',

filePrefix: 'NYT',

decryptionOptions: {

key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',

iv: '6lNU+2vxJw6SFgse',

authTag: 'gadZhS1QozjEmfmHLblzbg==',

},

})

.then((files) => penumbra.getTextOrURI(files))

.then(([{ data }]) => {

document.getElementById('my-paragraph').innerText = data;

});

const imageSrc = await penumbra

.get({

url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/tortoise.jpg.enc',

filePrefix: 'tortoise',

mimetype: 'image/jpeg',

decryptionOptions: {

key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',

iv: '6lNU+2vxJw6SFgse',

authTag: 'ELry8dZ3djg8BRB+7TyXZA==',

},

})

.then((files) => penumbra.getTextOrURI(files))

.then(([{ data }]) => {

document.getElementById('my-img').src = data;

});

penumbra

.get({

url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/africa.topo.json.enc',

filePrefix: 'africa',

mimetype: 'image/jpeg',

decryptionOptions: {

key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',

iv: '6lNU+2vxJw6SFgse',

authTag: 'ELry8dZ3djg8BRB+7TyXZA==',

},

})

.then((file) => penumbra.save(file));

// saves africa.jpg file to disk

penumbra

  // penumbra.get() takes resources as separate arguments, per its signature
  .get(
    {
      url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/africa.topo.json.enc',
      filePrefix: 'africa',
      mimetype: 'image/jpeg',
      decryptionOptions: {
        key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',
        iv: '6lNU+2vxJw6SFgse',
        authTag: 'ELry8dZ3djg8BRB+7TyXZA==',
      },
    },
    {
      url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/NYT.txt.enc',
      mimetype: 'text/plain',
      filePrefix: 'NYT',
      decryptionOptions: {
        key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',
        iv: '6lNU+2vxJw6SFgse',
        authTag: 'gadZhS1QozjEmfmHLblzbg==',
      },
    },
    {
      url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/tortoise.jpg', // this is not encrypted
      filePrefix: 'tortoise',
      mimetype: 'image/jpeg',
    },
  )
  // penumbra.save() takes the files and an optional file name
  .then((files) => penumbra.save(files, 'example'));

// saves example.zip file to disk

// Resources to load

const resources = [

{

url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/NYT.txt.enc',

filePrefix: 'NYT',

mimetype: 'text/plain',

decryptionOptions: {

key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',

iv: '6lNU+2vxJw6SFgse',

authTag: 'gadZhS1QozjEmfmHLblzbg==',

},

},

{

url: 'https://s3-us-west-2.amazonaws.com/bencmbrook/tortoise.jpg.enc',

filePrefix: 'tortoise',

mimetype: 'image/jpeg',

decryptionOptions: {

key: 'vScyqmJKqGl73mJkuwm/zPBQk0wct9eQ5wPE8laGcWM=',

iv: '6lNU+2vxJw6SFgse',

authTag: 'ELry8dZ3djg8BRB+7TyXZA==',

},

},

];

// preconnect to the origins

penumbra.preconnect(...resources);

// or preload all of the URLS

penumbra.preload(...resources);

You can listen to encrypt/decrypt job completion events through the penumbra-complete event.

window.addEventListener(

'penumbra-complete',

({ detail: { id, decryptionInfo } }) => {

console.log(

`finished encryption job #${id}. decryption options:`,

decryptionInfo,

);

},

);

You can listen to download and encrypt/decrypt job progress events through the penumbra-progress event.

window.addEventListener(

'penumbra-progress',

({ detail: { percent, id, type } }) => {

console.log(`${type} ${percent}% done for ${id}`);

// example output: decrypt 33% done for https://example.com/encrypted-data

},

);

Note: this feature requires the Content-Length response header to be exposed. To expose it, add Access-Control-Expose-Headers: Content-Length to the response headers (read more here and here).
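
The header matters because a percentage needs a denominator. The sketch below is a hypothetical progress calculation, not Penumbra's actual implementation. It assumes the total comes from `response.headers.get('Content-Length')`, which returns `null` on a cross-origin response when the header is not exposed.

```javascript
// Hypothetical progress calculation; not Penumbra's actual implementation.
function percentDone(bytesReceived, contentLengthHeader) {
  const total = Number.parseInt(contentLengthHeader ?? '', 10);
  // Without a readable Content-Length there is no denominator.
  if (!Number.isFinite(total) || total <= 0) return null;
  return Math.min(100, Math.round((bytesReceived / total) * 100));
}
```

With an exposed header, `percentDone(33, '100')` yields `33`. With a hidden one, `percentDone(33, null)` yields `null`, and no meaningful percentage can be reported.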

On Amazon S3, this means adding the following line to your bucket's CORS configuration, inside the <CORSRule> block:

<ExposeHeader>Content-Length</ExposeHeader>

// Set only the base URL by passing a string

penumbra.setWorkerLocation('/penumbra-workers/');

// Set all worker URLs by passing a WorkerLocation object

penumbra.setWorkerLocation({

base: '/penumbra-workers/',

penumbra: 'worker.penumbra.js',

StreamSaver: 'StreamSaver.js',

});

// Set a single worker's location

penumbra.setWorkerLocation({ penumbra: 'worker.penumbra.js' });

<script src="lib/main.penumbra.js" async defer></script>

const onReady = async ({ detail: { penumbra } } = { detail: self }) => {

await penumbra.get(...files).then(penumbra.save);

};

if (!self.penumbra) {

self.addEventListener('penumbra-ready', onReady);

} else {

onReady();

}

You can check whether Penumbra is supported by the current browser by comparing penumbra.supported(): PenumbraSupportLevel with penumbra.supported.levels.

if (penumbra.supported() > penumbra.supported.levels.possible) {

// penumbra is partially or fully supported

}
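
Beyond a yes/no check, the support level can drive which code path an application takes. The sketch below uses a local copy of the level values listed in this section. The helper `chooseStrategy` and its strategy names are hypothetical. Note that `none` (`-0`) and `possible` (`0`) compare as equal in JavaScript, so a numeric comparison cannot tell them apart.

```javascript
// Local copy of the support levels listed in this section (values assumed to match).
const levels = { none: -0, possible: 0, size_limited: 1, full: 2 };

// Hypothetical helper that picks a strategy from a support level.
function chooseStrategy(level) {
  if (level >= levels.full) return 'stream-any-size';
  if (level >= levels.size_limited) return 'small-files-only';
  // `none` and `possible` both land here because -0 === 0.
  return 'fallback';
}
```

In an application this would be called as `chooseStrategy(penumbra.supported())`.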

/** penumbra.supported.levels - Penumbra user agent support levels */

enum PenumbraSupportLevel {

/** Old browser where Penumbra does not work at all */

none = -0,

/** Modern browser where Penumbra is not yet supported */

possible = 0,

/** Modern browser where file size limit is low */

size_limited = 1,

/** Modern browser with full support */

full = 2,

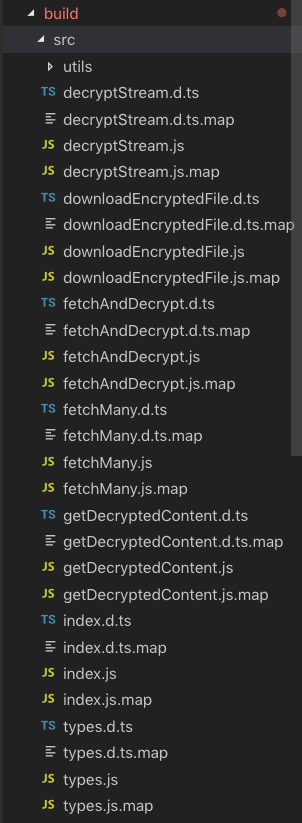

}

Penumbra is compiled and bundled on npm. The recommended approach is to copy the Penumbra build files into your webpack build.

We do this with copy-webpack-plugin, for example:

const fs = require('fs');

const CopyPlugin = require('copy-webpack-plugin');

const path = require('path');

const PENUMBRA_DIRECTORY = path.join(

__dirname,

'node_modules',

'@transcend-io/penumbra',

'dist',

);

// Assumption: `outputPath` is not defined in the original snippet; point it at your own build output directory

const outputPath = path.join(__dirname, 'build');

module.exports = {

plugins: [

new CopyPlugin({

patterns: fs.readdirSync(PENUMBRA_DIRECTORY)

.filter((fil) => fil.indexOf('.') > 0)

.map((fil) => ({

from: `${PENUMBRA_DIRECTORY}/${fil}`,

to: `${outputPath}/${fil}`,

})),

}),

],

};

# setup

yarn

yarn build

# run tests

yarn test:local

# run tests in the browser console

yarn test:interactive