Repository

https://github.com/to-the-sun/amanuensis

Details

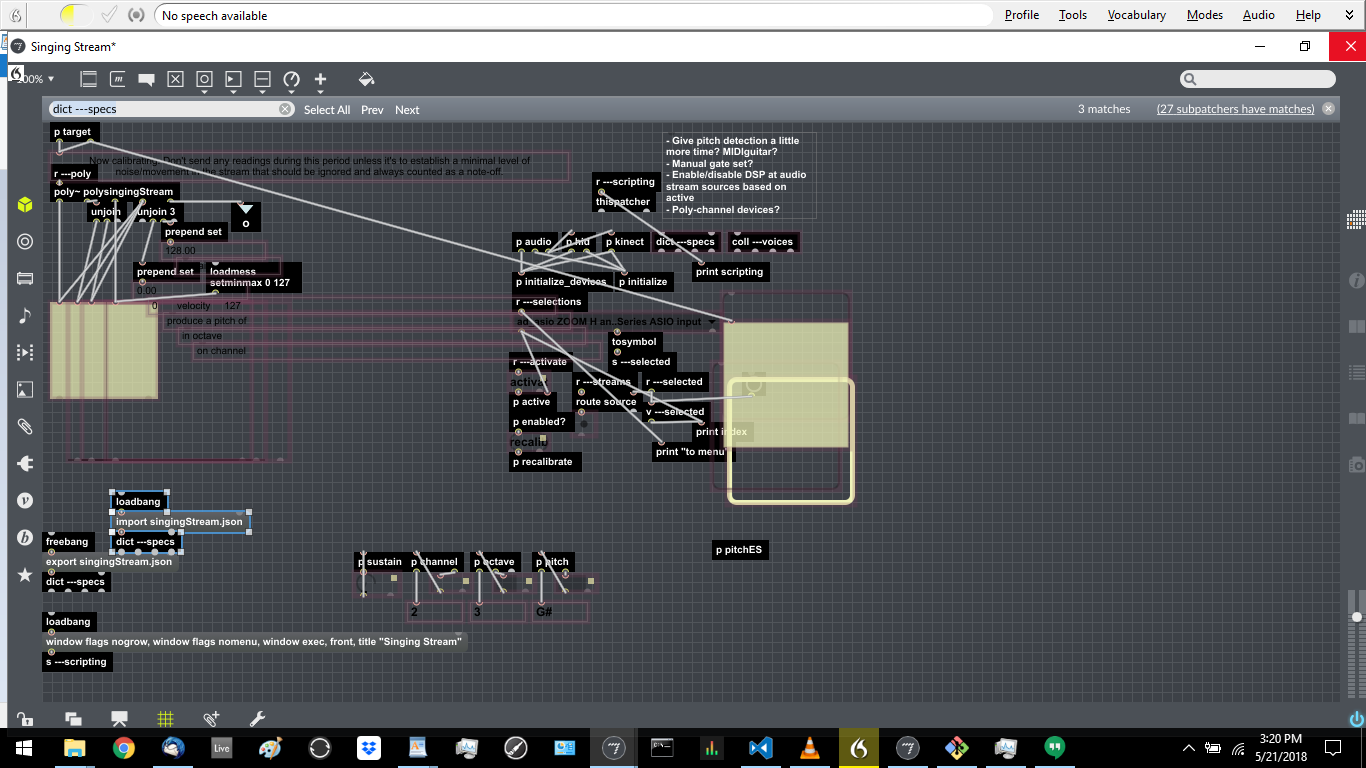

Quite a while back, an issue arose: the rhythmic analysis was too computationally expensive for the program to handle at the same time as all the necessary DSP, and every note played caused lag and choppiness. My solution was to move the analysis to an external Python script and communicate back and forth with it via UDP. This successfully offloaded the processing burden but created a new issue: the latency of that round trip. Since then, calculating and compensating for this delay has led to all sorts of bugs and, admittedly, some sloppy fixes.

It would therefore be an immense relief to find a way around this latency, and it should be possible. The assumption has always been that the analysis must finish before each note triggers the decision of whether to start or stop recording. But if the analysis instead runs at its own pace and simply updates a table with its results, each note can look up its recording command in that table immediately, without waiting.

This means each decision would be based on every prior note, but not on the note itself. That shouldn't actually be a problem; if anything, when trying to determine whether a beat falls into a rhythm, one would expect that beat not to be part of that rhythm yet.
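A minimal Python sketch of this ordering follows, for illustration only. `RecordingTable`, `play_note`, and keying the table by an integer interval index are hypothetical names and assumptions, not code from the repository; in the real program the table would live in Max. The point is just the sequence: a note reads its command from the table first, and the analysis that includes that note updates the table afterwards.

```python
from dataclasses import dataclass, field


@dataclass
class RecordingTable:
    """Hypothetical stand-in for the lookup table kept by the main program.

    Maps an interval index to a recording command: 1 = likely (record),
    0 = not likely. Only analysis results ever write to it.
    """
    commands: dict[int, int] = field(default_factory=dict)

    def lookup(self, interval: int) -> int:
        # Immediate read; intervals with no analysis result yet default to 0.
        return self.commands.get(interval, 0)

    def apply_update(self, changes: dict[int, int]) -> None:
        # Called whenever an analysis result arrives, at its own pace.
        self.commands.update(changes)


def play_note(table: RecordingTable, interval: int, pending: list[int]) -> int:
    """Decide from the current table, then queue the note for later analysis."""
    command = table.lookup(interval)  # reflects every prior note, not this one
    pending.append(interval)          # this note only affects future lookups
    return command
```

With an empty table, the first note at an interval of 500 gets a 0. Once an analysis result marks 500 as likely via `apply_update({500: 1})`, the next note at that interval gets a 1 without waiting on any round trip.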

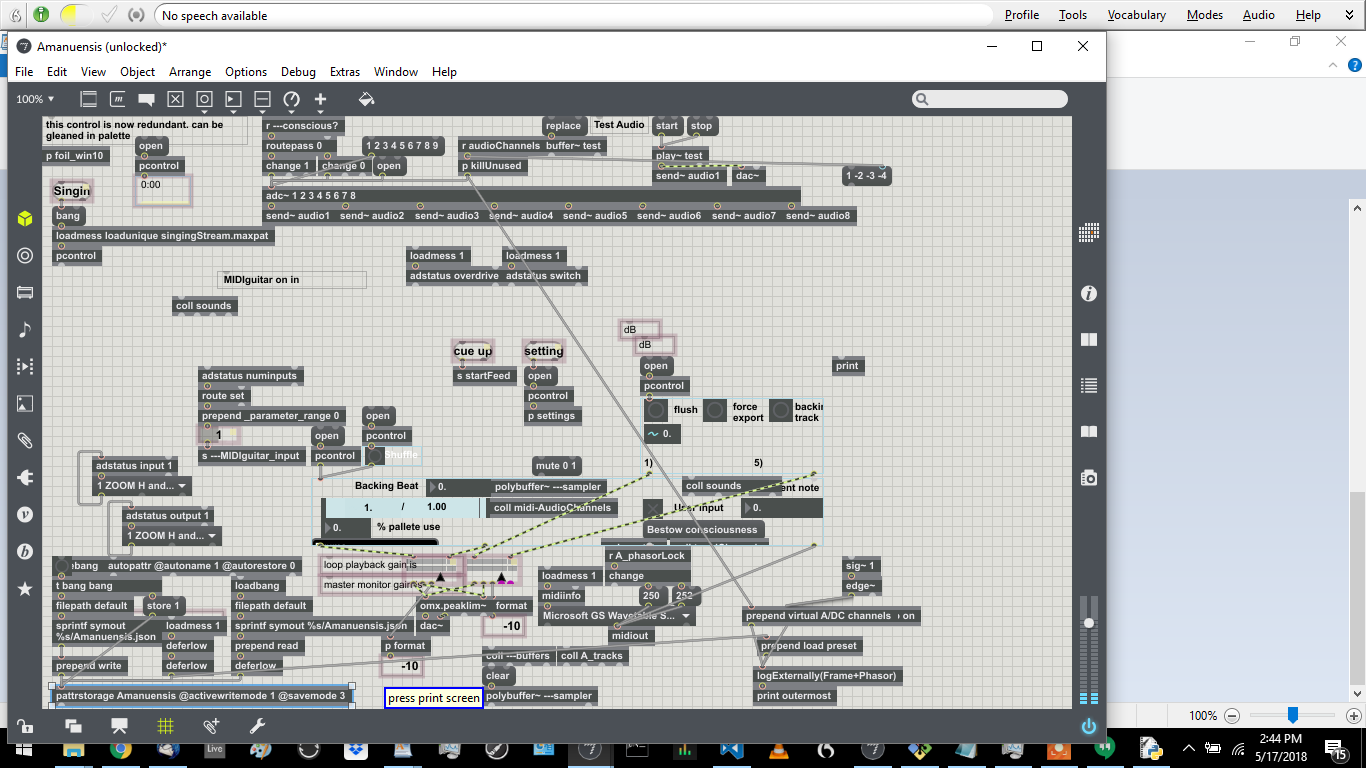

Components

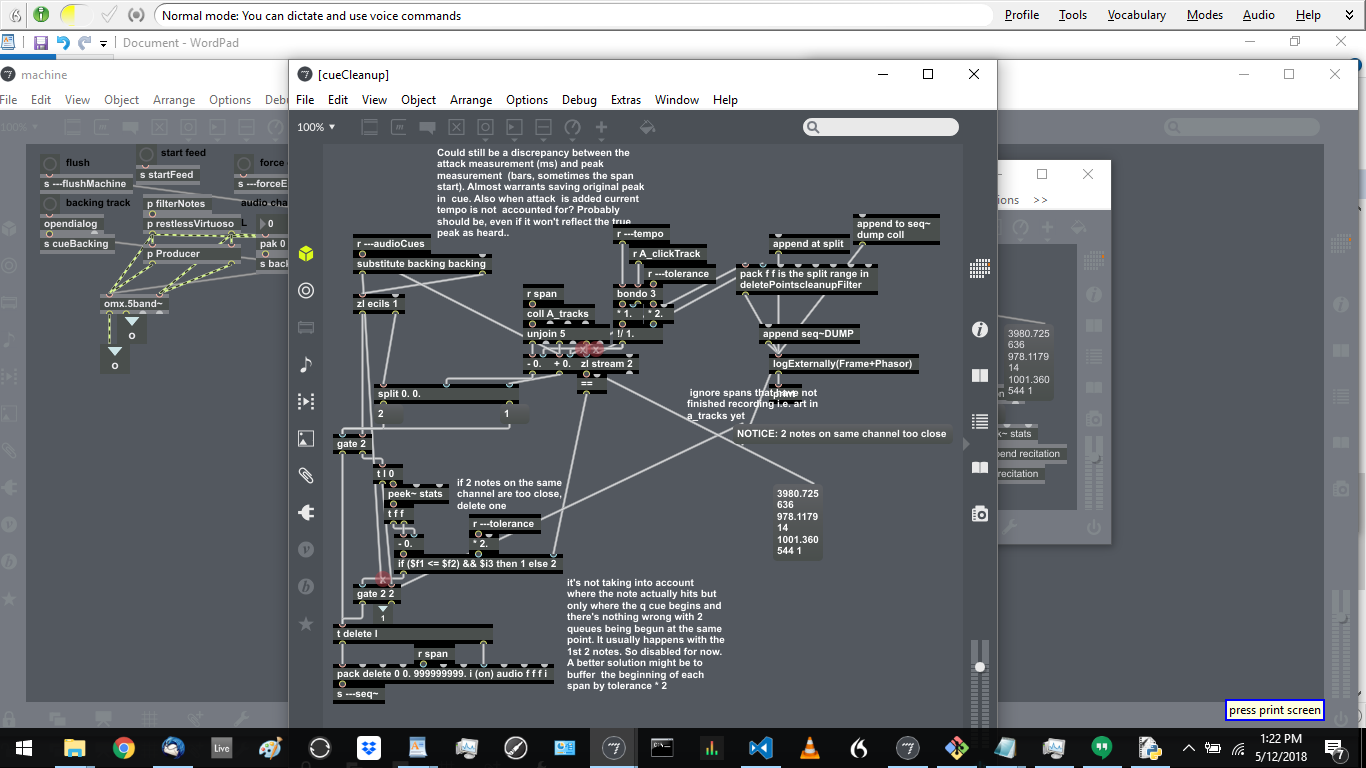

If this strategy had been implemented sooner, much less of the program would be affected; as it is, changes may need to be made across a wide range of components. The primary one is the Python script, consciousness.py. Rather than calculating a single likelihood value (a one or a zero) for the incoming interval, it will need to determine, for every value, whether that value would return a likelihood of one, so that the results can fill a table back in the main program. This will produce less traffic than it might first seem, since only a few dozen values are likely to change with each analysis.
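Roughly, the change in consciousness.py is from evaluating one interval to evaluating all of them and then diffing against the previous result. The sketch below assumes the aggregate is a list of counts indexed by interval and that the existing plateau test could be wrapped as a function `is_likely(aggregate, i)`. `full_table`, `changed_entries`, and the `half_peak` criterion are hypothetical helpers for illustration, not functions that exist in the script.

```python
from typing import Callable


def full_table(aggregate: list[int],
               is_likely: Callable[[list[int], int], bool]) -> list[int]:
    """Evaluate the 0/1 likelihood for every interval index, not just the incoming one."""
    return [1 if is_likely(aggregate, i) else 0 for i in range(len(aggregate))]


def changed_entries(old: list[int], new: list[int]) -> list[tuple[int, int]]:
    """Return only the (index, value) pairs that differ, i.e. what would be sent back."""
    if len(old) != len(new):
        raise ValueError("tables must cover the same interval range")
    return [(i, n) for i, (o, n) in enumerate(zip(old, new)) if o != n]


def half_peak(aggregate: list[int], i: int) -> bool:
    """Stand-in criterion for illustration only (NOT the script's real plateau test)."""
    return aggregate[i] > 0 and 2 * aggregate[i] >= max(aggregate)


before = full_table([0, 2, 4, 2, 0], half_peak)  # [0, 1, 1, 1, 0]
after = full_table([0, 2, 5, 2, 0], half_peak)   # [0, 0, 1, 0, 0]
print(changed_entries(before, after))            # [(1, 0), (3, 0)]
```

Even though every index is re-evaluated, only the two entries whose value flipped would need to travel back over UDP.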

Basically, instead of simply deciding whether the incoming interval sits at the top of a plateau, the script will need to analyze each aggregate that gets incremented to see which portions of its range are or are not a plateau. Only the values that change need to be sent back via UDP. Certain other variables, such as lock and tempo, will still need to be sent in the usual manner.
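The sketch below illustrates both halves of this: re-evaluating only the part of the range an increment can affect, and sending just the changed entries. It rests on several assumptions: a hypothetical plateau criterion (a bin is on a plateau if it is non-zero and no bin within `RADIUS` of it holds a larger count), a hypothetical `/table index value` message, and OSC as the wire format, since Max's [udpreceive] object works with OSC-formatted messages. The actual criterion and message layout used by consciousness.py may well differ. Under this criterion, an increment at bin `k` can only change the status of bins within `RADIUS` of `k`, so at most `2 * RADIUS + 1` entries need rechecking per note.

```python
import socket
import struct

RADIUS = 3  # hypothetical: how far a bin looks left/right when judging a plateau


def on_plateau(aggregate: list[int], i: int, radius: int = RADIUS) -> int:
    """Hypothetical criterion: 1 if bin i is non-zero and no bin within
    `radius` of it holds a larger count, else 0."""
    lo, hi = max(0, i - radius), min(len(aggregate), i + radius + 1)
    return 1 if aggregate[i] > 0 and aggregate[i] == max(aggregate[lo:hi]) else 0


def increment_and_diff(aggregate: list[int], table: list[int], k: int,
                       radius: int = RADIUS) -> list[tuple[int, int]]:
    """Increment bin k, re-evaluate only the bins whose window contains k,
    update `table` in place and return the (index, value) pairs that changed."""
    aggregate[k] += 1
    changes = []
    for i in range(max(0, k - radius), min(len(aggregate), k + radius + 1)):
        value = on_plateau(aggregate, i, radius)
        if value != table[i]:
            table[i] = value
            changes.append((i, value))
    return changes


def _osc_string(s: str) -> bytes:
    # OSC strings are null-terminated and padded to a multiple of 4 bytes.
    raw = s.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, *ints: int) -> bytes:
    """Encode a minimal OSC 1.0 message carrying big-endian int32 arguments."""
    tags = "," + "i" * len(ints)
    args = b"".join(struct.pack(">i", n) for n in ints)
    return _osc_string(address) + _osc_string(tags) + args


def send_changes(sock: socket.socket, addr: tuple[str, int],
                 changes: list[tuple[int, int]], address: str = "/table") -> None:
    """Send one hypothetical '/table index value' message per changed entry."""
    for index, value in changes:
        sock.sendto(osc_message(address, index, value), addr)
```

Lock and tempo would keep travelling in whatever messages the script already sends; only the table updates go through something like `send_changes`.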

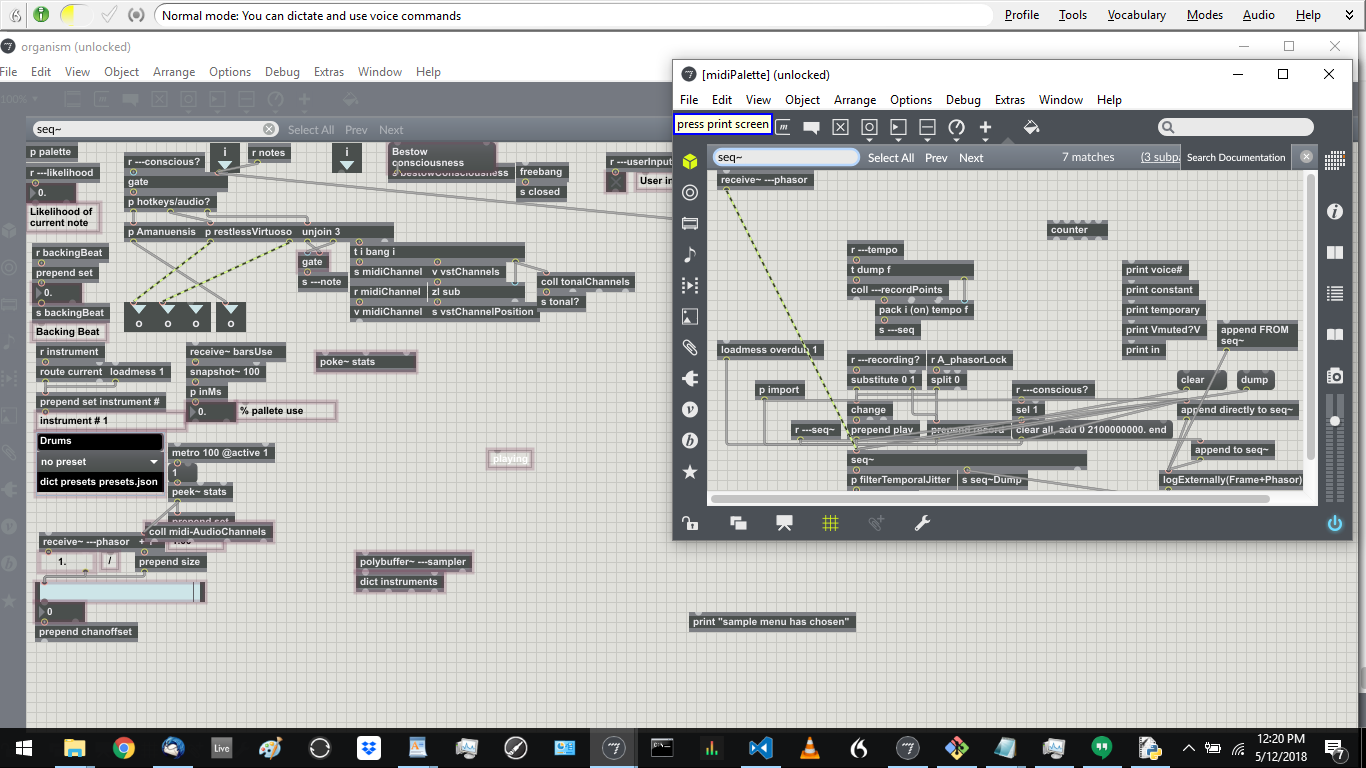

Additionally, the interval value will need to be calculated in the main program rather than in the Python script, so that it can be used for the table lookup. This change, along with any other minor modifications, would be made in the [p collectPre-Cog] subpatcher of organism.maxpat, where messages are assembled before being sent off via UDP.
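The calculation itself is small. The following Python function only illustrates the arithmetic that would move into [p collectPre-Cog]; in the patch it would be built from Max objects. It assumes onsets are timestamps in a single unit (for instance the frame values from the stats buffer) and that the table is indexed by the interval quantized to a hypothetical `resolution`. `interval_index` is an illustrative name, not part of the repository.

```python
from typing import Optional


def interval_index(onset: float, previous_onset: Optional[float],
                   resolution: float = 1.0) -> Optional[int]:
    """Time since the previous note, quantized to a table index.

    Returns None for the very first note, which has no interval yet.
    """
    if previous_onset is None:
        return None
    interval = onset - previous_onset
    if interval < 0:
        raise ValueError("onsets must arrive in chronological order")
    # Round to the nearest table slot so the lookup key is an integer.
    return round(interval / resolution)
```

For example, onsets at 1000 and 1507 with a resolution of 10 land in table slot 51.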

Initially, and until these changes test out as functional, most of the variable points back in the main program can simply be wired together directly. For example, [v pre-Gen~Moment] can be set with the frame value (from the 0th index of the stats buffer) at the same time the interval is sent off to the Python script for analysis. The Python script's return messages arrive in [p receiveStats] (of organism.maxpat), so that is the primary place to look when making these modifications.

Deadline

There is no deadline, but we can discuss how long it might take to execute.

Communication

Reply to this post or contact me through GitHub for more details.

Proof of Work Done

https://github.com/to-the-sun