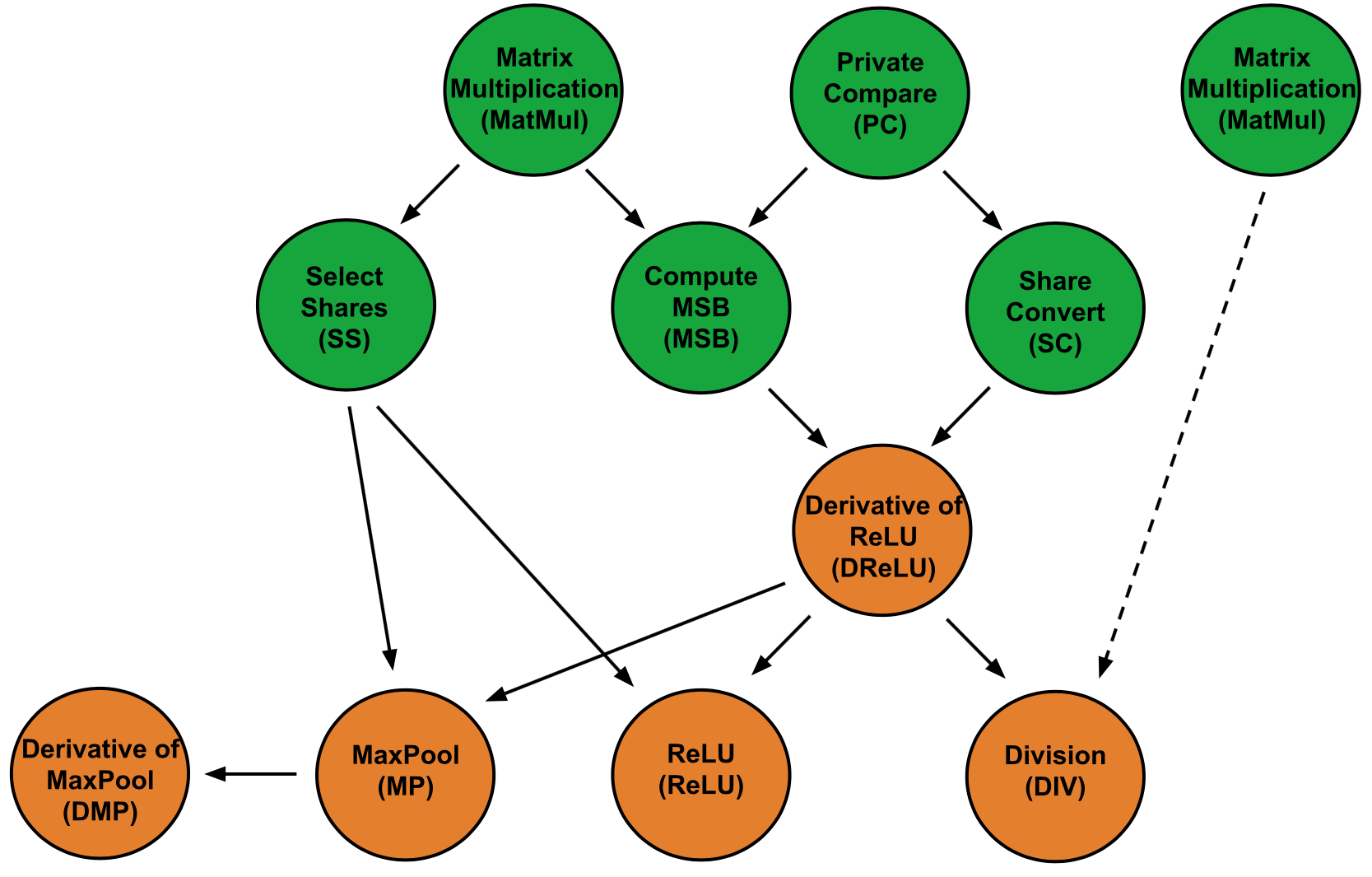

Secure multi-party computation (SMC/MPC) provides a cryptographically secure framework for computations in which data privacy is a requirement. MPC protocols enable computation over data shared among the parties while providing strong privacy guarantees: the parties learn only the output of the computation and nothing about the individual inputs. Here we develop a framework for efficient 3-party protocols tailored to state-of-the-art neural networks. SecureNN builds on novel modular arithmetic to implement exact non-linear functions while avoiding interconversion protocols and general-purpose number-theoretic libraries.
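As a rough illustration of how parties can compute on data without seeing it, here is a minimal sketch of additive secret sharing, a standard MPC building block. It is not code from this repository: the names `Share`, `share`, `reconstruct` and `add_shares` are hypothetical, the choice of the ring Z_{2^64} is an assumption made for the example, and `std::mt19937_64` stands in for the cryptographic randomness a real protocol would need.

```cpp
#include <array>
#include <cstdint>
#include <random>

// Additive secret sharing over the ring Z_{2^64} (assumed for this sketch).
// Unsigned 64-bit arithmetic wraps around, so + and - are already mod 2^64.
using Share = std::uint64_t;

// Split `secret` into two shares that sum to it mod 2^64.
// NOTE: std::mt19937_64 is NOT cryptographically secure; it is used here
// only to keep the illustration short.
std::array<Share, 2> share(Share secret, std::mt19937_64& rng) {
    Share r = rng();
    return {r, secret - r};
}

// Recombine both shares to recover the secret.
Share reconstruct(const std::array<Share, 2>& s) {
    return s[0] + s[1];
}

// Adding two shared values needs no communication:
// each party adds the shares it already holds.
std::array<Share, 2> add_shares(const std::array<Share, 2>& a,
                                const std::array<Share, 2>& b) {
    return {a[0] + b[0], a[1] + b[1]};
}
```

Each share on its own is a uniformly random ring element, so a single party holding one share learns nothing about the secret. Non-linear functions are the harder part, which is where dedicated protocols come in.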

We develop and implement efficient protocols for the above set of functionalities. This work was published at the Privacy Enhancing Technologies Symposium (PETS) 2019. Paper available here. Feel free to check out the follow-up work Falcon and its improved implementation. If you want to run neural network training, strongly consider using the GPU-based codebase Piranha.

- The code should work on any Linux distribution of your choice (it has been developed and tested on Ubuntu 16.04 and 18.04).

- Required packages for SecureNN:

Install these packages with your favorite package manager, e.g., sudo apt-get install <package-name>.

* files/ - Shared keys, IP addresses and data files.

* lib_eigen/ - Eigen library for faster matrix multiplication.

* mnist/ - Parsing code for converting MNIST data into SecureNN format data.

* src/ - Source code for SecureNN.

* utils/ - Dependencies for AES randomness.

To build SecureNN, run the following commands:

git clone https://github.com/snwagh/SecureNN.git

cd SecureNN

make

SecureNN can be run either as a single party (to verify correctness) or as a 3 (or 4) party protocol. It can be run on a single machine (localhost) or over a network. The output can be written to the terminal or to a file (from Party P_0). The makefile contains a target for each of these modes. To run SecureNN, run the appropriate command after building (a few examples are given below).

make standalone

make abcTerminal

make abcFile

SecureNN currently supports three types of layers: fully connected, convolutional (without padding), and convolutional (with zero padding). The network can be specified in src/main.cpp. The core protocols from SecureNN are implemented in src/Functionalities.cpp. The code supports both training and testing.
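When specifying a network, the two convolutional variants differ in the shape of their output. The sketch below applies the standard output-size formula for one spatial dimension. The function name and its parameters are hypothetical and not taken from src/main.cpp, and the stride parameter is an assumption, since the description above does not mention strides.

```cpp
#include <cstddef>
#include <stdexcept>

// Output width (or height) of a convolution along one dimension:
//   out = (in + 2*pad - kernel) / stride + 1
// pad = 0 corresponds to "without padding"; pad > 0 to zero padding.
std::size_t conv_output_size(std::size_t in, std::size_t kernel,
                             std::size_t stride, std::size_t pad) {
    if (stride == 0 || kernel == 0 || in + 2 * pad < kernel) {
        throw std::invalid_argument("invalid convolution shape");
    }
    return (in + 2 * pad - kernel) / stride + 1;
}
```

For example, a 28x28 input with a 5x5 kernel and stride 1 gives a 24x24 output without padding, and a 28x28 output with zero padding of 2 on each side.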

A number of debugging-friendly functions are implemented in the library. For memory bugs, use valgrind (install it with sudo apt-get install valgrind), then run a single party in debug mode:

* Set makefile flags to -g -O0 (instead of -O3)

* make clean; make

* valgrind --tool=memcheck --leak-check=full --track-origins=yes --dsymutil=yes <executable-file-command>

libmiracl.a is compiled locally. If it throws errors, download the source files from https://github.com/miracl/MIRACL.git, compile miracl.a yourself, and copy it into this repo.

The matrix multiplication assembly code works only with the Intel C/C++ compiler. Use the non-assembly code from src/tools.cpp if needed (it might have correctness issues).

You can cite the paper using the following bibtex entry:

@article{wagh2019securenn,

title={{S}ecure{NN}: 3-{P}arty {S}ecure {C}omputation for {N}eural {N}etwork {T}raining},

author={Wagh, Sameer and Gupta, Divya and Chandran, Nishanth},

journal={Proceedings on Privacy Enhancing Technologies},

year={2019}

}

Report any bugs to [email protected]