An algorithm to optimize database queries that run multiple times

- 1. You make a query to the database which returns the result in 100 milliseconds

- 2. A write event occurs on the database and changes some data

- 3. To get the new version of the query's results you now have three options:

- a. Run the query over the database again which takes another 100 milliseconds

- b. Write complex code that somehow merges the incoming event with the old state

- c. Use EventReduce to calculate the new results on the CPU, without disk I/O, nearly instantly

In the browser demo you can see that for randomly generated events, about 94% of them could be optimized by EventReduce. In real-world usage, with non-random events, this can be even higher. Across the implementations for common browser databases, new query results are displayed up to 12 times faster after a write has occurred.

EventReduce uses 18 different state functions to 'describe' an event+previousResults combination. A state function is a function that returns a boolean value like isInsert(), wasResultsEmpty(), sortParamsChanged() and so on.

Also there are 16 different action functions. An action function gets the event+previousResults and modifies the results array in a given way like insertFirst(), replaceExisting(), insertAtSortPosition(), doNothing() and so on.
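To make the two kinds of functions more concrete, here is a minimal sketch of a few of them. Only the function names come from the text above; the input shape (`event`, `previousResults`, `sortComparator`), the function bodies, and the `describeState` helper are assumptions made for illustration and are not taken from the library's source.

```javascript
// Illustrative input shape (an assumption, not the library's types):
// { event: { operation, doc }, previousResults: [...], sortComparator }

// State functions: each answers one yes/no question about the input.
const stateFunctions = {
  isInsert: (input) => input.event.operation === 'INSERT',
  wasResultsEmpty: (input) => input.previousResults.length === 0,
};

// Action functions: each changes the results array in exactly one way.
const actionFunctions = {
  doNothing: () => {},
  insertFirst: (input) => {
    input.previousResults.unshift(input.event.doc);
  },
  insertAtSortPosition: (input) => {
    const results = input.previousResults;
    // Find the first existing document that must come after the new one.
    const index = results.findIndex(
      (doc) => input.sortComparator(input.event.doc, doc) < 0
    );
    if (index === -1) {
      results.push(input.event.doc);
    } else {
      results.splice(index, 0, input.event.doc);
    }
  },
};

// Hypothetical helper: evaluate every state function to "describe" the input.
function describeState(input) {
  const state = {};
  for (const [name, fn] of Object.entries(stateFunctions)) {
    state[name] = fn(input);
  }
  return state;
}

const exampleInput = {
  event: { operation: 'INSERT', doc: { id: 'c', age: 30 } },
  previousResults: [{ id: 'a', age: 20 }, { id: 'b', age: 40 }],
  sortComparator: (a, b) => a.age - b.age,
};

const exampleState = describeState(exampleInput);
actionFunctions.insertAtSortPosition(exampleInput);
const exampleIds = exampleInput.previousResults.map((doc) => doc.id);
```

Here `exampleState` describes the input as an insert into non-empty results, and the action places the new document between the two existing ones without touching the database.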

For each of the 2^18 state combinations (one per possible set of answers from the 18 state functions), we calculate which action function gives the same results that the database would return if the full query were executed again.
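The comparison against a full re-query can be sketched roughly as follows. This is a simplified illustration for a single insert event, not the project's actual generator: `runFullQuery`, `candidateActions`, `findMatchingActions`, and the `query` shape (`matches`, `compare`) are hypothetical names, and the real process covers every state combination rather than one test case.

```javascript
// Re-runs the query from scratch: filter all documents, then sort them.
function runFullQuery(docs, query) {
  return docs.filter(query.matches).sort(query.compare);
}

// Three illustrative actions, each taking (results, doc).
const candidateActions = {
  doNothing: () => {},
  insertFirst: (results, doc) => { results.unshift(doc); },
  insertLast: (results, doc) => { results.push(doc); },
};

// Returns the names of all actions that reproduce the full-query result.
function findMatchingActions({ docsBefore, insertedDoc, query }) {
  const previousResults = runFullQuery(docsBefore, query);
  const expected = JSON.stringify(
    runFullQuery([...docsBefore, insertedDoc], query)
  );
  return Object.keys(candidateActions).filter((name) => {
    const results = previousResults.slice(); // work on a copy
    candidateActions[name](results, insertedDoc);
    return JSON.stringify(results) === expected;
  });
}

const adultsByAge = {
  matches: (doc) => doc.age >= 18,
  compare: (a, b) => a.age - b.age,
};
const docsBefore = [{ id: 'a', age: 20 }, { id: 'b', age: 40 }];

// Inserting the oldest document: only appending gives the right result.
const forOldest = findMatchingActions({
  docsBefore,
  insertedDoc: { id: 'c', age: 50 },
  query: adultsByAge,
});

// Inserting a document that does not match the query: nothing must change.
const forMinor = findMatchingActions({
  docsBefore,
  insertedDoc: { id: 'd', age: 10 },
  query: adultsByAge,
});
```

In this sketch `forOldest` contains only `insertLast` and `forMinor` contains only `doNothing`, which is the kind of state-to-action pairing that ends up in the truth table.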

From these state-action combinations we create a big truth table that is used to create a binary decision diagram (BDD). The BDD is then optimized to call as few state functions as possible to determine the correct action for an incoming event-results combination.
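The benefit of a decision diagram over a plain table lookup is that it only evaluates the state functions it needs. The toy example below uses three state functions and a hand-written diagram; the truth table contents, the `doesMatchNow` and `runFullQueryAgain` names, and the node format (`ask`, `yes`, `no`) are all assumptions for illustration, not the shipped data.

```javascript
const stateNames = ['isInsert', 'doesMatchNow', 'wasResultsEmpty'];

// Truth table: one action per combination of state bits (in stateNames order).
const truthTable = {
  '000': 'runFullQueryAgain',
  '001': 'runFullQueryAgain',
  '010': 'runFullQueryAgain',
  '011': 'runFullQueryAgain',
  '100': 'doNothing',
  '101': 'doNothing',
  '110': 'insertAtSortPosition',
  '111': 'insertFirst',
};

// Hand-reduced diagram: inner nodes ask one state function, leaves are actions.
const decisionDiagram = {
  ask: 'isInsert',
  no: 'runFullQueryAgain',
  yes: {
    ask: 'doesMatchNow',
    no: 'doNothing',
    yes: {
      ask: 'wasResultsEmpty',
      no: 'insertAtSortPosition',
      yes: 'insertFirst',
    },
  },
};

// Walks the diagram and records which state functions were actually called.
function resolveAction(diagram, stateFunctions, input) {
  const calls = [];
  let node = diagram;
  while (typeof node !== 'string') {
    calls.push(node.ask);
    node = stateFunctions[node.ask](input) ? node.yes : node.no;
  }
  return { action: node, calls };
}

// For checking, the "input" simply carries the precomputed state bits.
const bitStateFunctions = Object.fromEntries(
  stateNames.map((name) => [name, (input) => input[name]])
);

// Verifies that the diagram agrees with the truth table for every combination.
function diagramMatchesTable() {
  for (let bits = 0; bits < 2 ** stateNames.length; bits++) {
    const key = bits.toString(2).padStart(stateNames.length, '0');
    const input = Object.fromEntries(
      stateNames.map((name, i) => [name, key[i] === '1'])
    );
    const { action } = resolveAction(decisionDiagram, bitStateFunctions, input);
    if (action !== truthTable[key]) return false;
  }
  return true;
}

const nonInsert = resolveAction(decisionDiagram, bitStateFunctions, {
  isInsert: false,
  doesMatchNow: true,
  wasResultsEmpty: false,
});
```

For a non-insert event this diagram reaches its answer after calling a single state function, while a table lookup would need all three bits first.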

The resulting optimized BDD is then shipped as the EventReduce algorithm and can be used in different programming languages and implementations. The programmer does not need to know about all this optimization work and can directly use three simple functions, as shown in the JavaScript implementation.

You can use this to

- reduce the latency until a change to the database updates your application

- make observing query results more scalable by doing less disk I/O

- reduce the bandwidth when streaming realtime query results from the backend to the client

- create a better form of caching where instead of invalidating the cache on write, you update its content

- EventReduce only works with queries that have a predictable sort order for any given documents. (You can make any query predictable by adding the primary key as the last sort parameter.)

- EventReduce can be used with relational databases, but not on relational queries that run over multiple tables/collections. (You can use views as a workaround so that you query over only one table.) In theory EventReduce could also be used for relational queries, but I did not need this for now. Also, it takes about one week on an average machine to run all optimizations, and having more state functions looks like an NP problem.

- At the moment there is only the JavaScript implementation, which you can use via npm. Pull requests for other languages are welcome.
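The sort-order limitation above can be worked around as described, by appending the primary key as the last sort parameter. A minimal sketch, assuming documents are plain objects and sort parameters are a list of field names in ascending order; `compareBy` and `withPrimaryKey` are hypothetical helpers, not part of the npm package.

```javascript
// Builds a comparator that sorts ascending by each field in order.
function compareBy(fields) {
  return (a, b) => {
    for (const field of fields) {
      if (a[field] < b[field]) return -1;
      if (a[field] > b[field]) return 1;
    }
    return 0; // a tie: the order of a and b is not determined by these fields
  };
}

// Appends the primary key as the final tie-breaker if it is not already used.
function withPrimaryKey(sortFields, primaryKey) {
  return sortFields.includes(primaryKey)
    ? sortFields
    : [...sortFields, primaryKey];
}

const docs = [
  { id: 'b', age: 30 },
  { id: 'a', age: 30 },
  { id: 'c', age: 20 },
];

const predictable = compareBy(withPrimaryKey(['age'], 'id'));

// The same order results no matter how the input happens to be arranged.
const sortedIds = docs.slice().sort(predictable).map((doc) => doc.id);
const sortedReversedIds = docs
  .slice()
  .reverse()
  .sort(predictable)
  .map((doc) => doc.id);
```

Sorting by `age` alone would leave the relative order of `a` and `b` dependent on the input order; with the primary key appended, both inputs yield the same sequence.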

-

Meteor uses a feature called OplogDriver that is limited on queries that do not use

skiporsort. Also watch this video to learn how OpLogDriver works. -

RxDB used the

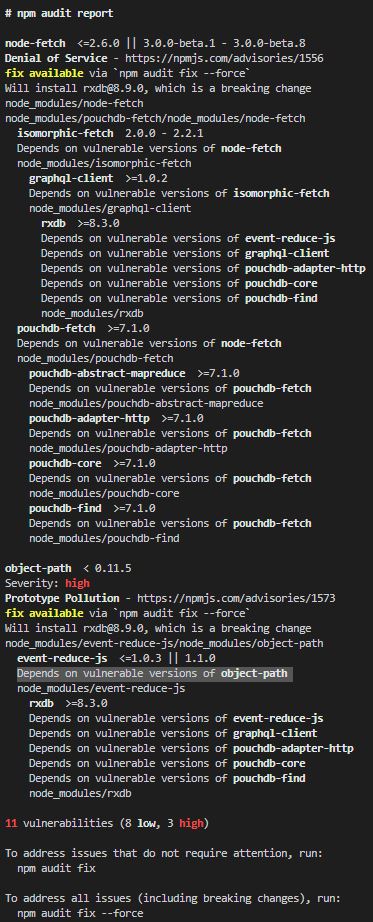

QueryChangeDetectionwhich works by many handwritten if-else comparisons. RxDB switched to EventReduce since version 9.0.0. -

Baqend is creating a database that optimizes for realtime queries. Watch the video Real-Time Databases Explained: Why Meteor, RethinkDB, Parse & Firebase Don't Scale to learn more.