Unity AR package to track your hand in realtime!

As seen on "Let's All Be Wizards!" : https://apps.apple.com/app/id1609685010

- 60 FPS hand detection

- 3D Bones world detection

Sample videos: `SampleSmall.mov`, `SampleVideoLightning.mov`

- Unity 2020.3 LTS

- ARFoundation

- iPhone with LiDAR support (iPhone 12+ Pro)

- Add the package `RealtimeHand` to your manifest

- Add the `SwiftSupport` package to enable Swift development

- Check the `RealtimeHandSample` for usage

Each joint exposes the following fields:

- `screenPos`: 2D position in normalized screen coordinates

- `texturePos`: 2D position in normalized CPU image coordinates

- `worldPos`: 3D position in world space

- `name`: name of the joint, matching the native one

- `distance`: distance from the camera, in meters

- `isVisible`: whether the joint has been identified (from the native pose detection)

- `confidence`: confidence of the detection

`RealtimeHandManager` does most of the heavy work for you: just add it to your project and subscribe to the `HandUpdated` event to be notified when a hand pose has been detected.

Steps:

- Create a GameObject

- Add the `RealtimeHandManager` component

- Configure it with the `ARSession`, `ARCameraManager`, `AROcclusionManager` objects

- Subscribe to `Action<RealtimeHand> HandUpdated;` to be notified
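The steps above can be sketched as a small listener component. This is an illustration rather than code from the package: `HandLogger` is a hypothetical name, and it assumes that `HandUpdated` is a public member of `RealtimeHandManager`, that `Joints` is a dictionary whose values carry the joint fields, and that `distance` and `confidence` are floats. If the package declares a namespace, add the matching `using` directive.

```csharp
using UnityEngine;

// Hypothetical listener: logs every visible joint each time a hand pose is reported.
public class HandLogger : MonoBehaviour
{
    // Assign in the Inspector: the GameObject carrying the configured RealtimeHandManager.
    [SerializeField] private RealtimeHandManager handManager;

    private void OnEnable()
    {
        handManager.HandUpdated += OnHandUpdated;
    }

    private void OnDisable()
    {
        // Unsubscribe so the manager does not keep a reference to a disabled object.
        handManager.HandUpdated -= OnHandUpdated;
    }

    private void OnHandUpdated(RealtimeHand hand)
    {
        if (!hand.IsVisible) return;

        // Assumption: Joints enumerates as key/value pairs whose value is the joint.
        foreach (var entry in hand.Joints)
        {
            var joint = entry.Value;
            if (!joint.isVisible) continue;

            Debug.Log($"{joint.name}: world {joint.worldPos}, " +
                      $"{joint.distance:F2} m, confidence {joint.confidence:F2}");
        }
    }
}
```

Attach it to any GameObject and drag the manager into the `handManager` slot.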

The `AROcclusionManager` must be configured with `temporalSmoothing=Off` and `mode=fastest` for optimal results.
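These settings can also be applied from a script instead of the Inspector. The sketch below assumes an ARFoundation version that exposes `environmentDepthTemporalSmoothingRequested`, and it assumes that `mode=fastest` refers to both the environment depth mode and the human stencil mode. `OcclusionSetup` is a hypothetical name.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

// Hypothetical helper: applies the recommended occlusion settings at startup.
public class OcclusionSetup : MonoBehaviour
{
    [SerializeField] private AROcclusionManager occlusionManager;

    private void Awake()
    {
        // temporalSmoothing=Off
        occlusionManager.environmentDepthTemporalSmoothingRequested = false;

        // mode=fastest (assumption: applied to both depth and human stencil)
        occlusionManager.requestedEnvironmentDepthMode = EnvironmentDepthMode.Fastest;
        occlusionManager.requestedHumanStencilMode = HumanSegmentationStencilMode.Fastest;
    }
}
```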

If you want full control over the flow, you can manually initialize and call the hand detection process: more work, but more control.

- `IsInitialized`: to check if the object has been properly initialized (i.e. the ARSession has been retrieved)

- `IsVisible`: to know if the hand is currently visible or not

- `Joints`: dictionary of all the joints

- `Initialize(ARSession _session, ARCameraManager _arCameraManager, Matrix4x4 _unityDisplayMatrix)`: initialize the object with the required components. The session must be in tracking mode.

- `Dispose()`: release the component and its resources

- `Process(CPUEnvironmentDepth _environmentDepth, CPUHumanStencil _humanStencil)`: launch the detection method using the depth buffers

Check the `RealtimeHandManager` as an example.
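As a rough outline of the manual flow, the sketch below covers only the lifecycle: wait until the session is tracking, call `Initialize` once, and call `Dispose` on teardown. Several points are assumptions and not confirmed by the package: that `RealtimeHand` has a parameterless constructor, and that the display matrix from `ARCameraFrameEventArgs` is the one `Initialize` expects. How the `CPUEnvironmentDepth` and `CPUHumanStencil` arguments of `Process` are built is not shown here; refer to `RealtimeHandManager` for that part.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;

// Hypothetical driver for the manual flow (lifecycle only).
public class ManualHandTracking : MonoBehaviour
{
    [SerializeField] private ARSession session;
    [SerializeField] private ARCameraManager cameraManager;

    private RealtimeHand hand;          // assumption: parameterless constructor
    private bool initializeRequested;   // guards against calling Initialize twice

    private void OnEnable()
    {
        hand ??= new RealtimeHand();
        cameraManager.frameReceived += OnFrameReceived;
    }

    private void OnDisable()
    {
        cameraManager.frameReceived -= OnFrameReceived;
    }

    private void OnFrameReceived(ARCameraFrameEventArgs args)
    {
        if (!initializeRequested)
        {
            // The session must be in tracking mode before initializing.
            if (ARSession.state != ARSessionState.SessionTracking) return;
            if (!args.displayMatrix.HasValue) return;

            hand.Initialize(session, cameraManager, args.displayMatrix.Value);
            initializeRequested = true;
        }

        if (!hand.IsInitialized) return;

        // Acquire the depth and stencil CPU images here and pass them to
        // hand.Process(...), following RealtimeHandManager.
    }

    private void OnDestroy()
    {
        hand?.Dispose();
    }
}
```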

When a camera frame is received:

- Synchronously execute `VNDetectHumanHandPoseRequest` to retrieve a 2D pose estimation from the OS

- Retrieve the `environmentDepth` and `humanStencil` CPU images

- From the 2D position of each bone, extract its 3D distance using the depth images to reconstruct a 3D position
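The last step, turning a 2D position plus a depth sample into a 3D point, can be illustrated with a standard pinhole-camera unprojection. This is a generic sketch, not the package's actual implementation: the `DepthUnprojection` class, its method names, and the intrinsics parameters (`fx`, `fy`, `cx`, `cy`) are all hypothetical, and the depth value is assumed to be measured along the camera's optical axis. It is plain C# using `System.Numerics` so it can run outside Unity; inside Unity, the `UnityEngine` vector types would take their place.

```csharp
using System;
using System.Numerics;

public static class DepthUnprojection
{
    // Returns the depth (in meters) of the pixel nearest to a normalized
    // image position, where uv = (0,0) is the first row/column of the map.
    public static float SampleDepth(float[,] depth, Vector2 uv)
    {
        int rows = depth.GetLength(0);
        int cols = depth.GetLength(1);
        int x = Math.Max(0, Math.Min(cols - 1, (int)(uv.X * cols)));
        int y = Math.Max(0, Math.Min(rows - 1, (int)(uv.Y * rows)));
        return depth[y, x];
    }

    // Pinhole unprojection: converts a normalized image position and a depth
    // into a camera-space point. fx, fy, cx, cy are the camera intrinsics,
    // expressed in pixels of a width x height image.
    public static Vector3 Unproject(Vector2 uv, float depthMeters,
        float fx, float fy, float cx, float cy, int width, int height)
    {
        float px = uv.X * width;
        float py = uv.Y * height;
        float x = (px - cx) / fx * depthMeters;
        float y = (py - cy) / fy * depthMeters;
        return new Vector3(x, y, depthMeters);
    }
}
```

With the principal point at the image center, the position `(0.5, 0.5)` unprojects to a point on the optical axis, `(0, 0, depth)`. Multiplying the result by the camera-to-world transform then yields a world-space position.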

- LinkedIn original post: https://www.linkedin.com/posts/oliviergoguel_unity-arkit-arfoundation-activity-6896360209703407616-J3K7

- Making Of : https://www.linkedin.com/feed/update/urn:li:activity:6904398846399524864/

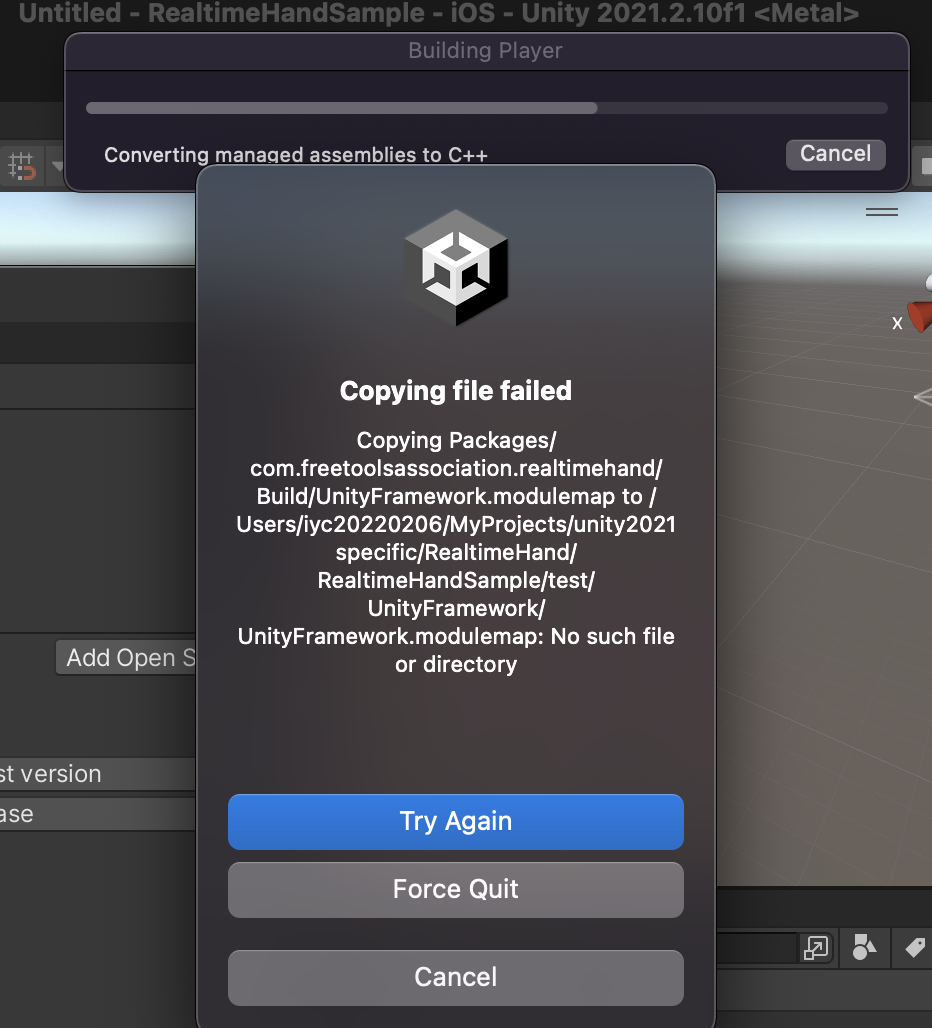

- Fix compatibility with Unity 2020.3

- Added Lightning Shader & effects

- Initial Release