PromptBench: A Unified Library for Evaluating and Understanding Large Language Models.

Paper

·

Documentation

·

Leaderboard

·

More papers

Table of Contents

- [13/03/2024] Add support for multi-modal models and datasets.

- [05/01/2024] Add support for BigBench Hard, DROP, ARC datasets.

- [16/12/2023] Add support for Gemini, Mistral, Mixtral, Baichuan, Yi models.

- [15/12/2023] Add detailed instructions for users to add new modules (models, datasets, etc.) examples/add_new_modules.md.

- [05/12/2023] Published promptbench 0.0.1.

PromptBench is a Pytorch-based Python package for Evaluation of Large Language Models (LLMs). It provides user-friendly APIs for researchers to conduct evaluation on LLMs. Check the technical report: https://arxiv.org/abs/2312.07910.

- Quick model performance assessment: We offer a user-friendly interface that allows for quick model building, dataset loading, and evaluation of model performance.

- Prompt Engineering: We implemented several prompt engineering methods. For example: Few-shot Chain-of-Thought [1], Emotion Prompt [2], Expert Prompting [3] and so on.

- Evaluating adversarial prompts: promptbench integrated prompt attacks [4], enabling researchers to simulate black-box adversarial prompt attacks on models and evaluate their robustness (see details here).

- Dynamic evaluation to mitigate potential test data contamination: we integrated the dynamic evaluation framework DyVal [5], which generates evaluation samples on-the-fly with controlled complexity.

We provide a Python package promptbench for users who want to start evaluation quickly. Simply run:

pip install promptbenchNote that the pip installation could be behind the recent updates. So, if you want to use the latest features or develop based on our code, you should install via GitHub.

First, clone the repo:

git clone [email protected]:microsoft/promptbench.gitThen,

cd promptbenchTo install the required packages, you can create a conda environment:

conda create --name promptbench python=3.9then use pip to install required packages:

pip install -r requirements.txtNote that this only installed basic python packages. For Prompt Attacks, you will also need to install TextAttack.

promptbench is easy to use and extend. Going through the examples below will help you get familiar with promptbench for quick use, evaluate existing datasets and LLMs, or create your own datasets and models.

Please see Installation to install promptbench first.

If promptbench is installed via pip, you can simply do:

import promptbench as pbIf you installed promptbench from git and want to use it in other projects:

import sys

# Add the directory of promptbench to the Python path

sys.path.append('/home/xxx/promptbench')

# Now you can import promptbench by name

import promptbench as pbWe provide tutorials for:

- evaluate models on existing benchmarks: please refer to the examples/basic.ipynb for constructing your evaluation pipeline. For a multi-modal evaluation pipeline, please refer to examples/multimodal.ipynb

- test the effects of different prompting techniques:

- examine the robustness for prompt attacks, please refer to examples/prompt_attack.ipynb to construct the attacks.

- use DyVal for evaluation: please refer to examples/dyval.ipynb to construct DyVal datasets.

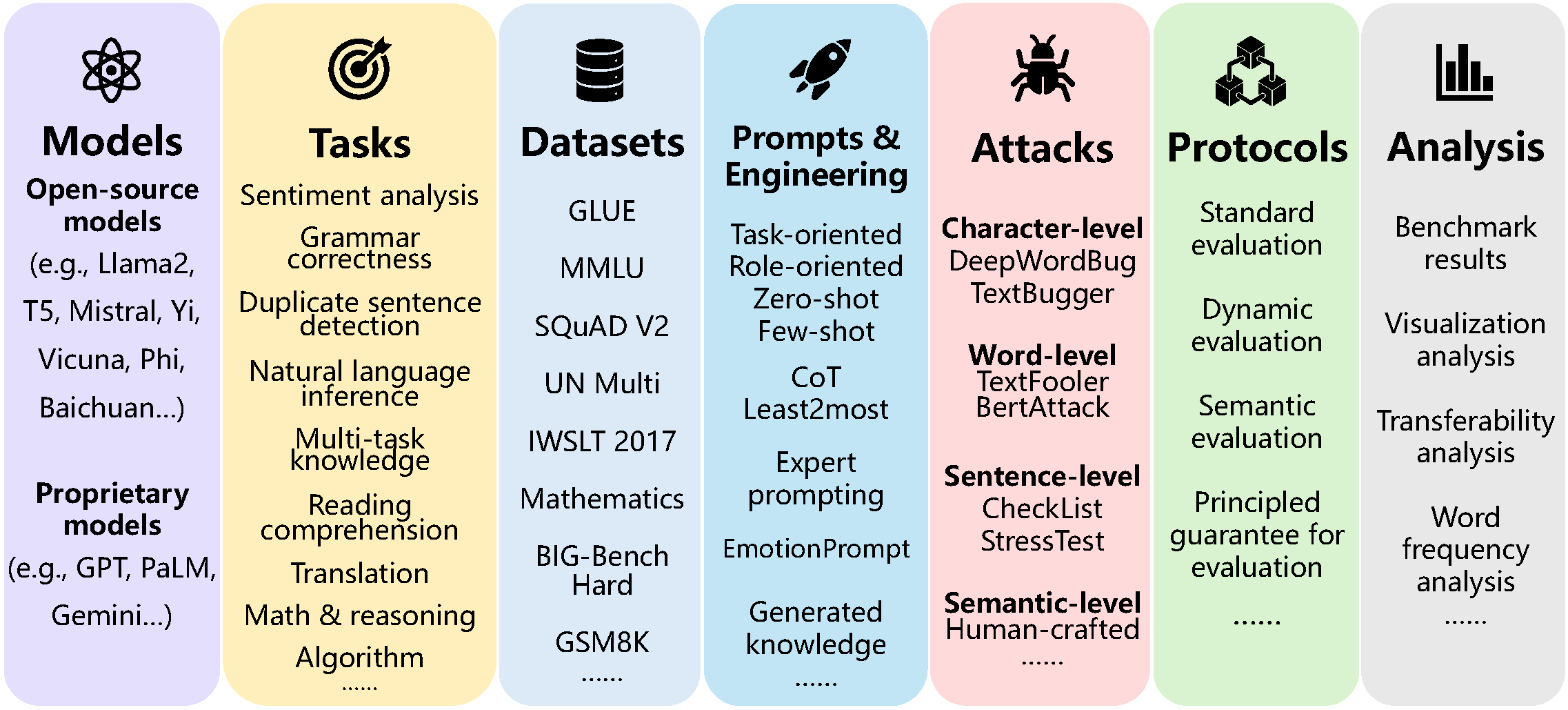

PromptBench currently supports different datasets, models, prompt engineering methods, adversarial attacks, and more. You are welcome to add more.

- Language datasets:

- GLUE: SST-2, CoLA, QQP, MRPC, MNLI, QNLI, RTE, WNLI

- MMLU

- BIG-Bench Hard (Bool logic, valid parentheses, date...)

- Math

- GSM8K

- SQuAD V2

- IWSLT 2017

- UN Multi

- CSQA (CommonSense QA)

- Numersense

- QASC

- Last Letter Concatenate

- Multi-modal datasets:

- VQAv2

- NoCaps

- MMMU

- MathVista

- AI2D

- ChartQA

- ScienceQA

Language models:

- Open-source models:

- google/flan-t5-large

- databricks/dolly-v1-6b

- Llama2 series

- vicuna-13b, vicuna-13b-v1.3

- Cerebras/Cerebras-GPT-13B

- EleutherAI/gpt-neox-20b

- Google/flan-ul2

- phi-1.5 and phi-2

- Proprietary models

- PaLM 2

- GPT-3.5

- GPT-4

- Gemini Pro

Multi-modal models:

- Open-source models:

- BLIP2

- LLaVA

- Qwen-VL, Qwen-VL-Chat

- InternLM-XComposer2-VL

- Proprietary models

- GPT-4v

- Gemini Pro Vision

- Qwen-VL-Max, Qwen-VL-Plus

- Chain-of-thought (COT) [1]

- EmotionPrompt [2]

- Expert prompting [3]

- Zero-shot chain-of-thought

- Generated knowledge [6]

- Least to most [7]

- Character-level attack

- DeepWordBug

- TextBugger

- Word-level attack

- TextFooler

- BertAttack

- Sentence-level attack

- CheckList

- StressTest

- Semantic-level attack

- Human-crafted attack

- Standard evaluation

- Dynamic evaluation

- Semantic evaluation

- Benchmark results

- Visualization analysis

- Transferability analysis

- Word frequency analysis

Please refer to our benchmark website for benchmark results on Prompt Attacks, Prompt Engineering and Dynamic Evaluation DyVal.

- TextAttack

- README Template

- We thank the volunteers: Hanyuan Zhang, Lingrui Li, Yating Zhou for conducting the semantic preserving experiment in Prompt Attack benchmark.

[1] Jason Wei, et al. "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." arXiv preprint arXiv:2201.11903 (2022).

[2] Cheng Li, et al. "Emotionprompt: Leveraging psychology for large language models enhancement via emotional stimulus." arXiv preprint arXiv:2307.11760 (2023).

[3] BenFeng Xu, et al. "ExpertPrompting: Instructing Large Language Models to be Distinguished Experts" arXiv preprint arXiv:2305.14688 (2023).

[4] Zhu, Kaijie, et al. "PromptBench: Towards Evaluating the Robustness of Large Language Models on Adversarial Prompts." arXiv preprint arXiv:2306.04528 (2023).

[5] Zhu, Kaijie, et al. "DyVal: Graph-informed Dynamic Evaluation of Large Language Models." arXiv preprint arXiv:2309.17167 (2023).

[6] Liu J, Liu A, Lu X, et al. Generated knowledge prompting for commonsense reasoning[J]. arXiv preprint arXiv:2110.08387, 2021.

[7] Zhou D, Schärli N, Hou L, et al. Least-to-most prompting enables complex reasoning in large language models[J]. arXiv preprint arXiv:2205.10625, 2022.

Please cite us if you find this project helpful for your project/paper:

@article{zhu2023promptbench2,

title={PromptBench: A Unified Library for Evaluation of Large Language Models},

author={Zhu, Kaijie and Zhao, Qinlin and Chen, Hao and Wang, Jindong and Xie, Xing},

journal={arXiv preprint arXiv:2312.07910},

year={2023}

}

@article{zhu2023promptbench,

title={PromptBench: Towards Evaluating the Robustness of Large Language Models on Adversarial Prompts},

author={Zhu, Kaijie and Wang, Jindong and Zhou, Jiaheng and Wang, Zichen and Chen, Hao and Wang, Yidong and Yang, Linyi and Ye, Wei and Gong, Neil Zhenqiang and Zhang, Yue and others},

journal={arXiv preprint arXiv:2306.04528},

year={2023}

}

@article{zhu2023dyval,

title={DyVal: Graph-informed Dynamic Evaluation of Large Language Models},

author={Zhu, Kaijie and Chen, Jiaao and Wang, Jindong and Gong, Neil Zhenqiang and Yang, Diyi and Xie, Xing},

journal={arXiv preprint arXiv:2309.17167},

year={2023}

}

@article{chang2023survey,

title={A survey on evaluation of large language models},

author={Chang, Yupeng and Wang, Xu and Wang, Jindong and Wu, Yuan and Zhu, Kaijie and Chen, Hao and Yang, Linyi and Yi, Xiaoyuan and Wang, Cunxiang and Wang, Yidong and others},

journal={arXiv preprint arXiv:2307.03109},

year={2023}

}

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

If you have a suggestion that would make promptbench better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

- Fork the project

- Create your branch (

git checkout -b your_name/your_branch) - Commit your changes (

git commit -m 'Add some features') - Push to the branch (

git push origin your_name/your_branch) - Open a Pull Request

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.