As described in the associated page on the Azure Reference Architecture center, this repository uses the scenario of applying style transfer to a video (a collection of images). The architecture can be generalized to any batch scoring scenario that uses deep learning. An alternative solution using Azure Kubernetes Service can be found here.

The above architecture works as follows:

- Upload a video file to storage.

- The uploaded video file triggers a Logic App, which sends a request to the published AML pipeline endpoint.

- The pipeline will then process the video, apply style transfer with MPI, and postprocess the video.

- The output will be saved back to blob storage once the pipeline is completed.
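The trigger step above can be sketched in Python. This is a minimal sketch, not the Logic App definition used in this repo: it assumes the published pipeline endpoint accepts a POST with a bearer token and a JSON body containing `ExperimentName` and `ParameterAssignments`. The endpoint URL, experiment name, and the `input_video_name` parameter are hypothetical placeholders.

```python
import json
import urllib.request


def build_pipeline_request(endpoint_url, aad_token, experiment_name, video_name):
    """Build (but do not send) a POST request for a published AML pipeline endpoint."""
    payload = {
        "ExperimentName": experiment_name,
        # Hypothetical pipeline parameter; use the names your pipeline defines.
        "ParameterAssignments": {"input_video_name": video_name},
    }
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {aad_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_pipeline_request(
        "https://example.invalid/pipelines/placeholder",  # placeholder URL
        "<aad-token>",  # placeholder token
        "style_transfer",  # placeholder experiment name
        "input.mp4",  # placeholder video file name
    )
    # Sending is left out on purpose; urllib.request.urlopen(request) would submit the run.
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Building the request separately from sending it makes it easy to inspect the payload before a run is actually submitted.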

| Style image | Input/content video | Output video |
|---|---|---|
| | click to view video | click to view |

Local/Working Machine:

- Ubuntu >=16.04LTS (not tested on Mac or Windows)

- (Optional) NVIDIA drivers on a GPU-enabled machine [Additional ref: https://github.com/NVIDIA/nvidia-docker]

- Conda >=4.5.4

- AzCopy >=7.0.0

- Azure CLI >=2.0

Accounts:

- Azure Subscription

- (Optional) A quota for GPU-enabled VMs

While it is not required, it is also useful to use the Azure Storage Explorer to inspect your storage account.
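The minimum tool versions listed above can be checked with a short script. This is a rough sketch under stated assumptions: the executables are assumed to be named `conda`, `azcopy`, and `az`, and each is assumed to print its version when called with `--version`. The helper names are illustrative and not part of this repo.

```python
import re
import shutil
import subprocess

# Minimum versions taken from the prerequisites list above.
MINIMUM_VERSIONS = {"conda": (4, 5, 4), "azcopy": (7, 0, 0), "az": (2, 0)}


def parse_version(text):
    """Return the first dotted version number found in text as a tuple of ints."""
    match = re.search(r"\d+(?:\.\d+)+", text)
    if match is None:
        raise ValueError(f"no version number in {text!r}")
    return tuple(int(part) for part in match.group().split("."))


def meets_minimum(found, minimum):
    """Compare version tuples, padding the shorter one with zeros."""
    width = max(len(found), len(minimum))
    padded_found = found + (0,) * (width - len(found))
    padded_minimum = minimum + (0,) * (width - len(minimum))
    return padded_found >= padded_minimum


def check_tool(name, minimum):
    """Report whether `name` is on PATH and at least version `minimum`."""
    if shutil.which(name) is None:
        return f"{name}: not found on PATH"
    # Assumption: the tool prints its version when called with --version.
    result = subprocess.run([name, "--version"], capture_output=True, text=True)
    found = parse_version(result.stdout + result.stderr)
    status = "ok" if meets_minimum(found, minimum) else "too old"
    return f"{name}: {'.'.join(map(str, found))} ({status})"


if __name__ == "__main__":
    for tool, minimum in MINIMUM_VERSIONS.items():
        print(check_tool(tool, minimum))
```

Versions are compared as integer tuples rather than strings so that, for example, 4.10 is correctly treated as newer than 4.5.4.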

- Clone the repo: `git clone https://github.com/Azure/Batch-Scoring-Deep-Learning-Models-With-AML`

- `cd` into the repo.

- Set up your conda env using the environment.yml file: `conda env create -f environment.yml`. This will create a conda environment called batchscoringdl_aml.

- Activate your environment: `conda activate batchscoringdl_aml`

- Log in to Azure using the az cli: `az login`

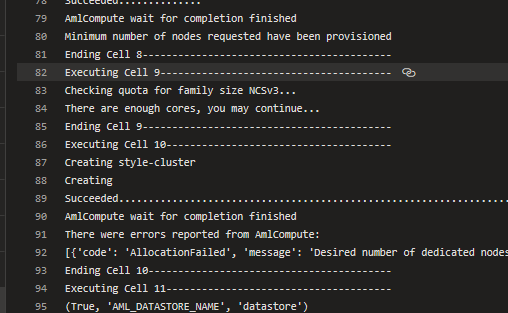

Run through the following notebooks:

To clean up your working directory, you can run the clean_up.sh script that comes with this repo. This will remove all temporary directories that were generated as well as any configuration (such as Dockerfiles) that were created during the tutorials. This script will not remove the .env file.

To clean up your Azure resources, you can delete the resource group that all your resources were deployed into. This can be done in the az cli using the command `az group delete --name <name-of-your-resource-group>`, or in the portal. If you want to keep certain resources, you can instead use the az cli or the Azure portal to cherry-pick the ones you want to deprovision. Finally, you should also delete the service principal using the `az ad sp delete` command.

All the steps above are covered in the final notebook.
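The Azure cleanup commands can also be scripted. The following is a minimal sketch, not the code from the final notebook: the function names are hypothetical, the resource group name and service principal ID are placeholders, and the `--yes` flag is added here to skip the interactive confirmation. It defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess


def cleanup_commands(resource_group, service_principal_id):
    """Return the az cli commands that remove the resource group and service principal."""
    return [
        # --yes skips the confirmation prompt; drop it to confirm interactively.
        ["az", "group", "delete", "--name", resource_group, "--yes"],
        ["az", "ad", "sp", "delete", "--id", service_principal_id],
    ]


def run_cleanup(resource_group, service_principal_id, dry_run=True):
    """Print each command and execute it only when dry_run is False."""
    for argv in cleanup_commands(resource_group, service_principal_id):
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Placeholders; replace with your own values before setting dry_run=False.
    run_cleanup("<name-of-your-resource-group>", "<service-principal-id>")
```

Keeping the dry run as the default guards against deleting a resource group by accident, since the deletion cannot be undone.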

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

Microsoft AI GitHub: find other Best Practice projects and Azure AI Designed patterns in our central repository.