
WaveGrad is a fast, high-quality neural vocoder designed by the folks at Google Brain. The architecture is described in *WaveGrad: Estimating Gradients for Waveform Generation*. In short, this model takes a log-scaled Mel spectrogram and converts it to a waveform via iterative refinement.

- stable training (22 kHz, 24 kHz)

- high-quality synthesis

- mixed-precision training

- multi-GPU training

- custom noise schedule (faster inference)

- command-line inference

- programmatic inference API

- PyPI package

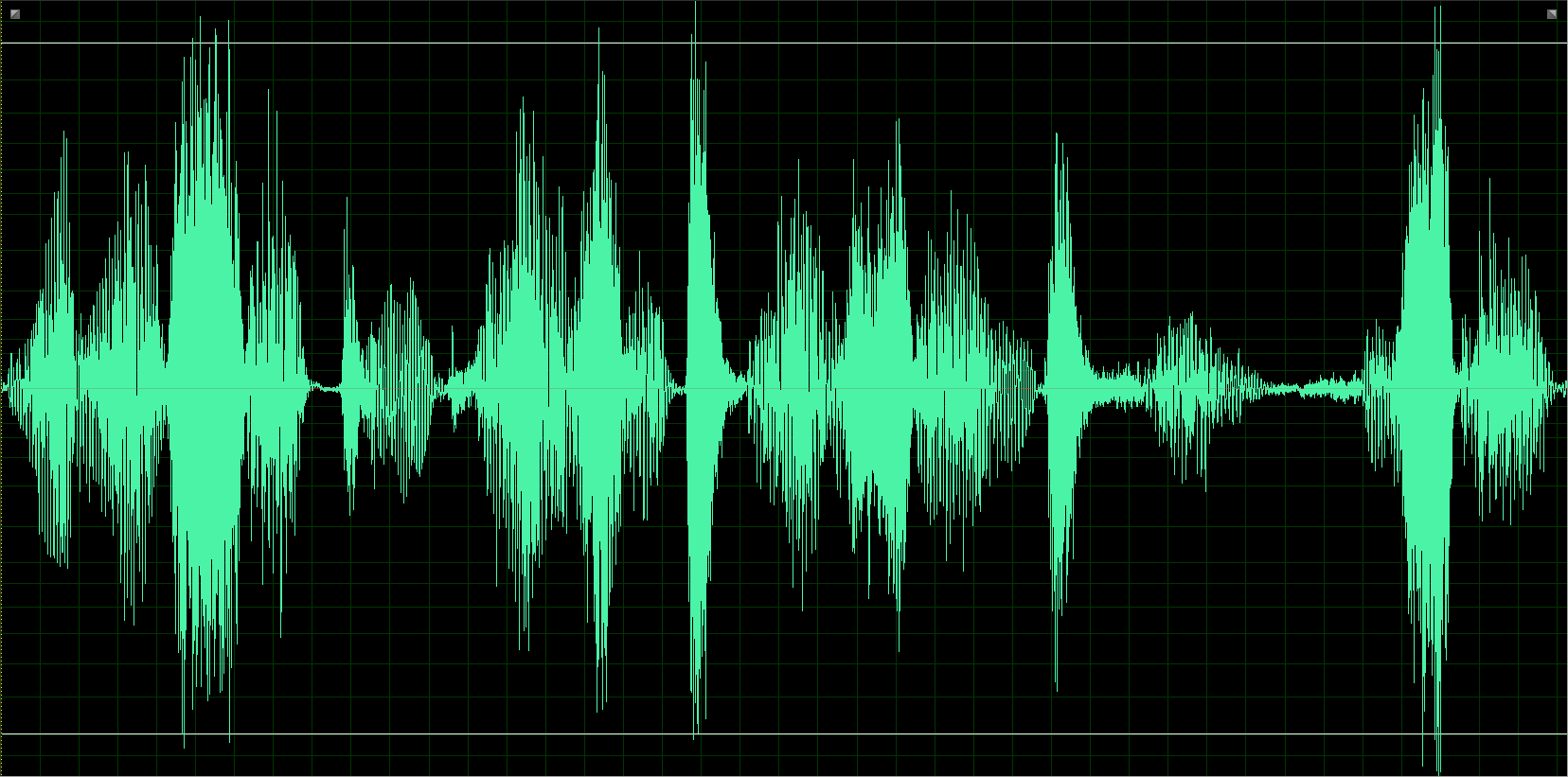

- audio samples

- pretrained models

- precomputed noise schedule

24 kHz pretrained model (183 MB, SHA256: 65e9366da318d58d60d2c78416559351ad16971de906e53b415836c068e335f3)
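If you download the pretrained model, you may want to confirm the file matches the checksum listed above before loading it. The following is a minimal sketch using only the Python standard library; the local filename `wavegrad-24kHz.pt` and the helper names are hypothetical, so substitute the path where you saved the download.

```python
import hashlib
from pathlib import Path

# Checksum published above for the 24 kHz pretrained model.
EXPECTED_SHA256 = "65e9366da318d58d60d2c78416559351ad16971de906e53b415836c068e335f3"


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checkpoint(path, expected=EXPECTED_SHA256):
    """Return True if the file at `path` matches the expected checksum."""
    return sha256_of_file(path) == expected.lower()


if __name__ == "__main__":
    # Hypothetical local filename (an assumption, not a name used by the project).
    checkpoint = Path("wavegrad-24kHz.pt")
    if checkpoint.exists():
        print("checksum OK" if verify_checkpoint(checkpoint) else "checksum MISMATCH")
```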

Install using pip:

pip install wavegrad

or from GitHub:

git clone https://github.com/lmnt-com/wavegrad.git

cd wavegrad

pip install .

Before you start training, you'll need to prepare a training dataset. The dataset can have any directory structure as long as the contained .wav files are 16-bit mono (e.g. LJSpeech, VCTK). By default, this implementation assumes a sample rate of 22 kHz. If you need to change this value, edit params.py.

python -m wavegrad.preprocess /path/to/dir/containing/wavs

python -m wavegrad /path/to/model/dir /path/to/dir/containing/wavs

# in another shell to monitor training progress:

tensorboard --logdir /path/to/model/dir --bind_all

You should expect to hear intelligible speech by ~20k steps (~1.5h on a 2080 Ti).
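Because the training data must be 16-bit mono .wav files, a quick pre-flight check can catch mismatched files before a long run. The sketch below uses Python's standard `wave` module to read each header; the function names are hypothetical and this check is not part of the wavegrad package. Files that `wave` cannot parse (for example, non-PCM encodings) are reported as unreadable.

```python
import wave
from pathlib import Path


def describe_wav(path):
    """Return (channels, bits_per_sample, sample_rate) read from a .wav header."""
    with wave.open(str(path), "rb") as wav:
        return wav.getnchannels(), wav.getsampwidth() * 8, wav.getframerate()


def find_unsuitable_wavs(root, expected_rate=None):
    """Yield (path, reason) for every .wav under `root` that is not 16-bit mono.

    If `expected_rate` is given, files with a different sample rate are reported too.
    """
    for path in sorted(Path(root).rglob("*.wav")):
        try:
            channels, bits, rate = describe_wav(path)
        except (wave.Error, EOFError) as err:
            yield path, f"unreadable: {err}"
            continue
        if channels != 1:
            yield path, f"{channels} channels (expected mono)"
        elif bits != 16:
            yield path, f"{bits}-bit (expected 16-bit)"
        elif expected_rate is not None and rate != expected_rate:
            yield path, f"{rate} Hz (expected {expected_rate} Hz)"
```

For example, `for path, reason in find_unsuitable_wavs('/path/to/dir/containing/wavs'): print(path, reason)` lists every file that would need converting. Pass `expected_rate` with the exact sample rate configured in params.py to flag resampling candidates as well.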

Basic usage:

from wavegrad.inference import predict as wavegrad_predict

model_dir = '/path/to/model/dir'

spectrogram = ...  # placeholder: supply a spectrogram in [N,C,W] format

audio, sample_rate = wavegrad_predict(spectrogram, model_dir)

# `audio` is a GPU tensor in [N,T] format.

If you have a custom noise schedule (see below):

import numpy as np

from wavegrad.inference import predict as wavegrad_predict

params = { 'noise_schedule': np.load('/path/to/noise_schedule.npy') }

model_dir = '/path/to/model/dir'

spectrogram = ...  # placeholder: supply a spectrogram in [N,C,W] format

audio, sample_rate = wavegrad_predict(spectrogram, model_dir, params=params)

# `audio` is a GPU tensor in [N,T] format.

From the command line:

python -m wavegrad.inference /path/to/model /path/to/spectrogram -o output.wav

The default implementation uses 1000 iterations to refine the waveform, which runs slower than real time. WaveGrad can achieve high-quality, faster-than-real-time synthesis with as few as 6 iterations, without retraining the model with new hyperparameters.
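"Faster than real time" can be made concrete with a real-time factor: synthesis time divided by the duration of the audio produced, where values below 1.0 mean faster than real time. The helpers below are a hypothetical sketch for measuring this on your own hardware, not part of the wavegrad package.

```python
import time


def real_time_factor(synthesis_seconds, num_samples, sample_rate):
    """Return synthesis time divided by audio duration; below 1.0 is faster than real time."""
    if num_samples <= 0 or sample_rate <= 0:
        raise ValueError("num_samples and sample_rate must be positive")
    audio_seconds = num_samples / sample_rate
    return synthesis_seconds / audio_seconds


def timed(fn, *args, **kwargs):
    """Call fn(*args, **kwargs) and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start
```

With the inference API, this could be used as `(audio, sample_rate), elapsed = timed(wavegrad_predict, spectrogram, model_dir)` followed by `real_time_factor(elapsed, audio.shape[-1], sample_rate)`. Since GPU work may run asynchronously, the measurement is likely more reliable if the result is copied to the CPU (or the device is synchronized) inside the timed call.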

To achieve this speed-up, you will need to search for a noise schedule that works well for your dataset. This implementation provides a script to perform the search for you:

python -m wavegrad.noise_schedule /path/to/trained/model /path/to/preprocessed/validation/dataset

python -m wavegrad.inference /path/to/trained/model /path/to/spectrogram -n noise_schedule.npy -o output.wav

The default settings should give good results without spending too much time on the search. If you'd like to find a better noise schedule or use a different number of inference iterations, run the noise_schedule script with --help to see additional configuration options.
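For intuition about what the search is choosing: in diffusion-style vocoders such as WaveGrad, a noise schedule is a sequence of per-iteration noise variances (usually written β), one value per refinement step. The sketch below is not the package's search code. It only illustrates, in plain Python, how a schedule maps to the fraction of clean signal remaining after the forward noising process. The linear range and the 6-step values are illustrative assumptions, and the function names are hypothetical.

```python
import math


def linear_schedule(start, end, steps):
    """Return `steps` evenly spaced beta values from `start` to `end` inclusive."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    if steps == 1:
        return [start]
    stride = (end - start) / (steps - 1)
    return [start + i * stride for i in range(steps)]


def signal_levels(betas):
    """Return the square root of the running product of (1 - beta).

    Each entry is the fraction of clean signal amplitude that remains after
    that many forward noising steps.
    """
    levels, running = [], 1.0
    for beta in betas:
        if not 0.0 < beta < 1.0:
            raise ValueError(f"beta out of range (0, 1): {beta}")
        running *= 1.0 - beta
        levels.append(math.sqrt(running))
    return levels


# Illustrative values only: a long linear schedule and a hand-picked 6-step one.
long_betas = linear_schedule(1e-6, 0.01, 1000)
short_betas = [1e-6, 1e-5, 1e-4, 1e-3, 1e-2, 1e-1]

print(f"1000 steps: final signal level {signal_levels(long_betas)[-1]:.4f}")
print(f"   6 steps: final signal level {signal_levels(short_betas)[-1]:.4f}")
```

A short schedule has far fewer steps in which to remove noise, so each β has to be chosen carefully, which is presumably why a per-dataset search is recommended over reusing a truncated default.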