
This repository contains a Wav2Lip UHQ extension for Automatic1111.

It's an all-in-one solution: just choose a video and a speech file (wav or mp3), and the extension will generate a lip-synced video. It improves the quality of the videos generated by the Wav2Lip tool by applying post-processing with Stable Diffusion tools.

- 🚀 Updates

- 🔗 Requirements

- 💻 Installation

- 🐍 Usage

- 📖 Behind the scenes

- 💪 Quality tips

- ⚠️ Noted Constraints

- 📝 To do

- 😎 Contributing

- 🙏 Appreciation

- 📝 Citation

- 📜 License

2023.08.17

- 🐛 Fixed purple lips bug

2023.08.16

- ⚡ Added Wav2lip and enhanced video output, with the option to download the one that's best for you, likely the "generated video".

- 🚢 Updated User Interface: Introduced control over CodeFormer Fidelity.

- 👄 Removed image as input, SadTalker is better suited for this.

- 🐛 Fixed a bug regarding the discrepancy between input and output video that incorrectly positioned the mask.

- 💪 Refined the quality process for greater efficiency.

- 🚫 Interrupting the process now still generates videos, provided frames have already been created.

2023.08.13

- ⚡ Speed-up computation

- 🚢 Change User Interface: Add controls for hidden parameters

- 👄 Only Track mouth if needed

- 📰 Control debug

- 🐛 Fix resize factor bug

- Install the latest version of Stable Diffusion WebUI Automatic1111 by following the instructions on the Stable Diffusion Webui repository.

- Launch Automatic1111

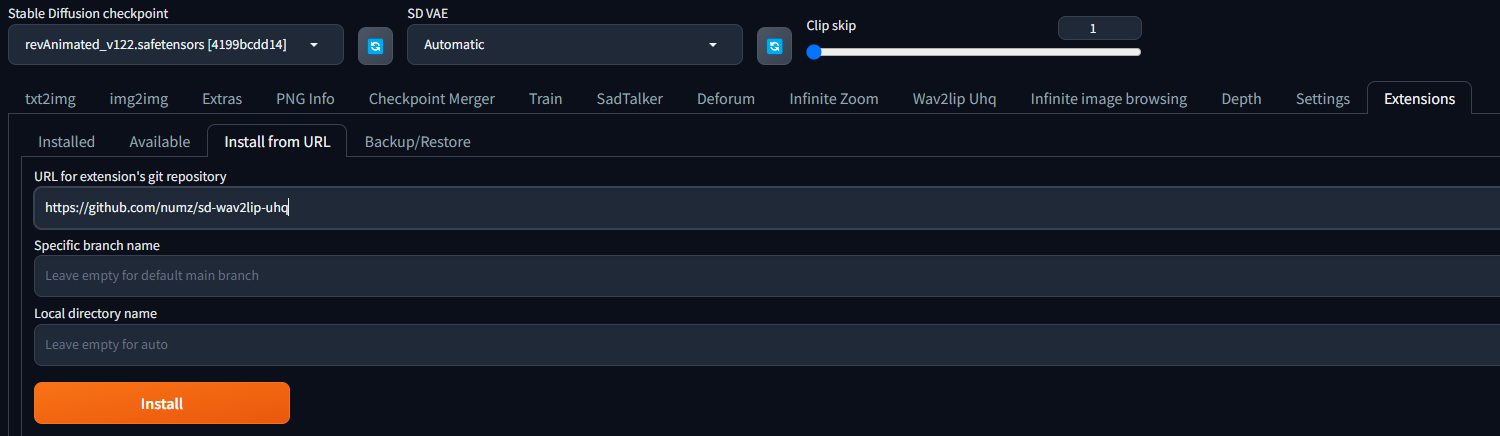

- In the extensions tab, enter the following URL in the "Install from URL" field and click "Install":

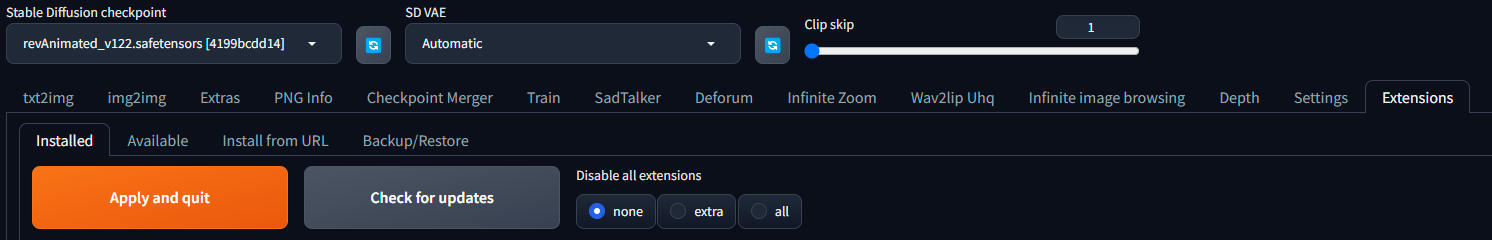

- Go to the "Installed Tab" in the extensions tab and click "Apply and quit".

- If you don't see the "Wav2Lip UHQ" tab, restart Automatic1111.

- 🔥 Important: Get the weights. Download the model weights from the following locations and place them in the corresponding directories. Pay attention to the file names, especially for s3fd.

| Model | Description | Link to the model | Install path |
|---|---|---|---|
| Wav2Lip | Highly accurate lip-sync | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\checkpoints\ |
| Wav2Lip + GAN | Slightly inferior lip-sync, but better visual quality | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\checkpoints\ |
| s3fd | Pre-trained face detection model | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\face_detection\detection\sfd\s3fd.pth |
| landmark predictor | Dlib 68-point face landmark prediction (click on the download icon) | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\predicator\shape_predictor_68_face_landmarks.dat |
| landmark predictor | Dlib 68-point face landmark prediction (alternate link) | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\predicator\shape_predictor_68_face_landmarks.dat |
| landmark predictor | Dlib 68-point face landmark prediction (alternate link, click on the download icon) | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\predicator\shape_predictor_68_face_landmarks.dat |
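
Misplaced or misnamed weights are easy to miss, so a quick check before launching can help. The following is a minimal sketch, not part of the extension: `missing_weights` is a hypothetical helper, and the paths are copied from the table above. Because the table does not give the checkpoint file names, the sketch assumes the checkpoints use a `.pth` extension and only checks that at least one such file is present.

```python
from pathlib import Path

# Relative locations copied from the table above.
CHECKPOINT_DIR = Path("extensions/sd-wav2lip-uhq/scripts/wav2lip/checkpoints")
REQUIRED_FILES = [
    Path("extensions/sd-wav2lip-uhq/scripts/wav2lip/face_detection/detection/sfd/s3fd.pth"),
    Path("extensions/sd-wav2lip-uhq/scripts/wav2lip/predicator/shape_predictor_68_face_landmarks.dat"),
]


def missing_weights(webui_root):
    """Return a list of human-readable problems; empty if everything is in place."""
    root = Path(webui_root)
    problems = []
    for rel in REQUIRED_FILES:
        if not (root / rel).is_file():
            problems.append(f"missing file: {rel}")
    # Assumption: checkpoints are *.pth files; their exact names are not checked.
    ckpt_dir = root / CHECKPOINT_DIR
    if not ckpt_dir.is_dir() or not any(ckpt_dir.glob("*.pth")):
        problems.append(f"no .pth checkpoint found in: {CHECKPOINT_DIR}")
    return problems
```

Calling `missing_weights(".")` from the Automatic1111 root folder returns an empty list when all three locations are populated.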

- Choose a video (avi or mp4 format) with a face in it. If even a single frame of the video has no face, the process will fail. Note that avi files will not appear in the Video input, but the process will still work.

- Choose an audio file with speech.

- Choose a checkpoint (see table above).

- Padding: Wav2Lip uses this to add a black border around the mouth, which is useful to prevent the mouth from being cropped by the face detection. You can change the padding value to suit your needs, but the default value gives good results.

- No Smooth: When checked, this option retains the original mouth shape without smoothing.

- Resize Factor: This is a resize factor for the video. The default value is 1.0, but you can change it to suit your needs. This is useful if the video size is too large.

- Only Mouth: This option tracks only the mouth, removing other facial motions like those of the cheeks and chin.

- Mouth Mask Dilate: This will dilate the mouth mask to cover more area around the mouth. The right value depends on the mouth size.

- Face Mask Erode: This will erode the face mask to remove some area around the face. The right value depends on the face size.

- Mask Blur: This will blur the mask to make it smoother. Try to keep it less than or equal to Mouth Mask Dilate.

- Code Former Fidelity:

  - A value of 0 offers higher quality but may significantly alter the person's facial appearance and cause noticeable flickering between frames.

  - A value of 1 provides lower quality but maintains the person's face more consistently and reduces frame flickering.

  - Using a value below 0.5 is not advised. Adjust this setting to achieve optimal results. Starting with a value of 0.75 is recommended.

- Active debug: This will create step-by-step images in the debug folder.

- Click on the "Generate" button.
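
The guidance in the list above can be written down as a small sanity check. This is only an illustrative sketch: `MaskSettings` and `check_settings` are hypothetical names rather than the extension's internals, and the 0 to 1 range for Code Former Fidelity is assumed from the two extremes described above.

```python
from dataclasses import dataclass


@dataclass
class MaskSettings:
    # Field names are illustrative, not the extension's internal names.
    mouth_mask_dilate: int
    face_mask_erode: int
    mask_blur: int
    code_former_fidelity: float = 0.75  # starting value recommended above


def check_settings(s):
    """Return warnings for values that go against the guidance in the list above."""
    warnings = []
    if not 0.0 <= s.code_former_fidelity <= 1.0:
        # Assumed range, inferred from the 0 and 1 extremes described above.
        warnings.append("Code Former Fidelity should be between 0 and 1")
    elif s.code_former_fidelity < 0.5:
        warnings.append("Code Former Fidelity below 0.5 is not advised")
    if s.mask_blur > s.mouth_mask_dilate:
        warnings.append("Mask Blur should not exceed Mouth Mask Dilate")
    return warnings
```

For example, `check_settings(MaskSettings(mouth_mask_dilate=10, face_mask_erode=15, mask_blur=20, code_former_fidelity=0.3))` reports both the low fidelity and the oversized blur.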

This extension operates in several stages to improve the quality of Wav2Lip-generated videos:

- Generate a Wav2lip video: The script first generates a low-quality Wav2Lip video using the input video and audio.

- Mask Creation: The script creates a mask around the mouth and tries to keep other facial motions like those of the cheeks and chin.

- Video Quality Enhancement: It takes the low-quality Wav2Lip video and overlays the low-quality mouth onto the high-quality original video guided by the mouth mask.

- Face Enhancer: The script then sends the original image with the low-quality mouth to the face_enhancer tool of Stable Diffusion to generate a high-quality mouth image.

- Video Generation: The script then takes the high-quality mouth image and overlays it onto the original image guided by the mouth mask.

- Video Post Processing: The script then uses the ffmpeg tool to generate the final video.
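
The overlay steps above rely on mask-guided blending: where the mask is 1 the new pixel is used, where it is 0 the original is kept, and blurred mask edges give values in between. The sketch below illustrates that idea on a tiny grayscale frame using plain Python lists. It is not the extension's code, and `blend_with_mask` is a hypothetical name; the actual implementation most likely works on image arrays.

```python
def blend_with_mask(original, enhanced, mask):
    """Blend two grayscale frames; mask values lie in [0, 1], 1 means 'take enhanced'."""
    return [
        [m * e + (1.0 - m) * o for o, e, m in zip(o_row, e_row, m_row)]
        for o_row, e_row, m_row in zip(original, enhanced, mask)
    ]


original = [[10.0, 10.0, 10.0]]     # pixels from the original frame
enhanced = [[200.0, 200.0, 200.0]]  # pixels from the enhanced mouth image
mask = [[0.0, 0.5, 1.0]]            # outside, blurred edge, inside the mouth mask
print(blend_with_mask(original, enhanced, mask))  # [[10.0, 105.0, 200.0]]
```

The middle pixel shows why Mask Blur matters: a softened mask edge mixes both sources instead of producing a hard seam.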

- Use a high quality video as input

- Utilize a video with a consistent frame rate. Occasionally, videos may exhibit unusual playback frame rates (not the standard 24, 25, 30, 60), which can lead to issues with the face mask.

- Use a high quality audio file as input, without background noise or music. Clean audio with a tool like https://podcast.adobe.com/enhance.

- Try to minimize the grain on the face in the input as much as possible. For example, you can use the "Restore faces" feature in img2img before using an image as input for Wav2Lip.

- Dilate the mouth mask. This will help the model retain some facial motion and hide the original mouth.

- Keep Mask Blur at no more than twice the value of Mouth Mask Dilate. If you want to increase the blur, increase Mouth Mask Dilate as well; otherwise the mouth will be blurred and the underlying mouth could become visible.

- Upscaling can improve the result, particularly around the mouth area. However, it will extend the processing duration. Use this tutorial from Olivio Sarikas to upscale your video: https://www.youtube.com/watch?v=3z4MKUqFEUk. Ensure the denoising strength is set between 0.0 and 0.05, select the 'revAnimated' model, and use the batch mode.
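
To spot an unusual frame rate before processing, the video can be inspected with `ffprobe`, which ships with ffmpeg. The sketch below is one possible check, not part of the extension: the helper names and the 1% tolerance are assumptions, and the list of standard rates is the one given in the tips above.

```python
import subprocess
from fractions import Fraction

STANDARD_RATES = (24, 25, 30, 60)  # the standard rates named in the tips above


def parse_rate(text):
    """Convert an ffprobe rate string such as '30000/1001' or '25/1' to a Fraction."""
    return Fraction(text.strip())


def probe_rates(video_path):
    """Return (nominal, average) frame rate of the first video stream via ffprobe."""
    out = subprocess.run(
        ["ffprobe", "-v", "error", "-select_streams", "v:0",
         "-show_entries", "stream=r_frame_rate,avg_frame_rate",
         "-of", "default=noprint_wrappers=1", video_path],
        capture_output=True, text=True, check=True,
    ).stdout
    # Output lines look like "r_frame_rate=25/1".
    fields = dict(line.split("=", 1) for line in out.splitlines() if "=" in line)
    return parse_rate(fields["r_frame_rate"]), parse_rate(fields["avg_frame_rate"])


def looks_problematic(nominal, average, tolerance=Fraction(1, 100)):
    """Flag rates that are non-standard or whose average drifts from the nominal rate."""
    near_standard = any(abs(nominal - r) <= tolerance * r for r in STANDARD_RATES)
    consistent = abs(nominal - average) <= tolerance * nominal
    return not (near_standard and consistent)
```

With ffmpeg installed, `looks_problematic(*probe_rates("input.mp4"))` returning `True` suggests re-encoding the video to a constant standard frame rate first; `input.mp4` is a placeholder file name.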

- Ensure there is a face in every frame of the video. If a face is not detected, the process will stop.

- The model may struggle with beards.

- If the initial phase is excessively lengthy, consider using the "resize factor" to decrease the video's dimensions.

- While there's no strict size limit for videos, larger videos will require more processing time. It's advisable to employ the "resize factor" to minimize the video size and then upscale the video once processing is complete.
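
As a rough guide for picking a resize factor, the sketch below computes the smallest whole-number factor that brings a video down to a target height. It rests on two assumptions that the text above does not confirm: that the factor divides the frame dimensions (as in the upstream Wav2Lip script) and that a whole number is wanted. `suggest_resize_factor` and the 720-pixel target are illustrative only.

```python
import math


def suggest_resize_factor(width, height, max_height=720):
    """Smallest integer factor that brings the height down to max_height or less."""
    factor = max(1, math.ceil(height / max_height))
    # Assumption: the factor divides both dimensions.
    return factor, (width // factor, height // factor)


print(suggest_resize_factor(3840, 2160))  # (3, (1280, 720))
```

A video that is already small enough gets a factor of 1, which leaves its dimensions unchanged.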

- Add Suno/Bark to generate text-to-speech audio as a wav input file (see bark), and add a way to generate a high-quality speech audio file from text input

- Possibility to resume a video generation

- Will be renamed to "Wav2Lip Studio" in Automatic1111

- Add more examples and tutorials

- Convert avi to mp4. Avi files are not shown in the video input, but the process works fine

We welcome contributions to this project. When submitting pull requests, please provide a detailed description of the changes. See CONTRIBUTING for more information.

If you use this project in your own work, in articles, tutorials, or presentations, we encourage you to cite this project to acknowledge the efforts put into it.

To cite this project, please use the following BibTeX format:

    @misc{wav2lip_uhq,
      author = {numz},
      title = {Wav2Lip UHQ},
      year = {2023},
      howpublished = {GitHub repository},
      publisher = {numz},
      url = {https://github.com/numz/sd-wav2lip-uhq}
    }

- The code in this repository is released under the MIT license as found in the LICENSE file.