jacobdwatters / flag4j Goto Github PK

View Code? Open in Web Editor NEWFast linear algebra library for Java.

Home Page: https://jacobdwatters.github.io/Flag4j/

License: MIT License

Fast linear algebra library for Java.

Home Page: https://jacobdwatters.github.io/Flag4j/

License: MIT License

Currently, methods which behave the same for dense/sparse tensors, matrices, and vectors are implemented in both the sparse and dense versions of each. These methods should be implemented a single time in the base abstract class.

For instance, abs() need only be implemented once in the base RealTensorBase class.

All matrices should have a method which allows for the computation/estimation of a condition number similar to the one provided by numpy.

The condition number of X is defined as the norm of X times the norm of the inverse of X. The condition number can be computed with various norms but the most common are the

When the matrix is symmetric or normal, the condition number is equals the ratio of the absolute maximum/minimum eigenvalues.

Specifically, cond = max(abs(lambdas)) / min(abs(lambdas)).

The condition number using the

The condition number using the

The condition number using the

Many method in the tensor, matrix, and vector classes which return void should return a reference to themself (i.e. return this).

This would make the following possible:

Matrix A = Matrix.I(5).set(-1.0, 1, 1);

Whereas currently, since set(...) returns void, the same statement must be written as:

Matrix A = Matrix.I(5);

A.set(-1.0, 1, 1);

Currently, the implementation of Sparse/Dense and Sparse/Sparse matrix multiplication is extremely inefficient. A correctness fix was added into the concurrent implementations of these matrix multiplications which degraded the efficiency significantly. As such, only the iterative implementations are being used which are also inefficient. Several improvements should be explored.

Some quick and dirty benchmarks were run on a desktop with the following specs and JVM info.

System Info:

OS: Windows 10 - v10.0

Architecture: amd64

CPU: 12th Gen Intel Core i7-12700k

CPU Cores: 12

Logical Cores: 20

L1 Cache: 1.0 MB

L2 Cache: 12.0 MB

L3 Cache: 25.0MB

RAM: DDR4 32 GB

Max JVM Memory: 8.522825728 GB

Java Version: v16.0.2

Below are the results of Sparse/Dense matrix multiplication of various sizes.

DenseTime is a measurement of how long the equivalent Dense/Dense matrix multiplication problem

took and SparseTime is how long the Sparse/Dense matrix multiplication took.

All tests were averaged over 5 runs with random matrices.

Shapes: 3253x632, 632x1794 - Sparsity: 0.938709

DenseTime: 451.837020 ms

SparseTime: 413.320420 ms

Shapes: 3366x2464, 2464x1243 - Sparsity: 0.581160

DenseTime: 1009.434920 ms

SparseTime: 5766.651440 ms

Shapes: 1133x419, 419x2339 - Sparsity: 0.600573

DenseTime: 133.660100 ms

SparseTime: 386.180140 ms

Shapes: 3087x1159, 1159x184 - Sparsity: 0.960400

DenseTime: 67.717240 ms

SparseTime: 29.962820 ms

Shapes: 375x3800, 3800x247 - Sparsity: 0.606162

DenseTime: 67.471960 ms

SparseTime: 120.309400 ms

Shapes: 855x1238, 1238x1187 - Sparsity: 0.770059

DenseTime: 150.682260 ms

SparseTime: 258.105620 ms

Shapes: 19x3536, 3536x2341 - Sparsity: 0.703349

DenseTime: 43.847800 ms

SparseTime: 43.665140 ms

Shapes: 3938x3253, 3253x2029 - Sparsity: 0.678685

DenseTime: 2615.055140 ms

SparseTime: 7826.447000 ms

Shapes: 2746x3345, 3345x2061 - Sparsity: 0.846642

DenseTime: 1982.079240 ms

SparseTime: 2727.135780 ms

Shapes: 2821x951, 951x343 - Sparsity: 0.513327

DenseTime: 112.178160 ms

SparseTime: 358.993360 ms

It can be observed that for large, very sparse matrices (> 96% sparse), the Sparse/Dense implementation may be quite a bit faster than the Dense/Dense implementation. However, for sparsity ~50-60%, the Sparse/Dense implementation may be significantly slower.

Below are the results of Sparse/Sparse matrix multiplication of various sizes.

DenseTime is a measurement of how long the equivalent Dense/Dense matrix multiplication problem

took and SparseTime is how long the Sparse/Sparse matrix multiplication took.

The Sparse/Sparse matrix multiplication is MUCH less efficient than the dense problem for nearly all matrices. The only case where this is not true is for very large, very sparse (> 99.9999% sparse) matrices.

All tests were averaged over 5 runs with random matrices.

Shapes: 1180x2456, 2456x1103 - Sparsity: 0.848517

DenseTime: 225.910500 ms

SparseTime: 85254.761500 ms

Shapes: 1723x1262, 1262x2090 - Sparsity: 0.992088

DenseTime: 205.525300 ms

SparseTime: 4014.238400 ms

Shapes: 1628x1827, 1827x773 - Sparsity: 0.952725

DenseTime: 89.624900 ms

SparseTime: 4558.299500 ms

Shapes: 718x2258, 2258x1814 - Sparsity: 0.857182

DenseTime: 152.445000 ms

SparseTime: 13930.521900 ms

Shapes: 326x1612, 1612x1819 - Sparsity: 0.905012

DenseTime: 45.816900 ms

SparseTime: 4784.875400 ms

Shapes: 792x2290, 2290x2326 - Sparsity: 0.975274

DenseTime: 244.374300 ms

SparseTime: 82.944400 ms

Shapes: 799x1746, 1746x1690 - Sparsity: 0.807611

DenseTime: 107.001900 ms

SparseTime: 8284.154300 ms

Shapes: 500x2236, 2236x1541 - Sparsity: 0.966418

DenseTime: 83.873700 ms

SparseTime: 1449.174600 ms

Shapes: 433x1508, 1508x817 - Sparsity: 0.859663

DenseTime: 20.877200 ms

SparseTime: 3311.930100 ms

Shapes: 2284x951, 951x215 - Sparsity: 0.936992

DenseTime: 19.431200 ms

SparseTime: 20.701400 ms

The Hessenberg decomposition of a general matrix will produce a similar matrix in upper Hessenberg form at the cost of ~

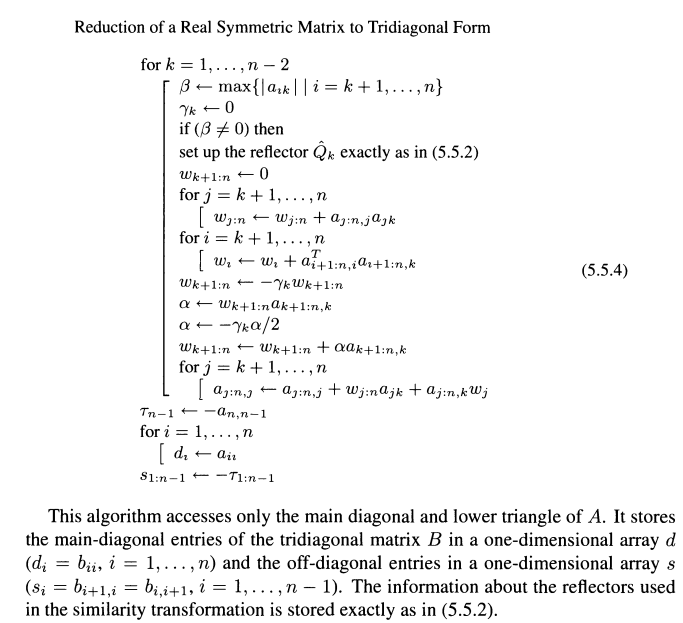

A pseudocode implementation from Fundamentals of matrix computation (David S Watkins) is provided as reference.

getDiag() should return the diagonal entries of a matrix as a vector.

getTriu() should return the upper triangular portion of the matrix with the rest of the entries zeros.

getTriu(int idx) should return the upper triangular portion on and above the diagonal idx with the rest of the entries set to zero. Where idx=0 indicate the principle diagonal, idx=1 indicates the first super diagonal and idx=-1 indicates the first sub diagonal.

getTril() should return the lower triangular portion of the matrix with the rest of the entries zeros.

getTril(int idx) should return the lower triangular portion on and below the diagonal idx with the rest of the entries set to zero. Where idx=0 indicate the principle diagonal, idx=1 indicates the first super diagonal and idx=-1 indicates the first sub diagonal.

The LUSolver class should be renamed ExactSolver or Solver. This will allow another solver to be created which solves a System Ax=b <-> LUx=b provided the LU matrices have been precomputed.

Further, the Exact solver, should compute the LU decomposition then use this solver under the hood.

The current SparseMatrix class is implemented in the sparse COO format. However, with just a rudimentary CSR implementation, a significant performance improvement for sparse-sparse matrix multiplication was observed. As such, the following changes should be made.

SparseMatrix class to SparseCooMatrix.SparseCsrMatrix class which stores matrices int the compressed sparse row (CSR) format.mult method for all sparse matrices should return a dense matrix by default.

mult2Sparse method for sparse matrices which computes the sparse-sparse matrix multiplication but stores as a sparse matrix.The Schur decomposition sometimes converges to a block triangular form instead of a upper triangular form even when the eigenvalues are real. This block form does appear to be correct but the matrix needs to be deflated to that it is in upper triangular form.

For instance, the following matrix converges to a block diagonal form despite the fact it is symmetric (hence it has real eigenvalues and should converge to a proper diagonal matrix):

A declarative, efficient, and flexible JavaScript library for building user interfaces.

🖖 Vue.js is a progressive, incrementally-adoptable JavaScript framework for building UI on the web.

TypeScript is a superset of JavaScript that compiles to clean JavaScript output.

An Open Source Machine Learning Framework for Everyone

The Web framework for perfectionists with deadlines.

A PHP framework for web artisans

Bring data to life with SVG, Canvas and HTML. 📊📈🎉

JavaScript (JS) is a lightweight interpreted programming language with first-class functions.

Some thing interesting about web. New door for the world.

A server is a program made to process requests and deliver data to clients.

Machine learning is a way of modeling and interpreting data that allows a piece of software to respond intelligently.

Some thing interesting about visualization, use data art

Some thing interesting about game, make everyone happy.

We are working to build community through open source technology. NB: members must have two-factor auth.

Open source projects and samples from Microsoft.

Google ❤️ Open Source for everyone.

Alibaba Open Source for everyone

Data-Driven Documents codes.

China tencent open source team.