- This repository is created from Linux Academy course, AWS certified solutions architect - Associate Level and my readings of different websites such as AWS docs

- It includes

- AWS Services description (see the table of contents, below):

- It describes AWS services from the architect role perspective

- There's particularly a section for the following topics: Scalability, Consistency, Resilience, Disaster Recovery, Security which includes Encryption, Pricing, Use cases, Limits and, Best practices

- AWS CLI commands: it's still a work in progress

- Anki flashcards exported file: 318 cards

- AWS Services description (see the table of contents, below):

- Infrastructure

- Security: Identity and Access Control (IAM)

- Security: Security Token Service (STS)

- AWS Organization

- Compute - Elastic Cloud Computing (EC2)

- Serverless Compute - Lambda

- Serverless Compute - API Gateway

- Containerized Compute - Elastic Container Service (ECS)

- Networking - Virtual Private Cloud (VPC)

- Networking - Route 53

- Storage - Simple Storage Service (S3)

- Networking - CloudFront

- Storage - Elastic File System (EFS)

- Database - SQL - Relational Database Service (RDS)

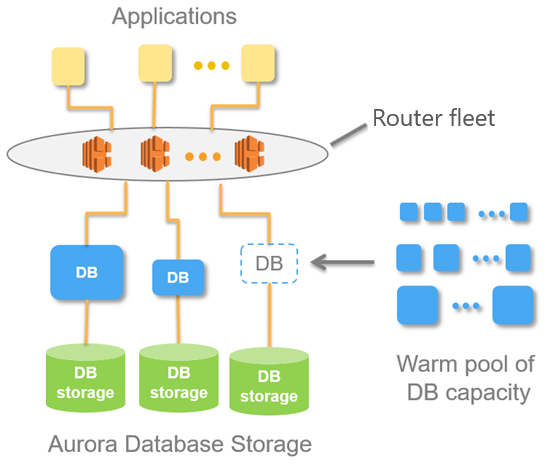

- Database - SQL - RDS Aurora Provisioned

- Database - SQL - RDS Aurora Serverless

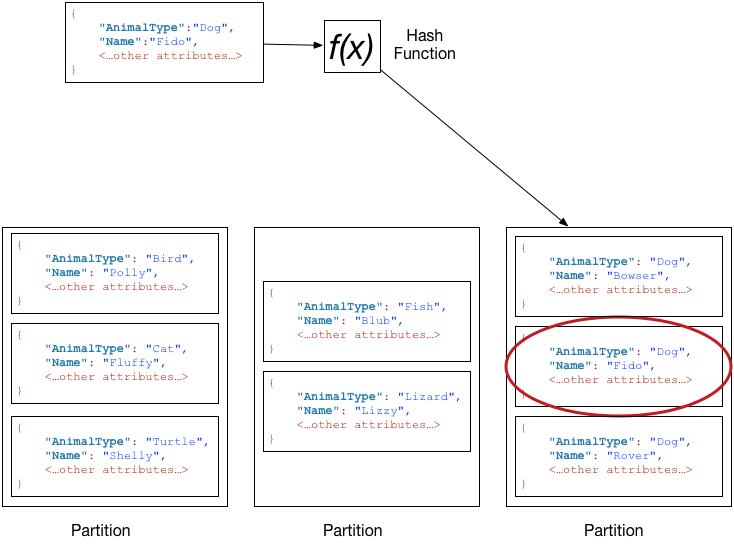

- Database - NoSQL - DynamoDB

- Database - In-Memory Caching

- Hybrid and Scaling - Elastic Load Balancing (ELB)

- Hybrid and Scaling - Auto scaling Groups (ASG)

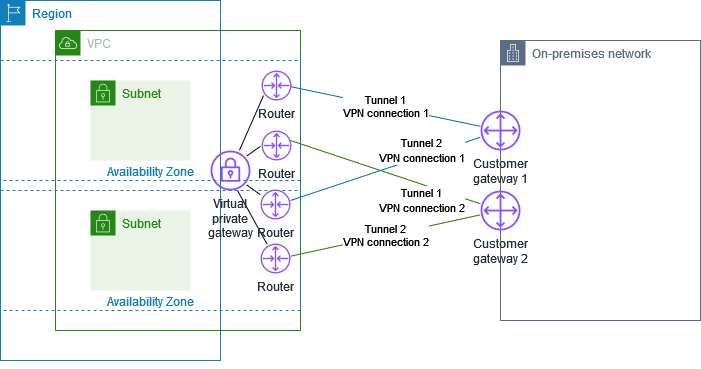

- Hybrid and Scaling - Virtual Private Networks (VPN)

- Hybrid and Scaling - Direct Connect (DX)

- Hybrid and Scaling - Snow*

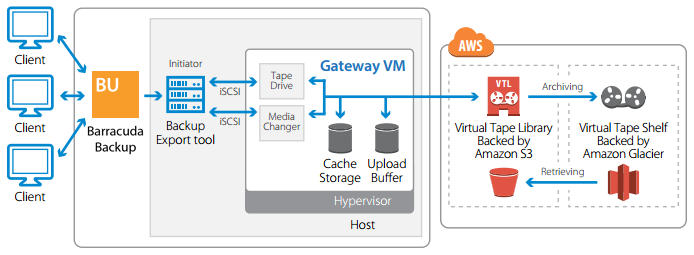

- Hybrid and Scaling - Data Migration - Storage Gateway

- Hybrid and Scaling - Data Migration - DB Migration Service (DMS)

- Hybrid and Scaling - Cognito

- Application Integration - Simple Notification Service (SNS)

- Application Integration - Simple Queue Service (SQS)

- Application Integration - Elastic Transcder

- Analytics - Athena

- Analytics - Elastic Map Reduce (EMR)

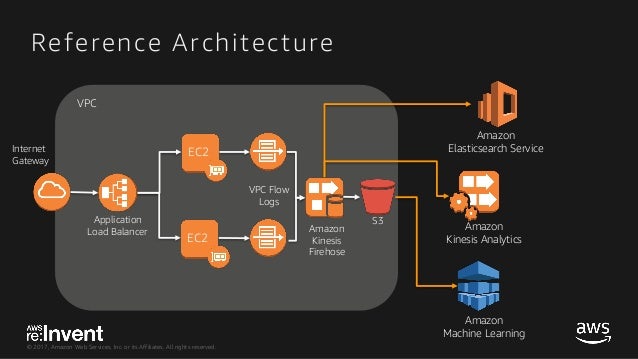

- Analytics - Kinesis

- Analytics - Redshift

- Logging and Monitoring - CloudWatch

- Logging and Monitoring - CloudTrail

- Logging and Monitoring - VPC Flow Logs

- Operations - CloudWatch Events

- Operations: Key Management Service (KMS)

- Deployment - CloudFormation

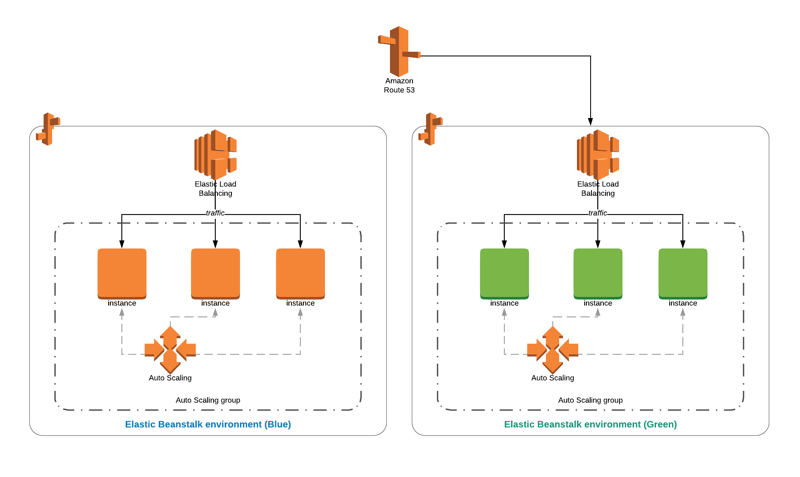

- Deployment: Elastic BeansTalk

- Deployment: OpsWorks

- AWS Services - Comparisons

Global Infrastructure

Region

- It's a collection of data centers (AZs)

- It has 2 or more data centers (AZs)

- Regions AZs are independ from each other (to decrease failure likeliness)

- Regions AZs are close enough to each other so that latency is low between them

- High Speed network:

- Some regions are linked by a direct high speed network (see link above)

- It'sn't a public network

- E.g., Paris and Virginia regions are linked by a high speed network

- Data created is a specific region wont leave the region

- Unless we decide otherwise (data replication to another region)

- Regions allow to operate in a specific country where laws are known

- We make sure that data will only operate under the jurisdiction of those laws

- E.g., US East (N. Virginia) region:

- It's the 1st AWS region (launched in 2006)

- It's always up-to-date: all new services are delivered 1st in this region

- It's good for all training purposes

Availability Zone (AZ)

- It's a logical data center within a region

- There could be more physical data centers within an AZ

- Its name could be different from 1 aws account to another

Edge Locations

- They're also called "Points of Presence" (Pops)

- They host AWS CDN

- There're many more than regions

Regional Edge Caches

- It's a Larger version of Pops

- It has more capacity

- It can serve larger areas

- There're less of them

Description

-

It's a centralised control of an AWS account

-

It's a global service

-

It controls access to AWS Services via policies that can be attached to IAM Identities

-

It's a shared Access to our AWS account.

-

It has granular Permissions:

- It allows to set permission at service level

-

It allows Multifactor Authentication (MFA)

-

It allows to set up our own password rotation policy

-

It supports PCI DSS Compliance (see Foundation, below)

User

- It's an IAM Identity

- It's given long-term credentials

- It's good for known identities

- It has NO permission when it's created (Default Deny or Non-Explicit Deny)

- It has Permission Boundaries:

- It allows to define boundaries beyond which user permission should never go

- For more details

- It has an access type:

-

Programmatic access by key ID and a secrete access key

- It couble active or inactive

- It's viewable only once (view, download in a csv file)

- It's deleted, when it's lost. A new one is generated

-

Programmatic access by SSH public keys to authenticate access to AWS CodeCommit repositories

-

AWS Management Console access:, it uses email/password

-

- It's possible to add from 1 to 10 users at once

- ARN:

- Format: arn:partition:service:region:account:user/userName

- E.g. 1, arn:aws:iam::091943097519:user/hamid.gasmi (normal aws servers)

- E.g. 2, arn:aws-cn:iam::091943097519:user/hamid.gasmi (Beijin aws servers).

Group

- It's an IAM Identity

- It's NOT a real identity

- because it can't be identified as a Principal in a permission policy

- It's used for administrative functions:

- It's a way to attach policies to multiple users at one time

- ARN:

- Format: arn:partition:service:region:account:group/groupName

- E.g., arn:aws:iam::091943097519:group/ITDevelopers

Role

- It's an IAM Identity

- It's given temporary access credentials when it's assumed (max: 36 hours)

- It allows to delegate access with defined permissions to trusted entities without having to share long-term access keys

- It's not logged in; it's assumed

- It's assumed as follow:

- An identity makes an AssumeRole API call: it requests to assume a role

- Then Security Token Service (STS) uses IAM Trust Policy to check if the identity is allowed to assume the role

- The STS uses then IAM Permission Policy attached to the role to generates a temporary access keys for the identity

- For more details

- Manage Multiple AWS Accounts with Role Switching

Policy

- It's attached to an IAM identity

- It's evaluated as follow:

- All attached policies are merged

- Explicit Deny => Explicit Allow => Implicit Deny

- Identity vs. Resource Policies:

- Identity Policy: it's attached to an IAM identity (role, user, group)

- Resource Policy: it's attached to a resource.

- Inline vs. Managed Policies:

- Inline Policy:

- It's created inside an IAM identity (role, user, group)

- It allows exceptions to be applied to identities

- Managed Policy:

- It's created independently from any IAM identity

- It's available on Policy screen of IAM console

- It allows the same policy to be reused and to impact many identities

- It's low overhead but lack flexibility

- Customer-Managed policy is flexible but requires administration

- Inline Policy:

- Policy Json Document:

- Json version: 2012-10-17;

- Statement: [{sid: "myStatementId", effect: "Allow"; "Action": ""; "Resource":""} ... ]

- Identity Based Policy:

- ARN:

- Format: arn:partition:service:region:account:policy/policyName

- E.g., arn:aws:iam::aws:policy/AmazonDynamoDBFullAccess (this is a default aws policy: see account)

Use cases

- Roles:

- To delegate access to IAM users managed within your account

- To delegate access to IAM users under a different AWS account

- To delegate access to an AWS service such as EC2

- E.g. 1: create a role to allow for example an EC2 machine to talk to an S3 server

- E.g. 2: for Break Glass style process:

- We have a support team with low right access

- In case of a crash, we may need to grant them temporary extra rights to let them fix the issues

- E.g. 3, Merging with another company

- Our current company has 2000 employees, and the bought company has also 2000 employees

- We want to quickly let the bought company employees to be able to access our AWS account while the merger is taking place

- Instead of starting the creation of 2000 new users in our company's AWS account (lot of work)

- We could create a new role and set the trust policy to the AWS account of the company that is merging with our

- So our admin overhead is only creating the role by adding the trust policy for this remote account

- E.g. 4, a company with multiple accounts:

- If a company has multiple AWS accounts. Let say 10

- Instead of creating for each employee, a user in each 10 AWS account,

- We could create them in a single account and create a role to let them connect to the other accounts

- E.g. 5, a company of more than 5000 employee and multiple accounts:

- If a company has more than 5,000 employees, it has also multiple accounts

- Roles could also help to let users of account A to have access to account B

- E.g. 6: Web Identity Federation

- Our company developed an application

- The application may have more than 5,000 users

- One of the users operations is writing to a database that is in a single account

- How could we let all these users writing on the database on their behalf?

- We can define a role by adding the trust policy for facebook users/twitter users, ...

Limits

- IAM Users # / AWS account: 5,000

- IAM MFA # / AWS account: 5,000 (Same as above: Users # / account)

- IAM MFA # / User: 1

- IAM Access keys # / User: 2 (regardless the status of the access keys: Active or Inactive)

- IAM Groups # / User: 10

- IAM Role credential expiration: 36 hours

- IAM Managed policies # / User: 10

- IAM Managed policies # / Role: 10

- IAM Inline policies # / Identity: Unlimited

- IAM Inline policies Total Size / User: 2,048 characters (white space aren't counted)

- IAM Inline policies Total Size / Group: 5,120 characters

- IAM Inline policies Total Size / Role: 10,240 characters

Best practices

- IAM Users and Groups should be given least privileges (only the required access to aws resources) Don't create (or delete) access keys for root account. Always setup an MFA on our root account.

- Authentication by secret keys is not recommended:

- If aws is hacked (ec2 instance?), secret keys will be found in

~/.awsfolder.

- If aws is hacked (ec2 instance?), secret keys will be found in

Foundation

- Principal

- It could be a person or application that can make an authenticated

- It could be also an anonymous request to perform an action on a system

- Authentication:

- It's the process of authenticating a principal against an identity

- It could be via username/password or API keys

- Identity:

- It's an object that requires authentication

- It's authorized to access a resource

- When the authentication succeeds, the principal is an Authenticated identity

- Authorization:

- It's the process of checking and allowing or denying access to a resource for an identity (Policies)

- Payment Card Industry Data Security Standard (PCI DSS):

- It's a compliance

- It ensures that a company that is dealing with credit card information (accepts, processes, stores or transmits) maintains a secure environment

Description

-

in progress

-

Session tokens from regional STS endpoints are valid in all AWS Regions.

-

If you use regional STS endpoints, no action is required.

Use cases

Limits

Best practices

- To use regional STS endpoints to reduce latency

Limits

- Organization Max Account #: 2 (Default limit)

- It could be increased:

- Support > Support Center > Create a Case > Service Limit Increase >

- Enter "Organization" in "Limit Type" field

- Select "Number of Accounts" in "Limit" field

- Enter a value in "New limit value" field

- Enter a description in "Use case description"

Best practices

Description

- It provides sizable compute capacity in the cloud

- It's a IaaS (Infrastructure as a Service) AWS Service

- It takes 2mn to obtain and boot new server instances

- It allows to quickly scale capacity both up and down as your computing requirement changes

- It has 1 or more storage volumes

- It has a Root Volume is attached to an instance during the launch process

- Additional volume could be attached to an instance after it's launched

- ARN:

- Format: arn:${Partition}:ec2:${Region}:${Account}:instance/${InstanceId}

- E.g., arn:aws:ec2::191449997525:instance/1234j8r3kdj

Families, Types and, Sizes

- Each EC2 family is designed for a specific broad type workload

- A type determines a certain set of features

- A Size decides the level of workload a machine can cope with

- Instance name: Type + Generation number + [a] + [d] + [n] + ".[Size or Metal]"

- Type letter + Generation #: see item below (families)

- "a" it's for AMD CPUs

- "d" it's for NVMe storage +

- "n" it's for Higher speed networking +

- ".Size": "nano", "micro", "small", "medium", "large", "xlarge", "nxlarge" (n > 2) and, "large"

- ".metal" it's for bare metal instances

- E.g.,: t2.micro, t2.2xlarge, t3a.nano, m5ad.4xlarge, i3.metal, u-6tb1.metal

- General Purpose Family:

- A1: Arm-based machine

- Scale-out workloads, web servers

- T2, T3:

- It's Low-cost instance types

- It uses Credits

- It's for occasional traffic bursts (non for 24/7 workloads)

- It's for general and occasional workloads

- E.g., test Web Servers, small DBs

- M4:

- M5, M5a, M5n:

- They're for general workloads: 100% of resources at all times (24/7)

- E.g., Application Servers

- A1: Arm-based machine

- Compute Optimized Family: - C5, C5n, C4: - They provides more capable CPU - E.g., CPU intensive Apps/DBs

- Memory Optimized Family: - R5, R5a, R5n, R4: - Optimize large amounts of fast memory - E.g., Memory Intensive Apps, memory intensive DBs - X1e, X1: - Optimize large amounts of fast memory - E.g., SAP HANA, Apache Spark - High Memory (u-6tb1.metal, ..., u-24tb1.metal) - z1d: - High compute capacity and a high memory footprint - E.g., Ideal for electronic design automation, EDA - E.g., Certain relational DB workloads with high per-core licensing costs

- Storage Optimized Family: - I3, I3en: - They Deliver fast I/O - E.g., NoSQL DBs, Data Warehousing - D2: - Dense Storage - E.g., Fileservers, Data Warehousing, Hadoop - H1: - High Disk Throughput - E.g., MapReduce-based workloads, - E.g., Distributed file systems such as HDFS and MapR-FS

- Accelerated Computing Family: - P3, P2: - They deliver GPU - They're for General Purpose GPU - E.g., Machine Learning, Bitcoin Mining - G4, G3: - They deliver GPU - E.g., Video Encoding, 3D Application Streaming - F1: - It delivers FPGA - E.g., Genomics research, financial analytics, real time video processing, big data

- For more details

Virtualization

- Xen-based hypervisor: The Xen Project is a Linux Foundation Collaborative Project

- The Nitro Hypervisor that is based on core KVM technology

- Bare metal instances: With virtualization (High Memory Instance)

- For more details

Instance Metadata & User Data

- Instance Metadata:

- It's available at: http://169.254.169.254/latest/meta-data/metadataName from within the EC2 instance itself

- To get the list of all available metadata: #curl http://169.254.169.254/latest/meta-data/

- E.g., ami-id, instance-id, instance-type, local-ipv4, mac, public-ipv4, security-groups

- User Data:

- It's available at: http://169.254.169.254/latest/user-data/ from within the EC2 instance

- To get the list of all available user data: #curl http://169.254.169.254/latest/user-data/

- For more details

Bootstrap

- It's the process of providing "build" directives to an EC2 instance

- It uses user data and can take in

- Shell script-style commands: Power Shell for Windows or Bash for Linux

- Cloud-init directives

- These commands or directives are executed during the instance launch process

- User data can be used to run these commands or directives

- Actions could be involved:

- Configuring an existing application on an EC2

- Performing software installation on an EC2

- Configuring an EC2 instance

- Action that can't be involved

- Configuring resource policies

- Creating an IAM User

Storage: Elastic Block Storage (EBS) Volume

- It's a virtual hard disk in the cloud

- It's a persistent block storage volume for EC2 instances

- It's located outside of the EC2 Host hardware but in the same AZ as the EC2 instance it's attached to

- It's automatically replicated within its AZ to protect from component failure

- It supports a maximum throughput of 1,750 MiB/s per-instance

- It supports a maximum IOPS: 80,000 per instance

- General Purpose (gp2):

- It's SSD based storage (Small IO size)

- Its performance dominant attribute: IOPS

- IOPS / volume: 100 IOPS - 16,000

- IOPS Scalability: 3 IOPS / GiB

- Max Bursts IOPS / volume: 3,000 (credit based)

- Max Throughput / volume: 250 MiB/s

- Size: 1 GiB - 16 TiB

- Use case patterns: It's the default for most workloads

- Provisioned IOPS (io1):

- It's SSD based storage (Small IO size)

- Its performance dominant attribute: IOPS

- Max IOPS / volume: up to 64,000

- Max Throughput / volume: 1,000 MiB/s

- Size: 4 GiB- 16 TiB

- Use case patterns: applications that require sustained IOPS performance with small IOPS size

- Throughput Optimized (st1):

- It's HDD based storage (Large IO size)

- Its performance dominant attribute: Throughput

- Max Throughput / volume: 500 MiB/s

- Max IOPS / volume: 500

- Size: 500 GiB - 16 TiB

- It has a Low storage cost

- Use case patterns: It's used for frequently accessed, throughput-intensive workloads; it can't be a boot volume

- Cold HDD (sc1):

- It's HDD based storage (Large IO size)

- Its performance dominant attribute: Throughput

- Max Throughput / volume: 250 MiB/s

- Max IOPS / volume: 250

- Size of 500 GiB - 16 TiB

- It has the lowest storage cost

- Use case patterns: Infrequently accessed data, Cannot be a boot volume (See use case)

- It could be created at the same time as an instance is created

- It could be created from scratch (type, size, AZ, encryption, tags, ...)

- It could be created (restored) from a snapshot

- It could be created in any AZ within the snapshot region

- If a snapshot isn't encrypted, we could choose weather or not to create an encrypted volume

- If a snapshot is encrypted, we can only create an encrypted volume

- EBS-Optimized vs. non-EBS-Optimized instances:

- Legacy non-EBS-optimized instances:

- It used a shared networking path for data and storage communications

- It resulted in lower performance for storage and normal networking

- EBS-optimized mode:

- It was historically optional

- It's the default now

- It adds optimizations and dedicated communication paths for storage and traditional data networking

- It allows consistent utilization of both

- It's one required feature to support higher performance storage

- Legacy non-EBS-optimized instances:

- More details:

Storage: Instance Store (Ephemeral) Volume

- It's a non persistent storage

- It's based on Non-Volatil Memory Express NVMe

- It has the highest Throughtput and IOPS (data is accessed simultaneously because of its thousands of queues and commands in each queue)

- Its data is lost when:

- The underlying disk drive fails

- The underlying EC2 host fails

- The instance stops or terminates

- It's located within the the EC2 Host hardware

- It's included as part of its instance's usage cost

- There're EC2 instances that include:

- Instance store volumes only: to create it:

- Choose an AMI from Community AMIs > Select "Root Device Type"

- Filter: "Instance Store" > choose a machine

- A mix of Instance store volumes and EBS, to create it:

- Choose an instance which "Instance Storage (GB)" is different from "EBS Only"

- EBS volume only, to create it:

- Choose an instance which "Instance Storage (GB)" is "EBS Only"

- Instance store volumes only: to create it:

- For more details

Storage: Snapshot

- It's an Incremental backup

- It doesn't have the limitation of incremental backup:

- A restore could be not possible if an intermediate backup (Backup i) is lost

- To restore a backup at time "t", all backups from 1 to t will be used

- It's stored in S3:

- It doesn't have a storage limitation

- It's crash consistent:

- It's consistent to their point-in-time

- It's done transparently from the OS and any applications that are inside the instance

- It could potentially contain data in an inconsistent state: data that isn't persisted is lost

- Crash Consistent vs. Application consistent

- It could be created from a volume:

- If a volume is encrypted, the snapshot will be encrypted

- If a volume isn't encrypted, the snapshot won't be encrypted

- It could be created from another snapshot:

- It could be done by using "Copy Snapshot" feature

- It could be done from one region to a new region

- If the source snapshot isn't encrypted, the target snapshot could be encrypted

- If the source snapshot is encrypted, the target snapshot will be encrypted

- Snapshot Lifecycle Policy:

- It allows to automate snapshot creation

- It's run periodically

- It requires to be attached to an IAM role

- Automating the Amazon EBS Snapshot Lifecycle

- For more details

Amazon Machine Image (AMI)

- It's stored in S3

- It contains base OS and any "baked" components

- Instance Store-backed AMI:

- It's for instance store backed instance

- It creates an instance with an instance store backed root volume

- It's created from a template which includes bootstrapping code

- EBS-backed AMI

- It's for EBS-backed instance

- It creates an instance with an EBS backed root volume

- It references 1 or more Snapshots

- It contains Block device mapping:

- It links its snapshots to how they're mapped to the new instance

- It's used when an instance is created to map its volumes to the instance

- It contains permission: who can use it to create a new instance:

- It's by default private for the account it is created in

- It could be shared with specific AWS accounts (no encryption)

- It could be public (no encryption)

- For more details

Network: Elastic Network Interface (ENI)

- It's a logical networking component in a VPC that represents a virtual network card

- It's attached to 1 Subnet (and 1 VPC, consequently)

- It can be associated with a max of 5 Security Groups

- Each EC2 instance is created with a Primary ENI device (eth0):

- Additional ENI devices (eth1 ...) could be added to an EC2 instance, if supported

- Elastic Network Interfaces

Network: Private IP

- It's associated to an ENI device

- Primary private IP @:

- It's associated with the primary ENI device (eth0)

- It's created during the instance launch process

- It's static: it remains unchanged during instance lifetime

- It remains unchanged when an instance is in stopped state

- It remains unchanged when an stopped instance is restarted

- It's known by the instance OS: it's displayed by ifconfig command

- Secondary private IPs:

- They're assigned when supported by the instance type the ENI is attached to

- Are They known by the instance OS? Are they displayed by ipconfig

- They're within the IP range of the subnet their instance is associated with

Network: Public IP

- It could be associated to an EC2 instance

- It isn't configured on an instance itself

- A NAT is done to translate between the private and the public addresses

- See Internet Gateway in VPC description

- It's unknown by the instance OS

- It isn't displayed by ipconfig command

- It's dynamic:

- It's released when an instance is stopped

- It's released when an Elastic API is allocated to an instance (To check)

- There's not any public IP attached to a stopped instance

- It's changed when a stopped instance starts

- because the EC2 instance moves to a new physical EC2 host

Network: Elastic IP (EIP)

- It's Static

- It's Public

- It's picked from AWS Elastic IP pool (it's NOT AZ specific)

- It replaces the normal public IP when it's allocated to a public instance:

- It changes the instance public DNS name

- It remains unchanged even if the instance is stopped

- When it's disassociated from an EC2 public instance, a new public IPv4 and public DNS are released and associated with the EC2 instance

- It can be moved to a new instances

- It's charged (because they're in short supply)

Network: Private DNS

- It only works inside its internal network (VPC)

- It's based on the primary Private IP

- Format: ip-0-0-0-0.ec2.internal

- E.g., an EC2 instance which private IPv4 is 172.31.9.16, the private DNS will be ip-172-31-9-16.ec2.internal

- It remains unchanged during instance stop/start

- It's released when the instance is terminated

Network: Public DNS

- Resolution:

- It's resolved to the public address externally

- It's resolved to the the private address internally (in its VPC)

- When it's pinged inside the EC2 instance VPC, the private IP is returned

- When it's pinged outside of EC2 instance VPC (E.g., Internet), the public IP is returned

- It's based on the Public IP (Public IP or Elastic IP):

- Format: ec2-0-0-0-0.compute-1.amazonaws.com

- E.g., an EC2 instance with a public IPv4 54.164.90.18, its public DNS is: ec2-54-164-90-18.compute-1.amazonaws.com

- It's dynamic:

- It's released when an instance is stopped

- There's not any public DNS attached to a stopped instance

- It's changed when a stopped instance starts

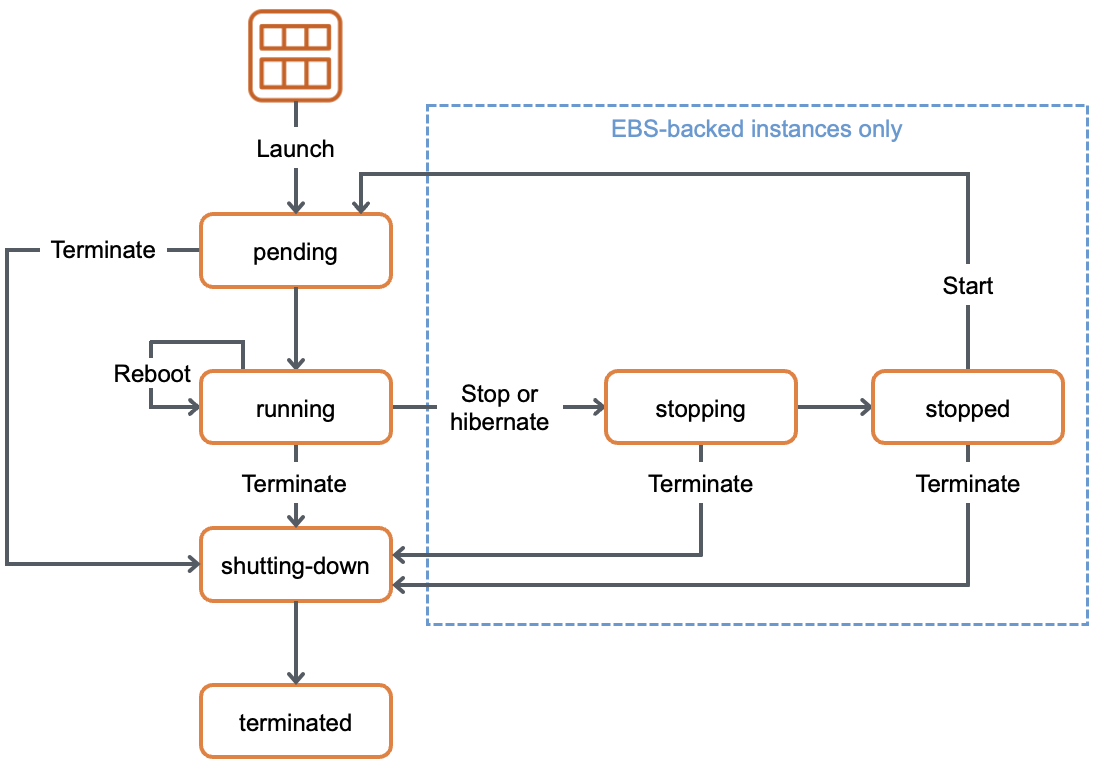

Operations

- EBS backed-instance Pending:

- A new instance is launched in a host within the selected AZ (Subnet)

- EBS and/or Instance store volumes are created and attached to the instance

- A default ENI (eth0) is attached to the instance:

- A private IP within the EC2 subnet IP range is created

- A private DSN name is associated with the instance

- A public IP is created and mapped to the instance eth0, if applicable (a public subnet + public IP sitting is enabled)

- Bootstrap script is run

- EBS backed-instance Stopping:

- It performs a normal shutdown and transition to a stopped state

- All EBS volumes are kept

- All Instance store volumes are detached from the instance (their data is lost)

- Plaintext DEK is discarded from EC2 Host hardware, if applicable

- Private DNS, IPv4 & IPv6 are unchanged

- Public DNS, IPv4 & IPv6 are released from the instance, if applicable (in case of public Subnet)

- Charges related to the instance (instance and instance store volumes) is suspended

- Charge related to EBS storage remains

- EBS backed-instance Stopped:

- Attach/detach EBS volumes

- Create an AMI

- Create a Snapshot

- Scale down/up: Change the kernel, ram disk, instance type

- EBS backed-instance Starting (from stopped):

- An instance is launched in a new the host and in the intial AZ

- EBS volumes are attached to the new instance

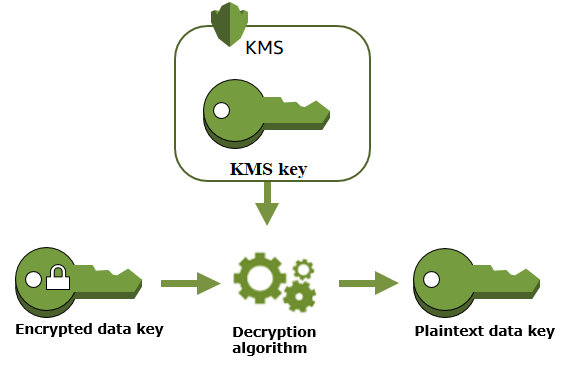

- Encrypted EBS volumes DEK is decrypted by KMS, if applicable

- The plaintext DEK is stored in EC2 host hardware, if applicable

- Bootstrap script is run?

- Instance store volumes are back to their initial states when the instance was 1st started (or impacted by bootstrapping)

- Private DNS, IPv4 & IPv6 are unchanged

- New Public DNS, IPv4 & IPv6 are attached to the instance, if applicable (in case of public Subnet)

- EBS backed-instance Rebooted:

- The EC2's plaintext DEK is discarded

- "Starting" action are run?

- EBS backed-instance Terminating:

- Private IPv4 & IPv6 are released from the instance

- Public IPv4 & IPv6 are released from the instance

- For more details

Performance

- Use EBS-Optimized Instances (See EBS)

- EBS Optimization:

- It's about the performance of restoring a volume from a Snapshot

- When we restore a volume from a snapshot, it doesn't immediately copy all that data to EBS

- Data is copied as It's requested

- So, we get the max performance of a the EBS volume, only when all that data has been copied across in the background

- Solution: to perform a read of every part of that volume in advance before It's moved into production

- To ensure that our restored volume always functions at peak capacity in production, we can force the immediate initialization of the entire volume using dd or fio

- For more details:

- Enhanced Networking - SR-IOV:

- It stands for Single Root I/O volume

- Opposite to the traditional network virtualization

- Which is using Multi-Root I/O Volume (MR-IOV)

- A software-based hypervisor is managing virtual controllers of virtual machines to access one physical network card

- It's slow

- SR-IOV allows virtual devices (controllers) to be implemented in hardware (virtual functions)

- In other words, it allows a single physical network card to appear as multiple physical devices

- Each instance be given one of these (fake) physical devices

- This results in faster transfer rates, lower CPU usage, and lower consistent latency

- EC2 delivers this via the Elastic Network Adapter (ENA) or Intel 82599 Virtual Function (VF) interface

- Fore more details

- Enhanced Networking - Placement Groups:

- It's a good way to increase Performance or Reliability

- Clustered Placement Group:

- Instances grouped within a single AZ

- It's good to increase performance

- It's recommended for application that need low network latency, high network throughput (or both)

- Only certain instances can be launched in to a clustered group

- Spread Placement Group:

- It's good to increase availability

- Instances are each individual placed on distinct underlying hardware (separate racks)

- It's possible to have spread placement groups inside different AZ within one region

- So if a rack does go through and fail, It's only going to affect 1 instance

- Partition Placement Group:

- It's good to increase availability for large infrastructure platforms where we want to have some visibility of where those instances are from a partition perspective

- Similar to spread placement group except there are multiple EC2 instances within a partition

- Each partition within a placement group has its own hardware (own set of racks)

- Each rack has its own network and power source

- It allows to isolate the impact of hardware failure within our application

- If needed, we can even make it automated, if we give that information to our applications itself, it can have visibility over its infrastructure placement

- Multiple EC2 instances HDFS, HBase, and Cassandra

- Dedicated Hosts:

- Physical server dedicated for our use for a given type and size (Type and Size are inputs)

- The number of instances that run on the host is fixed - depending on the type and size (see print screen below)

- It can help reduce cost by allowing us to use our existing server-bound software licenses

- It can be purchased On-Demand (hourly)

- Could be purchased as a reservation for up to 70% off On-Demand price

- Amazon EBS Volume Performance on Linux Instances

Scalability

Resilience

- An instance is located in a subnet which is located in an individual AZ

- It uses EBS storage that is also located in the instance AZ

- If its AZ fails, the instance fails

- It's NOT resilient by design across an AZ

- EBS is automatically replicated within its AZ, though

- Solution to improve EC2 resiliency:

- See Spread Placement Group

- See Partition Placement Group

- See Auto Scalling Group with 1 desired instance; 1 min instance; 1 max instance

Disaster Recovery

- See EBS Snapshot Lifecycle Policy

Security

- Instance Role:

- It's a type of IAM Role that could be assumed by EC2 instance or application

- An application that is running within EC2,

- It'sn't a valid AWS identity

- It can't therefore assume AWS Role directly

- They need to use an intermediary called instance profile:

- It's a container for the role that is associated with an EC2 instance

- It allows applications running on EC2 to assume a role and

- It allows application to access to temporary security credentials available in the instance metadata

- It's attached to an EC2 instance at launch process or after

- Its name is similar to the IAM role's one It's associated to

- It's created automatically when using the AWS console UI

- Or It's created manually when using the CLI or Cloud Formation

- EC2 AWS CLI Credential Order:

- (1) Command Line Options:

- aws [command] —profile [profile name]

- This approach uses longer term credentials stored locally on the instance

- It's NOT RECOMMENDED for production environments

- (2) Environment Variables:

- You can store values in the environment variables: AWS_ACCESS_KEY_ID, AWS_ACCESS_KEY, AWS_SESSION_TOKEN

- It's recommended for temporary use in non-production environments

- (3) AWS CLI credentials file:

- aws configure

- This command creates a credentials file

- Linux, macOS, Unix: it's stored at ~/.aws/credentials

- Windows, it's store at: C:\Users\USERNAME.aws\credentials

- It can contain the credentials for the default profile and any named profiles

- This approach uses longer term credentials stored locally on the instance

- It's NOT RECOMMENDED for producuon environments

- (4) Container Credentials:

- IAM Roles associated with AWS Elastic Container Service (ECS) Task Definitions

- Temporary credentials are available to the Task's containers

- This is recommended for ECS environments

- (5) Instance Profile Credentials

- IAM Roles associated with Amazon Elastic Compute Cloud (EC2) instances via Instance Profiles

- Temporary credentials are available to the Instance

- This is recommended for EC2 environments

- (1) Command Line Options:

- Security Group (SG):

- It acts at the instance level, not the subnet level

- See VPC description

- Network Access Control List (NACL):

- It acts at the subnet level

- See VPC description

- Snapshot Permission:

- It's by default private for the account it is created in

- It could be shared with specific AWS accounts if it's not encrypted

- It could be public if it's not encrypted

- AMI Permission:

- Same as Snapshot permission

- Encryption in Transit:

- It's done by EC2 host hardware to encrypt data in transit between an EC2 instance and its EBS storages

- Encryption At Rest:

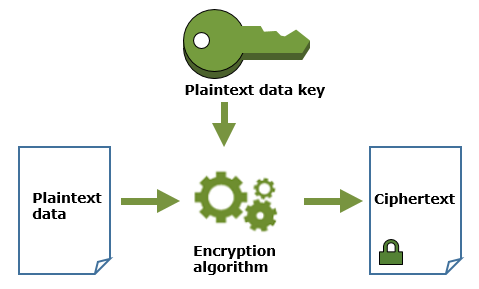

- It's done by EC2 host hardware to encrypt/decypt EC2 volumes:

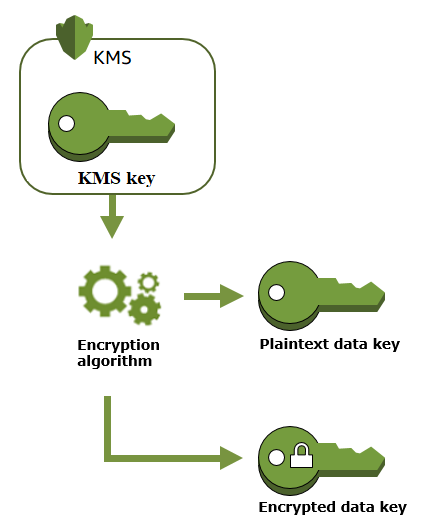

- It uses AWS KMS CMK to generates a Data Encryption Key (DEK) in each region

- AWS KMS encryption/decryption is supported by most instance types (Especially those that use the Nitro Platform)

- AWS KMS isn't encrypting neither It's decrypting EBS data

- It stores the DEK with each EC2 EBS volume

- It uses the same DEK to encrypt any EC2 volume, snapshots, AMIs

- It doesn't impact EC2 performance:

- EC2 instance and OS see plaintext data

- EC2 host hardware:

- It uses AWS KMS to decrypt EC2 DEK by using the related AWS KMS CMK

- It stores the plaintext DEK in its memory

- It uses the plaintext DEK to encrypt (decrypt) data from (into) EC2 instance to (from) an EBS volume

- It erases the plaintext DEK when the instance is stopped/rebooted

- Encryption from an OS perspective:

- It requires to use an OS level encryption available on most OS (Microsoft Windows, Linux)

- It ensures that data is encrypted from from the OS perspective

- It's possible to use OS encryption + EC2 volume encryption at rest

- Snapshot: when an encrypted EBS snapshot is copied into another region:

- A new CMK should be created in the destination region

- The new snapshot will be encrypted

- For more details:

Monitoring

- VolumeReadBytes:

- VolumeWriteOps:

- VolumeThroughputPercentage:

- Monitoring the Status of Your Volumes

Pricing

- On Demand:

- It allows to pay a fixed rate by second with a minimum of 60 seconds

- No commitment and It's the default

- Spot:

- It enables to bid whatever price we want for instance capacity

- It's exactly like the stock market: it goes up and down (The price moves around)

- When Amazon have excess capacity (there're available EC2 servers capacity. Not used)

- Amazon drops then the price of their EC2 instances to try and get people to use that spare capacity

- The maximum price indicate the highest amount the customer is willing to pay for an EC2 instance

- We get the price that we want to bid at,

- if it hits that price we have our instances

- If it goes above that price then we're going to lose our instances within 2 minutes window

- The default behavior is to automatically bid the current spot instance price

- The price fluctuates, but will never exceed the normal on-demand rates for EC2 instances

- Real examples: https://aws.amazon.com/ec2/spot/testimonials/

- Spot Fleet:

- It's a container for "capacity needs"

- We can specify pools of instances of certain types/sizes aiming for a given "capacity"

- A minimum percentage of on-demand can be set to ensure the fleet is always active

- Reserved:

- Contract terms: 1 or 3 Year Terms

- Payment Option: No Upfront, Partial Upfront, All Upfront (max cost saving)

- It could be Zonal: The capacity is then reserved in the specific zone (capacity reservation). So if there capacity constraint on a zone, those with zonal reserved instances are prioritized

- It could be also on regional: The capacity isn't reserved in a particular region's zone? (more flexibility)

- It offers a significant discount on the hourly charge for an instance

- Standard Reserved Instance:

- Up to 75% off on demand instances

- The more we pay up front and the longer the contract, the greater the discount

- Convertible Reserved Instances:

- Up to 54% off on demand capacity to change the attributes of the RI as long as the exchange results in the creation of reserved instances of equal or greater value

- So it allows you to change between your different instance families

- E.g.: We have an EC2 R5 instance that is very high ram with Ram utilization; we would like to convert it to EC2 C5 instance that has very very good CPE use

- Scheduled Reserved Instances:

- They're available to launch within the time windows we reserve (predictable: fraction of a day/week/month)

- E.g. 1: We run a school

- E.g. 2: We need to scale when everyone comes in at 9:00 on logs

- Capacity Priority: how AWS resolves capacity constraint:

- Zonal Reserved instance are guaranteed to get the reserved instances on the zone

- On Demand instances

- Spot instances

Use cases

- EC2 instance:

- Monolothic application that require a traditional OS to work

- EC2 AMI:

- AMI baking (or AMI pre-baking):

- Base installation:

- Immutable architecture:

- It's a technique where servers (EC2 here) are never modified after they're created

- E.g., if a web app. failed for unknown reasons,

- Rather than connecting to it, performing diagnostics, fixing it and hopefully getting it back into a working state,

- We could just stop it and launch a brand new one from its known working AMI and

- Optionally investigate offline the failed instance if necessary or terminate it

- Scaling and High-availability: see ASG

- EC2 storge:

- General Purpose (gp2) is the default for most workloads

- Recommended for most workloads

- System boot volumes

- Virtual desktops

- Low-latency interactive apps

- Development and test environments

- Provisioned IOPS (io1):

- Critical applications that require sustained IOPS performance, or more than 16,000 IOPS or 250 MiB/s of throughput per volume

- Large database workloads: MongoDB, Cassandra

- Applications that require sustained IOPS performance

- Throughput Optimized (st1):

- Frequently accessed,

- Throughput-intensive workloads

- Streaming workloads requiring consistent, fast throughput at a low price

- Big data,

- Data warehouses

- Log processing

- It cannot be a boot volume

- Cold HDD (sc1):

- Throughput-oriented storage for large volumes of data that is infrequently accessed

- Scenarios where the lowest storage cost is important

- It cannot be a boot volume

- General Purpose (gp2) is the default for most workloads

- EC2 ENI:

- To use for applications that are Media Access Control (MAC) address dependent

- It's possible to create it with a fixed MAC address

- It ensures that the MAC address of the EC2 instance will not change even if the instance restarts or reboots

- To use for applications that are Media Access Control (MAC) address dependent

- Princing models:

- On Demand:

- Application with short term, spiky, or unpredictable workloads that can't be interrupted

- Application being developed or tested on Amazon EC2 for the 1st time

- Spot:

- Good for stateless parts of application (servers)

- Good for workloads that can tolerate failures

- Applications that have flexible start and end times

- Applications that are only feasible at very low compute prices

- Users with urgent computing needs for large amounts of additional capacity

- Spot instances tend to be useful for dev/test workloads, or perhaps for adding extra computing power to large-scale data analytics projects

- Antipattern: spot isn't suitable for long-running workloads and require stability and can't tolerate interruptions

- Spot Fleet:

- Reserved:

- Long-running, understood, and consistent workloads

- Applications that require reserved capacity

- Users able to make upfront payments to reduce their total computing

- On Demand:

- Placement Groups:

- Spread Placement Group:

- Applications that have a small # of critical instances that should be kept separate from each other: email servers, Domain controllers, file servers

- Partition Placement Group:

- Multiple EC2 instances HDFS, HBase, and Cassandra

- Spread Placement Group:

- Dedicated Hosts:

- Regulatory requirements that may not support multi-tenant virtualization

- Licensing which doesn't support multi-tenancy or cloud deployments

- We can control instance placement

Limits

- EBS max throughput / instance: 1,750 MiB/s

- EBS max IOPS / instance: 80,000 (instance store volume if more is needed)

- Max SG # / Instance (ENI): 5

- Max instance # / Spread Placement Group: 7 (SPG is located in a single AZ)

- Max Instance # / Partition Placement Group: 7 partitions per AZ

- Encryption is NOT supported by all Instance types

- Add/remove an instance store volume after an instance is created: Not possible

Best practices

- To create application-consistent Snapshot, it's recommended:

- To stop the EC2 instance or to Freeze applications running on it

- To start the snapshot only when

- The application running on the instance are running on backup mode

- The application running on the instance are "flushed" any in memory cache to disk

- To unfreeze (release the "Freeze" operation), as soon as the snapshot starts (snapshot is consistent to its point-in-time)

- Clustered Placement Group:

- We should always try to launch all of the instances that go inside a placement group at the same time

- AWS recommends homogenous instances within cluster placement groups

- We might get a capacity issue when we ask to launch additional instances in an existing placement group

Description

- It's a Function as a Service (FaaS):

- It care of provisioning and managing the servers where to run the code

- It's an abstraction layer where AWS manages everything:

- Data centers, hardware, Assembly code/Protocols, OS, Application layer/AWS APIs, scaling

- All we need to worry about is our code

- It scales automatically: 2 requests => 2 independent functions are triggered

- It supports Event-Driven architecture:

- It runs our code in response to events: this includes schedule time

- These events could be changes to data in S3 bucket, or a DynamoDB table, etc

- These events are called triggers

- It runs in response to HTTP requests using AWS API Gateway or API calls made using the AWS SDKs

- It's stateless by design: each run is clean

- It supports different languages: Node.js, Java, Python, C#, PowerShell, Ruby

- It can consumes:

- Internet API endpoints or Other Services

- Other Lambda functions (a Lambda Function can trigger other Lambda functions)

- It could be allowed to access a VPC

- It allows access to private resources

- It's slightly slow to start

- It has an IP address

- It inherits any of the networking configuration inside the VPC (custom DNS, custom routing)

- ARN:

- Qualified ARN

- The function ARN with the version suffix

- arn:aws:lambda:aws-region:acct-id:function:my-function:$LATEST

- arn:aws:lambda:aws-region:acct-id:function:my-function:$Version$

- E.g. 1, arn:aws:lambda:aws-region:acct-id:function:helloworld:$LATEST

- E.g. 2, arn:aws:lambda:aws-region:acct-id:function:helloworld:1

- Unqualified ARN

- The function ARN without the version suffix

- arn:aws:lambda:aws-region:acct-id:function:my-function

- It can't be used to create an alias

- E.g. , arn:aws:lambda:aws-region:acct-id:function:helloworld

- Alias ARN:

- It's like a pointer to a specific Lambda function version

- It's used to access a specific version of a function

- A Lambda function can have one or more aliases

- arn:aws:lambda:aws-region:acct-id:function:my-function:my-alias

- arn:aws:lambda:aws-region:acct-id:function:helloworld:PROD

- arn:aws:lambda:aws-region:acct-id:function:helloworld:DEV

- For more details:

- Qualified ARN

Runtime environment

- It's a temporary environment where the code is running

- It's used by Lambda function to store some files:

- E.g., Libraries when Lambda includes additional libraries

- When a lambda function is executed, it's downloaded to a fresh runtime environment

- Limit: 128 MB to 3008 MB

Triggers

- API Gateway: api application-services

- AWS IoT

- Alexa Skills Kit

- Alexa Smart Home

- Application Load Balancer

- CloudFront

- CloudWatch Events

- CloudWatch Logs

- CodeCommit

- Cognito Sync Trigge: authentication aws identity mobile-services sync

- DynamoDB

- Kinesis

- S3

- SNS

- SQS

Scalability

- It scales automatically: 2 requests => 2 independent functions are triggered

- When it's used with a VPC, we must make sure that our VPC has sufficient ENI capacity to support the scale requirements of our Lambda function:

- ENI capacity = Projected peak concurrent executions * (Memory in GB / 3 GB)

- Peak Concurrent Execution = Peak Requests per Second * Average Function Duration (in seconds)

- Scaling Lambdas inside a VPC

- VPC Configuration

- Configuring a Lambda Function to Access Resources in a VPC

- Reserved concurrency:

- Concurrency is subject to a Regional limit that is shared by all functions in a Region (see limit section)

- When a function has reserved concurrency, no other function can use that concurrency

- It ensures that it can scale to, but not exceed, a specified number of concurrent invocations

- It ensures to not lose requests due to other functions consuming all of the available concurrency

- For more details

- AWS Lambda Function Scaling

Consistency

Resilience

- It's HA

- It runs in multiple AZs to ensure that it's available to process events in case of a service interruption in a single AZ

- For more details

Disaster Recovery

Security

- Execution role:

- It's the role that Lambda assumes to access AWS services

- It gets temporary security credentials via STS

- It's basic permission is to CloudWatch

- Resource Policies:

- It allows to give give a service, resource, or account access to a Lambda function

- It could be applied on a function or to one of its versions

- It's updated either through the AWS CLI or the AWS DSK (it's NOT possible through AWS Console)

- Using Resource-based Policies for AWS Lambda

- Lambda Function Versions and Ressource Policies

Monitoring/Auditing/Debugging

- AWS X-Ray:

- It collects data about events that a function processes,

- It identifies the cause of errors in an serverless applications

- It lets trace requests to an application's API, function invocations, and downstream calls

- CloudWatch

- 1 Log Group per Lambda Function

- 1 Log Stream per period of time

Pricing

- Number of Requests:

- 1st 1 million requests are free

- $0.20 per 1 million requests

- Duration and Memory:

- It's calculated from the time our code begins executing until it returns or terminates

- It's rounded up to the nearest 100ms

- It depends on the amount of memory we allocate to our function

- We're charged $0.00001667 for every GB-second used

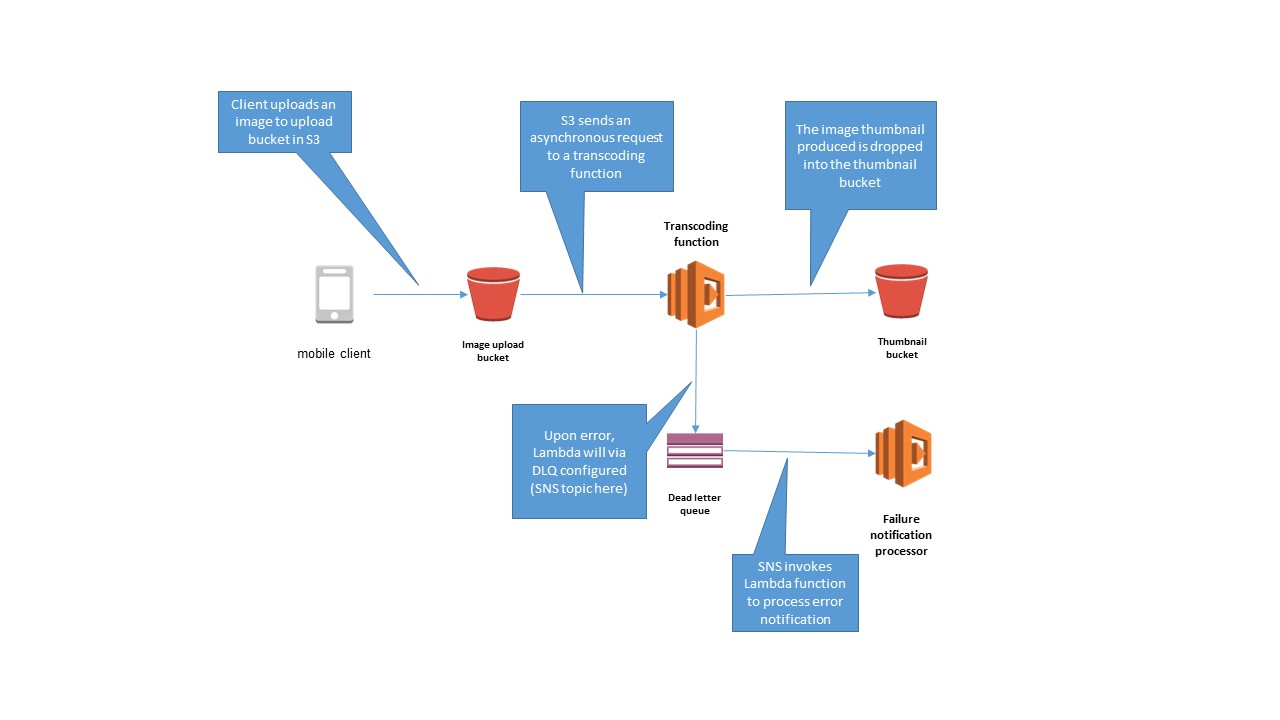

Use cases

- Lambda:

- Robust Serverless Application Design with AWS Lambda Dead Letter Queues (DLQ)

- Failed events are sent to a specified SQS Queue

- SNS invokes Lambda function to process error notification

- For more details

- Robust Serverless Application Design with AWS Lambda Dead Letter Queues (DLQ)

- Alias:

- To define multiple version

- PROD

- DEV-UNIT

- DEV-ACCP

- To avoid deployment overhead when a Lambda function versions changes:

- When an Event sources is mapping configuration is used with alias ARN, no change is required when the function version changes

- When a resource policy is created with an alias ARN, no change is required when the function version changes

- To define multiple version

Limits

- Function timeout: 900 s (15 minutes)

- Function memory allocation: 128 MB to 3,008 MB, in 64 MB increments

- Max concurrent executions: 1,000 per Region shared by all functions in a Region (default limit: it can be increased)

- Max /tmp directory storage size: 512 MB

- For more details

Best practices

- If a Lambda function is configured to connect to a VPC, specify subnets in multiple AZs to ensure high availability

- Manage RDS Connections from AWS Lambda Serverless Function:

- Ensure that the maximum number of connections configured for an RDS database is less than the Lambda function Peak Concurrent Execution

- More details

- Scaling Lambdas inside a VPC:

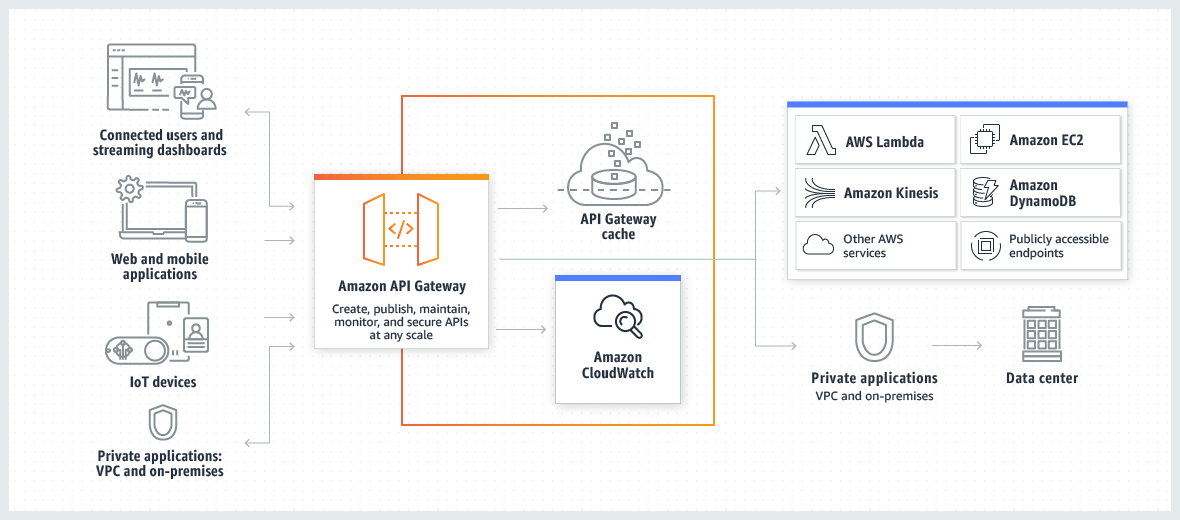

Description

- It's a fully managed Web API service

- It supports: Rest API and WebSocket API

- Expose HTTPS endpoints to define a RESTful API

- Serverless-ly connect services like Lambda & DynamoDB

- It could access directly some services without the need for any intermediate compute

- It allows to maintain multiple versions of our API (Unit, Acceptance, Production APIs for example)

- E.g., it allows access to data stored in DynamoDB with a Lambda function

Configuration

- Protocol: Rest API, WebSocket API

- Define an API (container):

- Define Resources and nested Resources (URL paths):

- For each Resource:

- Select supported HTTP methods

- Set security

- Choose target (EC2, Lambda, DynamoDB)

- Set request and Response transformations

Integration

- VPC Link to integrate on-premises backend solutions through DirectConnect and private VPC

Deployment

- It Uses API Gateway domain, by default

- It can use custom domain

- It supports AWS Certificate Manager: free SSL/TLS certificates

Scalability

- It scales effortlessly

- API Gateway Caching:

- It caches endpoints' responses (E.g., DynamoDB endpoint)

- It allows to reduce the # of calls made to an endpoint (reduce costs)

- It allows to improve APIs latency

- It requires to be enabled

- It requires to specify a TTL (time-to-live) period in seconds

- Enable Amazon API Gateway Caching

Consistency

Resilience

Disaster Recovery

Security

- It throttles requests to prevent attacks:

- It sets a limit on a steady-state rate and a burst of request submissions against all APIs in an account

- It's using the token bucket algorithm where the token counts for a request

- The steady-state rate:

- The number of requests per second and API Gateway can handle

- It's set to 10,000 by default

- The burst:

- It's the maximum bucket size across all APIs within an AWS account

- It's the number of concurrent request submissions that API Gateway can fulfill at any moment without returning 429 Too Many Requests error reponse

- By defaut it's set to 5,000

- It fails the limit-exceeding requests and returns 429 Too Many Requests error to the client, when request submissions exceed the steady-state rate and bust limits

- E.g. 1, If a caller sends 10,000 req. in a 1 second period evenly (10 req/ms), API Gateway processes all req. without dropping any

- E.g. 2, If a caller sends 10,000 req. in the 1st ms, API Gateway serves 5,000 of those req. and throttles the rest in the 1-second period

- E.g. 3, If a caller sends 5,000 req. in the 1st ms and then evenly spreads another 5,000 req. through the remaining 999 ms (~5 req/ms), API Gateway processes all 10,000 req. in the 1-second period without returning 429 error error responses

- E.g. 4, If a caller sends 5,000 req. in the 1 ms and waits until the 101st ms to send another 5,000 requests,

- API Gateway processes 6,000 req. and throttles the rest in the 1-second period

- This is because at the rate of 10,000 rps, API Gateway has served 1,000 requests after the first 100 ms and thus emptied the bucket by the same amount

- Of the next spike of 5,000 requests, 1,000 fill the bucket and are queued to be processed

- The other 4,000 exceed the bucket capacity and are discarded

- E.g. 5, If a caller sends 5,000 req. in the 1st ms, sends 1,000 requests at the 101st ms, and then evenly spreads another 4,000 req through the remaining 899 milliseconds,

- API Gateway processes all 10,000 requests in the 1-second period without throttling

- Token Bucket Burst

- Throttle API Requests for Better Throughput

- Amazon API Gateway Usage Plans Now Support Method Level Throttling

- It's provided by default with "Distributed Denial-of-Service" (DDoS) attacks

- IAM Roles and Policies

- Resource Policy:

- It supports AWS Certificate Manager: free SSL/TLS certificates

- CORS (Cross-Origin Resource Sharing):

- It's a way to relax same-origin policy

- It allows different AWS components to talk to each other (They've different domain names: S3, CloudFront, API Gateway domain names)

- Enable CORS for an API Gateway REST API Resource

- Lambda authorizers

- Amazon Cognito user pools

- Client-side SSL certificates

- Usage plans

- Controlling Access to API Gateway APIs

Monitoring

- Cloud-Watch to log all requests for monitoring

- Access Loggin:

- It's to log who has accessed an API and how the called accessed it

- For more details

- AWS CloudTrail:

- It provides a record of action taken by an AWS user, role, or an AWS service in API Gateway

- For more details

Pricing

- API calls # +

- Data transferred Size +

- Caching required to improve performance

Use cases

- Migration: From On-premise monolith application to Cloud Serverless application

- Traditional APIs can be migrated to API Gateway in a monolithic form

- Then gradually they can be moved to a microservices architecture

- Finally, once components have been fully broken up to micro-services, a serverless and FaaS based architecture is possible

- v1: Monolith application in AWS:

- API Gateway can access some AWS services directly using prixy mode

- E.g. EC2 instances

- v2: Microservices:

- API Gateway + Amazon Fargate + Amazon Aurora

- v3: Serverless:

- API Gateway + AWS Lambda + Amazon DynamoDB

Limits

- Throttle steady-state request rate: 10,000 rps (default)

- The burst size: 5,000 requests across all APIs within an AWS account (default)

Best practices

Foundation

- Web-API and Rest

- Web Socket protocol

- Same-Origin policy

- CORS

- SSL/TLS certificates

- Micro-Services architecture

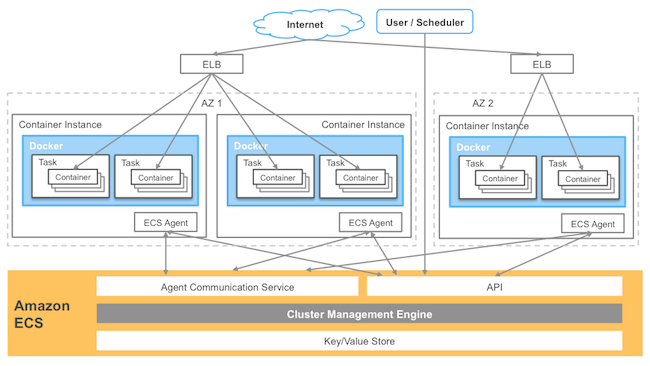

Description

- It's a managed container engine

- It allows Docker containers to be deployed and managed within AWS environments

- An ECS container instance:

- It's an EC2 instance

- It runs the ECS Container Agent

- A Cluster

- It's a container

- It's a logical collection of ECS resources (either ECS EC2 instances or ECS Fargate infrastructure)

- A Task Definition:

- It Defines an application

- It's similar to a Dockerfile but for running containers in ECS

- It can contain multiple containers

- It's used by ECS Placement Engine to create 1 or more running copies of a given application (Tasks)

- A Container Definition:

- Inside a task definition, it defines the individual containers a task uses

- It controls the CPU and memory each container has, in addition to port mappings for the container

- A Task is a copy of an application

- It's a single running copy of any containers

- It's defined by a task definition

- It's one working copy of an application (e.g., DB and web containers)

- It's usually made of 1 or two containers that work together

- E.g., an nginx container with a php-fpm container

- We can ask ECS to start or stop a task

- A Service:

- It allows task definitions to be scaled by adding additional tasks

- It defines minimum and maximum values

- A Registry

- It's a storage for container images

- It's used to download image to create containers

- E.g., Amazon Elastic Container Registry or Dockerhub

- 2 Modes: ECS can use infrastructure clusters based on EC2 or Fargate:

- ECS with EC2 Mode:

- ECS with Fargate mode

Architecture

EC2 Mode

- It's NOT serverless

- It resources are: Cluster + VPC + Subnet + Auto Scaling group with a Linux/Windows AMI

- Task: EC2 Task

- The container instance is owned and managed customers

Fargate Mode

- It's serverless

- It's a managed service: AWS manages the backing infrastructure

- Its resources are: Cluster + VPC (optional) + Subnets (optional)

- Task: Fargate Task

- Tasks are auto placed: AWS Fargate manages the task execution

- There's not any EC2 instances to manage anymore but behind the scene, it uses also EC2 instances

- Each task comes with a dedicated ENI, a private IP @

- All containers of the same task can communicate with each other via localhost

- Inbound and outbound task communication goes through the ENI

- A public IP @ can be enabled as well

Scalability

- EC2 Mode by Auto Scaling Group

- There's not obvious metric to scale a cluster

- There's not integration to scale when the task placement fails because of insufficient capacity

- ECS and ASG are not aware of each other: It makes task deployments very hard during cluster scale in or rolling updates via CloudFormation

- We have to scale down without killing running tasks which is an even more significant challenge for long lived tasks

- Fargate mode: Scale out and in automatically:

- For more details

Consistency

Resilience

- EC2 Mode:

- It's not resilient by design

- It's the responsability of customer to design it HA architecture (2 or 3 AZs)

- Fargate mode:

Disaster Recovery

Security

- A Task Role gives a task (an application) the permissions to interact with other AWS resources

Monitoring

Pricing

- EC2 Mode: ECS is free of charge. We only pay for the EC2 instances

- Fargate mode: We pay for running tasks

Use cases

Limits

Best practices

Description

- It's a virtual network within AWS: It's our private data center inside AWS platform

- It can be configured to be public/private or a mixture

- It's isolated from other VPCs by default

- It can't talk to anything outside itself unless we configure it otherwise

- It's isolated from network blast radius

- It's Regional: it can't span regions

- It's highly available: It's on multiple AZs which allows a HA (Highly Available) architecture

- It can be connected to our data center and corporate networks: Hardware Virtual Private Network (VPN)

- It supports different Tenancy types: it could be:

- Dedicated tenant: it can't be changed (Locked). It's expensive

- multi-tenant (default): it still could be switched to a dedicated tenant

IPv4 CIDR

- It's from /28 (16 IPs) to /16 (65,536 IPs)

- We need to plan in advance CIDR to support whatever service we will deploy in the VPC:

- We need to make sure our CIDR will support enough subnets

- We need to make sure our CIDR will let our subnets have enough IP addresses

- Some AWS services require a minimum number of IP addresses before they can deploy

- We need to plan a CIDR that allows HA architecture:

- We need to break our CIDR down based on the number of AZs we will be using and then

- We need to break down our CIDR based on the number of tiers (subnets) our VPC will have. E.g., public/private/db tiers

- We need to plan for future evolutions: additional AZs, additional tiers (subnets)

Types

- Default VPC:

- It's created by default in every region for each new AWS account (to make easy the onboarding process)

- It's required for some services:

- Historically some services failed if the default VPC didn't exist

- It was initially not something we could create, but we could delete it

- So if we delete, we could run into problems where certain services wouldn't launch,

- We needed to create a ticket to get it recreated on our behalf

- It's used as a default for most

- Its initial state is as follow:

- CIDR: default 172.31.0.0/16 (65,000 IP addresses)

- Subnet: 1 "/20" public subnet by AZ

- DHCP: Default AWS Account DHCP option set is attached

- DNS Names: Enabled

- DNS Resolution: Enabled

- Internet Gateway: Included

- Route table: Main route table routes traffic to local and Internet Gateway (see below)

- NACL: Default NACL allows all inbound and outbound traffic (see below)

- Security Group: Default SG allows all inbound traffic (see below)

- ENI: Same ENI is used by all subnets and all security group

- Custom VPC (or "Bespoke"):

- it can be designed and configured in any valid way

- Its initial state is as follow:

- CIDR: initial configuration

- Subnet: none

- DHCP: Default AWS Account DHCP option set is attached

- DNS Names: Disabled

- DNS Resolution: Enabled

- Internet Gateway: none

- Route table: Main route table routes traffic to local (see below)

- NACL: Default NACL allows all inbound and outbound traffic (see below)

- Security Group: Default SG allows all inbound traffic from itself; allows all outbound traffic (see below)

- ENI: none

DHCP Options Sets

- It stands for: Dynamic Host Configuration Protocol

- It's a configuration that sets various things that have provided to resources inside a VPC when they use DHCP

- It's a protocol that allows resources inside a network to auto configure their network card such as IP address

- It allows any instance in a VPC to point to the specified domain and DNS servers to resolve their domain names

- The default EC2 instance private DNS name is: ip-X-X-X-X.ec2.internal (Xs correspond to EC2 instance private IP digits)

- More details

DNS

- It stands for: Domain Name System

- There're 2 features related to DNS: VPC DNS hostnames and DNS Resolution

- It allows to associate a public DNS name to a VPC public instance

- The default EC2 instance public DNS name is: ec2-X-X-X-X.compute-1.amazonaws.com (Xs correspond to EC2 instance public IP digits)

- Public DNS name resolution:

- From outside EC2 instance VPC, it's resolved to the EC2 instance Public IP

- From inside EC2 instance VPC, it's resolved to the EC2 instance Private IP

Subnet

- Analogy: It's like a floor (or a component of it) in our data center

- Description: It's a part of a VPC

- Location: It's inside an AZ: subnets can't span AZs

- CIDR blocks:

- It can't be bigger than CIDR blocks of the VPC It's attached to

- It can't overlap with any CIDR blocks inside the VPC It's attached to

- It can't be created outside of the CIDR of the VPC It's attached to

- 5 Reserved IPs:

- Subnet's Network IP address: e.g., 10.0.0.0

- Subnet's Router IP address ("+1"): Example: 10.0.0.1

- Subnet's DNS IP address ("+2"): E.g., 10.0.0.2

- For VPCs with multiple CIDR blocks, the IP address of the DNS server is located in the primary CIDR

- For more details

- Subnet's Future IP address ("+3"): e.g., 10.0.0.3

- Subnet's Network Broadcast IP address ("Last"): E.g., 10.0.0.255

- For more details

- Security and Sharing:

- Share a subnet with Organizations or AWS accounts

- Resources deployed to the subnet are owned by the account that deployed them: so we can't update them

- The account we shared the subnet with can't update our subnet (what if there is a role that allow them so?)

- A subnet is private by default

- A subnet is Public if:

- If It's configured to allocate public IP

- If the VPC has an associated Internet Gateway

- If It's attached to a route table with a default route to the Internet Gateway

- Share a subnet with Organizations or AWS accounts

- Type:

- Default Subnet:

- It's a subnet that is created automatically by AWS at the same time as a default VPC

- It's public

- There is as many default subnets as AZs of the region where the default VPC is created in

- Custom Subnet: It's a subnet created by a customer in a costum VPC

- Default Subnet:

- Associations:

- Subnet & VPC:

- A subnet is attached to 1 VPC

- A VPC can have 1 or more subnets: The number of subnets depends on VPC CIDR range and Subnets CIDR ranges

- If all subnets have the same CIDR prefix, the formula would be: 2^(Subnet CIDR Prefix - VPC CIDR Prefix)

- For a VPC of /16, we could create: 1 single subnet of a /16 netmask; 2 subnets of /17; 4 subnets of /18; ... 256 subnets of /24

- Subnet & Route Table:

- A subnet must be associated with 1 and only 1 route table (main or custom)

- When a subnet is created, It's associated by default to the VPC main route table

- Subnet & NACL:

- A subnet must be associated with 1 and only 1 NACL (default or custom)

- When a subnet is created, It's associated by default to the VPC default NACL

- Subnet & VPC:

Router

- It's a virtual routing device that is in each VPC

- It controls traffic entering the VPC (Internet Gateway, Peer Connection, Virtual Private Gateway, ...)

- It control traffic leaving the subnets

- It has an interface in every subnet known as the "Subnet+1" address (is it the ENI?)

- It's fully managed by AWS

- It's highly available and scalable

Route table (RT)

- It controls what the VPC router does with subnet Outbound traffic

- It's a collection of Routes:

- They're used when traffic from a subnet arrives at the VPC router

- They contain a destination and a target

- Traffic is forwarded to the target if its destination matches the route's destination

- Default Routes (0.0.0.0/0 IPv4 and ::/0 IPv6) could be added

- A route Target can be:

- An IP @ or

- An AWS networking object: Egress-Only G., IGW, NAT G., Network Interface, Peering Connection, Transit G., Virtual Private G.,

- Location: -

- Types:

- Local Route:

- Its (Destination, Target) = (VPC CIDR, Local)

- It lets traffic be routed between subnets

- It doesn't forward traffic to any target because the VPC router can handle it

- It allows all subnets in a VPC to be able to talk to one another even if they're in different AZs

- It's included in all route tables

- It can't be deleted from its route table

- Static Route: It's added manually to a route table

- Propagated Route:

- It's added dynamically to a route table by attaching a Virtual Private Gateway (VPG) to the VPC

- We could then elect to propagate any route that it learned onto a particular route table

- It's a way that we can dynamically populate new routes that are learned by the VPG

- Certain types of AWS networking products (VPN, Direct Connect) can dynamically learn routes using BGP (Border Gateway Protocol)

- External networking products (a VPN or direct connect) that support BGP could be integrated with AWS VPC, they can dynamically generate Routes and insert them to a route table

- We don't need then to do it manually by a static route table

- Main Route table:

- It's created by default at the same time as a VPC It's attached to

- It's associated "implicitly" by default to all subnets in the VPC until they're explicitly associated to a custom one

- In a default VPC: it routes outbound traffic to local and to outside (Internet Gateway)

- In a custom VPC: It routes outbound traffic to local

- "Custom" route table:

- It could be created and customized to subnets' requirements

- It's explicitly associated with subnets

- Local Route:

- Routing Priority:

- Rule #1: Most Specific Route is always chosen:

- It's when multiple routes' destination maches with traffic destination

- A matched /32 destination route (a single IP address) will be always chosen first

- A matched /24 destination route will be chosen before a matched /16 destination route

- The default route matches with all traffic destination but will be chosen last

- Rule #2:

- Static routes take priority over the propagated routes

- When multiple routes' destination with same prefix maches with traffic destination and longest prefix match cannot be applied (Rule #1):

- Static is prefered over the dynamic ones

- A matched /24 destination static route will be always chosen first before a matched /24 destination propagated route

- More details

- Rule #1: Most Specific Route is always chosen:

- Associations:

- A RT could be associated with multiple subnets

- A subnet must be associated with 1 and only 1 route table (main or custom)

Internet Gateway (IGW)

- It can route traffic for public IPs to and from the internet

- It's created and attached to a VPC

- A VPC could be attached to 1 and only 1 Internet Gateway

- It doesn't applies public IPv4 addresses to a resource's ENI

- It provides Static NAT (Network Address Translation):

- It's the process of 1:1 translation where an internet gateway converts a private address to a public IP address

- It make the instance a true public machine

- When an Internet Gateway receives any traffic from an EC2 instance, if the EC2 has an allocated public IP:

- Then the Internet Gateway adjusts those traffic's packets (Layer 3 in OSI model)

- It replaces the EC2 private IP in the packet source IP with the EC2 associated Public IP address

- It sends then the packets through to the public Internet

- When an Internet Gateway receives any traffic from the public internet,

- It adjusts those packets as well,

- It replaces the Public IP @ in the packet source IP with the associate EC2 private IP address

- It sends then the packets to the EC2 instance through the VPC Router

Network Access Control Lists (NACL)

- It's a security feature that operates at Layer 4 of the OSI model (Transport Layer: TCP/UDP and below)

- It impacts traffic crossing the boundary of a subnet

- It doesn't impact traffic local to a subnet: Communications between 2 instances inside a subnet aren't impacted

- It acts FIRST before Security Groups: if an IP is denied, it won't reach security group

- It's stateless

- It includes Rules:

- There're 2 sets of rules: Inbound and Outbound rules

- They're explicitly allow or deny traffic based on: traffic Type (protocol), Ports (or range), Source (or Destination)

- Their Source (or Destination) could only be IP/CIDR

- Their Source (or Destination) can't be an AWS objects (NACL is Layer 4 feature)

- Each rule has a Rule #

- They're processed in number of order, "Rule #": Lowest first

- When a match is found, that action is taken and processing stops

- The "*" rule is an implicit deny: It's processed last

- Ephemeral Ports:

- When a client initiates communications with a server, it uses a well-known port #: e.g., TCP/443, ssh/22

- The response from the server is NOT always on the same port

- The client decides the ephemeral port (e.g., TCP/22000)

- This is why a NACL outbound rules need to include the ephemeral port range: 1024-65535:

- E.g, connection to a server with ssh

- NACL outbound rules should include: SSH (22) TCP (6) 22 0.0.0.0/0 ALLOW

- NACL outbound rules should include: Custom TCP Rule TCP (6) 1024 - 65535 0.0.0.0/0 ALLOW

- Location: It'sn't specific to any AZ

- Type:

- Default NACL:

- It's created by default at the same as the VPC It's attached to

- It's associated "implicitly" to all subnets as long as they're not associated explicitly to a custom NACL

- It Allows ALL traffic: Rule 100: Allow everything

- Custom NACL:

- It's created by users

- It should be associated "explicitly" to a subnet

- It blocks ALL traffic, by default: it only includes "*" rule only

- Default NACL:

- Associations:

- It could be associated with multiples subnets

- A subnet has to be associated with 1 NACL

Security Group (SG)

- It's a Software firewall that surrounds AWS products

- It a Layer 5 firewall (session layer) in OSI model

- It acts at the instance level, not the subnet level

- It could be attached/detached from an EC2 instance at anytime

- It's Stateful:

- The response to an allowed inbound (or outbound) request, will be allowed to flow out (or in), regardless of outbound (or inbound) rules

- If we send a request from our instance and It's allowed by the corresponding SG rule, its response is then allowed to flow in regardless of inbound rules

- More details (see Tracking)

- Comparison between Security Group and ACL (stateless)

- SG Rules include: Inbound and Outbound rule sets:

- Type: TCP

- Protocol: e.g., HTTP, SSH