An open-source AutoML & PyTorch Model Training Library

- 2021-10-7: v0.0.6 Release blog post

- 2021-10-5: Hacktoberfest 2021 Kickoff event

- 2021-10-4: Model Trainer support

- 2021-8-29: Migrated to Ray Tune

- 2021-8-25: Released first version 0.0.1 ✨ 🎉

!!! attention

🚨 GradsFlow is changing fast. There will be a lot of breaking changes until we reach 0.1.0.

Feel free to give your feedback by creating an issue or join our Slack group.

GradsFlow is an open-source AutoML Library based on PyTorch. Our goal is to democratize AI and make it available to everyone.

It can automatically build & train Deep Learning Models for different tasks on your laptop or to a remote cluster directly from your laptop. It provides a powerful and easy-to-extend Model Training API that can be used to train almost any PyTorch model. Though GradsFlow has its own Model Training API it also supports PyTorch Lightning Flash to provide more rich features across different tasks.

!!! info

Gradsflow is built for both beginners and experts! AutoTasks provides zero-code AutoML while

Model and Tuner provides custom model training and Hyperparameter optimization.

Recommended:

The recommended method of installing gradsflow is either with pip from PyPI or, with conda from conda-forge channel.

-

with pip

pip install -U gradsflow

-

with conda

conda install -c conda-forge gradsflow

Latest (unstable):

You can also install the latest bleeding edge version (could be unstable) of gradsflow, should you feel motivated enough, as follows:

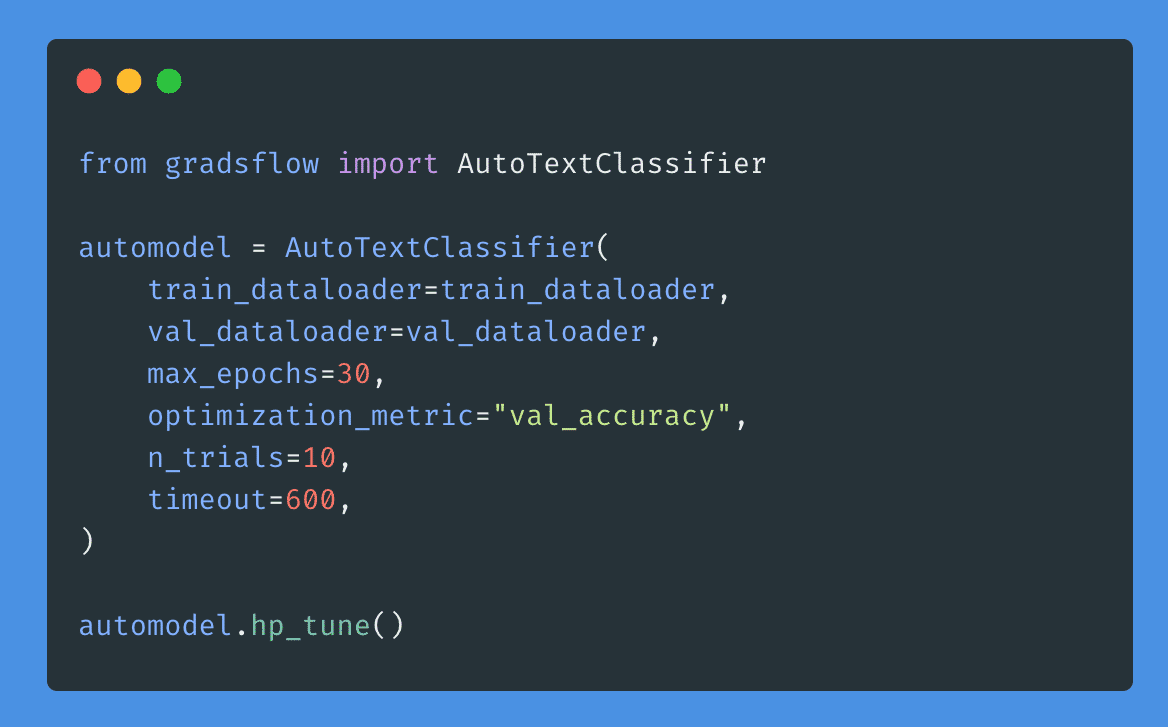

pip install git+https://github.com/gradsflow/gradsflow@mainAre you a beginner or from non Machine Learning background? This section is for you. Gradsflow AutoTask provides

automatic model building and training across various different tasks

including Image Recognition, Sentiment Analysis, Text Summarization and more to come.

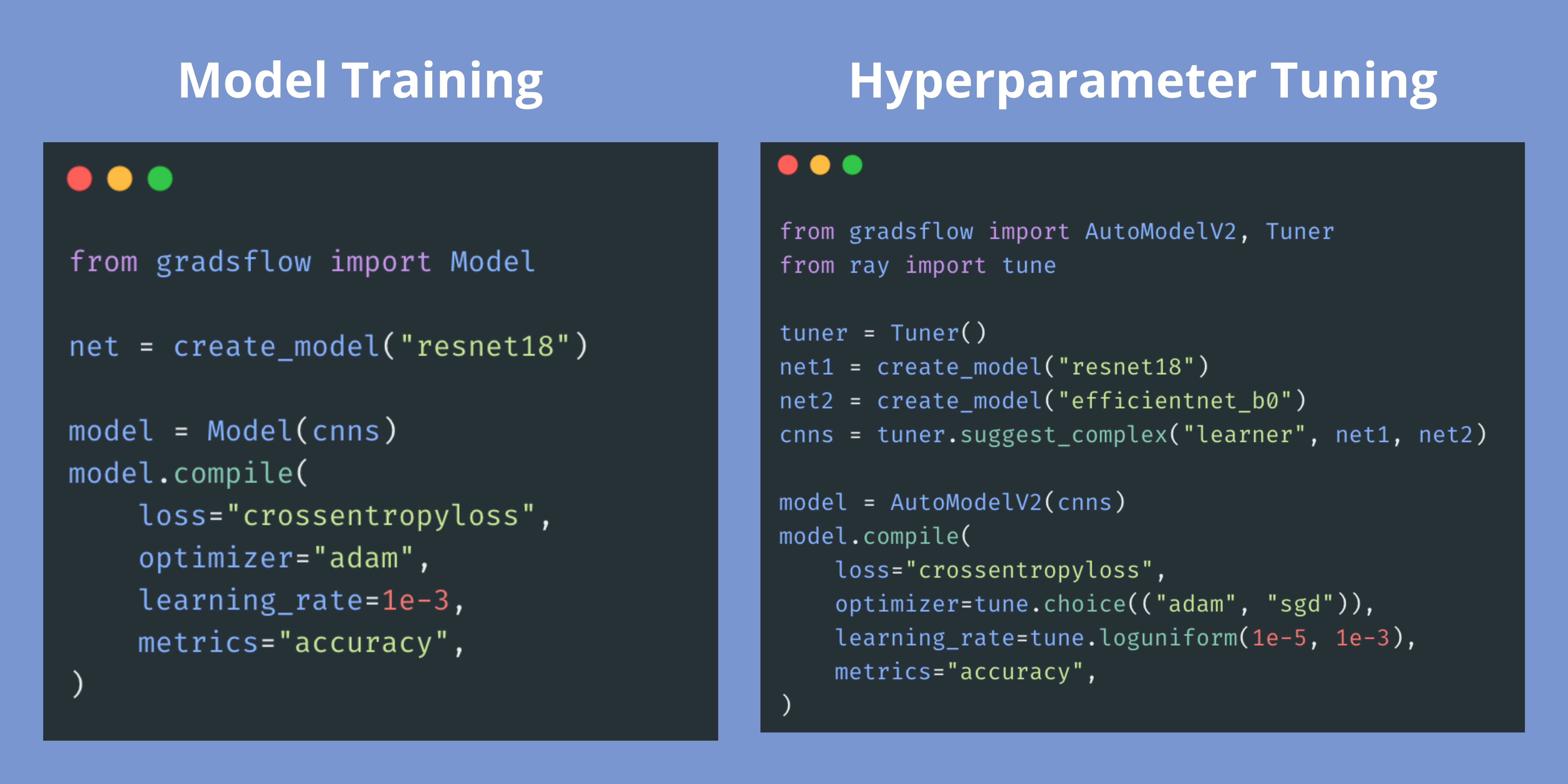

Tuner provides a simplified API to move from Model Training to Hyperparameter optimization.

-

gradsflow.core: Core defines the building blocks of AutoML tasks. -

gradsflow.autotasks: AutoTasks defines different ML/DL tasks which is provided by gradsflow AutoML API. -

gradsflow.model: GradsFlow Model provides a simple and yet customizable Model Training API. You can train any PyTorch model usingmodel.fit(...)and it is easily customizable for more complex tasks. -

gradsflow.tuner: AutoModel HyperParameter search with minimal code changes.

📑 Check out notebooks examples to learn more.

🧡 Sponsor on ko-fi

📧 Do you need support? Contact us at [email protected]

Social: You can also follow us on Twitter @gradsflow and Linkedin for the latest updates.

💬 Join the Slack group to chat with us.

Contributions of any kind are welcome. You can update documentation, add examples, fix identified issues, add/request a new feature.

For more details check out the Contributing Guidelines before contributing.

We pledge to act and interact in ways that contribute to an open, welcoming, diverse, inclusive, and healthy community.

Read full Contributor Covenant Code of Conduct

GradsFlow is built with help of awesome open-source projects (including but not limited to) Ray, PyTorch Lightning, HuggingFace Accelerate, TorchMetrics. It takes inspiration from multiple projects Keras & FastAI.