This is the official repository for the CVPRW 2023 paper EVREAL: Towards a Comprehensive Benchmark and Analysis Suite for Event-based Video Reconstruction by Burak Ercan, Onur Eker, Aykut Erdem, and Erkut Erdem.

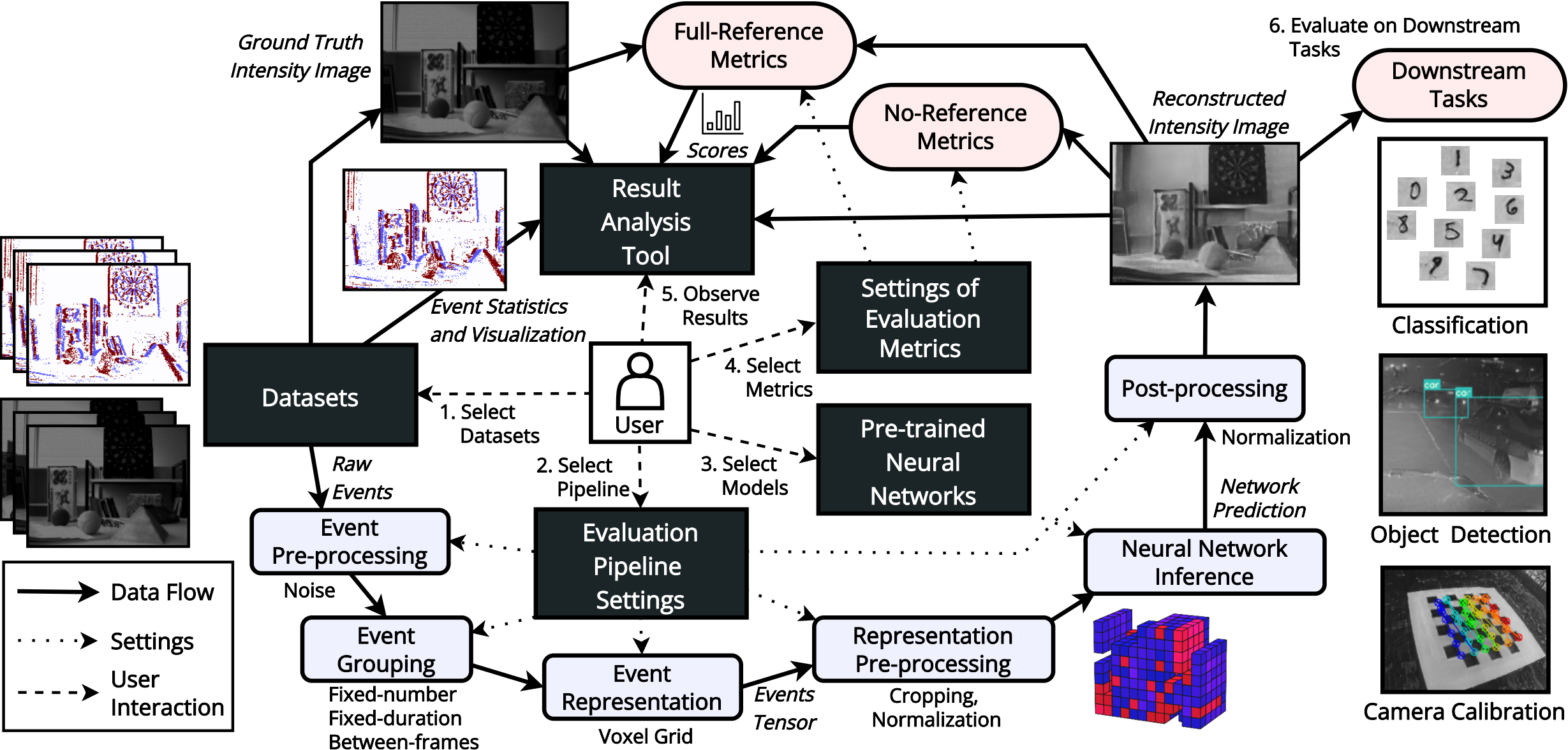

In this work, we present EVREAL, an open-source framework that offers a unified evaluation pipeline to comprehensively benchmark pre-trained, PyTorch-based neural networks for event-based video reconstruction, and a result analysis tool to visualize and compare reconstructions and their scores.

For more details, please see our paper.

For qualitative and quantitative experimental analyses, please see our project website or interactive demo.

- The second version of our EVREAL paper, which includes updated results, is now available on arXiv.

- Code for robustness analysis has been added. Please see the example commands below.

- Code for downstream tasks has been added. Please refer to the README file for downstream tasks for instructions on performing object detection, image classification, and camera calibration experiments.

- Code for color reconstruction has been added.

- Evaluation code has been published. Please see below for installation, dataset preparation, and usage instructions. (Code for robustness analysis and downstream tasks will be published soon.)

- In our result analysis tool, we also share results of a new state-of-the-art model, HyperE2VID, which generates higher-quality videos than the previous state of the art while also reducing memory consumption and inference time. Please see the HyperE2VID webpage for more details.

- The web application of our result analysis tool is ready now. Try it here to interactively visualize and compare qualitative and quantitative results of event-based video reconstruction methods.

- We will present our work in person at the CVPR Workshop on Event-Based Vision on the 19th of June 2023, during Session 2 (starting at 10:30 local time). Please see the workshop website for details.

To install the packages required for evaluation in a conda environment named evreal:

conda create -y -n evreal python=3.10

conda activate evreal

conda install pytorch torchvision pytorch-cuda=12.1 -c pytorch -c nvidia

pip install -r requirements.txt
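To confirm that the new environment is usable, a short Python check such as the sketch below can help. It is not part of EVREAL: it only tests whether `torch` and `torchvision` (installed by the conda command above) can be imported and, if PyTorch is present, whether it can see a CUDA device. The `REQUIRED` list is a hypothetical name; other packages from requirements.txt can be appended to it.

```python
import importlib.util
import sys

# Packages installed by the conda command above; requirements.txt may add more.
REQUIRED = ["torch", "torchvision"]


def check_environment(packages=REQUIRED):
    """Return a dict mapping each package name to True if it is importable."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
    status = check_environment()
    for name, ok in status.items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
    if status.get("torch"):
        import torch

        # True only if the CUDA build of PyTorch can see a GPU.
        print("CUDA available:", torch.cuda.is_available())
```

Run it inside the activated evreal environment; a `MISSING` entry means the corresponding install step did not succeed.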

EVREAL processes event datasets in a numpy memmap format similar to the format of the event_utils library. The datasets used for the experiments presented in our paper are the ECD, MVSEC, HQF, BS-ERGB, and HDR datasets.
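As a rough illustration of what a memmap format means in practice, the sketch below stores events as separate `.npy` files and reopens them with `np.load(..., mmap_mode="r")`, which returns a `numpy.memmap` whose slices are read from disk on demand instead of loading the whole file into RAM. The file names match the sequence layout shown further down; the helper names (`save_events`, `open_events`) and the dtypes are assumptions for illustration, not EVREAL's conversion code, which lives in the tools folder.

```python
import tempfile
from pathlib import Path

import numpy as np


def save_events(folder, xy, ts, p):
    """Hypothetical helper: store events as three separate .npy files."""
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)
    np.save(folder / "events_xy.npy", np.asarray(xy))
    np.save(folder / "events_ts.npy", np.asarray(ts))
    np.save(folder / "events_p.npy", np.asarray(p))


def open_events(folder):
    """Hypothetical helper: open the event arrays as read-only memory maps."""
    folder = Path(folder)
    return tuple(
        np.load(folder / name, mmap_mode="r")
        for name in ("events_xy.npy", "events_ts.npy", "events_p.npy")
    )


with tempfile.TemporaryDirectory() as tmp:
    # Assumed dtypes: int16 pixel coordinates, float64 seconds, uint8 polarity.
    xy = np.array([[10, 20], [11, 20], [12, 21]], dtype=np.int16)
    ts = np.array([0.001, 0.002, 0.004])
    p = np.array([1, 0, 1], dtype=np.uint8)
    save_events(tmp, xy, ts, p)
    ev_xy, ev_ts, ev_p = open_events(tmp)
    # Slicing a memmap reads only the requested part of the file from disk.
    print(type(ev_ts).__name__, ev_xy[1:].tolist(), ev_ts[-1])
    del ev_xy, ev_ts, ev_p  # release the maps before the folder is deleted
```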

In the tools folder, we provide tools to download these datasets and convert their format. There, you can find commands to create a separate conda environment named evreal-tools, as well as commands to download and prepare the aforementioned datasets (except BS-ERGB). After preparing the datasets with these commands, the dataset folder should look as follows:

├── EVREAL
│   ├── data
│   │   ├── ECD  # First dataset
│   │   │   ├── boxes_6dof  # First sequence
│   │   │   │   ├── events_p.npy
│   │   │   │   ├── events_ts.npy
│   │   │   │   ├── events_xy.npy
│   │   │   │   ├── image_event_indices.npy
│   │   │   │   ├── images.npy
│   │   │   │   ├── images_ts.npy
│   │   │   │   ├── metadata.json
│   │   │   ├── calibration  # Second sequence
│   │   │   ├── ...
│   │   ├── HQF  # Second dataset
│   │   ├── ...
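Before running an evaluation, it can help to verify that each prepared sequence contains the files listed above. The hypothetical helpers below (`missing_files`, `events_between_frames`) do that and also show one way to pull out the events recorded between two consecutive frames. That second part assumes `image_event_indices[i]` is the index of the first event at or after `images_ts[i]`; this convention is a guess and should be checked against the conversion scripts in the tools folder.

```python
import tempfile
from pathlib import Path

import numpy as np

# File names taken from the sequence folder layout shown above.
SEQUENCE_FILES = [
    "events_p.npy", "events_ts.npy", "events_xy.npy",
    "image_event_indices.npy", "images.npy", "images_ts.npy", "metadata.json",
]


def missing_files(seq_dir):
    """Return the expected files that are absent from a sequence folder."""
    seq_dir = Path(seq_dir)
    return [name for name in SEQUENCE_FILES if not (seq_dir / name).is_file()]


def events_between_frames(seq_dir, k):
    """Timestamps of the events between frame k and frame k + 1.

    ASSUMPTION: image_event_indices[i] is the index of the first event at or
    after images_ts[i]; verify this against the tools folder.
    """
    seq_dir = Path(seq_dir)
    idx = np.load(seq_dir / "image_event_indices.npy", mmap_mode="r").ravel()
    ts = np.load(seq_dir / "events_ts.npy", mmap_mode="r")
    return np.asarray(ts[int(idx[k]):int(idx[k + 1])])


with tempfile.TemporaryDirectory() as tmp:
    seq = Path(tmp) / "ECD" / "boxes_6dof"
    seq.mkdir(parents=True)
    np.save(seq / "events_ts.npy", np.array([0.00, 0.01, 0.02, 0.03, 0.04]))
    np.save(seq / "image_event_indices.npy", np.array([0, 2, 5]))
    print("missing:", missing_files(seq))  # the five files not written here
    print("frame 0 -> 1:", events_between_frames(seq, 0).tolist())
```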

The main script of EVREAL is eval.py. This script takes a set of evaluation settings, methods, datasets, and evaluation metrics as command-line arguments, and generates reconstructed images and scores for each given method on each sequence of the given datasets. For example, the following command evaluates the E2VID and HyperE2VID methods using the std evaluation configuration on the ECD dataset, with the MSE, SSIM, and LPIPS quantitative metrics:

python eval.py -m E2VID HyperE2VID -c std -d ECD -qm mse ssim lpips

Each evaluation configuration, method, and dataset has its own JSON file in the config/eval, config/method, and config/dataset folders, respectively. Therefore, the example command above reads its settings from the std.json, ECD.json, E2VID.json, and HyperE2VID.json files. For the MSE and SSIM metrics, the implementations from the scikit-image library are used. For all other metrics, we use the PyTorch IQA Toolbox.
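To make the mapping from command-line arguments to JSON files concrete, here is a small sketch that parses the same short flags used above and builds the corresponding config paths. It is a hypothetical mirror, not the actual argument parser of eval.py: the destination names (`methods`, `eval_configs`, `datasets`, `metrics`) and the empty default for `-qm` are assumptions.

```python
import argparse
from pathlib import Path


def build_parser():
    """Hypothetical mirror of the eval.py flags used in this README."""
    parser = argparse.ArgumentParser(description="EVREAL-style evaluation CLI sketch")
    parser.add_argument("-m", dest="methods", nargs="+", required=True)
    parser.add_argument("-c", dest="eval_configs", nargs="+", required=True)
    parser.add_argument("-d", dest="datasets", nargs="+", required=True)
    parser.add_argument("-qm", dest="metrics", nargs="+", default=[])
    return parser


def config_paths(args, root="config"):
    """Map each name to its JSON file under config/eval, config/method, config/dataset."""
    root = Path(root)
    return {
        "eval": [root / "eval" / f"{name}.json" for name in args.eval_configs],
        "method": [root / "method" / f"{name}.json" for name in args.methods],
        "dataset": [root / "dataset" / f"{name}.json" for name in args.datasets],
    }


# Parse the example command from this README instead of sys.argv.
args = build_parser().parse_args(
    "-m E2VID HyperE2VID -c std -d ECD -qm mse ssim lpips".split()
)
for kind, paths in config_paths(args).items():
    print(kind, [p.as_posix() for p in paths])
```

Because every flag accepts one or more values, passing several configurations (as in the robustness commands) simply yields one JSON path per name.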

After the evaluation is finished, the scores of each method on each dataset are printed in a table format, and the results are stored in the outputs folder with the following structure:

├── EVREAL
│   ├── outputs
│   │   ├── std  # Evaluation config
│   │   │   ├── ECD  # Dataset
│   │   │   │   ├── boxes_6dof  # Sequence
│   │   │   │   │   ├── E2VID  # Method
│   │   │   │   │   │   ├── mse.txt  # MSE scores
│   │   │   │   │   │   ├── ssim.txt  # SSIM scores
│   │   │   │   │   │   ├── lpips.txt  # LPIPS scores
│   │   │   │   │   │   ├── frame_0000000000.png  # Reconstructed frames
│   │   │   │   │   │   ├── frame_0000000001.png
│   │   │   │   │   │   ├── ...
│   │   │   │   │   ├── HyperE2VID
│   │   │   │   │   │   ├── ...
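Since the scores are written per sequence and per method, they can also be summarized outside of eval.py. The sketch below assumes that each score file (e.g. `mse.txt`) contains one numeric value per line, which is an assumption about the file format; it averages the frames within each sequence and then averages the sequence means. The helper names are hypothetical, and the tables printed by eval.py may aggregate differently.

```python
import tempfile
from pathlib import Path
from statistics import mean


def read_scores(path):
    """ASSUMPTION: a score file holds one numeric value per non-empty line."""
    return [float(line) for line in Path(path).read_text().splitlines() if line.strip()]


def mean_scores(outputs, config, dataset, metric):
    """Mean of per-sequence mean scores for every method in outputs/<config>/<dataset>."""
    base = Path(outputs) / config / dataset
    per_method = {}
    # Layout: <sequence>/<method>/<metric>.txt, as in the tree above.
    for score_file in sorted(base.glob(f"*/*/{metric}.txt")):
        scores = read_scores(score_file)
        if scores:  # skip empty files
            per_method.setdefault(score_file.parent.name, []).append(mean(scores))
    return {method: mean(seq_means) for method, seq_means in per_method.items()}


if __name__ == "__main__":
    # Prints {} if the outputs folder has not been generated yet.
    print(mean_scores("outputs", "std", "ECD", "mse"))
```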

To generate full-reference metric scores for each method on four datasets (as in Table 2 of the paper, with the addition of the new method HyperE2VID):

python eval.py -m E2VID FireNet E2VID+ FireNet+ SPADE-E2VID SSL-E2VID ET-Net HyperE2VID -c std -d ECD MVSEC HQF BS_ERGB_handheld -qm mse ssim lpips

To generate no-reference metric scores for each method on ECD-FAST and MVSEC-NIGHT datasets (as in Table 3 of the paper, with the addition of the new method HyperE2VID):

python eval.py -m E2VID FireNet E2VID+ FireNet+ SPADE-E2VID SSL-E2VID ET-Net HyperE2VID -c std -d ECD_fast MVSEC_night -qm brisque niqe maniqa

To generate no-reference metric scores for each method on HDR datasets (as in Table 3 of the paper, with the addition of the new method HyperE2VID):

python eval.py -m E2VID FireNet E2VID+ FireNet+ SPADE-E2VID SSL-E2VID ET-Net HyperE2VID -c t40ms -d TPAMI20_HDR -qm brisque niqe maniqa

To perform robustness experiments:

python eval.py -m E2VID FireNet E2VID+ FireNet+ SPADE-E2VID SSL-E2VID ET-Net HyperE2VID -c k5k k10k k15k k20k k25k k30k k35k k40k k45k -d ECD MVSEC HQF -qm mse ssim lpips

python eval.py -m E2VID FireNet E2VID+ FireNet+ SPADE-E2VID SSL-E2VID ET-Net HyperE2VID -c t10ms t20ms t30ms t40ms t50ms t60ms t70ms t80ms t90ms t100ms -d ECD MVSEC HQF -qm mse ssim lpips

python eval.py -m E2VID FireNet E2VID+ FireNet+ SPADE-E2VID SSL-E2VID ET-Net HyperE2VID -c kr0.1 kr0.2 kr0.3 kr0.4 kr0.5 kr0.6 kr0.7 kr0.8 kr0.9 kr1.0 -d ECD MVSEC HQF -qm mse ssim lpips

python analyze_robustness.py

To generate color reconstructions on the CED dataset:

python eval.py -m HyperE2VID -c color -d CED

If you use this library in an academic context, please cite the following:

@inproceedings{ercan2023evreal,
  title={{EVREAL}: Towards a Comprehensive Benchmark and Analysis Suite for Event-based Video Reconstruction},
  author={Ercan, Burak and Eker, Onur and Erdem, Aykut and Erdem, Erkut},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
  month={June},
  year={2023},
  pages={3942-3951}}

- This work was supported in part by KUIS AI Center Research Award, TUBITAK-1001 Program Award No. 121E454, and BAGEP 2021 Award of the Science Academy to A. Erdem.

- This code borrows from or is inspired by the following open-source repositories:

- Here are the open-source repositories (with model code and pretrained models) of the methods that we compare with EVREAL: