This repository is a modification of the Two-Stream network based on:

It also uses the Non-Local Block to enhance the spatial CNN of the Two-Stream network:

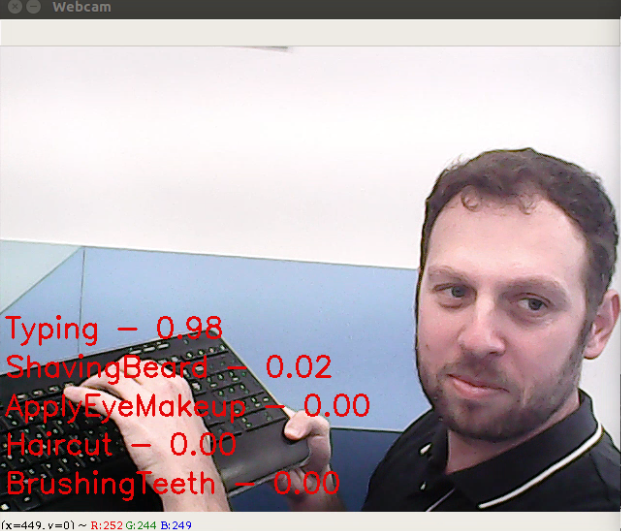
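For readers unfamiliar with the Non-Local Block, the sketch below shows the embedded-Gaussian form of the non-local operation on a flattened feature map: every spatial position attends to every other position, and the result is added back to the input through a residual connection. It is written in plain Python (compatible with 2.7 and 3) for readability and is not the code used in this repository; the function names and the use of plain matrices in place of 1x1 convolutions are assumptions for illustration.

```python
import math

# Illustrative sketch only, not this repository's implementation.

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def non_local_block(x, w_theta, w_phi, w_g, w_z):
    """Embedded-Gaussian non-local operation with a residual connection.

    x:                   N x C features (N flattened spatial positions, C channels).
    w_theta, w_phi, w_g: C x C' projections (stand-ins for 1x1 convolutions).
    w_z:                 C' x C projection back to C channels.
    """
    theta = matmul(x, w_theta)  # N x C'
    phi = matmul(x, w_phi)      # N x C'
    g = matmul(x, w_g)          # N x C'
    # Affinity exp(theta_i . phi_j), normalized over all positions j.
    attn = softmax_rows(matmul(theta, transpose(phi)))  # N x N
    y = matmul(attn, g)         # N x C': each position is a weighted sum over all positions
    z = matmul(y, w_z)          # N x C
    # Residual connection: add the block's output to its input.
    return [[z[i][c] + x[i][c] for c in range(len(x[0]))] for i in range(len(x))]
```

Because of the residual connection, a zero `w_z` leaves the input unchanged, so a block initialized that way starts out as an identity mapping and can be inserted into a pre-trained CNN without disturbing it.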

The main feature added by this repository is an inference mode for the networks, which shows the model's Top-5 predictions and their scores in real time on a webcam feed.

Click the image above to view the demo video.
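The Top-5 display amounts to turning the network's per-class scores into probabilities and keeping the five highest. A minimal sketch, assuming the spatial CNN returns one raw logit per UCF-101 class in the same order as a list of class names, and that the displayed score is a softmax probability; `top_k_predictions` is a hypothetical helper, and webcam capture and the on-screen overlay are left out.

```python
import math


def top_k_predictions(logits, class_names, k=5):
    """Hypothetical helper: return the k most likely (class_name, probability) pairs, highest first."""
    # Softmax over the raw logits (subtract the max for numerical stability).
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Rank class indices by probability, highest first, and keep the top k.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(class_names[i], probs[i]) for i in ranked[:k]]


# Made-up scores for a handful of UCF-101 class names, for illustration only.
logits = [0.2, 2.5, -1.0, 1.1, 0.7, 3.0]
names = ["ApplyEyeMakeup", "Archery", "Basketball", "Biking", "Bowling", "BoxingPunchingBag"]
for name, prob in top_k_predictions(logits, names):
    print("%s: %.2f" % (name, prob))
```

In a live demo this would run once per captured frame, on the logits of the frame's forward pass.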

Please note that this repository was built on Python 2.7. Unfortunately, when I created this repo I did not follow good Git practices and did not provide a proper requirements.txt. My apologies.

If you want to train the model from scratch, you need to download the UCF-101 dataset. I recommend visiting Jeffrey Huang's repository linked above and following his detailed instructions.

If you just want to run inference, download the pre-trained model here:

Link to ResNet101 trained on UCF-101

Then run:

```
python spatial_cnn_gpu.py --resume /PATH/TO/model_best.pth.tar --demo
```
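The README does not show how the script parses its arguments. A minimal sketch, assuming an argparse-based CLI, of how the `--resume` and `--demo` flags in the command above might fit together: `--resume` points to the checkpoint and `--demo` switches to webcam inference. `build_parser` and `select_mode`, and the fallback to a `"train"` mode, are hypothetical.

```python
import argparse


def build_parser():
    """Hypothetical CLI mirroring the two flags used in the command above."""
    parser = argparse.ArgumentParser(description="Spatial CNN on UCF-101 (illustrative sketch)")
    parser.add_argument("--resume", default="", type=str, metavar="PATH",
                        help="path to a checkpoint such as model_best.pth.tar")
    parser.add_argument("--demo", action="store_true",
                        help="run real-time webcam inference instead of training")
    return parser


def select_mode(args):
    """Hypothetical dispatch: the demo needs trained weights, so it requires --resume."""
    if args.demo:
        if not args.resume:
            raise ValueError("--demo requires a checkpoint passed via --resume")
        return "demo"
    return "train"
```

For example, `select_mode(build_parser().parse_args(["--resume", "/PATH/TO/model_best.pth.tar", "--demo"]))` returns `"demo"`.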

To run a CPU-only version, use `spatial_cnn_cpu.py` instead of `spatial_cnn_gpu.py`. The best real-time results come from running only the Spatial CNN, without the Temporal Stream, on a GPU.

We did not include pre-trained weights for the Non-Local Network version because we did not observe any performance improvement from adding the Non-Local Blocks (NLBs). We believe that NLBs need very large batch sizes to improve precision, and we did not have the resources to train with them.