SIEM, Visibility, and Event-Driven Architecture Curated Solutions.

Diagram from my Security Operations Data Engineering Framework (SODEF) paper. These are the pieces you can look at when trying to build a cost-effective data engineering architecture and system for analytics and threat detection in your organization.

SIEM Research - A_Cost_Effective_SIEM_Framework___BenR___2024_IUPUI_MSCTS Grad School Projects and Content - https://github.com/cybersader/grad-school-projects

These are some things to consider when looking at SIEMs and security data analytics architectures.

- Pricing models

- Ingest-based for smaller implementations

- Self-hosted

- Compute-based models: models that estimate or measure your computation and infrastructure usage, with a premium on top to cover the technology and other costs such as support (example: Splunk SVCs)

- Data models, compatibility, or normalization approaches and/or logic

- Data transformation capabilities

- Transforming logs

- Query language/syntax, building analytics?

- Sigma rules?

- Control over what gets warehoused or used with computation or infrastructure?

- This is why data pipelines like Cribl should be used: they give you that control

- Detection Engineering

- Machine Learning and AI

- Anomaly Detection Models

- Types of functions

- Correlation

- Community Marketplace / Database for Queries/Detections

- Machine Learning and AI
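
To make the "Correlation" item above concrete, here is a minimal sketch of one correlation-style detection: flag a source IP that produces several failed logins followed by a success inside a short window. The event fields (`ts`, `src_ip`, `outcome`), the threshold, and the window are hypothetical choices for illustration and are not taken from any specific SIEM or rule format.

```python
from collections import defaultdict, deque

def correlate_bruteforce(events, threshold=3, window_s=60):
    """Return source IPs with >= threshold failures followed by a success within window_s seconds."""
    failures = defaultdict(deque)  # src_ip -> timestamps of recent failed logins
    hits = []
    for ev in sorted(events, key=lambda e: e["ts"]):
        ip, ts = ev["src_ip"], ev["ts"]
        recent = failures[ip]
        # Forget failures that fell out of the sliding window.
        while recent and ts - recent[0] > window_s:
            recent.popleft()
        if ev["outcome"] == "failure":
            recent.append(ts)
        elif ev["outcome"] == "success":
            if len(recent) >= threshold:
                hits.append(ip)
            recent.clear()
    return hits

# Hypothetical sample events (timestamps in seconds).
sample_events = [
    {"ts": 0, "src_ip": "10.0.0.5", "outcome": "failure"},
    {"ts": 0, "src_ip": "10.0.0.9", "outcome": "failure"},
    {"ts": 5, "src_ip": "10.0.0.9", "outcome": "success"},
    {"ts": 10, "src_ip": "10.0.0.5", "outcome": "failure"},
    {"ts": 20, "src_ip": "10.0.0.5", "outcome": "failure"},
    {"ts": 30, "src_ip": "10.0.0.5", "outcome": "success"},
]
flagged = correlate_bruteforce(sample_events)
```

A real platform would express this in its own query language or rule format; the point here is only the shape of the logic (group by entity, keep a time window, compare against a threshold).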

Send all of your log data from your IT systems to a data pipelining system like any of the ones mentioned below.

The biggest reason to use data pipelines is that IT teams need to be log users rather than just log keepers. A pipeline lets them align infrastructure costs with usage: they aren't spending an exorbitant amount of money warehousing data that never needed warehousing (side note: warehousing or indexing data to make it easier to query and analyze costs A LOT). Without a central data transformation proxy like a data pipeline tool, teams will likely play into the hands of analytics/SIEM platforms whose pricing benefits from customers who don't use all of their data, similar to how Costco banks on members who don't make good use of their memberships.
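
The routing idea can be sketched in a few lines. This is not how Cribl or any other product is configured; it is a hypothetical policy (the event `type` and `level` fields, the `SIEM_EVENT_TYPES` set, and the destination names are all assumptions) that shows how a pipeline step decides what gets indexed, what goes to cheap storage, and what is dropped, and how much volume ends up in each place.

```python
import json

# Hypothetical policy: event types considered worth indexing in the SIEM.
SIEM_EVENT_TYPES = {"auth", "process_start", "dns"}

def route(event):
    """Pick a destination for one parsed log event (a dict)."""
    if event.get("level") == "debug":
        return "drop"            # never stored anywhere
    if event.get("type") in SIEM_EVENT_TYPES:
        return "siem"            # indexed and searchable, the expensive tier
    return "object_storage"      # cheap archive, for example a bucket

def summarize(events):
    """Return the number of bytes sent to each destination (JSON-encoded size)."""
    totals = {"siem": 0, "object_storage": 0, "drop": 0}
    for ev in events:
        size = len(json.dumps(ev).encode("utf-8"))
        totals[route(ev)] += size
    return totals

# Hypothetical sample events.
sample_events = [
    {"type": "auth", "level": "info", "user": "alice"},
    {"type": "netflow", "level": "info", "bytes": 1200},
    {"type": "app", "level": "debug", "msg": "cache miss"},
]
totals = summarize(sample_events)
```

Comparing `totals["siem"]` against the sum of all three values gives a rough picture of how much of the raw volume actually needs the expensive tier.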

-

Cribl Stream & Alternatives

- This is my favorite tool to integrate with SIEMs. However, I've had trouble finding similar tools. I wonder who their competitors are.

- Cribl Stream - Simplify Data Stream, Routing & Collection

- Stitch: Simple, extensible cloud ETL built for data teams | Stitch

- Dataflow Google Cloud - Stream processing from Google

- ETL Service - Serverless Data Integration - AWS Glue - AWS

- Azure Stream Analytics

-

Security Data Pipelines

- Normalize all of your data to use...

- CSV or DELIMITED format for "flat" data,

- JSON for "nested" data,

- and Parquet where optimization is valuable AND where not all of the columns are always being used
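
As a rough illustration of normalizing nested data into a flat format, the sketch below flattens nested JSON-style records into dotted column names and writes them as CSV using only the Python standard library. The record fields are hypothetical, and lists inside records are deliberately left untouched (they would be written as their string representation), so treat this as a starting point rather than a complete normalizer.

```python
import csv
import io

def flatten(record, parent="", sep="."):
    """Flatten nested dicts into a single-level dict with dotted keys."""
    flat = {}
    for key, value in record.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat

def to_csv(records):
    """Render flattened records as CSV text; missing fields become empty cells."""
    rows = [flatten(r) for r in records]
    # Union of all column names, in first-seen order.
    columns = list(dict.fromkeys(k for row in rows for k in row))
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns, restval="", lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

# Hypothetical nested events.
sample_records = [
    {"user": "alice", "src": {"ip": "10.0.0.5", "port": 22}},
    {"user": "bob", "src": {"ip": "10.0.0.9"}},
]
csv_text = to_csv(sample_records)
```

Going the other way (CSV to JSON) loses nothing, but flattening can produce very wide, sparse tables when events are heterogeneous, which is one reason to keep deeply nested data as JSON.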

For at-home implementations

- Graylog

- Wazuh

-

Hive Project - not too many features for a large company

-

- security data lake

- Bring your own buckets (S3)

-

Panther | A Cloud SIEM Platform for Modern Security Teams

- also security data lake but it's limited to Snowflake

-

Hunters SOC Platform: SIEM Alternative | Automate Detection & Response

- Integrates with Databricks lakehouse

-

Devo

-

Splunk - go with SVC (Splunk Virtual Compute, workload-based) pricing to save money for businesses

-

Security Data Pipelines

-

Misc threat intelligence stuff

-

SOAR - security orchestration automation response

-

Swimlane - AI Enabled Security Automation, SOC Automation, SOAR

-

For specific systems:

Advanced implementation for at-home.

- Files to MinIO to Trino and Iceberg to Hive Metastore to MariaDB

- Loki, Grafana

- good for analytics

- does not offer transformation and pipelines. Would need an ETL proxy in front of it

- Grafana Loki OSS | Log aggregation system

- Databricks - lakehouse

- Data Lakehouse Architecture and AI Company | Databricks

- Platform for working with Apache Spark.

- Automated infra management

- Microsoft Azure Databricks (integrated into Azure)

- Really powerful and flexible

- Not sure about price and stuff

- Dremio

- Starburst - a data lake query platform (built on Trino) that could apply to security too

- SIEM - aggregating data and doing analysis

- SOAR - logic to react to analysis (integrates with SIEM)

- SOAR tools are a combination of threat intelligence platforms, Security Incident Response Platforms (SIRP) and Security Orchestration and Automation (SOA).

- SIRP - security incident response platform

- TIP - threat intel platform
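
The SIEM/SOAR split described above (the SIEM produces an alert, the SOAR holds the logic that reacts to it) can be sketched as a playbook lookup. Everything here is hypothetical: the rule names, the alert fields, and the response steps, which return strings instead of calling real firewall or ticketing APIs.

```python
def block_ip(alert):
    """Stand-in for a firewall integration; returns a description instead of acting."""
    return f"block {alert['src_ip']} at firewall"

def open_ticket(alert):
    """Stand-in for a ticketing or SIRP integration."""
    return f"open ticket for {alert['rule']}"

def notify_analyst(alert):
    """Fallback step when no playbook matches."""
    return f"notify analyst about {alert['rule']}"

# Hypothetical playbooks: detection rule name -> ordered response steps.
PLAYBOOKS = {
    "bruteforce_login": [block_ip, open_ticket],
}
DEFAULT_PLAYBOOK = [notify_analyst]

def respond(alert):
    """Run the playbook for an alert and return the actions taken, in order."""
    steps = PLAYBOOKS.get(alert["rule"], DEFAULT_PLAYBOOK)
    return [step(alert) for step in steps]

actions = respond({"rule": "bruteforce_login", "src_ip": "10.0.0.5"})
```

In a real SOAR product the steps would be integrations with other tools and the playbook would usually include enrichment (for example from a TIP) and approval gates before any blocking action.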

- Matano - (SIEM alternative) for threat hunting, detection & response, and cybersecurity analytics at petabyte scale on AWS

- TheHive Project

- Graylog - User-friendly interface and powerful log management and analysis features. It offers easy log centralization, analysis, and alerting capabilities: https://graylog.org/

- Wazuh - Security monitoring platform that combines intrusion detection, vulnerability detection, and log analysis. It integrates with the ELK Stack and offers real-time threat detection: https://wazuh.com/

- Not really a SIEM. It takes in certain types of data and has pre-made detections and integrations for them, but it is not viable for a large organization with heterogeneous IT systems and data.

- GitHub - wazuh/wazuh: Wazuh - The Open Source Security Platform. Unified XDR and SIEM protection for endpoints and cloud workloads.

- Linode Hosting - Wazuh Infrastructure Security Analytics Application | Akamai

- Security Onion - Network security monitoring, intrusion detection, and log management. It incorporates tools like Suricata, Zeek (formerly Bro), and Elasticsearch: https://securityonionsolutions.com/

- More for network threat detection and not large amounts of crazy log data

- Microsoft Azure Marketplace

- Enhance Security with OSSIM | AT&T Cybersecurity

- UTMStack | Open Source SIEM, XDR and Compliance Solution

- Pricing | Blumira

- Gurucul | Global Leader in Advanced Cybersecurity Solutions

- Microsoft Sentinel - Cloud SIEM Solution | Microsoft Security

- Chronicle SIEM | Google Cloud

- Dynatrace | Modern cloud done right

- Cloud Log Management, Monitoring, SIEM Tools | Sumo Logic

- Securonix: Security Analytics at Cloud Scale

- Exabeam SIEM - Exabeam

- FortiSIEM | SIEM Solutions & Tools | Get Best Enterprise SIEM Software

- QRadar | IBM Security SIEM

- Elastic SIEM | SIEM & Security Analytics | Elastic Security

- LogSentinel SIEM and XDR | Next-gen cloud-first | Affordable for SMEs

- Arcsight - Security Information and Event Management Tool | SIEM Software | CyberRes

- Devo: Cloud-Native Integrated SIEM | SOAR | UEBA | AI Solution

- Hunters SOC Platform: SIEM Alternative | Automate Detection & Response

- Comodo NxSIEM | Security Information and Event

- Intelligent Security Operations Platform (ISOP) | NSFOCUS

- LogRhythm SIEM Security & SOC Services | Cloud & Self-Hosted

- Trellix | Revolutionary Threat Detection and Response

- Security Analytics | Datadog

- Splunk | The Key to Enterprise Resilience

- Logz.io: Cloud Observability & Security Powered by Open Source

- Matano | Cloud native SIEM

- Why Your Security Data Lake Project Will SUCCEED! | by Omer Singer | Medium

- It turned out that security teams didn’t have time for a science project like Apache Spot or Metron.

- The Hadoop data lake was causing enough headaches for the core business units that depended on it, and security had alternatives available in purpose-built SIEM and log management systems.

- Apache Spot

- One reason why they are being chosen over legacy incumbents in the SIEM market is that these providers don’t have to spend precious cycles developing and maintaining their data backend.

- Security Operations on the Data Lakehouse: Hunters SOC Platform is now available for Databricks customers | Databricks Blog

- What is a Medallion Architecture?

- Why Security Teams Are Adopting Security Data Lakes As Part Of A SIEM Strategy

- What is a SIEM-less Architecture | Anvilogic

- Connected Apps | Missing Layer in the Modern Data Stack | by Arunim Samat | Medium

- Modernizing Enterprise SOC’s: Anvilogic’s Automated Detection Engineering On Snowflake Security Data Lake Using Generative AI | by Ravi Kumar | Medium

- Security Data Lake | Gurucul Scalable Architecture

- Security Risk Advisors - Security Data Pipeline Modernization

- Improve SOC Efficiency with Cribl Observability Pipelines in Cloud-Native SIEM - Exabeam

- What a Robust Security Data Pipeline is Critical in 2023

- circulate.dev/blog/security-logs-and-asset-data-in-2023-pt1-the-foundation

- The Average SIEM Deployment Costs $18M Annually…Clearly, Its time for a change! | by Dan Schoenbaum | Medium

- Security-driven data can be dimensional, dynamic, and heterogeneous, thus, data warehouse solutions are less effective in delivering the agility and performance users need. A data lake is considered a subset of a data warehouse, however, in terms of flexibility, it is a major evolution. The data lake is more flexible and supports unstructured and semi-structured data, in its native format and can include log files, feeds, tables, text files, system logs, and more. You can stream all of your security data, none is turned away, and everything will be retained. This can easily be made accessible to a security team at a low cost. For example, .03 cents per/GB/per month if in an S3 bucket. This capability makes the data lake the penultimate evolution of the SIEM.

- The value of the process is to compare newly observed behavior with historical trends, sometimes comparing to datasets spanning 10 years. This would be cost-prohibitive in a traditional SIEM.

- Interesting companies to power your security data lake:

- If you are planning on deploying a security data lake or already have, here are three cutting edge companies you should know about. I am not an employee of any of these companies, but I am very familiar with them and believe that each will change our industry in a very meaningful way and can transform your own security data lake initiative.

-

- Panther:Snowflake is a wildly popular data platform primarily focused on mid-market to enterprise departmental use. It was not a SIEM and had no security capabilities. Along came engineers from AWS and Airbnb who created Panther, a platform for threat detection and investigations. The company recently connected Panther with Snowflake and is able to join data between the two platforms to make Snowflake a “next-generation SIEM” or — perhaps better positioning — evolve Snowflake into a highly-performing, cost-effective, security data lake. It is still a newer solution, but it’s a cool idea with a lot of promise and has been replacing Splunk implementations at companies like Dropbox and others at an impressive clip. If you want to get a sense for what the future will look like, you can even try it for free here.

-

- Team Cymru is the most powerful security company you have yet to hear of. They have assembled a global network of sensors that “listen” to IP-based traffic on the internet as it passes through ISP’s and can “see” and therefore know more than anyone in a typical SOC. They have built the company by selling this data to large, public security companies such as Crowdstrike, FireEye, Microsoft, and now Palo Alto Networks, with their acquisition of Expanse, which they snapped up for a cool $800M. In addition, cutting-edge SOC teams at JPMC and Walmart are embracing Cymru’s telemetry data feed. Now you can get access to this same data, you will want their 50+ data types and 10+ years of intelligence inside of your data lake to help your team to better identify adversaries and bad actors based on certain traits such as IP or other signatures.

- Varada.io: The entire value of a security data lake is easy, rapid, and unfettered access to vast amounts of information. It eliminates the need to move and duplicate data and offers the agility and flexibility users demand. As data lakes grow, queries become slower and require extensive data-ops to meet business requirements. Cloud storage may be cheap, but compute becomes very expensive quickly as query engines are most often based on full scans. Varada solved this problem by indexing and virtualizing all critical data in any dimension. Data is kept closer to the SOC — on SSD volumes — in its granular form so that data consumers can leverage the ultimate flexibility in running any query whenever they need. The benefit is a query response time up to 100x faster at a much cheaper rate by avoiding time-consuming full scans. This enables workloads such as the search for attack indicators, post-incident investigation, integrity monitoring, and threat-hunting. Varada was so innovative that data vendor Starburst recently acquired them.

- The Security Data lake, while not a simple, “off the shelf” approach, centralizes all of your critical threat and event data in a large, central repository with simple access. It can still leverage an existing SIEM, which may leverage correlation, machine learning algorithms and even AI to detect fraud by evaluating patterns and then triggering alerts. However configured, the security data lake is an exciting step you should be considering, along with the three innovative companies I mentioned in this article.

- Tenzir's security data pipeline platform optimizes SIEM, cloud, and data costs - Help Net Security

- collection, shaping, enrichment, and routing of data between any security and data technology using a rich set of data types and security-native operators purpose-built for security use cases

- Why you need Data Engineering Pipelines before an enterprise SIEM | by Alex Teixeira | Oct, 2023 | Detect FYI

- Content engineering and detection engineering

- Benefits: log viz, data quality, data collab, routing.

- What a Robust Security Data Pipeline is Critical in 2023

- Launch YC: Tarsal: Data pipeline built for modern security teams | Y Combinator

- Telemetry Data Pipeline & Log Analysis Solutions | Mezmo

- General Data Engineering, Storage, Analytics, Visualization?

- multi-language, agnostic analytics engine

- data engineering platform

- distributed data analytics

- data warehouse analytics

- data lake analytics

- Kibana - While commonly used with the ELK (Elasticsearch, Logstash, Kibana) stack for log analysis, Kibana can be adapted for non-security-related data visualization and exploration: https://www.elastic.co/kibana/

- Pentaho - An open-source business analytics and data integration platform. It's utilized for data visualization, reporting, and ETL (Extract, Transform, Load) tasks: https://www.hitachivantara.com/en-us/products/pentaho-platform/data-integration-analytics.html

- Metabase - A business intelligence and data exploration tool that allows users to create interactive dashboards and analyze data stored in various databases: https://www.metabase.com/

- Jupyter - An open-source web application that allows you to create and share documents that contain live code, equations, visualizations, and narrative text. It's widely used in data science and research for data analysis and visualization: https://jupyter.org/

-

General Data Engineering, Storage, Analytics, Visualization, Data Lakehouse

- Data Lakehouse Architecture and AI Company | Databricks

- Platform for working with Apache Spark.

- Automated infra management

- Microsoft Azure Databricks (integrated into Azure)

- Datadog Log Management | Datadog

- Dremio | The Easy and Open Data Lakehouse Platform

- Microsoft Fabric

-

Databases, Data Storage, Data Indexing, Data Warehouse, Data Lakes

- Curations

- Home - Database of Databases

- Browse - Database of Databases - timeseries, OS, commercial

- Related to Log Management

-

Automation, IFTTT, Integration Platform as a Service (iPaaS), APIs, Cloud Integration, ESB (enterprise service bus), EAI (enterprise application integration), Middleware

- Power Automate | Microsoft Power Platform

- IBM App Connect - Application integration software

- n8n.io - a powerful workflow automation tool

- Connect APIs, Remarkably Fast - Pipedream

- Make | Automation Software | Connect Apps & Design Workflows

- Zapier | Automation that moves you forward

- 8 Million+ Ready Automations For 1100+ Apps | Integrately

- IFTTT - Automate business & home

- Unified, AI-powered iPaaS for every team to automate at scale | Tray.io

- The Modern Leader in Automation | Workato

- Albato — a single no-code platform for all automations

- Celigo: A New Approach to Integration and Automation

- https://github.com/huginn/huginn

- [Azure Logic Apps (Microsoft)](https://azure.microsoft.com/en-us/services/logic-apps/)

- WayScript

- https://www.ondiagram.com/ > features

- ESB

- Anypoint Platform - A comprehensive API management and integration platform that simplifies connecting applications from Mulesoft.

- Apache ServiceMix - An open-source integration container that combines the functionality of Apache ActiveMQ, Camel, CXF, and Karaf, providing a flexible solution.

- ArcESB - A versatile integration platform that seamlessly synchronizes data across applications, integrates with partners, and provides data accessibility.

- IBM App Connect - An integration platform that can connect applications, irrespective of the message formats or protocols they use, formerly known as IBM Integration Bus.

- [NServiceBus](https://github.com/Particular/NServiceBus) - A .NET-based service bus that offers an intuitive developer-friendly environment.

- Oracle Service Bus - An integration platform that connects, virtualizes, and manages interactions between services and applications.

- Oracle SOA Suite - A platform that enables system developers to set up and manage services and to orchestrate them into composite applications and business processes.

- Red Hat Fuse - A cloud-native integration platform that supports distributed integration capabilities.

- Software AG webMethods Integration Server - An integration platform that enables faster integration of any application.

- TIBCO BusinessWorks - A platform that implements enterprise patterns for hybrid integrations.

- UltraESB - An ESB that supports zero-copy proxying for extreme performance utilizing Direct Memory Access and Non-Blocking IO.

- [WSO2 Enterprise Integrator](https://github.com/wso2/product-ei) - An API-centric, cloud-native, and distributed integration platform designed to provide a robust solution for software engineers.

- https://github.com/stn1slv/awesome-integration#ipaas

- Anypoint Platform - A powerful integration platform that combines API management and integration capabilities in a single platform, enabling software engineers to integrate various applications with ease.

- Boomi AtomSphere - A cloud-native, unified, open, and intelligent platform that connects everything and everyone, allowing software engineers to create and manage integrations easily.

- Jitterbit Harmony - A comprehensive integration platform that provides pre-built templates and workflows to automate business processes. It integrates thousands of applications and simplifies integration for software engineers.

- IBM Cloud Integration - A next-generation integration platform that uses AI to provide software engineers with an innovative approach to integration. This platform accelerates integration processes, making it faster and more scalable.

- Informatica Intelligent Cloud Services - A suite of cloud data management products designed to accelerate productivity and improve speed and scale. Software engineers can use this platform to manage data and integrate applications efficiently.

- OpenText Alloy - A powerful enterprise data management platform that empowers organizations to move beyond basic integration and turn data into insights and action. Software engineers can use this platform to manage data and improve business outcomes.

- Oracle Integration Cloud Service - A robust platform that accelerates time to go live with pre-built connectivity to any SaaS or on-premises application. Software engineers can use this platform to simplify integration processes and streamline operations.

- SnapLogic Intelligent Integration Platform - A comprehensive integration platform that connects various applications and data landscapes. Software engineers can use this platform to integrate data and applications quickly and efficiently.

- Software AG webMethods Hybrid Integration Platform - An all-in-one integration platform that enables software engineers to integrate all their applications in a single platform. This platform simplifies integration processes and improves efficiency.

- TIBCO Cloud Integration - A flexible platform that enables software engineers to integrate anything with API-led and event-driven integration. This platform empowers everyone to integrate anything, making integration processes faster and more efficient.

- Workato - A single platform for integration and workflow automation across your organization, providing software engineers with a powerful platform for simplifying integration processes and streamlining operations.

-

Analytics & Visualization

-

Data Ingestion & Aggregation

- MISP Open Source Threat Intelligence Platform & Open Standards For Threat Information Sharing

- OpenDXL - Framework designed for the integration of security tools and the management of security events. It's built to enhance the interoperability of security products: https://www.opendxl.com/filebase/

- Patrowl/PatrowlManager: PatrOwl - Open Source, Smart and Scalable Security Operations Orchestration Platform

- Automated Incident Response

- Incident Handling

- Security Automation

- IFTTT

- TheHive

- nsacyber/WALKOFF: A flexible, easy to use, automation framework allowing users to integrate their capabilities and devices to cut through the repetitive, tedious tasks slowing them down. #nsacyber

- EveBox - Interface for the Suricata intrusion detection system (IDS). It provides alert management and visualization features for Suricata-generated alerts: https://evebox.org/

If this then that (IFTTT), security integrations, automations

-

Swimlane - AI Enabled Security Automation, SOC Automation, SOAR

-

For specific systems:

ETL (Extract Transform Load), Data Transformation & Integration, Stream Processing, Moving Data, Data Quality, Data Pipelines, Observability

-

Airbyte | Open-Source Data Integration Platform | ELT tool - Free by self-hosting with Docker. Cheap cloud options.

-

Jitsu: Open Source Data Integration Platform - Open-source alternative to Segment. Free by self-hosting with Docker.

-

dbt Labs | Transform Data in Your Warehouse - Uses SQL (con), Open Source, Jinja templates with SQL (ehhh sorta unrelated to most pipeline tools)

-

Meltano: Unlock all the data that powers your product features

-

Apache Flink® — Stateful Computations over Data Streams | Apache Flink

-

Vector | A lightweight, ultra-fast tool for building observability pipelines

-

Streaming? (I'm so confused about some of the differences or targets of these solutions)

- Apache Kafka - Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.

-

Data Pipelines

- Apache Airflow - programmatically author, schedule and monitor workflows.

-

Misc

- Cloud Data Fusion | Google Cloud - Fully managed, cloud-native data integration at any scale. Visual point-and-click interface enabling code-free deployment of ETL/ELT data pipelines.

- Fivetran | Automated data movement platform

- Managed Etl Service - AWS Data Pipeline - AWS

- Data Science and Analytics Automation Platform | Alteryx

-

GUIs (Vendor Lock-in, Limited)

- Apache NiFi - An easy to use, powerful, and reliable system to process and distribute data. Apache NiFi supports powerful and scalable directed graphs of data routing, transformation, and system mediation logic.

-

Data Quality

- Elementary - dbt native data observability, built for data and analytics engineers - With Elementary you can monitor your data pipelines in minutes, in your dbt project. Gain immediate visibility to your jobs, models runs and test results. Detect data issues with freshness, volume, anomaly detection and schema tests. Explore all your test results and data health in a single interface and understand impact and root cause with rich lineage. Distribute actionable alerts to different channels and owners, and be on top of your data health.

- The State of Data Engineering 2022 - Git for Data - lakeFS

- The State of Data Engineering 2023 - Data Version Control at Scale

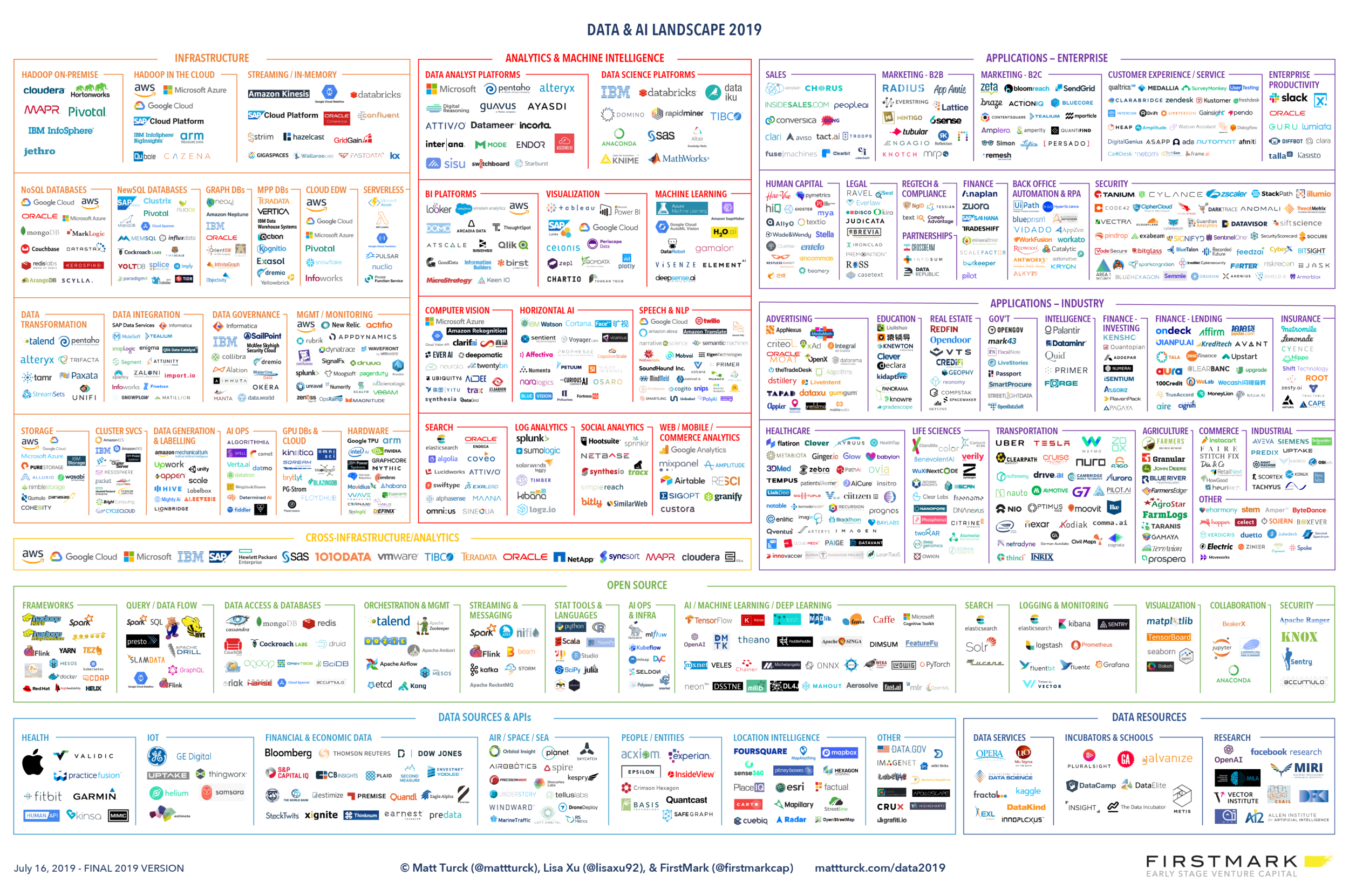

- A Turbulent Year: The 2019 Data & AI Landscape – Matt Turck

- Resilience and Vibrancy: The 2020 Data & AI Landscape – Matt Turck

- 2021 - Data & AI Landscape (MAD) Landscape – Matt Turck

- The 2023 MAD (Machine Learning, Artificial Intelligence & Data) Landscape – Matt Turck

- MAD 2023 - Version 1.0 - pdf

- From my research

- Today, two data formats stand out and can cover most use cases for log and event data: the tabular or flat "CSV" (comma-separated values) format and the nested JSON (JavaScript Object Notation) format. In terms of tabular formats (think Excel files), CSV has been around since the 1970s and was later documented in RFC 4180. Tabular formats are incredibly useful for transporting relational and structured data. Where this structure is not possible, JSON is the next option. However, some logs or events do not follow either of these formats. Some follow a custom delimited format, which can be nested or flat, such as a log using a mix of colons and brackets. Extensible Markup Language (XML) used to be the most popular option for nested data. However, over the years JSON has been adopted as the simpler and more practical option for nested data (shown in Figure 1). JSON was first introduced in 2001 but grew quickly in popularity as big tech companies like Google and Facebook started using it. XML also carried additional security risks in implementation, so REST APIs and JSON became standard for development. On a side note, columnar formats like Parquet are good for optimizing certain use cases, such as queries that read only one or a few columns of a wide table. Parquet performs better than CSV in those cases, so CSV is not always the best choice for flat data. Based on these facts, a good approach to threat detection is to keep data in these two formats (CSV and JSON) as much as possible, with exceptions where optimization is worth it. This makes the data easier to transport and more likely to interface well with data engineering infrastructure and threat detection setups.

- Apache Parquet - (Apache foundation / Data Format / Open Source / Free).

- Apache ORC - (Apache foundation / Hortonworks / Facebook / Data Format / Open Source / Free).

- Apache Avro - (Apache foundation / Data Format / Open Source / Free).

- Apache Kudu - (Apache foundation / Cloudera / Data Format / Open Source / Free).

- Apache Arrow - (Apache foundation / Data Format / Open Source / Free).

- Delta - (Databricks / Data Format / Free or License fee).

- JSON - (Data Format / Free).

- CSV - (Data Format / Free).

- TSV - (Data Format / Free).

- HDF5 - (The HDF Group / Data Format / Open Source (licensed by HDF5) / Free).
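
To show why a columnar format can win when only some columns are used, here is a toy comparison in plain Python. It is not Parquet and it ignores compression, encoding, and file I/O entirely; it only counts how many values a query has to touch in a row layout versus a column layout. The field names and sample rows are hypothetical.

```python
def to_columns(rows):
    """Pivot a list of row dicts into a dict of column lists (missing values become None)."""
    names = list(dict.fromkeys(k for row in rows for k in row))
    return {name: [row.get(name) for row in rows] for name in names}

def count_by_row_scan(rows, field, value):
    """Row layout: whole records are read even though one field is tested."""
    touched = sum(len(row) for row in rows)  # values read if each row is read in full
    matches = sum(1 for row in rows if row.get(field) == value)
    return matches, touched

def count_by_column_scan(columns, field, value):
    """Column layout: only the requested column is read."""
    col = columns[field]
    return sum(1 for v in col if v == value), len(col)

# Hypothetical flat log rows.
sample_rows = [
    {"ts": 1, "user": "alice", "action": "login", "src_ip": "10.0.0.5"},
    {"ts": 2, "user": "bob", "action": "logout", "src_ip": "10.0.0.9"},
    {"ts": 3, "user": "alice", "action": "login", "src_ip": "10.0.0.5"},
]
row_result = count_by_row_scan(sample_rows, "action", "login")
col_result = count_by_column_scan(to_columns(sample_rows), "action", "login")
```

Both scans find the same matches, but the column layout touches one value per row instead of every field. The gap grows with the number of columns, which is the situation where the "not all of the columns are always being used" rule of thumb applies.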

- Data Integration Platform for Enterprise Companies | StreamSets

- Extract & Load

- Observability Pipelines | Datadog

- Fluent Bit

- Data transformation and data quality | Stitch

- Transformations | Fivetran

- 19 Best ETL Tools for 2023

- Talend Open Studio: Open-source ETL and Free Data Integration | Talend

- Open-source ETL: Talend Open Studio for Data Integration | Talend

- Singer | Open Source ETL

- Top ETL Tools Comparison - Skyvia

- Top 14 ETL Tools for 2024 | Integrate.io

- 15+ Best ETL Tools Available in the Market in 2023

- Apache NiFi

- Data Landscape Tools - Artboard 1

- SnapLogic Snaps | Pre-built Intelligent Connectors

- Datameer | A Data Transformation Platform - Datameer

- Data Pipeline Automation Platform

- Azure Data Factory - Data Integration Service | Microsoft Azure

- Analytics & Business Intelligence

- iPaaS (Integration Platform)

- Data Science & ML Platforms

- Personalization Engines?

- Awesome-SOAR

- https://github.com/meirwah/awesome-incident-response#playbooks

- https://github.com/Correia-jpv/fucking-awesome-incident-response#playbooks

- https://github.com/cyb3rxp/awesome-soc/blob/main/README.md

- https://github.com/cyb3rxp/awesome-soc/blob/main/threat_intelligence.md

- https://github.com/academic/awesome-datascience#miscellaneous-tools

- https://github.com/academic/awesome-datascience#visualization-tools

- https://github.com/igorbarinov/awesome-data-engineering#databases

- https://github.com/igorbarinov/awesome-data-engineering#data-ingestion

- https://github.com/igorbarinov/awesome-data-engineering#workflow

- https://github.com/igorbarinov/awesome-data-engineering#data-lake-management

- https://github.com/igorbarinov/awesome-data-engineering#elk-elastic-logstash-kibana

- https://github.com/newTendermint/awesome-bigdata#data-ingestion

- https://github.com/newTendermint/awesome-bigdata#data-visualization

- https://github.com/0x4D31/awesome-threat-detection#detection-alerting-and-automation-platforms

- https://github.com/LetsDefend/awesome-soc-analyst#network-devices-logs

- https://github.com/pawl/awesome-etl