We've been trying to deploy bosh into our vSphere environment for a few weeks without much success. HUGE THANK YOU goes out to @mjavault for tracking down and documenting this bug. All of the credit for the content below goes to @mjavault.

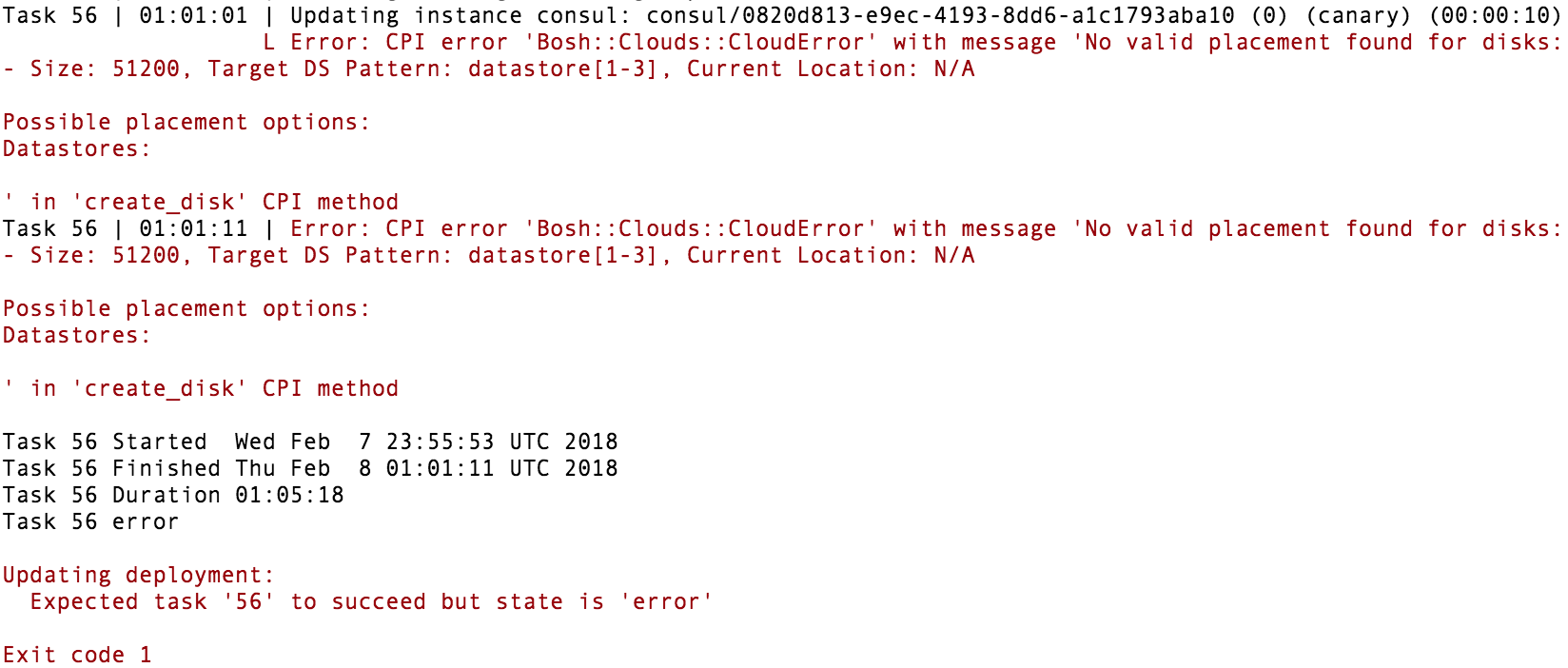

When we try to run `bosh-init deploy manifest.yml` against our vSphere deployment, we get the following error:

```
Deployment manifest: '/git/manifest.yml'
Deployment state: '/git/manifest-state.json'
Started validating
Downloading release 'bosh'... Skipped [Found in local cache] (00:00:00)
Validating release 'bosh'... Finished (00:00:02)
Downloading release 'bosh-vsphere-cpi'... Skipped [Found in local cache] (00:00:00)
Validating release 'bosh-vsphere-cpi'... Finished (00:00:00)
Validating cpi release... Finished (00:00:00)
Validating deployment manifest... Finished (00:00:00)
Downloading stemcell... Finished (00:01:03)
Validating stemcell... Finished (00:00:03)
Finished validating (00:01:09)
Started installing CPI
Compiling package 'vsphere_cpi_mkisofs/b3ebe039dae6a312784ece4da34d66053d1dfbba'... Finished (00:02:43)
Compiling package 'vsphere_cpi_ruby/3ce375f2863799664bff235e2c778a3131f1e981'... Finished (00:01:47)
Compiling package 'vsphere_cpi/6cce7d152770ee8a2d2309d2278cc83af878757d'... Finished (00:00:52)
Installing packages... Finished (00:00:00)
Rendering job templates... Finished (00:00:00)
Installing job 'vsphere_cpi'... Finished (00:00:00)
Finished installing CPI (00:05:24)
Starting registry... Finished (00:00:00)
Uploading stemcell 'bosh-vsphere-esxi-ubuntu-trusty-go_agent/3149'... Failed (00:01:56)
Stopping registry... Finished (00:00:00)
Cleaning up rendered CPI jobs... Finished (00:00:00)
Command 'deploy' failed:
creating stemcell (bosh-vsphere-esxi-ubuntu-trusty-go_agent 3149):
Unmarshalling external CPI command output: STDOUT: '', STDERR: 'at depth 1 - 19: self signed certificate in certificate chain
I, [2016-01-07T16:06:31.576680 #26031] INFO -- : Extracting stemcell to: /tmp/d20160107-26031-f6jcqs
I, [2016-01-07T16:06:36.033533 #26031] INFO -- : Generated name: sc-f8687c5b-30bf-4f10-99e4-4ad8f6cf9901
D, [2016-01-07T16:06:36.033812 #26031] DEBUG -- : All clusters provided: {"cluster1"=>#<VSphereCloud::ClusterConfig:0x007f182922c4d0 @name="cluster1", @config={}>}
at depth 1 - 19: self signed certificate in certificate chain
at depth 1 - 19: self signed certificate in certificate chain
D, [2016-01-07T16:08:04.610612 #26031] DEBUG -- : cluster1 ephemeral disk bound
D, [2016-01-07T16:08:04.610796 #26031] DEBUG -- : Acceptable clusters: [[<Cluster: <[Vim.ClusterComputeResource] domain-c63525> / cluster1>, 5929]]
D, [2016-01-07T16:08:04.610881 #26031] DEBUG -- : Choosing cluster by weighted random
D, [2016-01-07T16:08:04.610955 #26031] DEBUG -- : Selected cluster 'cluster1'
D, [2016-01-07T16:08:04.611021 #26031] DEBUG -- : Looking for a ephemeral datastore in cluster1 with 529MB free space.
D, [2016-01-07T16:08:04.611094 #26031] DEBUG -- : All datastores within cluster cluster1: ["Somerville 3PAR 2 VM 5 (3137714MB free of 4194048MB capacity)"]
D, [2016-01-07T16:08:04.611172 #26031] DEBUG -- : Datastores with enough space: ["Somerville 3PAR 2 VM 5 (3137714MB free of 4194048MB capacity)"]
I, [2016-01-07T16:08:04.611252 #26031] INFO -- : Deploying to: <[Vim.ClusterComputeResource] domain-c63525> / <[Vim.Datastore] datastore-188772>
I, [2016-01-07T16:08:05.045048 #26031] INFO -- : Importing VApp
I, [2016-01-07T16:08:05.141158 #26031] INFO -- : Waiting for NFC lease to become ready
I, [2016-01-07T16:08:07.168564 #26031] INFO -- : Uploading
I, [2016-01-07T16:08:07.178566 #26031] INFO -- : Uploading disk to: https://vmhost/nfc/52b32078-1cfe-c4f3-a2e8-94769d02a483/disk-0.vmdk
at depth 0 - 20: unable to get local issuer certificate
I, [2016-01-07T16:08:22.260389 #26031] INFO -- : Removing NICs
I, [2016-01-07T16:08:23.315795 #26031] INFO -- : Taking initial snapshot
/home/user/.bosh_init/installations/7f8327b7-578b-4d35-7a62-2ee15c504e92/packages/vsphere_cpi_ruby/lib/ruby/2.1.0/json/common.rb:223:in `encode': "\xC2" on US-ASCII (Encoding::InvalidByteSequenceError)
from /home/user/.bosh_init/installations/7f8327b7-578b-4d35-7a62-2ee15c504e92/packages/vsphere_cpi_ruby/lib/ruby/2.1.0/json/common.rb:223:in `generate'
from /home/user/.bosh_init/installations/7f8327b7-578b-4d35-7a62-2ee15c504e92/packages/vsphere_cpi_ruby/lib/ruby/2.1.0/json/common.rb:223:in `generate'
from /home/user/.bosh_init/installations/7f8327b7-578b-4d35-7a62-
from /home/user/.bosh_init/installations/7f8327b7-578b-4d35-7a62-2ee15c504e92/packages/vsphere_cpi/vendor/bundle/ruby/2.1.0/gems/bosh_cpi-1.3093.0/lib/bosh/cpi/cli.rb:114:in `result_response'
from /home/user/.bosh_init/installations/7f8327b7-578b-4d35-7a62-2ee15c504e92/packages/vsphere_cpi/vendor/bundle/ruby/2.1.0/gems/bosh_cpi-1.3093.0/lib/bosh/cpi/cli.rb:82:in `run'
from /home/user/.bosh_init/installations/7f8327b7-578b-4d35-7a62-2ee15c504e92/packages/vsphere_cpi/bin/vsphere_cpi:37:in `<main>'
':
unexpected end of JSON input
```

The deploy process downloads a huge XML file that contains UTF-8 characters. Ruby parses that file properly, but the code also stores the full output as a string in a hash object, for logging purposes. Later in the code, that hash object is converted back to JSON, and the encoder fails because it finds invalid characters: specifically, UTF-8 characters in a string marked as ASCII.

Code extract from `cli.rb`:

```ruby
def result_response(result)
  hash = {
    result: result,
    error: nil,
    log: @logs_string_io.string,
  }
  @result_io.print(JSON.dump(hash)); nil
end
```

The problem is the line `log: @logs_string_io.string,`: the string's UTF-8 encoding is lost. I doubt the value is actually very useful but, to be safe, I kept it and patched the line this way:

log: @logs_string_io.string.force_encoding(Encoding::UTF_8)

I have never coded in Ruby in my life, so Ruby people could probably come up with a cleaner fix, but this effectively forces the string to be treated as UTF-8, and the later calls to `generate()` work just fine! With that file patched, the process goes all the way through. (I'm still getting some warnings, which I assume are related to the way I hacked my patch into the archives.)
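To see the encoding mismatch in isolation, here is a minimal sketch. It does not use the real CPI code: `RAW_LOG` is a hypothetical stand-in for `@logs_string_io.string`, and `transcode_error` is a helper made up for this example. It calls `String#encode` directly, which is the call that raises in the stack trace above; the error that `JSON.generate` itself reports for such a string may differ between versions of the `json` library.

```ruby
require 'json'

# Hypothetical stand-in for @logs_string_io.string: the bytes are valid UTF-8
# (0xC2 0xA0 is a non-breaking space), but the string is tagged as US-ASCII.
RAW_LOG = "cluster1\xC2\xA0ephemeral disk bound".b.force_encoding(Encoding::US_ASCII)

# Try to transcode to UTF-8; return the error class on failure, nil on success.
def transcode_error(str)
  str.encode(Encoding::UTF_8)
  nil
rescue EncodingError => e
  e.class
end

# The patch: keep the bytes untouched and only relabel them as UTF-8.
FIXED_LOG = RAW_LOG.dup.force_encoding(Encoding::UTF_8)

p transcode_error(RAW_LOG)   # => Encoding::InvalidByteSequenceError
p transcode_error(FIXED_LOG) # => nil
puts JSON.generate({ result: nil, error: nil, log: FIXED_LOG })
```

Note that `force_encoding` changes only the label, not the bytes, so it helps only when the bytes really are valid UTF-8, which appears to be the case here.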

So, until we come up with a cleaner, official fix, here is a step-by-step guide on how to patch it yourself.

1. Run `bosh-init deploy manifest.yml` once, and let it fail

2. Go into `~/.bosh_init/installations/{install uuid}/blobs` (the `{install uuid}` can be found in the error message that you got at step 1)

3. Look for the largest file in that folder (there should be three files), and note its name (it should look like a UUID)

4. Create a `tmp` folder here, and extract the file into it: `tar -xvzf {file} -C tmp`

5. Edit `tmp/vendor/bundle/ruby/2.1.0/gems/bosh_cpi-1.3093.0/lib/bosh/cpi/cli.rb` (note: if the file does not exist, you most likely picked the wrong file out of the three)

6. Patch line 112 so that it reads `log: @logs_string_io.string.force_encoding(Encoding::UTF_8),` and save the file

7. From inside the `tmp` folder, repack the archive: `tar -cvzf ../patched.tgz *`

8. Rename `patched.tgz` to the original file name from step 3 (you might want to rename the original file first, as a backup)

9. Generate the SHA-1 of the patched file with `sha1sum {file}`, and write it down

10. Edit `~/.bosh_init/installations/{install uuid}/compiled_packages.json`, search for the `BlobID` that matches the file name from step 3, and update the corresponding `BlobSHA1` with the value from step 9

11. That's it. Go back into your working folder, and run `bosh-init deploy manifest.yml` one more time.
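Steps 9 and 10 can also be scripted. The sketch below is only an illustration: the helper names `update_blob_sha1!` and `repoint_blob` are made up, and because the exact layout of `compiled_packages.json` is not shown here, the code assumes nothing beyond the presence of `BlobID` and `BlobSHA1` keys and simply walks the whole JSON structure. It rewrites the file with `JSON.pretty_generate`, so the formatting may change; keep a backup of the original.

```ruby
require 'digest/sha1'
require 'json'

# Walk nested hashes and arrays; wherever a hash has BlobID == blob_id,
# set its BlobSHA1 to sha1. Returns the number of entries updated.
def update_blob_sha1!(node, blob_id, sha1)
  case node
  when Hash
    updated = 0
    if node['BlobID'] == blob_id
      node['BlobSHA1'] = sha1
      updated += 1
    end
    node.each_value { |child| updated += update_blob_sha1!(child, blob_id, sha1) }
    updated
  when Array
    node.sum { |child| update_blob_sha1!(child, blob_id, sha1) }
  else
    0
  end
end

# install_dir is ~/.bosh_init/installations/{install uuid};
# blob_id is the file name noted in step 3 (now the patched archive).
def repoint_blob(install_dir, blob_id)
  index_path = File.join(install_dir, 'compiled_packages.json')
  sha1 = Digest::SHA1.file(File.join(install_dir, 'blobs', blob_id)).hexdigest
  index = JSON.parse(File.read(index_path))
  count = update_blob_sha1!(index, blob_id, sha1)
  File.write(index_path, JSON.pretty_generate(index)) if count > 0
  count
end

# Runs only when invoked as a script with both arguments.
if __FILE__ == $PROGRAM_NAME && ARGV.length == 2
  puts "updated #{repoint_blob(ARGV[0], ARGV[1])} entries"
end
```

Saved under a name of your choice, for example `repoint_blob.rb`, it would be run as `ruby repoint_blob.rb ~/.bosh_init/installations/{install uuid} {file}`. If it reports 0 updated entries, the blob name probably does not match the one from step 3.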