CAMeL Tools is a suite of Arabic natural language processing tools developed by the CAMeL Lab at New York University Abu Dhabi.

Please use GitHub Issues to report a bug or to ask for help using CAMeL Tools.

You will need Python 3.7 - 3.10 (64-bit) as well as the Rust compiler installed.
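
As a quick sanity check before installing, the requirements above can be verified from Python itself. The following is a minimal sketch, not part of CAMeL Tools: the function names are hypothetical, the version range is taken from this README, and the Rust check only assumes that the compiler is exposed as `rustc` on your PATH.

```python
import shutil
import struct
import sys

# Supported range as stated in this README: Python 3.7 through 3.10.
MIN_VERSION = (3, 7)
MAX_VERSION = (3, 10)


def python_version_supported(version=None):
    """Return True if (major, minor) lies within the supported range."""
    major_minor = tuple(version or sys.version_info)[:2]
    return MIN_VERSION <= major_minor <= MAX_VERSION


def is_64_bit():
    """Return True if this interpreter is a 64-bit build (8-byte pointers)."""
    return struct.calcsize("P") * 8 == 64


def rust_available():
    """Return True if a `rustc` executable is found on PATH (assumed name)."""
    return shutil.which("rustc") is not None


if __name__ == "__main__":
    print("Python version supported:", python_version_supported())
    print("64-bit interpreter:", is_64_bit())
    print("Rust compiler found:", rust_available())
```

If any of the three checks prints False, fix that requirement before running the install commands.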

On Linux and macOS, you will need to install some additional dependencies, primarily CMake and Boost.

On Ubuntu/Debian you can install these dependencies by running:

sudo apt-get install cmake libboost-all-dev

On macOS you can install them using Homebrew by running:

brew install cmake boost

pip install camel-tools

# or run the following if you already have camel_tools installed

pip install camel-tools --upgrade

On Apple silicon Macs you may have to run the following instead:

CMAKE_OSX_ARCHITECTURES=arm64 pip install camel-tools

# or run the following if you already have camel_tools installed

CMAKE_OSX_ARCHITECTURES=arm64 pip install camel-tools --upgrade

# Clone the repo

git clone https://github.com/CAMeL-Lab/camel_tools.git

cd camel_tools

# Install from source

pip install .

# or run the following if you already have camel_tools installed

pip install --upgrade .

To install the datasets required by CAMeL Tools components, run one of the following:

# To install all datasets

camel_data -i all

# or just the datasets for morphology and MLE disambiguation

camel_data -i light

# or just the default datasets for each component

camel_data -i defaults

See Available Packages for a list of all available datasets.

By default, data is stored in ~/.camel_tools. To install the data in a different location, set the CAMELTOOLS_DATA environment variable to the desired path.

Add the following to your .bashrc, .zshrc, .profile, etc:

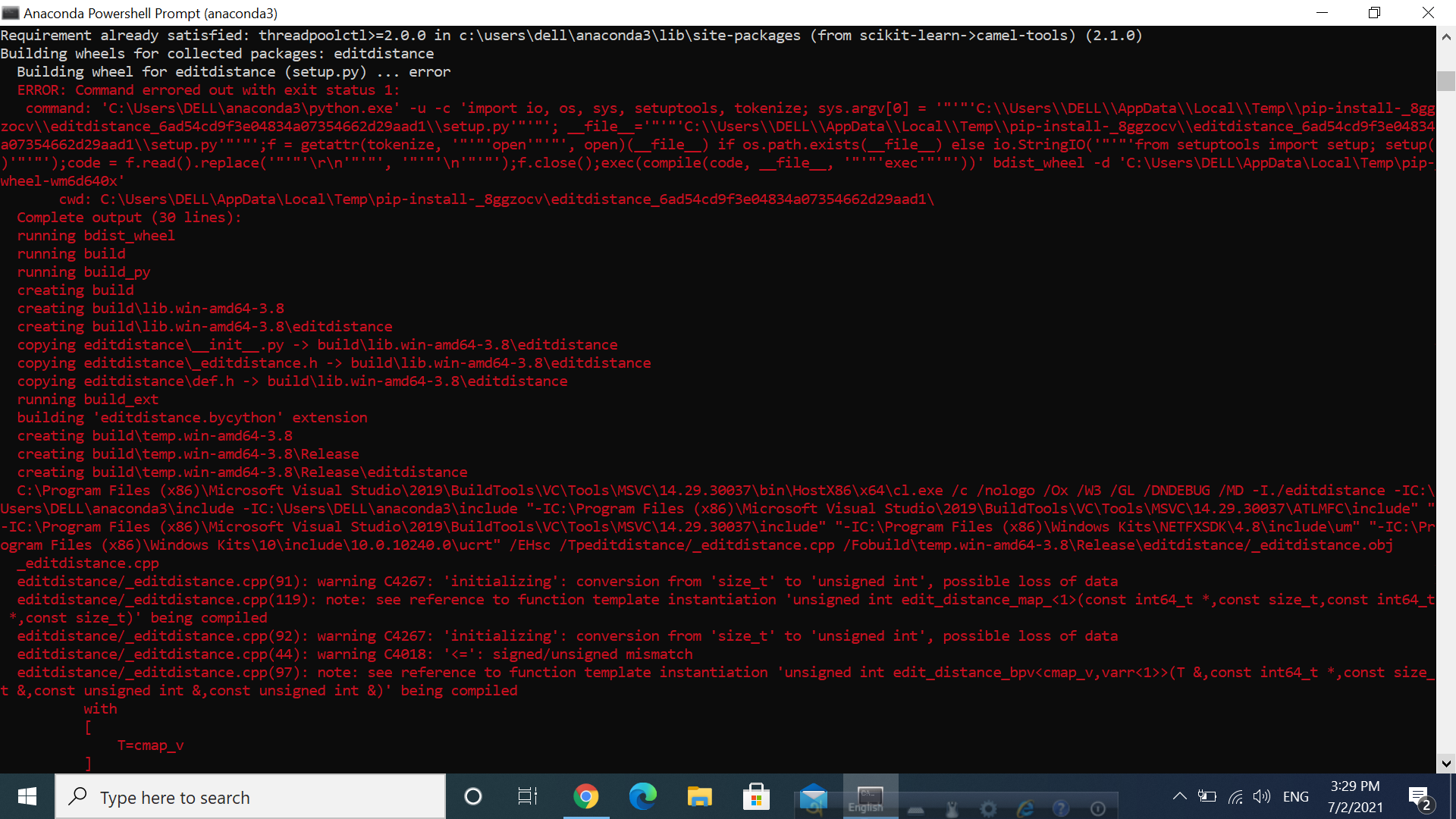

export CAMELTOOLS_DATA=/path/to/camel_tools_data

Note: CAMeL Tools has been tested on Windows 10. The Dialect Identification component is not available on Windows at this time.

pip install camel-tools -f https://download.pytorch.org/whl/torch_stable.html

# or run the following if you already have camel_tools installed

pip install --upgrade -f https://download.pytorch.org/whl/torch_stable.html camel-tools

# Clone the repo

git clone https://github.com/CAMeL-Lab/camel_tools.git

cd camel_tools

# Install from source

pip install -f https://download.pytorch.org/whl/torch_stable.html .

# or run the following if you already have camel_tools installed

pip install --upgrade -f https://download.pytorch.org/whl/torch_stable.html .

To install the data packages required by CAMeL Tools components, run one of the following commands:

# To install all datasets

camel_data -i all

# or just the datasets for morphology and MLE disambiguation

camel_data -i light

# or just the default datasets for each component

camel_data -i defaults

See Available Packages for a list of all available datasets.

By default, data is stored in C:\Users\your_user_name\AppData\Roaming\camel_tools. To install the data in a different location, set the CAMELTOOLS_DATA environment variable to the desired path. The steps below show how to do so on Windows 10:

- Press the Windows button and type env.

- Click on Edit the system environment variables (Control panel).

- Click on the Environment Variables... button.

- Click on the New... button under the User variables panel.

- Type CAMELTOOLS_DATA in the Variable name input box and the desired data path in Variable value. Alternatively, you can browse for the data directory by clicking on the Browse Directory... button.

- Click OK on all the opened windows.
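
To confirm which data directory will be picked up after setting (or not setting) the variable, the lookup rule described in this README can be sketched in Python. This is an illustrative sketch only, not the code CAMeL Tools uses internally: the function name `resolve_data_dir` and its parameters are hypothetical, and reading the Windows default from the `APPDATA` environment variable is an assumption based on the default path quoted above.

```python
import os
import sys
from pathlib import Path


def resolve_data_dir(env=None, platform=None, home=None):
    """Return the data directory implied by the rules in this README.

    Hypothetical helper: CAMELTOOLS_DATA wins if set; otherwise fall back
    to the per-platform default location.
    """
    env = os.environ if env is None else env
    platform = sys.platform if platform is None else platform
    home = Path.home() if home is None else Path(home)

    # An explicit override always takes precedence.
    override = env.get("CAMELTOOLS_DATA")
    if override:
        return Path(override)

    if platform.startswith("win"):
        # Assumption: the Roaming AppData folder is exposed via APPDATA.
        appdata = env.get("APPDATA")
        base = Path(appdata) if appdata else home / "AppData" / "Roaming"
        return base / "camel_tools"

    # Linux and macOS default.
    return home / ".camel_tools"


if __name__ == "__main__":
    print("Data directory:", resolve_data_dir())
```

Running the script in a new terminal after changing the variable is a simple way to check that the new value is visible to Python processes.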

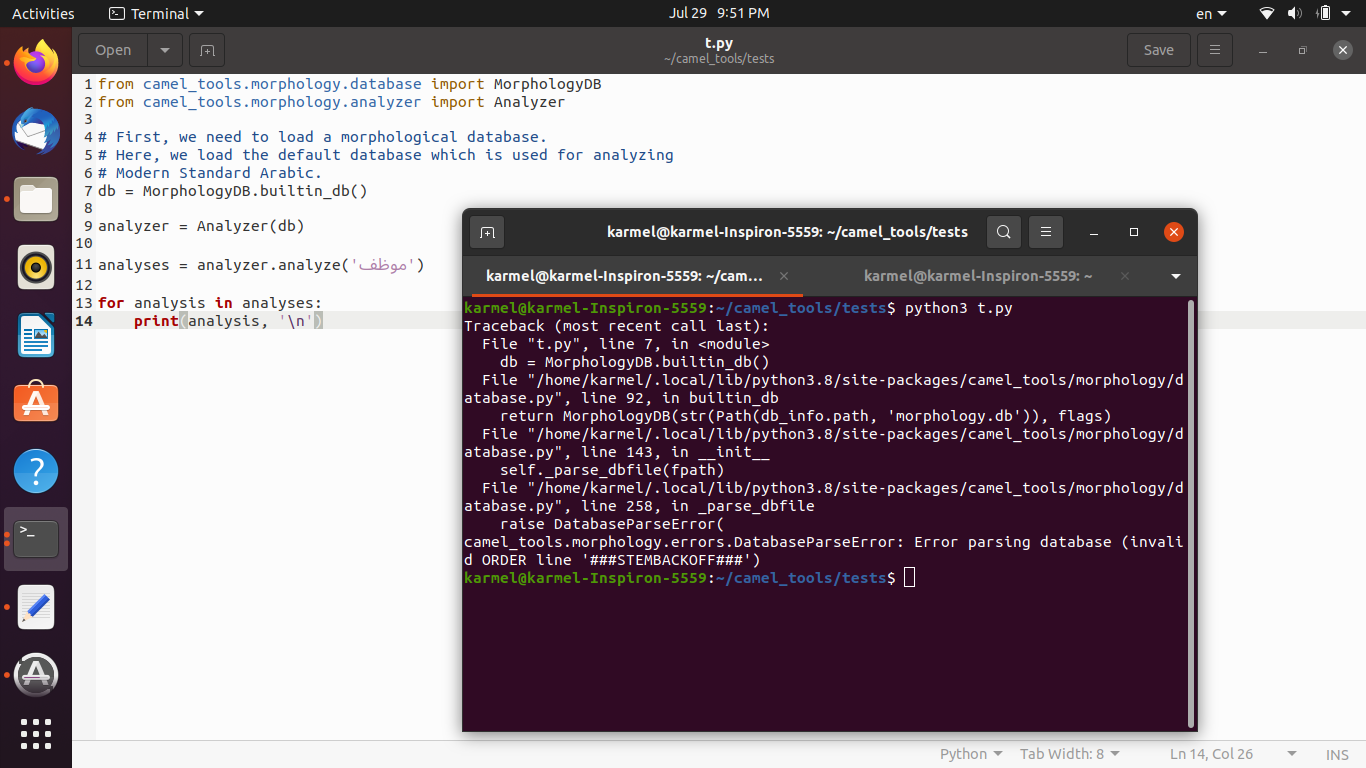

To get started, you can follow along with the Guided Tour for a quick overview of the components provided by CAMeL Tools.

You can find the full online documentation here for both the command-line tools and the Python API.

Alternatively, you can build your own local copy of the documentation as follows:

# Install dependencies

pip install sphinx recommonmark sphinx-rtd-theme

# Go to docs subdirectory

cd docs

# Build HTML docs

make html

This should compile all the HTML documentation into docs/build/html.

If you find CAMeL Tools useful in your research, please cite our paper:

@inproceedings{obeid-etal-2020-camel,

title = "{CAM}e{L} Tools: An Open Source Python Toolkit for {A}rabic Natural Language Processing",

author = "Obeid, Ossama and

Zalmout, Nasser and

Khalifa, Salam and

Taji, Dima and

Oudah, Mai and

Alhafni, Bashar and

Inoue, Go and

Eryani, Fadhl and

Erdmann, Alexander and

Habash, Nizar",

booktitle = "Proceedings of the 12th Language Resources and Evaluation Conference",

month = may,

year = "2020",

address = "Marseille, France",

publisher = "European Language Resources Association",

url = "https://www.aclweb.org/anthology/2020.lrec-1.868",

pages = "7022--7032",

abstract = "We present CAMeL Tools, a collection of open-source tools for Arabic natural language processing in Python. CAMeL Tools currently provides utilities for pre-processing, morphological modeling, Dialect Identification, Named Entity Recognition and Sentiment Analysis. In this paper, we describe the design of CAMeL Tools and the functionalities it provides.",

language = "English",

ISBN = "979-10-95546-34-4",

}

CAMeL Tools is available under the MIT license. See the LICENSE file for more info.

If you would like to contribute to CAMeL Tools, please read the CONTRIBUTE.rst file.