Performance testing companion for React and React Native.

- The problem

- This solution

- Installation and setup

- CI setup

- Assessing CI stability

- Analyzing results

- API

- External References

- Contributing

- License

- Made with ❤️ at Callstack

You want your React Native app to perform well and fast at all times. As a part of this goal, you profile the app, observe render patterns, apply memoization in the right places, etc. But it's all manual, and it's too easy to unintentionally introduce performance regressions that would only get caught during QA or, worse, by your users.

Reassure allows you to automate React Native app performance regression testing on CI or a local machine. In the same way that you write integration and unit tests that automatically verify that your app still works correctly, you can write performance tests that verify that your app still performs well.

You can think of it as a React performance testing library. In fact, Reassure is designed to reuse as much of your React Native Testing Library tests and setup as possible.

Reassure works by measuring render characteristics (duration and count) of the testing scenario you provide and comparing them to the stable version. It repeats the scenario multiple times to reduce the impact of random variations in render times caused by the runtime environment. Then, it applies statistical analysis to determine whether the observed changes are statistically significant. As a result, it generates a human-readable report summarizing the results and displays it on the CI or as a comment on your pull request.
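To make the statistical step more concrete, here is a minimal sketch of one common way to decide whether a change in mean render duration is significant: a two-sample z-test on the baseline and current series. This is an illustration under our own assumptions, not Reassure's actual implementation; the function names (compareDurations, normalCdf), the 0.05 threshold and the normal approximation are all hypothetical choices.

```typescript
// Hypothetical sketch, NOT Reassure's implementation: decides whether the
// difference in mean render duration between two series is significant.

function mean(xs: number[]): number {
  return xs.reduce((sum, x) => sum + x, 0) / xs.length;
}

// Sample standard deviation (n - 1 in the denominator); needs >= 2 samples.
function stdev(xs: number[]): number {
  if (xs.length < 2) throw new Error('need at least two samples');
  const m = mean(xs);
  return Math.sqrt(xs.reduce((sum, x) => sum + (x - m) ** 2, 0) / (xs.length - 1));
}

// erf approximation (Abramowitz & Stegun 7.1.26), accurate to about 1.5e-7.
function erf(x: number): number {
  const sign = x < 0 ? -1 : 1;
  const ax = Math.abs(x);
  const t = 1 / (1 + 0.3275911 * ax);
  const poly =
    t * (0.254829592 + t * (-0.284496736 + t * (1.421413741 + t * (-1.453152027 + t * 1.061405429))));
  return sign * (1 - poly * Math.exp(-ax * ax));
}

// Cumulative distribution function of the standard normal distribution.
function normalCdf(z: number): number {
  return 0.5 * (1 + erf(z / Math.SQRT2));
}

interface Comparison {
  meanDiff: number; // current mean minus baseline mean, in ms
  z: number;
  pValue: number; // two-sided
  isSignificant: boolean;
}

// Two-sample z-test using the normal approximation (good enough for a sketch,
// somewhat optimistic for small series such as 10 runs).
function compareDurations(baseline: number[], current: number[], alpha = 0.05): Comparison {
  const meanDiff = mean(current) - mean(baseline);
  const standardError = Math.sqrt(
    stdev(baseline) ** 2 / baseline.length + stdev(current) ** 2 / current.length,
  );
  let z: number;
  if (standardError === 0) {
    // No noise at all: any difference counts as significant.
    z = meanDiff === 0 ? 0 : meanDiff > 0 ? Infinity : -Infinity;
  } else {
    z = meanDiff / standardError;
  }
  const pValue = 2 * (1 - normalCdf(Math.abs(z)));
  return { meanDiff, z, pValue, isSignificant: pValue < alpha };
}

const baseline = [10.1, 9.8, 10.3, 10.0, 9.9];
const current = [11.2, 11.0, 11.5, 10.9, 11.3];
console.log(compareDurations(baseline, current).isSignificant); // true
```

Repeating the scenario matters here: the standard error shrinks as more runs are collected, so a real regression stands out more clearly from runtime noise.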

In addition to measuring component render times, it can also measure the execution of regular JavaScript functions.

To install Reassure, run the following command in your app folder:

Using yarn

yarn add --dev reassure

Using npm

npm install --save-dev reassure

You will also need a working Jest setup as well as either React Native Testing Library or React Testing Library.

You can check our example projects:

Reassure will try to detect which Testing Library you have installed. If both React Native Testing Library and React Testing Library are present, it will warn you about that and give precedence to React Native Testing Library. You can explicitly specify which Testing Library to use with the configure function:

import { configure } from 'reassure';

configure({ testingLibrary: 'react-native' });

// or

configure({ testingLibrary: 'react' });

You should set it in your Jest setup file, and you can override it in particular test files if needed.

Now that the library is installed, you can write your first test scenario in a file with a .perf-test.js or .perf-test.tsx extension:

// ComponentUnderTest.perf-test.tsx

import { measurePerformance } from 'reassure';

import { ComponentUnderTest } from './ComponentUnderTest';

test('Simple test', async () => {

await measurePerformance(<ComponentUnderTest />);

});

This test will measure the render times of ComponentUnderTest during mounting and the resulting sync effects.

Note: Reassure will automatically match test filenames using Jest's --testMatch option with value "<rootDir>/**/*.perf-test.[jt]s?(x)". However, if you want to pass a custom --testMatch option, you may add it to the reassure measure script to pass your own glob. More about --testMatch in the Jest docs.
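The default glob reads as "any file whose name ends in .perf-test. followed by js, ts, jsx or tsx". As a rough illustration only, here is a regular expression that we assume matches the same file names; Jest itself evaluates the glob, so the hypothetical isPerfTestFile helper below is a way to reason about which files get picked up, not a replacement for --testMatch.

```typescript
// Hypothetical helper: a regular expression we assume matches the same file
// names as Reassure's default glob "<rootDir>/**/*.perf-test.[jt]s?(x)".
// "[jt]s?(x)" means: "j" or "t", then "s", then an optional "x".
const PERF_TEST_FILE = /\.perf-test\.[jt]sx?$/;

function isPerfTestFile(filePath: string): boolean {
  return PERF_TEST_FILE.test(filePath);
}

const candidates = [
  'src/ComponentUnderTest.perf-test.tsx', // matched
  'src/utils/sortItems.perf-test.ts', // matched
  'src/ComponentUnderTest.test.tsx', // regular unit test, not matched
  'src/ComponentUnderTest.perf-test.mjs', // unsupported extension, not matched
];
console.log(candidates.filter(isPerfTestFile));
```

This also shows why regular *.test.tsx files are left out of performance runs and vice versa.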

If your component contains any async logic or you want to test some interaction, you should pass the scenario option:

import { measurePerformance } from 'reassure';

import { screen, fireEvent } from '@testing-library/react-native';

import { ComponentUnderTest } from './ComponentUnderTest';

test('Test with scenario', async () => {

const scenario = async () => {

fireEvent.press(screen.getByText('Go'));

await screen.findByText('Done');

};

await measurePerformance(<ComponentUnderTest />, { scenario });

});

The body of the scenario function uses familiar React Native Testing Library methods.

If you are using a version of React Native Testing Library lower than v10.1.0, where the screen helper is not available, the scenario function provides it as its first argument:

import { measurePerformance } from 'reassure';

import { fireEvent } from '@testing-library/react-native';

import { ComponentUnderTest } from './ComponentUnderTest';

test('Test with scenario', async () => {

const scenario = async (screen) => {

fireEvent.press(screen.getByText('Go'));

await screen.findByText('Done');

};

await measurePerformance(<ComponentUnderTest />, { scenario });

});

If your test contains any async changes, you will need to make sure that the scenario waits for these changes to settle, e.g. using findBy queries or the waitFor or waitForElementToBeRemoved functions from RNTL.

For more examples, look into our example apps:

To measure your first test performance, you need to run the following command in the terminal:

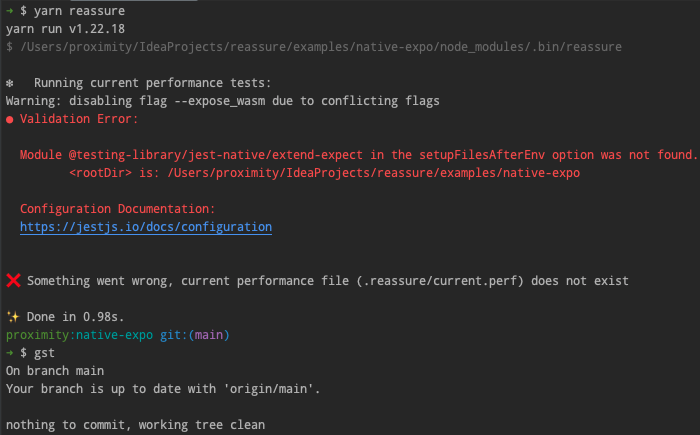

yarn reassure

This command will run your tests multiple times using Jest, gather performance statistics, and write them to the .reassure/current.perf file. To check your setup, verify that the output file exists after running the command for the first time.

Note: You can add the .reassure/ folder to your .gitignore file to avoid accidentally committing your results.

Reassure CLI will automatically try to detect your source code branch name and commit hash when you are using Git. You can override these options, e.g. if you are using a different version control system:

yarn reassure --branch [branch name] --commit-hash [commit hash]

To make setting up the CI integration and all prerequisites more convenient, we have prepared a CLI command to generate all necessary templates for you to start with.

Simply run:

yarn reassure init

This will generate the following file structure:

├── <ROOT>

│ ├── reassure-tests.sh

│ ├── dangerfile.ts/js (or dangerfile.reassure.ts/js if dangerfile.ts/js already present)

│ └── .gitignore

You can also use the following options to adjust the script further

This is one of the options controlling the level of logs printed into the command prompt while running reassure scripts. It will

Just like the previous, this option also controls the level of logs. It will suppress all logs besides explicit errors.

reassure-tests.sh: a basic script allowing you to run Reassure on CI. More on the importance and structure of this file in the following section.

If your project already contains a dangerfile.ts/js, the CLI will not override it in any way. Instead, it will generate a dangerfile.reassure.ts/js file, allowing you to compare and update your own at your convenience.

If the .gitignore file is present and does not mention reassure yet, the script will append the .reassure/ directory to its end.

To detect performance changes, you must measure the performance of two versions of your code: current (your modified code) and baseline (your reference point, e.g. main branch). To measure performance on two branches, you must either switch branches in Git or clone two copies of your repository.

We want to automate this task to run on the CI. To do that, you will need to create a performance-testing script. You should save it in your repository, e.g. as reassure-tests.sh.

A simple version of such a script, using a branch-changing approach, is as follows:

#!/usr/bin/env bash

# Exit immediately if any command fails
set -e

BASELINE_BRANCH=${BASELINE_BRANCH:="main"}

# Required for `git switch` on CI

git fetch origin

# Gather baseline perf measurements

git switch "$BASELINE_BRANCH"

yarn install --force

yarn reassure --baseline

# Gather current perf measurements & compare results
# (`git switch --detach -` returns to the previously checked-out commit)

git switch --detach -

yarn install --force

yarn reassure

As a final setup step, you must configure your CI to run the performance testing script and output the result. For presenting output, we currently integrate with Danger JS, which supports all major CI tools.

You will need a working Danger JS setup.

Then add the Reassure Danger JS plugin to your dangerfile:

// /<project_root>/dangerfile.reassure.ts (generated by the init script)

import path from 'path';

import { dangerReassure } from 'reassure';

dangerReassure({

inputFilePath: path.join(__dirname, '.reassure/output.md'),

});

If you do not have a Dangerfile (dangerfile.js or dangerfile.ts) yet, you can use the one generated by the reassure init script without making any additional changes.

You can also find it in our example Dangerfile.

Finally, run both the performance testing script & danger in your CI config:

- name: Run performance testing script
  run: ./reassure-tests.sh

- name: Run Danger.js
  run: yarn danger ci
  env:
    GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

You can also check our example GitHub workflow.

The above example is based on GitHub Actions, but configs for other CI providers should be similar; treat it as a reference in such cases.

Note: Your performance tests will run much longer than regular integration tests. That's because we run each test scenario multiple times (by default, 10) and repeat that for two branches of your code. Hence, each test will run 20 times by default, not counting warmup runs, and even more if you increase the number of runs.
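As a back-of-the-envelope check, the number of times one scenario executes in a full comparison follows from the runs and warmupRuns settings (defaults 10 and 1, per the default config in the API section). This hypothetical helper assumes the warmup runs are repeated for both the baseline and the current measurement, since each branch is measured by a separate Reassure invocation.

```typescript
// Hypothetical helper: estimates how many times one test scenario executes
// when comparing two branches (baseline + current).
interface RunSettings {
  runs: number; // measured runs per series (default 10)
  warmupRuns: number; // discarded runs before measuring (default 1)
}

function executionsPerScenario({ runs, warmupRuns }: RunSettings, branches = 2) {
  const measured = runs * branches; // runs that end up in the results
  const total = (runs + warmupRuns) * branches; // everything that actually executes
  return { measured, total };
}

console.log(executionsPerScenario({ runs: 10, warmupRuns: 1 })); // { measured: 20, total: 22 }
console.log(executionsPerScenario({ runs: 50, warmupRuns: 1 })); // { measured: 100, total: 102 }
```

Multiplying the total by a scenario's typical duration gives a rough lower bound for the CI time that scenario adds.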

During performance measurements, we measure React component render times with microsecond precision using React.Profiler. This means the same code will run faster or slower depending on the machine. For this reason, baseline and current measurements need to be run on the same machine, ideally one right after the other.

Moreover, your CI agent needs to have stable performance to achieve meaningful results. It does not matter if your agent is fast or slow as long as it is consistent in its performance. That's why the agent should not be used during the performance tests for any other work that might impact measuring render times.

To help you assess your machine's stability, you can use the reassure check-stability command. It runs performance measurements twice for the current code, so baseline and current measurements refer to the same code. In such a case, the expected changes are 0% (no change). The degree of random performance changes will reflect the stability of your machine.

This command can be run both on CI and local machines.

Normally, the random changes should be below 5%. Results of 10% and more are considered too high, meaning you should work on tweaking your machine's stability.
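Those thresholds translate into a quick check. The sketch below takes mean durations from two measurements of the same code (the situation check-stability sets up) and classifies the relative change using the 5% and 10% limits above. The function name classifyStability and the "borderline" label for the range between 5% and 10% are our own hypothetical additions, since the text only calls out below 5% as normal and 10% or more as too high.

```typescript
type Stability = 'stable' | 'borderline' | 'unstable';

// Hypothetical helper: classifies the random change between two measurements
// of the same code (e.g. mean durations reported by a stability check).
function classifyStability(firstMeanMs: number, secondMeanMs: number): Stability {
  if (firstMeanMs <= 0) throw new Error('first mean must be positive');
  const relativeChange = Math.abs(secondMeanMs - firstMeanMs) / firstMeanMs;
  if (relativeChange < 0.05) return 'stable'; // below 5%: normal noise
  if (relativeChange < 0.1) return 'borderline'; // 5% up to 10%: keep an eye on it
  return 'unstable'; // 10% or more: work on the machine's stability
}

console.log(classifyStability(20.0, 20.6)); // 3% change: stable
console.log(classifyStability(20.0, 22.5)); // 12.5% change: unstable
```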

Note: As a last-resort trick, you can increase the runs option from the default value of 10 to 20, 50 or even 100 for all or some of your tests, based on the assumption that more test runs will even out measurement fluctuations. That will, however, make your tests run even longer.
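The reasoning behind raising the number of runs is the usual statistical one: if run-to-run noise has a standard deviation σ, the uncertainty of the mean shrinks roughly as σ/√n. The sketch below assumes independent runs (which a busy CI machine may not provide) and a hypothetical noise level of 2 ms, and shows how slowly that uncertainty falls.

```typescript
// Standard error of the mean for n independent runs with noise stdev sigma.
function standardErrorOfMean(sigmaMs: number, runs: number): number {
  return sigmaMs / Math.sqrt(runs);
}

// Assumed example noise: 2 ms standard deviation per run (hypothetical value).
const sigmaMs = 2;
for (const runs of [10, 20, 50, 100]) {
  console.log(`${runs} runs -> ±${standardErrorOfMean(sigmaMs, runs).toFixed(2)} ms`);
}
```

Going from 10 to 100 runs only shrinks the uncertainty by a factor of √10 (about 3.2) while making the tests ten times longer, which is why this is a last resort rather than the first fix.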

You can refer to our example GitHub workflow.

Looking at the example, you can see that test scenarios are assigned to the following categories:

- Significant Changes To Duration shows test scenarios where the performance change is statistically significant and should be looked into as it marks a potential performance loss/improvement

- Meaningless Changes To Duration shows test scenarios where the performance change is not statistically significant

- Changes To Count shows test scenarios where the render or execution count did change

- Added Scenarios shows test scenarios which do not exist in the baseline measurements

- Removed Scenarios shows test scenarios which do not exist in the current measurements
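To make these categories concrete, the following sketch sorts scenarios into them from per-scenario results of the baseline and current runs. The data shape (ScenarioStats), the function name categorize and the caller-supplied isSignificant predicate are hypothetical; in this sketch a scenario present in both runs lands in exactly one duration category and, independently, in the count list when its mean count differs.

```typescript
// Hypothetical data shape: one entry per test scenario name.
interface ScenarioStats {
  meanDuration: number; // ms
  meanCount: number; // renders or executions
}

type Results = Record<string, ScenarioStats>;

interface Report {
  significant: string[]; // Significant Changes To Duration
  meaningless: string[]; // Meaningless Changes To Duration
  countChanged: string[]; // Changes To Count
  added: string[]; // Added Scenarios
  removed: string[]; // Removed Scenarios
}

function categorize(
  baseline: Results,
  current: Results,
  isSignificant: (name: string) => boolean, // significance test supplied by the caller
): Report {
  const report: Report = { significant: [], meaningless: [], countChanged: [], added: [], removed: [] };
  Object.keys(current).forEach((name) => {
    const base = baseline[name];
    const cur = current[name];
    if (base === undefined) {
      report.added.push(name); // not present in the baseline measurements
      return;
    }
    if (isSignificant(name)) report.significant.push(name);
    else report.meaningless.push(name);
    if (cur.meanCount !== base.meanCount) report.countChanged.push(name);
  });
  Object.keys(baseline).forEach((name) => {
    if (!(name in current)) report.removed.push(name); // not present in the current measurements
  });
  return report;
}

const report = categorize(
  { Login: { meanDuration: 10, meanCount: 1 }, List: { meanDuration: 5, meanCount: 2 }, Old: { meanDuration: 3, meanCount: 1 } },
  { Login: { meanDuration: 15, meanCount: 1 }, List: { meanDuration: 5.1, meanCount: 3 }, New: { meanDuration: 2, meanCount: 1 } },
  (name) => name === 'Login', // pretend only Login changed significantly
);
console.log(report);
```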

measurePerformance is a custom wrapper for the RNTL render function, responsible for rendering the passed screen inside a React.Profiler component, measuring its performance and writing results to the output file. You can pass an optional options object to customize aspects of the testing.

async function measurePerformance(

ui: React.ReactElement,

options?: MeasureOptions,

): Promise<MeasureResults>

interface MeasureOptions {

runs?: number;

warmupRuns?: number;

wrapper?: React.ComponentType<{ children: ReactElement }>;

scenario?: (view?: RenderResult) => Promise<any>;

writeFile?: boolean;

}

- runs: number of runs per series for the particular test.
- warmupRuns: number of additional warmup runs that will be done and discarded before the actual runs (default 1).
- wrapper: React component, such as a Provider, which the ui will be wrapped with. Note: the render duration of the wrapper itself is excluded from the results; only the wrapped component is measured.
- scenario: a custom async function that defines user interaction within the UI using RNTL or RTL functions.
- writeFile: (default true) whether to write output to the file.

Allows you to wrap any synchronous function, measure its execution times and write results to the output file. You can use optional options to customize aspects of the testing. Note: the execution count will always be one.

async function measureFunction(

fn: () => void,

options?: MeasureFunctionOptions

): Promise<MeasureResults>

interface MeasureFunctionOptions {

runs?: number;

warmupRuns?: number;

}

- runs: number of runs per series for the particular test.
- warmupRuns: number of additional warmup runs that will be done and discarded before the actual runs.
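Conceptually, measuring a plain function comes down to timing repeated calls and discarding the warmup ones. The self-contained sketch below illustrates that idea with the global performance.now() timer; measureFunctionSketch and its result shape are hypothetical and are not Reassure's measureFunction, which additionally writes results to the output file.

```typescript
interface FunctionMeasurement {
  durations: number[]; // ms, one per measured run
  meanDuration: number;
  count: number; // executions per run; always 1 for a plain function call
}

// Hypothetical sketch of timing a synchronous function (not Reassure's code).
function measureFunctionSketch(fn: () => void, runs = 10, warmupRuns = 1): FunctionMeasurement {
  // Warmup runs let the JIT and caches settle; their timings are discarded.
  for (let i = 0; i < warmupRuns; i += 1) fn();

  const durations: number[] = [];
  for (let i = 0; i < runs; i += 1) {
    const start = performance.now();
    fn();
    durations.push(performance.now() - start);
  }
  const meanDuration = durations.reduce((sum, d) => sum + d, 0) / durations.length;
  return { durations, meanDuration, count: 1 };
}

// Usage: time building and sorting a 10,000-element array on every run.
const result = measureFunctionSketch(() => {
  const values: number[] = [];
  for (let i = 0; i < 10000; i += 1) values.push(Math.random());
  values.sort((a, b) => a - b);
});
console.log(`${result.durations.length} runs, mean ${result.meanDuration.toFixed(3)} ms`);
```

Building fresh input inside fn keeps every run doing the same work; sorting an already-sorted array on later runs would skew the timings.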

This is the default config used by the measuring script. It can be overridden with the configure function.

type Config = {

runs?: number;

warmupRuns?: number;

outputFile?: string;

verbose?: boolean;

testingLibrary?:

| 'react-native'

| 'react'

| { render: (component: React.ReactElement<any>) => any; cleanup: () => any };

};

const defaultConfig: Config = {

runs: 10,

warmupRuns: 1,

outputFile: '.reassure/current.perf',

verbose: false,

testingLibrary: undefined, // Will try to auto-detect RNTL first, then RTL

};

- runs: the number of repeated runs in a series per test (allows for higher accuracy by aggregating more data). Should be handled with care.
- warmupRuns: the number of additional warmup runs that will be done and discarded before the actual runs.
- outputFile: the name of the file the records will be saved to.
- verbose: make Reassure log more, e.g. for debugging purposes.
- testingLibrary: where to look for render and cleanup functions; supported values are 'react-native', 'react' or an object providing custom render and cleanup functions.

function configure(customConfig: Partial<Config>): void;

The configure function can override the default config parameters.

resetToDefault(): void

Resets the current config to the original defaultConfig object.
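The configure/resetToDefault pair behaves like a small settings store. The sketch below models that behaviour in isolation with a hypothetical createConfigStore helper and a trimmed-down config. It assumes configure shallow-merges the partial object over the current values; that merge strategy is our assumption, not something documented here.

```typescript
// Trimmed-down copy of the Config fields documented above (testingLibrary omitted).
interface SketchConfig {
  runs: number;
  warmupRuns: number;
  outputFile: string;
  verbose: boolean;
}

const sketchDefaults: SketchConfig = {
  runs: 10,
  warmupRuns: 1,
  outputFile: '.reassure/current.perf',
  verbose: false,
};

// Hypothetical store; assumes configure() shallow-merges over the current values.
function createConfigStore(defaults: SketchConfig) {
  let current: SketchConfig = { ...defaults };
  return {
    configure(custom: Partial<SketchConfig>): void {
      current = { ...current, ...custom };
    },
    resetToDefault(): void {
      current = { ...defaults }; // defaults object itself is never mutated
    },
    get(): SketchConfig {
      return current;
    },
  };
}

const store = createConfigStore(sketchDefaults);
store.configure({ runs: 20 });
store.configure({ verbose: true });
console.log(store.get().runs, store.get().verbose); // 20 true
store.resetToDefault();
console.log(store.get().runs, store.get().verbose); // 10 false
```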

You can use the following environment variables to alter your test runner settings:

- TEST_RUNNER_PATH: an alternative path for your test runner. Defaults to 'node_modules/.bin/jest' or, on Windows, 'node_modules/jest/bin/jest'.
- TEST_RUNNER_ARGS: a set of arguments fed to the runner. Defaults to '--runInBand --testMatch "<rootDir>/**/*.perf-test.[jt]s?(x)"'.

Example:

TEST_RUNNER_PATH=myOwnPath/jest/bin yarn reassure

- The Ultimate Guide to React Native Optimization 2023 Edition - Mentioned in the "Make your app consistently fast" chapter.

See the contributing guide to learn how to contribute to the repository and the development workflow.

Reassure is an Open Source project and will always remain free to use. The project has been developed in close partnership with Entain and was originally their in-house project. Thanks to their willingness to develop the React & React Native ecosystem, we decided to make it Open Source. If you think it's cool, please star it 🌟

Callstack is a group of React and React Native experts. If you need help with these or want to say hi, contact us at [email protected]!

Like the project? ⚛️ Join the Callstack team who does amazing stuff for clients and drives React Native Open Source! 🔥