
Upload data to standardized observation repositories

The SOS Importer is a tool for importing observations into standardized observation repositories. This enables interoperable spatial data and information exchange.

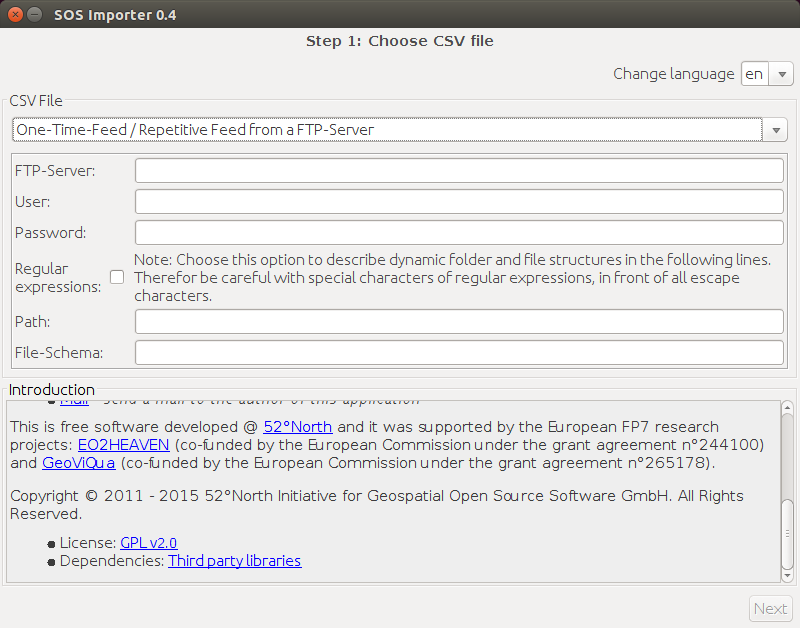

The SOS Importer is a tool for importing observations from CSV files into a running SOS instance. The CSV files can be available either locally or remotely (FTP support). The application uses the wizard design pattern, which guides the user through a sequence of steps. The steps and their purposes are briefly characterized in the table below.

Wizard Module

The Wizard Module is the GUI wizard for creating the configurations (metadata about the CSV file).

Feeder Module

The Feeder Module uses the configurations created by the Wizard Module for importing the data into a running SOS instance.

Please check the how to run section for instructions to start the two modules.


The SOS Importer requires Java 1.7+ and a running SOS instance (OGC SOS v1.0 or v2.0) to work. The wizard module requires a GUI-capable system.

Several sensor web specific terms are used within this topic:

- Feature of Interest

- Observed Property

- Unit Of Measurement

- Procedure or Sensor

If you are not familiar with them, please take a look at this explanatory OGC tutorial. It's short and easy to understand.

| Step | Description |
|---|---|
| 1 | Choose a CSV file from the file system to publish in a SOS instance. Alternatively, you can obtain a CSV file from a remote FTP server. |
| 2 | Provide a preview of the CSV file and select settings for parsing (e.g. which character is used for separating columns). |
| 3 | Display the CSV file in tabular format and assign metadata to each column (e.g. indicate that the second column consists of measured values). Offer customizable settings for parsing (e.g. for date/time patterns). |
| 4 | If more than one date/time, feature of interest, observed property, unit of measurement, sensor identifier or position has been identified in step 3, select the correct associations to the corresponding measured value columns (e.g. state that date/time in column 1 belongs to the measured values in column 3 and date/time in column 2 belongs to the measured values in column 4). When there is exactly one occurrence of a certain type, this type is automatically assigned to all measured values. |
| 5 | Check available metadata for completeness and ask the user to add information in case something is missing (e.g. EPSG code for positions). |
| 6 | When there is no metadata of a particular type present in the CSV file (e.g. sensor id), let the user provide this information (e.g. name and URI of this sensor). |
| 7 | Enter the URL of the Sensor Observation Service to which the measurements and sensor metadata in the CSV file shall be uploaded. |
| 8 | Summarize the results of the configuration process and provide means for importing the data into the specified SOS instance. |

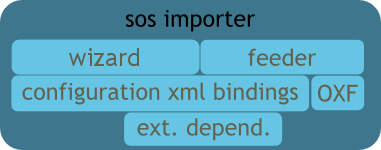

The SOS Importer project now consists of three modules:

- wizard

- feeder

- configuration xml bindings

The first two are "applications" that use the third to do their work: they enable users to store metadata about their CSV file and to import the contained data into a running SOS instance (OGC schema 1.0.0 or 2.0.0), either once or repeatedly. In this process, the wizard module is used to create the XML configuration documents. It depends on the 52n-sos-importer-bindings module to write the configuration files (the XML schema: stable, development). The feeder module reads such a configuration file and the data file it defines, creates the requests for inserting the data, and registers the defined sensors in the SOS if they are not registered yet. For communicating with the SOS, some modules of the OxFramework are used:

- oxf-sos-adapter

- oxf-common

- oxf-feature

- oxf-adapter-api

- 52n-oxf-xmlbeans

Thanks to the new structure of the OxFramework the number of modules and dependencies is reduced. The following figure shows this structure.

The wizard module contains the following major packages:

- `org.n52.sos.importer`
  - `controller` - contains all the business logic, including a `StepController` for each step in the workflow and the `MainController`, which controls the flow of the application.
  - `model` - contains all data holding classes, one for each step, and the overall `XMLModel` built using the `52n-sos-importer-bindings` module.
  - `view` - contains all views (here: `StepXPanel`), including the `MainFrame` and the `BackNextPanel`. The sub packages contain special panels required for missing resources or re-used tabular views.

A good starting point for new developers is to take a look at `MainController.setStepController(StepController)`, `BackNextController.nextButtonClicked()`, and the `StepController`. During each step, the `XMLModel` is updated via `MainController.updateModel()`.

All important constants are stored in org.n52.sos.importer.Constants.

The feeder module contains the following major packages:

- `org.n52.sos.importer.feeder` - contains the classes `Configuration` and `DataFile`, which offer means of accessing the XML configuration and the CSV data file, the application's main class `Feeder`, which controls the application workflow, and the `SensorObservationService` class, which imports the data using the `DataFile` and `Configuration` classes for creating the required requests.
  - `model` - contains data holding classes for resources like feature of interest, sensor, and requests (insert observation, register/insert sensor).
  - `task` - contains the controllers required for one-time and repeated feeding, used by the central `Feeder` class.

A good starting point for new developers is to take a look at `Feeder.main(String[])` and follow the path through the code. When changing something regarding communication with the SOS and data parsing, take a look at `SensorObservationService`, `Configuration`, and `DataFile`.

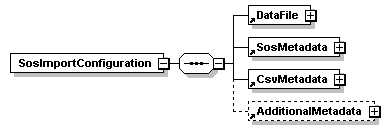

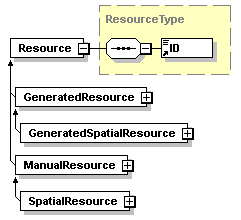

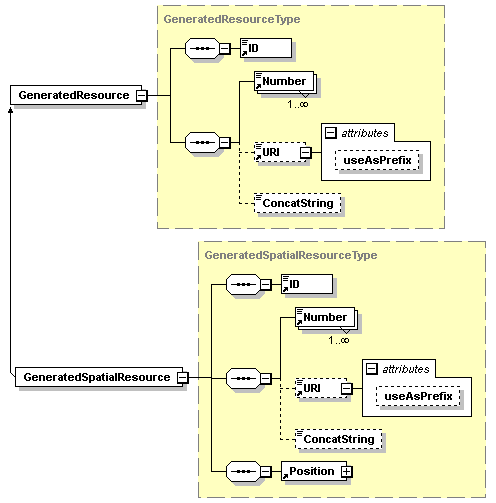

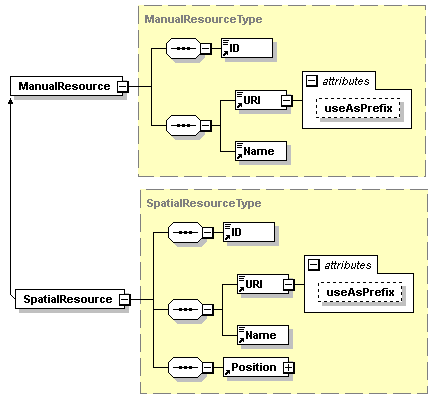

The configuration schema is used to store metadata about the data file and the import procedure. The following diagrams show the XML schema used within the wizard and feeder modules for storing and re-using the metadata that is required to perform the import process. In other words, the configuration XML files use this schema. These configuration files are required to create messages that the SOS understands.

Each SosImportConfiguration contains three mandatory sections. These are DataFile, SosMetadata, and CsvMetadata. The section AdditionalMetadata is optional.

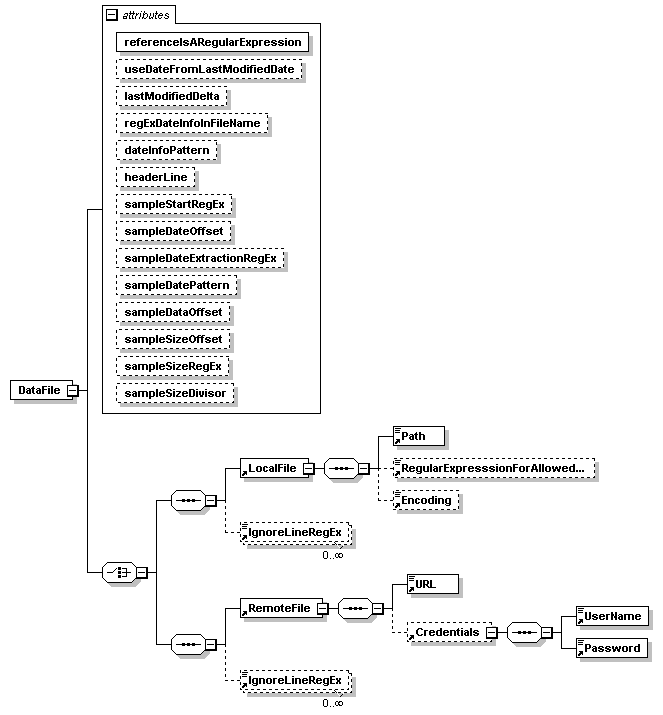

The DataFile contains information about the file containing the observations. Its attributes are described in the table below. The second section of the DataFile is the choice between LocalFile and RemoteFile. A LocalFile has a Path and two optional parameters. The Encoding is used while reading the file (e.g. UTF-8, when the file is not encoded in the system default that Java would otherwise use). The RegularExpresssionForAllowedFileNames is used to find files if Path is not a file but a directory (e.g. you might have a data folder with files from different sensors). A RemoteFile needs a URL and optional Credentials (ℹ️ These are stored in plain text!). The last optional section of the DataFile is the IgnoreLineRegEx array. Here, you can define regular expressions to ignore lines that cause problems during the import process or contain data that should not be imported.
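To make the structure described above more concrete, here is a minimal sketch of a `DataFile` section that reads a local file. The path, the encoding value, and the regular expression are hypothetical. The order of the child elements is an assumption, and the namespace declaration of the surrounding `SosImportConfiguration` is omitted, so a real configuration should be validated against the XML schema.

```xml
<!-- Sketch only: path, encoding and regular expression are made-up values -->
<DataFile referenceIsARegularExpression="false">
  <LocalFile>
    <Path>/data/sensors/station-1.csv</Path>
    <!-- Encoding used while reading the file instead of the system default -->
    <Encoding>UTF-8</Encoding>
  </LocalFile>
  <!-- Ignore every line that starts with "//" during the import -->
  <IgnoreLineRegEx>^//.*</IgnoreLineRegEx>
</DataFile>
```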

| Attribute | Description | Optional |
|---|---|---|
| `referenceIsARegularExpression` | If set to TRUE, the LocalFile.Path or RemoteFile.URL contains a regular expression that needs special handling before retrieving the data file. | ❌ |
| `useDateFromLastModifiedDate` | If set to TRUE, the last modified date of the data file will be used as date value for the observations of this data file. | ✅ |
| `lastModifiedDelta` | If available and useDateFromLastModifiedDate is set to TRUE, the date value will be set to n days before the last modified date. | ✅ |
| `regExDateInfoInFileName` | If present, the contained regular expression will be applied to the file name of the data file to extract date information in combination with the "dateInfoPattern". Hence, this pattern is used to extract the date string, and the dateInfoPattern is used to convert this date string into valid date information. The pattern MUST contain one group that holds all date information! | ✅ |
| `dateInfoPattern` | MUST be set if regExDateInfoInFileName is set, for converting the date string into valid date information. Supported pattern elements: y, M, d, H, m, s, z, Z | ✅ |
| `headerLine` | Identifies the header line. MUST be set if the header line is repeated in the data file. | ✅ |
| `sampleStartRegEx` | Identifies the beginning of a new sample in the data file. Requires the presence of the following attributes: sampleDateOffset, sampleDateExtractionRegEx, sampleDatePattern, sampleDataOffset. A "sample" is a single sampling run having additional metadata, like date information, which is not contained in the data lines. | ✅ |
| `sampleDateOffset` | Defines the offset in lines between the first line of a sample and the line containing the date information. | ✅ |
| `sampleDateExtractionRegEx` | Regular expression to extract the date information from the line containing the date information of the current sample. The expression MUST result in ONE group. This group will be parsed to a java.util.Date using the sampleDatePattern attribute. | ✅ |
| `sampleDatePattern` | Defines the pattern used to parse the date information of the current sample. | ✅ |
| `sampleDataOffset` | Defines the offset in lines from the beginning of the sample to the first line with data. | ✅ |
| `sampleSizeOffset` | Defines the offset in lines from the beginning of the sample to the line containing the sample size in lines with data. | ✅ |
| `sampleSizeRegEx` | Defines a regular expression to extract the sample size. The regular expression MUST result in ONE group which contains an integer value. | ✅ |
| `sampleSizeDivisor` | Defines a divisor that is applied to the sample size. Can be used in cases where the sample size does not give the number of samples but the time span of the sample. The divisor is used to calculate the number of lines in a sample. | ✅ |
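The interplay of `regExDateInfoInFileName` and `dateInfoPattern` can be sketched as follows. The file name layout and the path are hypothetical, and the snippet is an illustration under these assumptions rather than a tested configuration. For a file named `station-1_20140527.csv`, the single group of the regular expression captures `20140527`, which the pattern `yyyyMMdd` then converts into a date.

```xml
<!-- Sketch only: file name layout and path are hypothetical -->
<!-- The one and only group (\d{8}) holds all date information -->
<DataFile referenceIsARegularExpression="false"
          regExDateInfoInFileName=".*_(\d{8})\.csv"
          dateInfoPattern="yyyyMMdd">
  <LocalFile>
    <Path>/data/sensors/station-1_20140527.csv</Path>
  </LocalFile>
</DataFile>
```

The pattern uses only the elements `y`, `M` and `d` from the supported set listed in the table.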

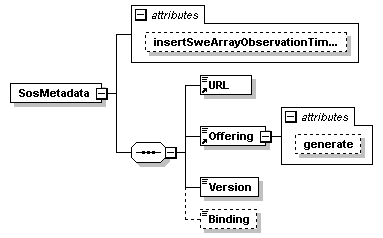

The SosMetadata section has one optional attribute, insertSweArrayObservationTimeoutBuffer, and three mandatory sections: URL, Offering with the attribute generate, and Version. The section Binding is optional. The insertSweArrayObservationTimeoutBuffer is required if the import strategy SweArrayObservationWithSplitExtension (more details later) is used. It defines an additional timeout that is used when sending the InsertObservation requests to the SOS. The URL defines the service endpoint that receives the requests (e.g. InsertSensor/RegisterSensor, InsertObservation). The Offering should contain the offering identifier to use, or its attribute generate should be set to true. Then, the sensor identifier is used as offering identifier. The Version section defines the OGC specification version that is understood by the SOS instance, e.g. 1.0.0 or 2.0.0. The optional Binding section is required when selecting SOS version 2.0.0 and defines which binding should be used, e.g. SOAP or POX.
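A `SosMetadata` section for a SOS 2.0.0 instance might look like the following sketch. The service URL is a placeholder, the timeout value is only an example, and the element order follows the description above but is otherwise an assumption.

```xml
<!-- Sketch only: the service URL is a placeholder -->
<!-- The buffer is given in milliseconds and is only needed for the
     import strategy SweArrayObservationWithSplitExtension -->
<SosMetadata insertSweArrayObservationTimeoutBuffer="25000">
  <URL>http://localhost:8080/sos/service</URL>
  <!-- generate="true": the sensor identifier is used as offering identifier -->
  <Offering generate="true"/>
  <Version>2.0.0</Version>
  <!-- Required for version 2.0.0, e.g. SOAP or POX -->
  <Binding>POX</Binding>
</SosMetadata>
```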

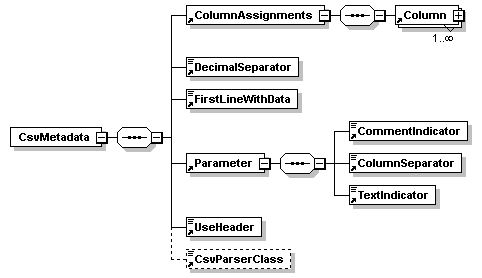

The CsvMetadata contains information for the CSV parsing. The mandatory sections DecimalSeparator, Parameter/CommentIndicator, Parameter/ColumnSeparator and Parameter/TextIndicator define how to parse the raw data into columns and rows. The optional CsvParserClass is required if a CsvParser implementation other than the default is used (see Extend CsvParser section below for more details). The FirstLineWithData defines how many lines should be skipped before the data content starts. The most complex and important section is the ColumnAssignments section, which contains 1..∞ Column sections.
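The parsing-related parts of a `CsvMetadata` section could be sketched like this. The separator and indicator characters are example values for a semicolon-separated file with one header line, and the order of the child elements is an assumption that should be checked against the XML schema.

```xml
<!-- Sketch only: characters and element order are assumptions -->
<CsvMetadata>
  <ColumnAssignments>
    <!-- one or more <Column> elements go here -->
  </ColumnAssignments>
  <DecimalSeparator>.</DecimalSeparator>
  <!-- Skip one line (the header) before the data content starts -->
  <FirstLineWithData>1</FirstLineWithData>
  <Parameter>
    <CommentIndicator>#</CommentIndicator>
    <ColumnSeparator>;</ColumnSeparator>
    <TextIndicator>"</TextIndicator>
  </Parameter>
</CsvMetadata>
```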

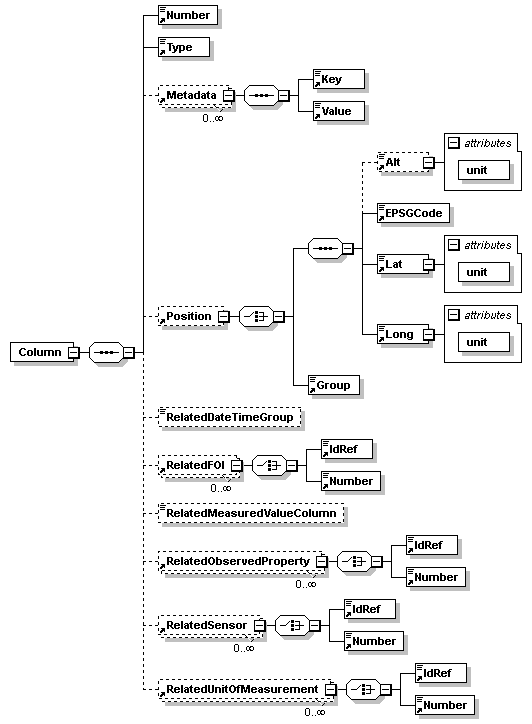

The Column contains two mandatory sections, Number and Type. The Number indicates which column in the data file this metadata relates to. Counting starts with 0. The Type indicates the column type. The following types are supported:

| Type | Content of the column |
|---|---|
| `DO_NOT_EXPORT` | Do not export this column. It should be ignored by the application. It's the default type. |
| `MEASURED_VALUE` | The result of the performed observation, in most cases some value. |
| `DATE_TIME` | The date or time or date and time of the performed observation. |
| `POSITION` | The position of the performed observation. |
| `FOI` | The feature of interest. |
| `OBSERVED_PROPERTY` | The observed property. |
| `UOM` | The unit of measure using UCUM codes. |
| `SENSOR` | The sensor id. |
| `OM_PARAMETER` | A column holding an om:parameter. |

Some of these types require several Metadata elements, consisting of a Key and a Value. Currently supported values of Key:

| Key | Value |
|---|---|
| `GROUP` | Indicates the membership of this column in a POSITION or DATE_TIME group. |
| `NAME` | Specifies the name of the OM_PARAMETER column it is used within. |
| `OTHER` | Not used. |
| `PARSE_PATTERN` | Used to store the parse pattern of a POSITION or DATE_TIME column. |
| `POSITION_ALTITUDE` | The altitude value for the positions for all observations in the related MEASURED_VALUE column. |
| `POSITION_EPSG_CODE` | The EPSG code for the positions for all observations in the related MEASURED_VALUE column. |
| `POSITION_LATITUDE` | The latitude value for the positions for all observations in the related MEASURED_VALUE column. |
| `POSITION_LONGITUDE` | The longitude value for the positions for all observations in the related MEASURED_VALUE column. |
| `TIME` | Not used. |
| `TIME_DAY` | The day value for the time stamp for all observations in the related MEASURED_VALUE column. |
| `TIME_HOUR` | The hour value for the time stamp for all observations in the related MEASURED_VALUE column. |
| `TIME_MINUTE` | The minute value for the time stamp for all observations in the related MEASURED_VALUE column. |
| `TIME_MONTH` | The month value for the time stamp for all observations in the related MEASURED_VALUE column. |
| `TIME_SECOND` | The second value for the time stamp for all observations in the related MEASURED_VALUE column. |
| `TIME_YEAR` | The year value for the time stamp for all observations in the related MEASURED_VALUE column. |
| `TIME_ZONE` | The time zone value for the time stamp for all observations in the related MEASURED_VALUE column. |
| `TYPE` | Supported values: MEASURED_VALUE column: NUMERIC, COUNT, BOOLEAN, TEXT. DATE_TIME column: COMBINATION, UNIX_TIME. OM_PARAMETER column: NUMERIC, COUNT, BOOLEAN, TEXT, CATEGORY. |

The RelatedDateTimeGroup is required by a MEASURED_VALUE column and identifies all columns that contain information about the time stamp for an observation. The RelatedMeasuredValueColumn identifies the MEASURED_VALUE column for columns of other types, e.g. DATE_TIME, SENSOR, FOI. The Related(FOI|ObservedProperty|Sensor|UnitOfMeasurement) sections contain either an IdRef or a Number. The Number denotes the Column that contains the value. The IdRef links to a Resource in the AdditionalMetadata section (ℹ️ The value of IdRef is unique within the document and is used only for document-internal links).
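As a sketch of how these pieces fit together, the following fragment assigns a combined date/time column and one numeric measured value column. The group name `1`, the parse pattern, and all `IdRef` values are hypothetical. The element names `RelatedFOI`, `RelatedObservedProperty` and `RelatedSensor` are derived from the naming scheme described above, and their order is an assumption.

```xml
<!-- Sketch only: group name, pattern and IdRef values are made up -->
<ColumnAssignments>
  <Column>
    <Number>0</Number>
    <Type>DATE_TIME</Type>
    <Metadata>
      <Key>TYPE</Key>
      <Value>COMBINATION</Value>
    </Metadata>
    <Metadata>
      <Key>GROUP</Key>
      <Value>1</Value>
    </Metadata>
    <Metadata>
      <Key>PARSE_PATTERN</Key>
      <Value>dd.MM.yyyy HH:mm</Value>
    </Metadata>
  </Column>
  <Column>
    <Number>1</Number>
    <Type>MEASURED_VALUE</Type>
    <Metadata>
      <Key>TYPE</Key>
      <Value>NUMERIC</Value>
    </Metadata>
    <!-- Time stamps come from all columns in date/time group 1 -->
    <RelatedDateTimeGroup>1</RelatedDateTimeGroup>
    <!-- Each IdRef points to a Resource in the AdditionalMetadata section -->
    <RelatedFOI><IdRef>foi1</IdRef></RelatedFOI>
    <RelatedObservedProperty><IdRef>obsprop1</IdRef></RelatedObservedProperty>
    <RelatedSensor><IdRef>sensor1</IdRef></RelatedSensor>
    <RelatedUnitOfMeasurement><IdRef>uom1</IdRef></RelatedUnitOfMeasurement>
  </Column>
</ColumnAssignments>
```

Using a `Number` instead of an `IdRef` in one of the `Related...` sections would take the value from another column of the same line.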

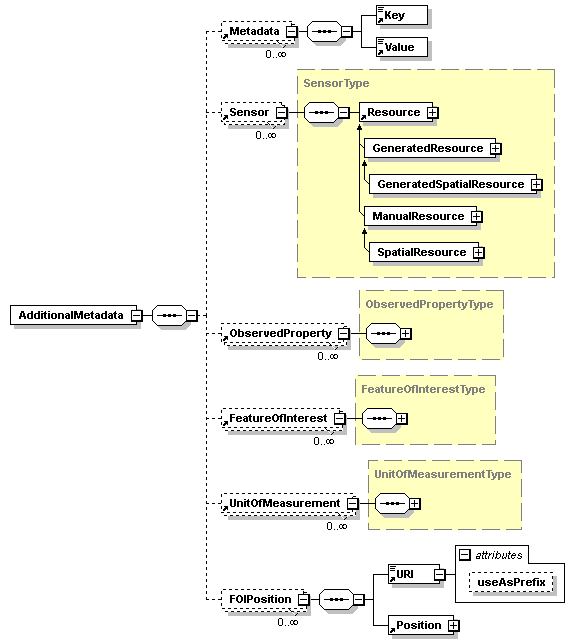

The AdditionalMetadata is the last of the four top level sections and it is optional. The intention is to provide additional metadata. These are generic Metadata elements, Resources like Sensor, ObservedProperty, FeatureOfInterest, UnitOfMeasurement and FOIPositions. The table below lists the supported values for the Metadata elements.

| Key | Value |
|---|---|
| `IMPORT_STRATEGY` | The import strategy to use: SingleObservation (default strategy) or SweArrayObservationWithSplitExtension. The second one only works if the SOS instance supports the SplitDataArrayIntoObservations request extension. It results in better performance and less data transferred. |
| `HUNK_SIZE` | Integer value defining the number of rows that should be combined in one SWEArrayObservation. |
| `OTHER` | Not used. May be used by other CsvParser implementations. |
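The two keys from the table can be combined as in the following sketch. The hunk size of 5000 is an arbitrary example value, not a recommendation.

```xml
<!-- Sketch only: the HUNK_SIZE value is an arbitrary example -->
<AdditionalMetadata>
  <Metadata>
    <Key>IMPORT_STRATEGY</Key>
    <Value>SweArrayObservationWithSplitExtension</Value>
  </Metadata>
  <Metadata>
    <!-- Number of rows combined in one SWEArrayObservation -->
    <Key>HUNK_SIZE</Key>
    <Value>5000</Value>
  </Metadata>
</AdditionalMetadata>
```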

A Resource is a sensor, observed property, feature of interest or unit of measurement, and it has a unique ID within each configuration. A resource can have a Position (e.g. a feature of interest). The information can be entered manually (ManualResource) or it can be generated from values in the same line of the data file (GeneratedResource).

For a GeneratedResource, each Number defines a Column whose content is used for the identifier and name generation. The order of the Numbers is important. The optional ConcatString is used to combine the values from the different columns. The URI defines a URI for all Resources, or it is used as prefix for a generated URI if useAsPrefix is set to true.

A ManualResource has a Name and a URI (when useAsPrefix is set, the URI := URI + Name).

Legend:

- ⬜ → denotes future versions and not implemented features

- ✅ → denotes achieved versions and implemented features

- → denotes open issues

- → denotes closed issues

ℹ️ Dear developer, please update our trello board accordingly!

ℹ️ Please add feature requests as new issue with label enhancement.

- Allow regular expressions to describe dynamic directory/file names (repeated feeding)

- Generic web client for multiple protocol support

- Pushing new data directly into a SOS database through a database connection (via according SQL statements)

- Feed to multiple SOS instances

- Support SOAP binding (might be an OX-F task)

- Support KVP binding (might be an OX-F task)

- Switch to `joda-time` or [java 8 DateTime API](https://docs.oracle.com/javase/8/docs/api/index.html?java/time/package-summary.html) ⇒ switch to java 8 because of [EOL](http://www.oracle.com/technetwork/java/eol-135779.html)

- Handle failing InsertObservation requests, e.g. store in common CSV format and re-import during next run.

- Switch wizard to Java FX.

Please take a look at the github issues list.

- Features

- Add support for profile observations

- Refactoring:

- Introduced Importer and Collector interface to split code in Feeder and support multithreading for parsing and feeding observation in the SOS

- for specifying the implementation of the Collector interface

- MultiFeederTask: This feature provides means for harvesting many observation data sources at once. The required inputs are a folder with configurations, the number of importing threads, and the period in minutes after which all import configurations are processed again. This includes the option of changing the content of the configuration directory between runs.

- Add support for HTTP remote files incl. fix for FTP client

- Add support for parent feature relations

- Bindings

- Wizard

- Feeder

- Add support for no data values for time series in

- Bindings

- Feeder

- Add support to ignore lines with invalid number of columns (=> log error but no import abort) in

- Wizard

- Bindings

- Feeder

- Add support for unix time with milliseconds

- Add support for om:parameter

- Bindings

- Wizard

- Feeder

- Changes:

- Moved documentation to github, hence content of README.md and https://wiki.52north.org/bin/view/SensorWeb/SosImporter merged

- Updated to latest 52N parent → javadoc and dependency plugin cause a lot of minor adjustments

- Fixed issues

- #nn: Fix problem with count values containing double

- #nn: Fix handling of timestamp columns without any group assignment

- #nn: Fix handling of data files with multiple date & time columns

- #nn: Fix bug in unix time parsing

- #nn: Fix typo in xml schema: expresssion -> expression

- #78: NullPointerException when parsing Date/Time

- #76: Server generated offering identifier

- #67: Fix/parsing unixtime bug

- #66: Parsing Unix time fails

- #65: Fix/read lines not stored

- #64: Fix/cannot build timezone error

- #63: Cannot build importer when host in timezone MST (-07:00)

- #62: Number of read lines not stored

- #61: Fix/iae sample based import

- #60: Fix/read foi position

- #59: Fix/npe during register sensor

- #58: Null Pointer Exception (NPE) when feeder tries to register a sensor

- #57: Feeder fails to read FoI position

- #36: Strategy "SweArrayObservationWithSplitExtension" doesn't work with TextObservations

- #35: Could not parse ISO8601 timestamps with timezone "Z"

- #86: Wizard module ClassCastException

- Code: github

- Features

- Rename Core module to Wizard

- Support for SOS 2.0 incl. Binding definition

- Start Screen offers button to see all dependency licenses

- Support for sensors with multiple outputs

- Introduced import strategies:

  - SingleObservation: Default strategy.

  - SweArrayObservationWithSplitExtension: Reads `hunksize` lines and imports each time series using a SWEArrayObservation in combination with the SplitExtension of the 52North SOS implementation. Hence, this strategy works only in combination with the 52North implementation. Other implementations might work, too, but not as expected. Hunk size and import strategy are both optional `<AdditionalMetadata><Metadata>` elements.

- Support for date information extraction from file name using two new OPTIONAL attributes in element `<DataFile>`:

  - "regExDateInfoInFileName" for extracting date information from file names.

  - "dateInfoPattern" for parsing the date information into a `java.util.Date`.

- Date information extraction from last modified date using two new OPTIONAL attributes:

- "useDateFromLastModifiedDate" for enabling this feature

- "lastModifiedDelta" for moving the date n days back (this attribute is OPTIONAL for this feature, too.)

- Ignore lines with regular expressions feature: 0..infinity `<IgnoreLineRegEx>` elements can be added to the `<DataFile>` element. Each element will be used as a regular expression and applied to each line of the data file before parsing.

- Handling of data files containing several sample runs. A sample run contains additional metadata like its size (number of performed measurements) and a date. The required attributes are:

- "sampleStartRegEx" - the start of a new sample (MUST match the whole line).

- "sampleDateOffset" - the offset of the line containing the date of the sample from the start line.

- "sampleDateExtractionRegEx" - the regular expression to extract the date information from the line containing the date information of the current sample. The expression MUST result in ONE group. This group will be parsed to a `java.util.Date` using the "sampleDatePattern" attribute.

- "sampleDatePattern" - the pattern used to parse the date information of the current sample.

- "sampleDataOffset" - the offset in lines from sample beginning till the first lines with data.

- "sampleSizeOffset" - the offset in lines from sample beginning till the line containing the sample size in lines with data.

- "sampleSizeRegEx" - the regular expression to extract the sample size. The regular expression MUST result in ONE group which contains an integer value.

- Setting of timeout buffer for the insertion of SweArrayObservations: With the attribute "insertSweArrayObservationTimeoutBuffer" of `<SosMetadata>` it is possible to define an additional timeout buffer for connect and socket timeout when using import strategy "SweArrayObservationWithSplitExtension". Scale is in milliseconds, e.g. `1000` → 1s more connect and socket timeout. The size of this value is related to the set-up of the SOS server, importer, and the `HUNK_SIZE` value. The current OX-F SimpleHttpClient implementation uses a default value of 5s, hence setting this to `25,000` results in 30s connection and socket timeout.

- More details can be found in the release notes.

- Fixed Bugs/Issues

- #06: Hardcoded time zone in test

- #10: NPE during feeding if binding value is not set

- #11: !BadLocationException in the case of having empty lines in csv file

- #20: Current GUI is broken when using sample based files with minor inconsistencies

- #24: Fix/ignore line and column: Solved two NPEs while ignoring lines or columns

- #25: Fix/timezone-bug-parse-timestamps: Solved bug while parsing time stamps

- #NN: Fix bug with timestamps of sample files

- #NN: Fix bug with incrementing lastline causing data loss

- #NN: Fix bug with data files without headerline

- #NN: NSAMParser: Fix bug with timestamp extraction

- #NN: NSAMParser: Fix bug with skipLimit

- #NN: NSAMParser: Fix bug with empty lines, line ending, time series encoding

- #NN: fix/combinationpanel: On step 3 it was not possible to enter parse patterns for position and date & time

- #NN: fix problem with textfield for CSV file when switching to German

- #NN: fix problem with multiple sensors in CSV file and register sensor

- 878

- "Too many columns issue"

- Fixed issues

- Release files:

- Release file: Core Module md5, Feeding Module md5

- Code: Github

- Features

- Use SOSWrapper from OXF

- Support more observation types

- FTP Remote File Support

- Fixed Bugs

- Release file: Core Module md5, Feeding Module md5

- Code: Github

- Features

- maven build

- multi language support

- xml configuration

- generation of FOIs and other data from columns

- feeding component

- Release file: 52n-sensorweb-sos-importer-0.1

- Code: Github

- Features

- Swing GUI

- CSV file support

- one time import

- Active

- Eike Jürrens

- Your name here!

- Former

- Eric Fiedler

- Raimund Schnürer

- Jan Schulte

You may first get in touch using the sensor web mailing list (Mailman page including archive access, Forum view for browser addicted). In addition, you might follow the overall instructions about getting involved with 52°North, which offer more ways to contribute than as a developer, e.g. as designer, translator, or writer. Your help is always welcome!

The project received funding from

- the European FP7 research project EO2HEAVEN (co-funded by the European Commission under the grant agreement n°244100).

- the European FP7 research project GeoViQua (co-funded by the European Commission under the grant agreement n°265178).

- the University of Leicester during 2014.

The om:parameter can be used for encoding additional attributes of an observation, e.g. quality information.

The supported ways of encoding the metadata about the om:parameter values are the following:

- When having more than one measured value column, the `om:parameter` requires a linking element within the measured value column. This element is named `<RelatedOmParameter>` and contains the number of the column of the `om:parameter`. Here is an example linking the measured value column #4 with its `om:parameter` column #8.

      <Column>
        <Number>4</Number>
        <Type>MEASURED_VALUE</Type>
        [...]
        <RelatedOmParameter>8</RelatedOmParameter>
      </Column>

- Each `OM_PARAMETER` column requires two `<Metadata>` elements:

  - The first one with `<Key>TYPE` defines the type.

  - The second one with `<Key>NAME` defines the name of the `om:parameter` used for encoding the `om:parameter` as `<NamedValue>`.

- A unit of measurement is required when choosing the `TYPE` `NUMERIC`. It can be encoded in two ways. A `<RelatedUnitOfMeasurement>` element, which is:

  - referencing another `<Column>` by its number, or

  - referencing a `<Resource>` in the `<AdditionalMetadata>` section.

Here are some additional examples for encoding om:parameter columns:

    <Column>
      <Number>1</Number>
      <Type>OM_PARAMETER</Type>
      <Metadata>
        <Key>TYPE</Key>
        <Value>COUNT</Value>
      </Metadata>
      <Metadata>
        <Key>NAME</Key>
        <Value>count-parameter</Value>
      </Metadata>
    </Column>
    <Column>
      <Number>2</Number>
      <Type>OM_PARAMETER</Type>
      <Metadata>
        <Key>TYPE</Key>
        <Value>NUMERIC</Value>
      </Metadata>
      <Metadata>
        <Key>NAME</Key>
        <Value>numeric-parameter</Value>
      </Metadata>
      <RelatedUnitOfMeasurement>
        <IdRef>uom1234</IdRef>
      </RelatedUnitOfMeasurement>
    </Column>
    <Column>
      <Number>3</Number>
      <Type>OM_PARAMETER</Type>
      <Metadata>
        <Key>TYPE</Key>
        <Value>BOOLEAN</Value>
      </Metadata>
      <Metadata>
        <Key>NAME</Key>
        <Value>boolean-parameter</Value>
      </Metadata>
    </Column>
    <Column>
      <Number>4</Number>
      <Type>OM_PARAMETER</Type>
      <Metadata>
        <Key>TYPE</Key>
        <Value>TEXT</Value>
      </Metadata>
      <Metadata>
        <Key>NAME</Key>
        <Value>text-parameter</Value>
      </Metadata>
    </Column>
    <Column>
      <Number>5</Number>
      <Type>OM_PARAMETER</Type>
      <Metadata>
        <Key>TYPE</Key>
        <Value>CATEGORY</Value>
      </Metadata>
      <Metadata>
        <Key>NAME</Key>
        <Value>category-parameter</Value>
      </Metadata>
    </Column>

- Have datafile and configuration file ready.

- Open command line tool.

- Change to directory with `52n-sos-importer-feeder-$VERSION_NUMBER$-bin.jar`.

- Run `java -jar 52n-sos-importer-feeder-$VERSION_NUMBER$-bin.jar` to see the latest supported and required parameters like this:

      usage: java -jar Feeder.jar -c file [-d datafile] [-p period]
      options and arguments:
      -c file : read the config file and start the import process
      -d datafile : OPTIONAL override of the datafile defined in config file
      -p period : OPTIONAL time period in minutes for repeated feeding
      OR
      -m directory period threads : directory path containing configuration XML files that are every period of minutes submitted as FeedingTasks into a ThreadPool of size threads

- Notes

  - Repeated Feeding

    - Element `SosImportConfiguration::DataFile::LocalFile::Path`

      - ...set to a directory → the repeated feeding implementation will always take the newest file (regarding `java.io.File.lastModified()`) and skip the current run if no new file is available.

      - ...set to a file → the repeated feeding implementation will always try to import all found observations from the datafile (ℹ️ the 52°North SOS implementation prohibits inserting duplicate observations! → no problem when finding some in the data file!)

- Open command line tool.

- Change to directory with `52n-sos-importer-wizard-$VERSION_NUMBER$-bin.jar`.

- Run `java -jar 52n-sos-importer-wizard-$VERSION_NUMBER$-bin.jar`.

- Follow the wizard to create a configuration file which can be used by the feeder module for repeated feeding, or import the data once using the wizard (the second option requires the latest `52n-sos-importer-feeder-$VERSION_NUMBER$-bin.jar` in the same folder as the `52n-sos-importer-wizard-$VERSION_NUMBER$-bin.jar`).

Follow this list of steps or this user guide using the demo data to get a first user experience.

- Download the example data to your computer.

- Start the application with

javar -jar 52n-sos-importer-wizard-bin.jar. - Select the file you have just downloaded on step 1 and click next.

- Increase the value of Ignore data until line to

1and click next. - Select Date & Time and than Combination and than provide the following date parsing pattern:

dd.MM.yyyy HH:mmand click next. - 3x Select Measured Value and than Numeric Value and click next.

- Set UTC offset to 0.

ℹ️ If you want to import data reguarly, it is common sense to use UTC for timestamps. - Feature of Interest: On the next view Add missing metadata select Set Identifier manually, click on the pen next to the Name label and enter any name and URI combination you can think of. Repeat this step 3 times (one for each time series). For time series #2 and #3 you can although select the previously entered value.

- Observed property: repeat as before but enter name and URI of the observed property for each timeseries, e.g. Propan, Water and Krypton.

- Unit of Measurement: repeat as before but enter name and URI of the unit of measurement for each timeseries, e.g. l,l,kg.

- Sensor: repeat as before but enter name and URI of the sensor for each timeseries, that performed the observations, e.g. propane-sensor, water-meter, crypto-graph.

- Define the position of the feature of interest manually, giving its coordinates.

- Next, specify the URL of the SOS instance, you want to import data into.

- Choose a folder to store the import configuration (for later re-use with the feeder module, for example).

- Specify the OGC specification version the SOS instance supports (we recommend `2.0.0`).

- When using the 52N SOS, you can select the import strategy SweArrayObservation, which considerably improves the performance of the communication between feeder and SOS.

- In the last step, you can view the log file, view the configuration file, or start the import procedure.

That's it. You should now be able to import data from CSV files, or similar, into a running SOS instance.
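The date parsing pattern and UTC offset chosen in the steps above can be illustrated with a short Java sketch. It assumes that the wizard's pattern letters behave like those of Java's `java.time.format.DateTimeFormatter`, for which `dd.MM.yyyy HH:mm` is a valid pattern. The class `TimestampSketch`, its `parse` method, and the sample value are hypothetical and not part of the SOS Importer.

```java
import java.time.LocalDateTime;
import java.time.OffsetDateTime;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// Hypothetical helper for illustration; not part of the SOS Importer code base.
class TimestampSketch {

    // Same pattern as entered in the wizard's Date & Time step.
    static final DateTimeFormatter PATTERN = DateTimeFormatter.ofPattern("dd.MM.yyyy HH:mm");

    // Parses one CSV cell and attaches the given UTC offset (in whole hours).
    static OffsetDateTime parse(String cell, int utcOffsetHours) {
        LocalDateTime local = LocalDateTime.parse(cell, PATTERN);
        return local.atOffset(ZoneOffset.ofHours(utcOffsetHours));
    }

    public static void main(String[] args) {
        // Made-up sample value; an offset of 0 means the CSV already contains UTC times.
        System.out.println(parse("24.12.2015 18:30", 0));
    }
}
```

A cell that does not match the pattern makes `LocalDateTime.parse` throw a `DateTimeParseException`, which is one reason to check the pattern against the file preview before starting an import.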

You can just download example files to see how the application works:

- example-data.csv: Example CSV file with 3 time series

ℹ️ GitHub issues are used to organize the work.

- Have jdk (>=1.8), maven (>=3.1.1), and git installed already.

- Optional: Due to some updates to the OX-Framework made during the SOS-Importer development, you might need to build the OX-F from the branch develop. Please check the value of `<oxf.version>` in the `pom.xml`. If it ends with `-SNAPSHOT`, continue here; otherwise continue with step #3:

  - `~$ git clone https://github.com/52North/OX-Framework.git`
  - `~$ cd OX-Framework`
  - `~/OX-Framework$ mvn install`

  or use this fork (please check for open pull requests!):

  - `~/OX-Framework$ git remote add eike https://github.com/EHJ-52n/OX-Framework.git`
  - `~/OX-Framework$ git fetch eike`
  - `~/OX-Framework$ git checkout -b eike-develop eike/develop`
  - `~/OX-Framework$ mvn clean install`

- Check out the latest version of SOS-Importer in a separate directory with `~$ git clone https://github.com/52North/sos-importer.git`.

- Switch to the required branch (`master` for the latest stable version; `develop` for the latest development version) via `~/sos-importer$ git checkout develop`.

- Build the SOS importer modules with `~/sos-importer$ mvn install`.

- Find the jar files here:

  - wizard: `~/sos-importer/wizard/target/`
  - feeder: `~/sos-importer/feeder/target/`

| URLs | Content |
|---|---|
| git: https://github.com/52North/sos-importer/tree/develop | latest development version |
| git: https://github.com/52North/sos-importer/tree/master | latest stable version |
| git: https://github.com/52North/sos-importer/releases/tag/52n-sos-importer-0.4.0 | release 0.4.0 |
| git: https://github.com/52North/sos-importer/releases/tag/52n-sos-importer-0.3.0 | release 0.3.0 |
| git: https://github.com/52North/sos-importer/releases/tag/52n-sos-importer-0.2.0 | release 0.2.0 |
| git: https://github.com/52North/sos-importer/releases/tag/52n-sos-importer-0.1.0 | release 0.1.0 |

- JAVA 1.8+

- List of Dependencies (generated following our best practice documentation): THIRD-PARTY.txt

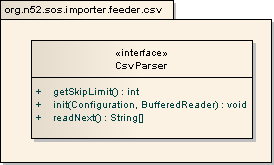

For providing your own CsvParser implementation

Since version 0.4.0, you can implement your own CsvParser type if the generic CSV parser implementation is not sufficient for your use case. Currently, one additional parser is implemented: the NSAMParser handles CSV files that grow from left to right rather than from top to bottom.

To get your own parser implementation working, you need to implement the CsvParser interface (see the next Figure for more details).

In addition, you need to add `<CsvParserClass>` to `<CsvMetadata>` in your configuration. This element names the class that MUST be used for parsing the data file. The class MUST implement the interface `org.n52.sos.importer.feeder.CsvParser`. The class name MUST contain the fully qualified package name, and a zero-argument constructor MUST be provided.

The CsvParser.init(..) is called after the constructor and should result in a ready-to-use parser instance. CsvParser.readNext() returns the next "line" of values that should be processed as String[]. An IOException could be thrown if something unexpected happens during the read operation. The CsvParser.getSkipLimit() should return 0, if number of lines == number of observations, or the difference between line number and line index.
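As a rough illustration of the contract described above, the following Java sketch implements a parser for semicolon-separated files. The exact signatures of `org.n52.sos.importer.feeder.CsvParser` are not given here (the parameters of `init(..)` are elided), so the sketch declares its own stand-in interface, `SimpleCsvParser`, with an assumed `init(BufferedReader)` method and an assumed convention that `readNext()` returns `null` at the end of the data. A real implementation would implement the importer's interface instead. All names in the example are hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;

// Stand-in for org.n52.sos.importer.feeder.CsvParser; the real signatures may differ.
interface SimpleCsvParser {
    void init(BufferedReader reader) throws IOException; // assumed parameter list
    String[] readNext() throws IOException;
    int getSkipLimit();
}

// Hypothetical parser for semicolon-separated lines.
class SemicolonParser implements SimpleCsvParser {

    private BufferedReader reader;

    // Zero-argument constructor, as required for a class named in <CsvParserClass>.
    public SemicolonParser() { }

    @Override
    public void init(BufferedReader reader) {
        // After this call the parser is ready to use.
        this.reader = reader;
    }

    @Override
    public String[] readNext() throws IOException {
        String line = reader.readLine();
        // In this sketch, null signals the end of the data (an assumption).
        // The limit -1 keeps trailing empty cells instead of dropping them.
        return line == null ? null : line.split(";", -1);
    }

    @Override
    public int getSkipLimit() {
        // No offset between line number and line index in this sketch.
        return 0;
    }

    public static void main(String[] args) throws IOException {
        SemicolonParser parser = new SemicolonParser();
        // Made-up sample line: one timestamp followed by three measured values.
        parser.init(new BufferedReader(new StringReader("01.01.2015 00:00;1.5;2.5;3.5\n")));
        for (String[] row = parser.readNext(); row != null; row = parser.readNext()) {
            System.out.println(String.join(" | ", row));
        }
    }
}
```

Keeping all state in fields that are set in `init` rather than in the constructor matches the requirement that the class can be created through a zero-argument constructor.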

If you have any problems, please check the issues section of the SOS Importer first.

This project follows the Gitflow branching model. "master" reflects the latest stable release. Ongoing development is done in branch develop and dedicated feature branches (feature-*).

The 52°North Sos-Importer is published under the GNU General Public License v2.0. The licenses of the dependencies are documented in another section.