Hi,

First of all, thank you for your work! It's really amazing.

But I'm having an issue with my attempt at the TReNDS assignment on Kaggle. I get the error below:

It seems that SciPy is not being recognized, even though I added `import scipy as sp` to my notebook and even to Auto_ViML.py.

Can you help? Thanks again!

Best regards.

############## D A T A S E T A N A L Y S I S #######################

Training Set Shape = (5434, 1411)

Training Set Memory Usage = 58.54 MB

Test Set Shape = (5877, 1405)

Test Set Memory Usage = 63.04 MB

Multi_Label Target: ['age', 'domain1_var1', 'domain1_var2', 'domain2_var1', 'domain2_var2']

############## C L A S S I F Y I N G V A R I A B L E S ####################

Classifying variables in data set...

Number of Numeric Columns = 1404

Number of Integer-Categorical Columns = 0

Number of String-Categorical Columns = 0

Number of Factor-Categorical Columns = 0

Number of String-Boolean Columns = 0

Number of Numeric-Boolean Columns = 0

Number of Discrete String Columns = 0

Number of NLP String Columns = 0

Number of Date Time Columns = 0

Number of ID Columns = 1

Number of Columns to Delete = 1

1406 Predictors classified...

This does not include the Target column(s)

2 variables removed since they were ID or low-information variables

Number of GPUs = 2

GPU available

############# D A T A P R E P A R A T I O N #############

No Missing Values in train data set

Test data has no missing values. Continuing...

Completed Scaling of Train and Test Data using MinMaxScaler(copy=True, feature_range=(0, 1)) ...

Regression problem: hyperparameters are being optimized for mae

############## F E A T U R E S E L E C T I O N ####################

Removing highly correlated features among 1404 variables using pearson correlation...

Number of variables removed due to high correlation = 407

List of variables removed: ['SCN(99)_vs_SCN(69)', 'SCN(45)_vs_SCN(69)', 'SMN(11)_vs_SCN(69)', 'SMN(27)_vs_SCN(69)', 'SMN(66)_vs_SCN(69)', 'VSN(20)_vs_SCN(69)', 'VSN(8)_vs_SCN(69)', 'CON(88)_vs_SCN(69)', 'CON(67)_vs_SCN(69)', 'CON(38)_vs_SCN(69)', 'CON(83)_vs_SCN(69)', 'CBN(4)_vs_SCN(69)', 'CBN(7)_vs_SCN(69)', 'SCN(45)_vs_SCN(53)', 'ADN(21)_vs_SCN(53)', 'ADN(56)_vs_SCN(53)', 'SMN(27)_vs_SCN(53)', 'SMN(54)_vs_SCN(53)', 'SMN(66)_vs_SCN(53)', 'VSN(20)_vs_SCN(53)', 'VSN(8)_vs_SCN(53)', 'CON(88)_vs_SCN(53)', 'CON(67)_vs_SCN(53)', 'CON(38)_vs_SCN(53)', 'CBN(4)_vs_SCN(53)', 'CBN(7)_vs_SCN(53)', 'SCN(45)_vs_SCN(98)', 'SMN(27)_vs_SCN(98)', 'VSN(8)_vs_SCN(98)', 'ADN(21)_vs_SCN(99)', 'ADN(56)_vs_SCN(99)', 'SMN(3)_vs_SCN(99)', 'SMN(2)_vs_SCN(99)', 'SMN(11)_vs_SCN(99)', 'SMN(27)_vs_SCN(99)', 'SMN(72)_vs_SCN(99)', 'VSN(16)_vs_SCN(99)', 'VSN(5)_vs_SCN(99)', 'VSN(62)_vs_SCN(99)', 'VSN(15)_vs_SCN(99)', 'VSN(12)_vs_SCN(99)', 'VSN(93)_vs_SCN(99)', 'VSN(20)_vs_SCN(99)', 'VSN(8)_vs_SCN(99)', 'VSN(77)_vs_SCN(99)', 'CON(68)_vs_SCN(99)', 'CON(33)_vs_SCN(99)', 'CON(43)_vs_SCN(99)', 'CON(70)_vs_SCN(99)', 'CON(61)_vs_SCN(99)', 'CON(55)_vs_SCN(99)', 'CON(63)_vs_SCN(99)', 'CON(79)_vs_SCN(99)', 'CON(84)_vs_SCN(99)', 'CON(96)_vs_SCN(99)', 'CON(88)_vs_SCN(99)', 'CON(48)_vs_SCN(99)', 'CON(81)_vs_SCN(99)', 'CON(37)_vs_SCN(99)', 'CON(67)_vs_SCN(99)', 'CON(38)_vs_SCN(99)', 'CON(83)_vs_SCN(99)', 'DMN(32)_vs_SCN(99)', 'DMN(23)_vs_SCN(99)', 'DMN(71)_vs_SCN(99)', 'CBN(13)_vs_SCN(99)', 'CBN(18)_vs_SCN(99)', 'CBN(4)_vs_SCN(99)', 'CBN(7)_vs_SCN(99)', 'ADN(21)_vs_SCN(45)', 'ADN(56)_vs_SCN(45)', 'SMN(3)_vs_SCN(45)', 'SMN(9)_vs_SCN(45)', 'SMN(2)_vs_SCN(45)', 'SMN(11)_vs_SCN(45)', 'SMN(27)_vs_SCN(45)', 'SMN(54)_vs_SCN(45)', 'SMN(66)_vs_SCN(45)', 'VSN(16)_vs_SCN(45)', 'VSN(93)_vs_SCN(45)', 'VSN(20)_vs_SCN(45)', 'VSN(8)_vs_SCN(45)', 'CON(63)_vs_SCN(45)', 'CON(84)_vs_SCN(45)', 'CON(88)_vs_SCN(45)', 'CON(67)_vs_SCN(45)', 'CON(38)_vs_SCN(45)', 'CON(83)_vs_SCN(45)', 'DMN(40)_vs_SCN(45)', 'DMN(71)_vs_SCN(45)', 
'CBN(13)_vs_SCN(45)', 'CBN(18)_vs_SCN(45)', 'CBN(4)_vs_SCN(45)', 'CBN(7)_vs_SCN(45)', 'SMN(11)_vs_ADN(21)', 'SMN(80)_vs_ADN(21)', 'VSN(62)_vs_ADN(21)', 'VSN(93)_vs_ADN(21)', 'VSN(20)_vs_ADN(21)', 'VSN(8)_vs_ADN(21)', 'VSN(77)_vs_ADN(21)', 'CON(33)_vs_ADN(21)', 'CON(84)_vs_ADN(21)', 'CON(37)_vs_ADN(21)', 'DMN(51)_vs_ADN(21)', 'DMN(94)_vs_ADN(21)', 'CBN(13)_vs_ADN(21)', 'CBN(18)_vs_ADN(21)', 'CBN(4)_vs_ADN(21)', 'CBN(7)_vs_ADN(21)', 'SMN(11)_vs_ADN(56)', 'SMN(54)_vs_ADN(56)', 'SMN(80)_vs_ADN(56)', 'VSN(16)_vs_ADN(56)', 'VSN(5)_vs_ADN(56)', 'VSN(62)_vs_ADN(56)', 'VSN(15)_vs_ADN(56)', 'VSN(12)_vs_ADN(56)', 'VSN(93)_vs_ADN(56)', 'VSN(20)_vs_ADN(56)', 'VSN(8)_vs_ADN(56)', 'VSN(77)_vs_ADN(56)', 'CON(33)_vs_ADN(56)', 'CON(70)_vs_ADN(56)', 'CON(61)_vs_ADN(56)', 'CON(55)_vs_ADN(56)', 'CON(63)_vs_ADN(56)', 'CON(84)_vs_ADN(56)', 'CON(48)_vs_ADN(56)', 'CON(37)_vs_ADN(56)', 'CON(67)_vs_ADN(56)', 'CON(38)_vs_ADN(56)', 'CON(83)_vs_ADN(56)', 'DMN(32)_vs_ADN(56)', 'DMN(40)_vs_ADN(56)', 'DMN(23)_vs_ADN(56)', 'DMN(71)_vs_ADN(56)', 'DMN(51)_vs_ADN(56)', 'CBN(13)_vs_ADN(56)', 'CBN(4)_vs_ADN(56)', 'CBN(7)_vs_ADN(56)', 'SMN(2)_vs_SMN(3)', 'SMN(11)_vs_SMN(3)', 'SMN(80)_vs_SMN(3)', 'VSN(16)_vs_SMN(3)', 'VSN(5)_vs_SMN(3)', 'VSN(15)_vs_SMN(3)', 'VSN(12)_vs_SMN(3)', 'VSN(93)_vs_SMN(3)', 'VSN(20)_vs_SMN(3)', 'VSN(8)_vs_SMN(3)', 'VSN(77)_vs_SMN(3)', 'CON(33)_vs_SMN(3)', 'CON(63)_vs_SMN(3)', 'CON(84)_vs_SMN(3)', 'CON(88)_vs_SMN(3)', 'CON(37)_vs_SMN(3)', 'DMN(51)_vs_SMN(3)', 'DMN(94)_vs_SMN(3)', 'CBN(13)_vs_SMN(3)', 'CBN(18)_vs_SMN(3)', 'CBN(4)_vs_SMN(3)', 'CBN(7)_vs_SMN(3)', 'SMN(2)_vs_SMN(9)', 'SMN(80)_vs_SMN(9)', 'CON(33)_vs_SMN(9)', 'CON(61)_vs_SMN(9)', 'CON(63)_vs_SMN(9)', 'CON(84)_vs_SMN(9)', 'CON(67)_vs_SMN(9)', 'DMN(23)_vs_SMN(9)', 'DMN(51)_vs_SMN(9)', 'DMN(94)_vs_SMN(9)', 'SMN(11)_vs_SMN(2)', 'SMN(80)_vs_SMN(2)', 'VSN(62)_vs_SMN(2)', 'CON(33)_vs_SMN(2)', 'CON(61)_vs_SMN(2)', 'CON(63)_vs_SMN(2)', 'CON(84)_vs_SMN(2)', 'CON(88)_vs_SMN(2)', 'CON(37)_vs_SMN(2)', 'CON(67)_vs_SMN(2)', 
'CON(38)_vs_SMN(2)', 'DMN(23)_vs_SMN(2)', 'DMN(71)_vs_SMN(2)', 'CBN(4)_vs_SMN(2)', 'CBN(7)_vs_SMN(2)', 'SMN(27)_vs_SMN(11)', 'SMN(54)_vs_SMN(11)', 'SMN(66)_vs_SMN(11)', 'SMN(80)_vs_SMN(11)', 'VSN(16)_vs_SMN(11)', 'VSN(5)_vs_SMN(11)', 'VSN(62)_vs_SMN(11)', 'VSN(12)_vs_SMN(11)', 'VSN(93)_vs_SMN(11)', 'VSN(20)_vs_SMN(11)', 'VSN(8)_vs_SMN(11)', 'VSN(77)_vs_SMN(11)', 'CON(68)_vs_SMN(11)', 'CON(43)_vs_SMN(11)', 'CON(70)_vs_SMN(11)', 'CON(61)_vs_SMN(11)', 'CON(55)_vs_SMN(11)', 'CON(63)_vs_SMN(11)', 'CON(79)_vs_SMN(11)', 'CON(84)_vs_SMN(11)', 'CON(96)_vs_SMN(11)', 'CON(88)_vs_SMN(11)', 'CON(48)_vs_SMN(11)', 'CON(37)_vs_SMN(11)', 'CON(38)_vs_SMN(11)', 'CON(83)_vs_SMN(11)', 'DMN(32)_vs_SMN(11)', 'DMN(40)_vs_SMN(11)', 'DMN(23)_vs_SMN(11)', 'DMN(17)_vs_SMN(11)', 'DMN(51)_vs_SMN(11)', 'DMN(94)_vs_SMN(11)', 'CBN(13)_vs_SMN(11)', 'CBN(18)_vs_SMN(11)', 'CBN(4)_vs_SMN(11)', 'CBN(7)_vs_SMN(11)', 'VSN(16)_vs_SMN(27)', 'VSN(5)_vs_SMN(27)', 'VSN(62)_vs_SMN(27)', 'VSN(15)_vs_SMN(27)', 'VSN(12)_vs_SMN(27)', 'VSN(93)_vs_SMN(27)', 'VSN(20)_vs_SMN(27)', 'VSN(8)_vs_SMN(27)', 'VSN(77)_vs_SMN(27)', 'CON(33)_vs_SMN(27)', 'CON(88)_vs_SMN(27)', 'CON(67)_vs_SMN(27)', 'CON(38)_vs_SMN(27)', 'DMN(71)_vs_SMN(27)', 'CBN(13)_vs_SMN(27)', 'CBN(18)_vs_SMN(27)', 'CBN(4)_vs_SMN(27)', 'CBN(7)_vs_SMN(27)', 'VSN(12)_vs_SMN(54)', 'VSN(20)_vs_SMN(54)', 'VSN(8)_vs_SMN(54)', 'CON(81)_vs_SMN(54)', 'DMN(94)_vs_SMN(54)', 'VSN(62)_vs_SMN(66)', 'VSN(20)_vs_SMN(66)', 'VSN(8)_vs_SMN(66)', 'CON(63)_vs_SMN(66)', 'DMN(94)_vs_SMN(66)', 'CBN(13)_vs_SMN(66)', 'CBN(7)_vs_SMN(66)', 'VSN(20)_vs_SMN(80)', 'VSN(8)_vs_SMN(80)', 'DMN(94)_vs_SMN(80)', 'CBN(7)_vs_SMN(80)', 'VSN(20)_vs_SMN(72)', 'VSN(8)_vs_SMN(72)', 'DMN(94)_vs_SMN(72)', 'CBN(7)_vs_SMN(72)', 'VSN(8)_vs_VSN(16)', 'CON(63)_vs_VSN(16)', 'CON(48)_vs_VSN(16)', 'CON(83)_vs_VSN(16)', 'DMN(94)_vs_VSN(16)', 'VSN(15)_vs_VSN(5)', 'VSN(8)_vs_VSN(5)', 'VSN(20)_vs_VSN(62)', 'VSN(8)_vs_VSN(62)', 'CON(68)_vs_VSN(62)', 'CON(79)_vs_VSN(62)', 'CON(96)_vs_VSN(62)', 'CON(81)_vs_VSN(62)', 
'CON(37)_vs_VSN(62)', 'DMN(40)_vs_VSN(62)', 'DMN(23)_vs_VSN(62)', 'DMN(17)_vs_VSN(62)', 'DMN(94)_vs_VSN(62)', 'CBN(18)_vs_VSN(62)', 'CBN(7)_vs_VSN(62)', 'VSN(12)_vs_VSN(15)', 'VSN(20)_vs_VSN(15)', 'DMN(94)_vs_VSN(15)', 'VSN(8)_vs_VSN(12)', 'CON(43)_vs_VSN(12)', 'DMN(94)_vs_VSN(12)', 'CBN(13)_vs_VSN(12)', 'VSN(20)_vs_VSN(93)', 'VSN(8)_vs_VSN(93)', 'CON(83)_vs_VSN(93)', 'DMN(94)_vs_VSN(93)', 'CBN(7)_vs_VSN(93)', 'VSN(8)_vs_VSN(20)', 'VSN(77)_vs_VSN(20)', 'CON(68)_vs_VSN(20)', 'CON(33)_vs_VSN(20)', 'CON(43)_vs_VSN(20)', 'CON(70)_vs_VSN(20)', 'CON(61)_vs_VSN(20)', 'CON(55)_vs_VSN(20)', 'CON(63)_vs_VSN(20)', 'CON(79)_vs_VSN(20)', 'CON(84)_vs_VSN(20)', 'CON(96)_vs_VSN(20)', 'CON(88)_vs_VSN(20)', 'CON(48)_vs_VSN(20)', 'CON(81)_vs_VSN(20)', 'CON(37)_vs_VSN(20)', 'CON(67)_vs_VSN(20)', 'CON(38)_vs_VSN(20)', 'CON(83)_vs_VSN(20)', 'DMN(32)_vs_VSN(20)', 'DMN(40)_vs_VSN(20)', 'DMN(23)_vs_VSN(20)', 'DMN(71)_vs_VSN(20)', 'DMN(17)_vs_VSN(20)', 'DMN(51)_vs_VSN(20)', 'DMN(94)_vs_VSN(20)', 'CBN(13)_vs_VSN(20)', 'CBN(4)_vs_VSN(20)', 'CBN(7)_vs_VSN(20)', 'VSN(77)_vs_VSN(8)', 'CON(68)_vs_VSN(8)', 'CON(33)_vs_VSN(8)', 'CON(43)_vs_VSN(8)', 'CON(70)_vs_VSN(8)', 'CON(61)_vs_VSN(8)', 'CON(55)_vs_VSN(8)', 'CON(63)_vs_VSN(8)', 'CON(79)_vs_VSN(8)', 'CON(84)_vs_VSN(8)', 'CON(96)_vs_VSN(8)', 'CON(88)_vs_VSN(8)', 'CON(48)_vs_VSN(8)', 'CON(81)_vs_VSN(8)', 'CON(37)_vs_VSN(8)', 'CON(67)_vs_VSN(8)', 'CON(38)_vs_VSN(8)', 'CON(83)_vs_VSN(8)', 'DMN(32)_vs_VSN(8)', 'DMN(40)_vs_VSN(8)', 'DMN(23)_vs_VSN(8)', 'DMN(71)_vs_VSN(8)', 'DMN(17)_vs_VSN(8)', 'DMN(51)_vs_VSN(8)', 'DMN(94)_vs_VSN(8)', 'CBN(13)_vs_VSN(8)', 'CBN(18)_vs_VSN(8)', 'CBN(4)_vs_VSN(8)', 'CBN(7)_vs_VSN(8)', 'DMN(94)_vs_VSN(77)', 'CBN(7)_vs_VSN(77)', 'DMN(94)_vs_CON(33)', 'CBN(7)_vs_CON(33)', 'CON(61)_vs_CON(43)', 'CON(63)_vs_CON(43)', 'CON(38)_vs_CON(43)', 'CBN(13)_vs_CON(43)', 'CBN(7)_vs_CON(70)', 'CON(96)_vs_CON(61)', 'DMN(94)_vs_CON(61)', 'DMN(94)_vs_CON(55)', 'CBN(7)_vs_CON(55)', 'CON(96)_vs_CON(63)', 'DMN(40)_vs_CON(63)', 
'DMN(17)_vs_CON(63)', 'DMN(94)_vs_CON(63)', 'CBN(7)_vs_CON(63)', 'CON(38)_vs_CON(79)', 'DMN(94)_vs_CON(84)', 'CBN(7)_vs_CON(84)', 'CON(38)_vs_CON(96)', 'CBN(13)_vs_CON(96)', 'DMN(17)_vs_CON(88)', 'DMN(94)_vs_CON(88)', 'CBN(7)_vs_CON(88)', 'CON(38)_vs_CON(81)', 'DMN(94)_vs_CON(37)', 'CBN(7)_vs_CON(37)', 'DMN(94)_vs_CON(67)', 'DMN(40)_vs_CON(38)', 'DMN(17)_vs_CON(38)', 'DMN(94)_vs_CON(38)', 'CBN(7)_vs_CON(38)', 'DMN(71)_vs_CON(83)', 'CBN(13)_vs_CON(83)', 'CBN(18)_vs_CON(83)', 'CBN(7)_vs_CON(83)', 'DMN(17)_vs_DMN(32)', 'DMN(94)_vs_DMN(32)', 'DMN(17)_vs_DMN(40)', 'CBN(7)_vs_DMN(23)', 'CBN(7)_vs_DMN(71)', 'DMN(51)_vs_DMN(17)', 'DMN(94)_vs_DMN(17)', 'CBN(13)_vs_DMN(17)', 'DMN(94)_vs_DMN(51)', 'CBN(7)_vs_DMN(51)', 'CBN(13)_vs_DMN(94)', 'CBN(18)_vs_DMN(94)', 'CBN(7)_vs_DMN(94)', 'CBN(4)_vs_CBN(13)', 'CBN(7)_vs_CBN(13)', 'CBN(4)_vs_CBN(18)', 'CBN(7)_vs_CBN(4)']

############# PROCESSING T A R G E T = age ##########################

No categorical feature reduction done. All 0 Categorical vars selected

############## F E A T U R E S E L E C T I O N ####################

Removing highly correlated features among 997 variables using pearson correlation...

Number of variables removed due to high correlation = 176

List of variables removed: ['ADN(21)_vs_SCN(69)', 'ADN(56)_vs_SCN(69)', 'SMN(3)_vs_SCN(69)', 'SMN(9)_vs_SCN(69)', 'SMN(2)_vs_SCN(69)', 'VSN(16)_vs_SCN(69)', 'VSN(5)_vs_SCN(69)', 'VSN(15)_vs_SCN(69)', 'VSN(12)_vs_SCN(69)', 'CBN(18)_vs_SCN(69)', 'SMN(2)_vs_SCN(53)', 'SMN(11)_vs_SCN(53)', 'VSN(16)_vs_SCN(53)', 'VSN(5)_vs_SCN(53)', 'VSN(15)_vs_SCN(53)', 'VSN(12)_vs_SCN(53)', 'CBN(13)_vs_SCN(53)', 'CBN(18)_vs_SCN(53)', 'ADN(21)_vs_SCN(98)', 'ADN(56)_vs_SCN(98)', 'SMN(3)_vs_SCN(98)', 'SMN(2)_vs_SCN(98)', 'SMN(11)_vs_SCN(98)', 'SMN(54)_vs_SCN(98)', 'SMN(54)_vs_SCN(99)', 'SMN(66)_vs_SCN(98)', 'SMN(66)_vs_SCN(99)', 'VSN(16)_vs_SCN(98)', 'VSN(15)_vs_SCN(98)', 'VSN(12)_vs_SCN(98)', 'VSN(20)_vs_SCN(98)', 'CBN(13)_vs_SCN(98)', 'CBN(18)_vs_SCN(98)', 'CBN(7)_vs_SCN(98)', 'SMN(9)_vs_SCN(99)', 'VSN(15)_vs_SCN(45)', 'VSN(12)_vs_SCN(45)', 'VSN(16)_vs_ADN(21)', 'VSN(5)_vs_ADN(21)', 'VSN(15)_vs_ADN(21)', 'VSN(12)_vs_ADN(21)', 'SMN(27)_vs_SMN(3)', 'CON(67)_vs_SMN(11)', 'DMN(71)_vs_SMN(3)', 'DMN(71)_vs_SMN(11)', 'VSN(62)_vs_SMN(9)', 'CON(38)_vs_SMN(9)', 'DMN(71)_vs_SMN(9)', 'CBN(4)_vs_SMN(9)', 'CBN(7)_vs_SMN(9)', 'VSN(93)_vs_SMN(2)', 'VSN(20)_vs_SMN(2)', 'VSN(8)_vs_SMN(2)', 'VSN(77)_vs_SMN(2)', 'CBN(13)_vs_SMN(2)', 'VSN(15)_vs_SMN(11)', 'VSN(16)_vs_SMN(54)', 'VSN(5)_vs_SMN(54)', 'VSN(15)_vs_SMN(54)', 'VSN(5)_vs_SMN(66)', 'VSN(15)_vs_SMN(66)', 'VSN(12)_vs_SMN(66)', 'VSN(16)_vs_SMN(80)', 'VSN(5)_vs_SMN(80)', 'VSN(15)_vs_SMN(80)', 'VSN(12)_vs_SMN(80)', 'VSN(16)_vs_SMN(72)', 'VSN(5)_vs_SMN(72)', 'VSN(15)_vs_SMN(72)', 'VSN(12)_vs_SMN(72)', 'VSN(5)_vs_VSN(16)', 'VSN(62)_vs_VSN(16)', 'VSN(15)_vs_VSN(16)', 'VSN(12)_vs_VSN(16)', 'VSN(93)_vs_VSN(16)', 'VSN(20)_vs_VSN(16)', 'VSN(77)_vs_VSN(16)', 'CON(33)_vs_VSN(16)', 'CON(43)_vs_VSN(16)', 'CON(61)_vs_VSN(16)', 'CON(55)_vs_VSN(16)', 'CON(79)_vs_VSN(16)', 'CON(84)_vs_VSN(16)', 'CON(88)_vs_VSN(16)', 'CON(81)_vs_VSN(16)', 'CON(37)_vs_VSN(16)', 'CON(67)_vs_VSN(16)', 'CON(38)_vs_VSN(16)', 'DMN(71)_vs_VSN(16)', 'DMN(17)_vs_VSN(16)', 'DMN(51)_vs_VSN(16)', 
'CBN(13)_vs_VSN(16)', 'CBN(4)_vs_VSN(16)', 'CBN(7)_vs_VSN(16)', 'VSN(62)_vs_VSN(5)', 'VSN(93)_vs_VSN(5)', 'VSN(77)_vs_VSN(5)', 'CON(68)_vs_VSN(5)', 'CON(33)_vs_VSN(5)', 'CON(43)_vs_VSN(5)', 'CON(61)_vs_VSN(5)', 'CON(55)_vs_VSN(5)', 'CON(79)_vs_VSN(5)', 'CON(84)_vs_VSN(5)', 'CON(88)_vs_VSN(5)', 'CON(81)_vs_VSN(5)', 'CON(37)_vs_VSN(5)', 'CON(67)_vs_VSN(5)', 'CON(38)_vs_VSN(5)', 'CON(83)_vs_VSN(5)', 'DMN(71)_vs_VSN(5)', 'DMN(17)_vs_VSN(5)', 'DMN(51)_vs_VSN(5)', 'DMN(94)_vs_VSN(5)', 'CBN(13)_vs_VSN(5)', 'CBN(4)_vs_VSN(5)', 'CBN(7)_vs_VSN(5)', 'VSN(15)_vs_VSN(62)', 'VSN(12)_vs_VSN(62)', 'VSN(93)_vs_VSN(15)', 'VSN(77)_vs_VSN(15)', 'CON(68)_vs_VSN(15)', 'CON(33)_vs_VSN(15)', 'CON(43)_vs_VSN(15)', 'CON(70)_vs_VSN(15)', 'CON(61)_vs_VSN(15)', 'CON(55)_vs_VSN(15)', 'CON(63)_vs_VSN(15)', 'CON(79)_vs_VSN(15)', 'CON(84)_vs_VSN(15)', 'CON(96)_vs_VSN(15)', 'CON(88)_vs_VSN(15)', 'CON(48)_vs_VSN(15)', 'CON(81)_vs_VSN(15)', 'CON(37)_vs_VSN(15)', 'CON(67)_vs_VSN(15)', 'CON(38)_vs_VSN(15)', 'CON(83)_vs_VSN(15)', 'DMN(32)_vs_VSN(15)', 'DMN(40)_vs_VSN(15)', 'DMN(23)_vs_VSN(15)', 'DMN(71)_vs_VSN(15)', 'DMN(17)_vs_VSN(15)', 'DMN(51)_vs_VSN(15)', 'CBN(13)_vs_VSN(15)', 'CBN(18)_vs_VSN(15)', 'CBN(4)_vs_VSN(15)', 'CBN(7)_vs_VSN(15)', 'VSN(93)_vs_VSN(12)', 'VSN(77)_vs_VSN(12)', 'CON(68)_vs_VSN(12)', 'CON(33)_vs_VSN(12)', 'CON(70)_vs_VSN(12)', 'CON(61)_vs_VSN(12)', 'CON(55)_vs_VSN(12)', 'CON(63)_vs_VSN(12)', 'CON(79)_vs_VSN(12)', 'CON(84)_vs_VSN(12)', 'CON(96)_vs_VSN(12)', 'CON(88)_vs_VSN(12)', 'CON(48)_vs_VSN(12)', 'CON(81)_vs_VSN(12)', 'CON(37)_vs_VSN(12)', 'CON(67)_vs_VSN(12)', 'CON(38)_vs_VSN(12)', 'CON(83)_vs_VSN(12)', 'DMN(32)_vs_VSN(12)', 'DMN(40)_vs_VSN(12)', 'DMN(23)_vs_VSN(12)', 'DMN(71)_vs_VSN(12)', 'DMN(17)_vs_VSN(12)', 'DMN(51)_vs_VSN(12)', 'CBN(18)_vs_VSN(12)', 'CBN(4)_vs_VSN(12)', 'CBN(7)_vs_VSN(12)', 'CBN(18)_vs_VSN(20)']

Adding 0 categorical variables to reduced numeric variables of 821

############## F E A T U R E S E L E C T I O N ####################

Current number of predictors = 821

Finding Important Features using Boosted Trees algorithm...

using 821 variables...

[11:18:07] WARNING: C:/Jenkins/workspace/xgboost-win64_release_0.90/src/objective/regression_obj.cu:152: reg:linear is now deprecated in favor of reg:squarederror.

using 657 variables...

[11:18:09] WARNING: C:/Jenkins/workspace/xgboost-win64_release_0.90/src/objective/regression_obj.cu:152: reg:linear is now deprecated in favor of reg:squarederror.

using 493 variables...

[11:18:10] WARNING: C:/Jenkins/workspace/xgboost-win64_release_0.90/src/objective/regression_obj.cu:152: reg:linear is now deprecated in favor of reg:squarederror.

using 329 variables...

[11:18:12] WARNING: C:/Jenkins/workspace/xgboost-win64_release_0.90/src/objective/regression_obj.cu:152: reg:linear is now deprecated in favor of reg:squarederror.

using 165 variables...

[11:18:12] WARNING: C:/Jenkins/workspace/xgboost-win64_release_0.90/src/objective/regression_obj.cu:152: reg:linear is now deprecated in favor of reg:squarederror.

using 1 variables...

[11:18:13] WARNING: C:/Jenkins/workspace/xgboost-win64_release_0.90/src/objective/regression_obj.cu:152: reg:linear is now deprecated in favor of reg:squarederror.

Found 73 important features

Starting Feature Engineering now...

No Entropy Binning specified or there are no numeric vars in data set to Bin

############### M O D E L B U I L D I N G ####################

Rows in Train data set = 4347

Features in Train data set = 73

Rows in held-out data set = 1087

UnboundLocalError Traceback (most recent call last)

in

----> 1 model_age_trends, features_trends, trainm_trends, testm_trends = Auto_ViML(df, ['age','domain1_var1','domain1_var2','domain2_var1','domain2_var2'], test_df, verbose=2, scoring_parameter='r2')

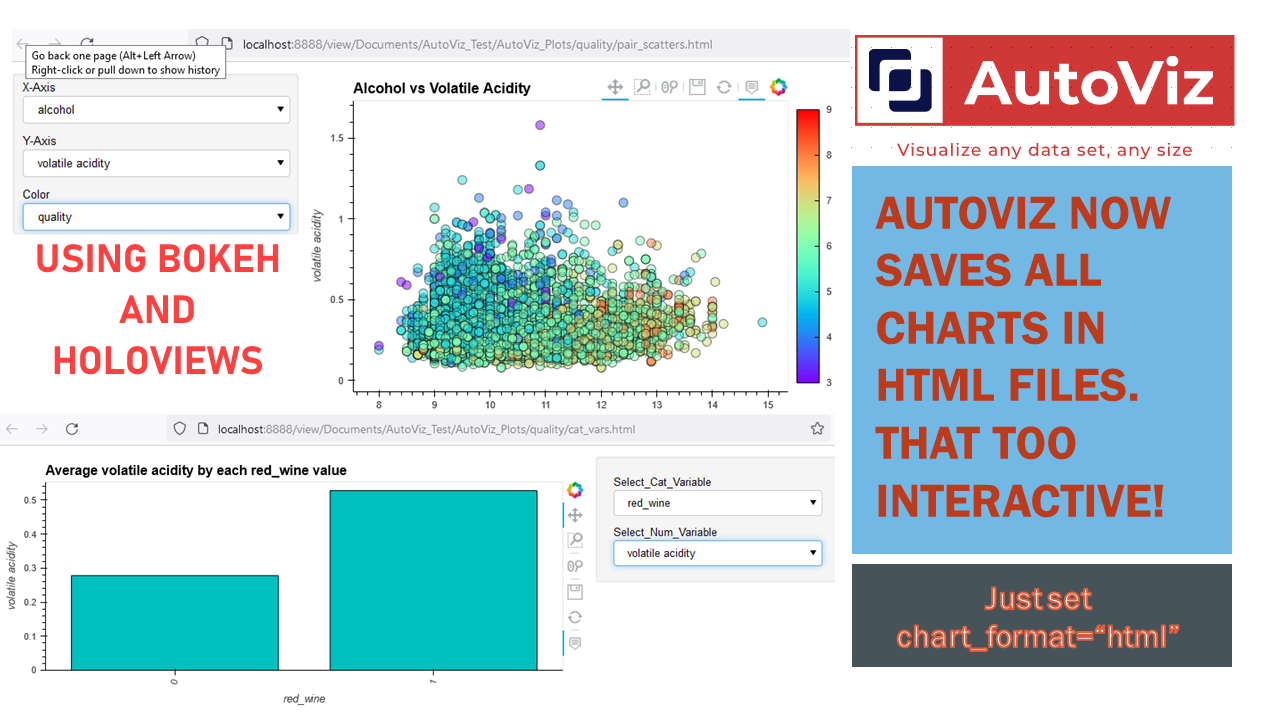

C:\ProgramData\Anaconda3\envs\virtEnv\lib\site-packages\autoviml\Auto_ViML.py in Auto_ViML(train, target, test, sample_submission, hyper_param, feature_reduction, scoring_parameter, Boosting_Flag, KMeans_Featurizer, Add_Poly, Stacking_Flag, Binning_Flag, Imbalanced_Flag, verbose)

1109 'alpha': np.logspace(-5,3),

1110 },

-> 1111 "XGBoost": {

1112 'learning_rate': sp.stats.uniform(scale=1),

1113 'gamma': sp.stats.randint(0, 32),

UnboundLocalError: local variable 'sp' referenced before assignment
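The error message points to a Python scoping rule rather than a missing SciPy install. If `Auto_ViML()` assigns or imports the name `sp` anywhere in its own body (an assumption; the library source is not shown here), Python treats `sp` as a local variable for the whole function, so a module-level `import scipy as sp` is shadowed, and an import in the notebook never reaches the library module's namespace in the first place. A minimal sketch of the pattern, using `math` as a stand-in for `scipy` and hypothetical function names:

```python
# Minimal reproduction of the UnboundLocalError pattern from the traceback.
# Assumption: `math` stands in for `scipy` so the sketch runs without SciPy;
# the function names are hypothetical, not AutoViML's.
import math as sp  # module-level import, like `import scipy as sp` at the top of a file


def build_search_space_buggy():
    # `sp` is bound later in this function, so the compiler treats it as a
    # local name for the WHOLE function body. Reading it here raises
    # UnboundLocalError even though a module-level `sp` exists.
    space = {"gamma": sp.floor(31.9)}
    import math as sp  # hypothetical function-local (re)import of the same alias
    return space


def build_search_space_fixed():
    # Nothing in this function binds `sp`, so the name resolves to the
    # module-level import.
    space = {"gamma": sp.floor(31.9)}
    return space


if __name__ == "__main__":
    try:
        build_search_space_buggy()
    except UnboundLocalError as exc:
        print("buggy:", exc)
    print("fixed:", build_search_space_fixed())
```

If this is the cause, the fix belongs in the library rather than the notebook: remove the later local binding of `sp` (or move the import to the start of the function) so every reference in the function resolves to the same name.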
