An optimizing backend toolkit for PureScript's CoreFn.

PureScript's built-in optimizer leaves a lot on the table by only performing

naive syntactic rewrites in the JavaScript specific backend.

purescript-backend-optimizer consumes the compiler's high-level IR (CoreFn)

and applies a more aggressive inlining pipeline (subsuming existing

optimizations) that is backend agnostic.

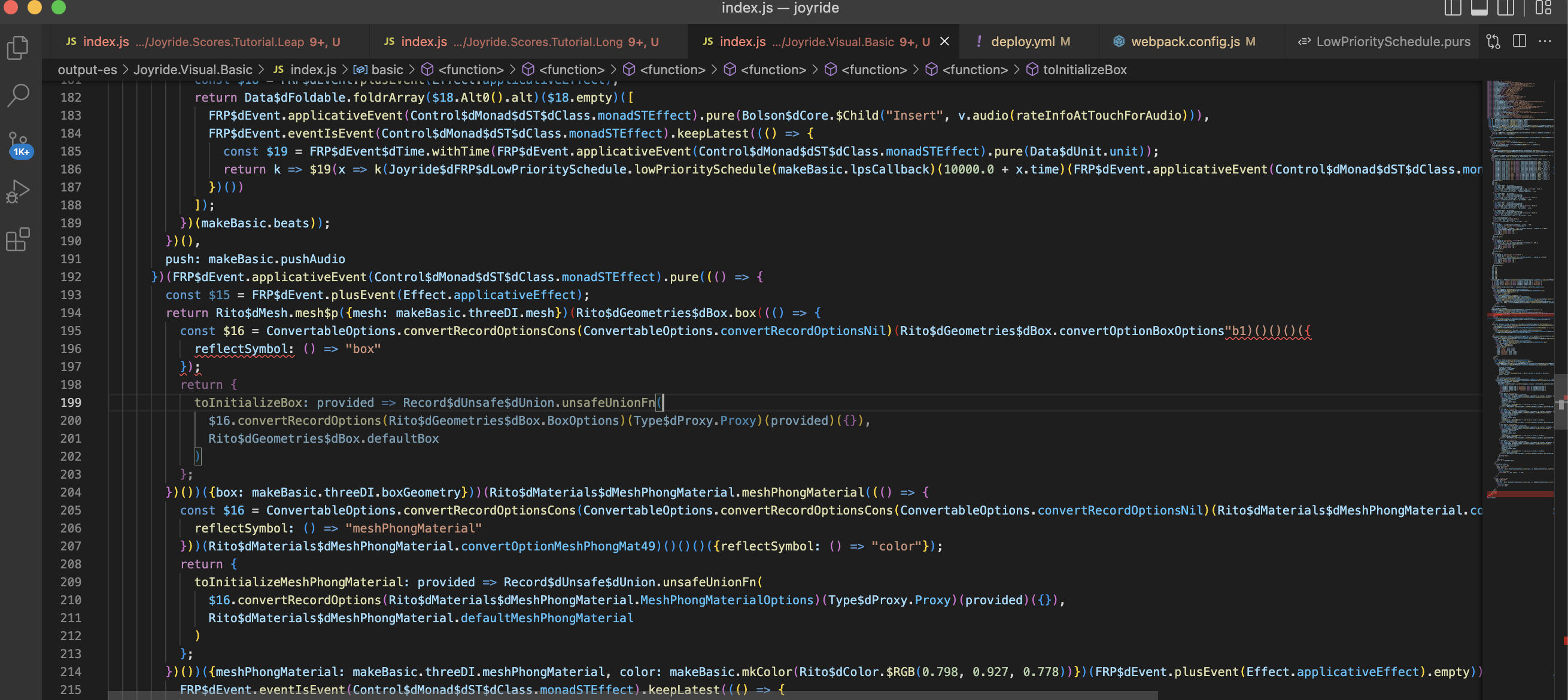

It additionally ships with an alternative code-generator which outputs modern ECMAScript with additional runtime optimizations, resulting in lighter, faster bundles.

| Example | Input | purs |

purs-backend-es |

|---|---|---|---|

| Lenses | Input | Output | Output |

| Prisms | Input | Output | Output |

| Variant | Input | Output | Output |

| Heterogeneous | Input | Output | Output |

| Uncurried CPS | Input | Output | Output |

| Generics | Input | Output | Output |

| Fusion (Fold) | Input | Output | Output |

| Fusion (Unfold) | Input | Output | Output |

| Recursion Schemes | Input | Output | Output |

| HTML DSL | Input | Output | Output |

| Imperative Loops | Input | Output | Output |

npm install purs-backend-espurs-backend-es requires PureScript 0.15.4 or greater. Add it as a backend in your spago.dhall.

+, backend = "purs-backend-es build"You should likely only do this for a production build configuration, since

optimization and code-generation are currently not incremental. For example,

you can create a separate prod.dhall with the following:

./spago.dhall // { backend = "purs-backend-es build" }By default, purs-backend-es will read corefn.json files from output, and

generate code in output-es following the same directory pattern as the

compiler's JS backend.

See the CLI help for options:

purs-backend-es --helpspago bundle-app is not compatible with purs-backend-es. To bundle your app,

you can invoke purs-backend-es bundle-app. It supports the same CLI arguments

as spago bundle-app.

spago build && purs-backend-es bundle-app --no-build- Uses arrow functions,

const/letblock scope, and object spread syntax. - Uses a much lighter-weight data encoding (using plain objects) which is significantly faster to dispatch. By default, we use string tags, but integer tags are also supported via a CLI flag for further performance improvement and size reduction.

- Newtypes over

EffectandSTalso benefit from optimizations. With general inlining, even instances that aren't newtype-derived benefit from the same optimizations. - TCO fires in more cases. For example, you can now write TCO loops over

purescript-existsbecause the eliminator is inlined away. - TCO supports mutually recursive binding groups.

- Optimized pattern matching eliminates redundant tests.

Code size and performance improvement varies by usecase, but we've generally observed:

- 25-35% improvement in runtime.

- 20-25% improvement in minified bundle size.

- 15-20% improvement in minified+gzip bundle size.

The inliner follows some basic heuristics, but to get the most out of it you should configure inlining directives. An inlining directive tells the optimizer under what conditions it should inline a definition.

The following inlining directives are supported:

default- A definition is inlined using default heuristics (unspecified).never- A definition is never inlined.always- A definition is inlined at every reference.arity=n- Wherenis a positive integer, a definition is inlined when at leastnarguments are applied.

An inlining directive may be applied to a top-level binding or top-level accessor.

module Example where

import Prelude

myAdd :: Int -> Int -> Int

myAdd a b = a + bThe myAdd function would likely already be inlined since it is so small, but

to guarantee that it is always inlined after two arguments are applied, you would

write the following directive:

Example.myAdd arity=2

For instance methods, you should use named instances and a top-level accessor:

module Example where

import Prelude

data MyData = Zero | One

instance semigroupMyData :: Semigroup MyData where

append = case _, _ of

Zero, _ -> Zero

_, Zero -> Zero

_, _ -> OneExample.semigroupMyData.append arity=2

It's possible to refer to unnamed instances through their compiler-generated name, however this is quite difficult to maintain.

Sometimes instances are parameterized by other constraints:

module Example where

import Prelude

data Product f g a = Product (f a) (g a)

instance functorProduct :: (Functor f, Functor g) => Functor (Product f g) where

map f (Product a b) = Product (f <$> a) (f <$> b)Example.functorProduct(..).map arity=2

Note the (..) between the name and the accessor, which will match

applications of known instance dictionaries.

Inlining directives can be configured in three ways:

In any given module header you can add @inline comments with the above syntax:

-- @inline Example.myAdd arity=2

module AnotherExample where

import Example

...Directives configured this way only apply to the current module.

In any given module header, you can add @inline export directives for definitions

in the current module:

-- @inline export myAdd arity=2

-- @inline export semigroupMyData.append arity=1

module Example where

...Directives configured this way apply to the current module and downstream modules.

Note: They must be defined in the module header to due to an upstream compiler limitation.

You can provide a directive file to purs-backend-es:

purs-backend-es build --directives my-directives.txtEach line should contain an inlining directive using the above syntax, with the

additional support of -- line comments. These directives will take precedence

over any defaults or exported directives, so you can tweak inlining for your

project however you see fit.

Precedence applies in the following order (most specific to least specific):

| Location | Affects |

|---|---|

Module A's header, @inline module B directive |

Module B's usages in module A |

| Directives file | All modules |

Module A's header, @inline export module A directive |

Module A's usages in all modules |

| Default heuristics | All modules |

purs-backend-es can also trace the rewrite passes taken when optimizing a

top-level expression via the --trace-rewrites CLI arg. This may help in

debugging an unexpected or non-optimal result.

purescript-backend-optimizer consumes the PureScript compiler's high-level

intermediate representation (IR) known as CoreFn. CoreFn has no defined

evaluation semantics, but we operate under assumptions based on common use:

-

We make decisions on what to keep or discard using Fast and Loose Reasoning, assuming that CoreFn is pure and total.

In practical terms, this means we may take the opportunity to remove any code that we know for certain is not demanded. However, at times we may also choose to propagate known bottoms. Thus, non-totality is considered undefined behavior for the purposes of CoreFn's semantics.

-

We preserve sharing of redexes under common assumptions of call-by-value semantics. Like non-totality, we consider a specific evaluation order to be undefined behavior in CoreFn. However, we assume that all terms under a redex should be in normal form.

In practical terms, this means we will not delay function arguments that most would expect to be evaluated immediately.