A PyTorch implementation of the Single Shot MultiBox Detector (SSD) from the 2016 paper by Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, and Alexander C. Berg. The official, original Caffe implementation is listed in the references at the end of this document.

- Install PyTorch by selecting your environment on the website and running the appropriate command.

- Clone this repository.

- Note: We currently only support Python 3+.

- Then download the dataset by following the instructions below.

- We now support Visdom for real-time loss visualization during training!

- To use Visdom in the browser:

# First install the Python server and client

pip install visdom

# Start the server (probably in a screen or tmux)

python -m visdom.server

- Then (during training) navigate to http://localhost:8097/ (see the Train section below for training details).

- Note: For training, we currently support VOC and COCO, and aim to add ImageNet support soon.
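If the page at http://localhost:8097/ does not load, it can help to confirm that something is actually listening on that port before starting a long training run. The following is a minimal sketch using only the Python standard library; the helper name `visdom_server_reachable` is our own invention for illustration and is not part of Visdom or of this repository.

```python
import socket


def visdom_server_reachable(host="localhost", port=8097, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds.

    Hypothetical helper: it only checks that *some* server accepts
    connections on the port, not that the server is really Visdom.
    """
    try:
        # create_connection raises OSError (refused, timed out, ...) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if visdom_server_reachable():
        print("A server is listening at http://localhost:8097/")
    else:
        print("Nothing on port 8097; start one with: python -m visdom.server")
```

The default port 8097 is taken from the URL above; pass a different `port` if you started the server on another one.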

To make things easy, we provide bash scripts to handle the dataset downloads and setup for you. We also provide simple dataset loaders that inherit torch.utils.data.Dataset, making them fully compatible with the torchvision.datasets API.

Microsoft COCO: Common Objects in Context

# specify a directory for dataset to be downloaded into, else default is ~/data/

sh data/scripts/COCO2014.sh

PASCAL VOC: Visual Object Classes

# specify a directory for dataset to be downloaded into, else default is ~/data/

sh data/scripts/VOC2007.sh # <directory>

# specify a directory for dataset to be downloaded into, else default is ~/data/

sh data/scripts/VOC2012.sh # <directory>

- First download the fc-reduced VGG-16 PyTorch base network weights at: https://s3.amazonaws.com/amdegroot-models/vgg16_reducedfc.pth

- By default, we assume you have downloaded the file into the ssd.pytorch/weights dir:

mkdir weights

cd weights

wget https://s3.amazonaws.com/amdegroot-models/vgg16_reducedfc.pth

- To train SSD using the train script, simply specify the parameters listed in train.py as flags or manually change them.

python train.py

- Note:

- For training, an NVIDIA GPU is strongly recommended for speed.

- For instructions on Visdom usage/installation, see the Installation section.

- You can pick up training from a checkpoint by specifying the path as one of the training parameters (again, see train.py for options).
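For readers unfamiliar with how "parameters as flags" works, here is a minimal sketch of the general pattern using Python's standard `argparse` module. The flag names and defaults below (`--dataset`, `--batch_size`, `--resume`) are assumptions chosen for illustration; check train.py for the names and defaults it actually defines.

```python
import argparse


def build_parser():
    # Illustrative flags only; train.py is the authority on the real ones.
    parser = argparse.ArgumentParser(description="SSD training options (sketch)")
    parser.add_argument("--dataset", default="VOC", choices=["VOC", "COCO"],
                        help="which dataset to train on")
    parser.add_argument("--batch_size", type=int, default=32,
                        help="number of images per training batch")
    parser.add_argument("--resume", type=str, default=None,
                        help="path to a checkpoint to resume training from")
    return parser


# Simulate: python train.py --resume weights/checkpoint.pth
args = build_parser().parse_args(["--resume", "weights/checkpoint.pth"])
print(args.dataset, args.batch_size, args.resume)
# prints: VOC 32 weights/checkpoint.pth
```

Any flag that is not given on the command line falls back to its default, which is why "manually changing" a default in the script and passing a flag are interchangeable.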

To evaluate a trained network:

python eval.py

You can specify the parameters listed in the eval.py file by passing them as flags or by changing them manually in the file.

| Original | Converted weiliu89 weights | From scratch w/o data aug | From scratch w/ data aug |
|---|---|---|---|
| 77.2 % | 77.26 % | 58.12 % | 77.43 % |

GTX 1060: ~45.45 FPS
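To put the FPS figure in perspective, 45.45 FPS corresponds to roughly 22 ms per frame. The sketch below shows one generic way to measure throughput for any callable and to convert FPS to latency. It is not the benchmark procedure used for the number above; the function names `measure_fps` and `fps_to_latency_ms` are hypothetical.

```python
import time


def measure_fps(fn, n_iters=100, warmup=10):
    """Call fn repeatedly and return calls per second (wall-clock)."""
    for _ in range(warmup):
        fn()  # warm-up calls are excluded from the timing
    start = time.perf_counter()
    for _ in range(n_iters):
        fn()
    elapsed = time.perf_counter() - start
    return n_iters / elapsed


def fps_to_latency_ms(fps):
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


print(round(fps_to_latency_ms(45.45), 1))  # prints: 22.0
```

When timing a model on a GPU, keep in mind that CUDA kernels run asynchronously, so the callable should synchronize (for example with `torch.cuda.synchronize()`) before returning, otherwise the measured time can be misleadingly short.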

- We are trying to provide PyTorch state_dicts (dict of weight tensors) of the latest SSD model definitions trained on different datasets.

- Currently, we provide the following PyTorch models:

- SSD300 trained on VOC0712 (newest PyTorch weights)

- SSD300 trained on VOC0712 (original Caffe weights)

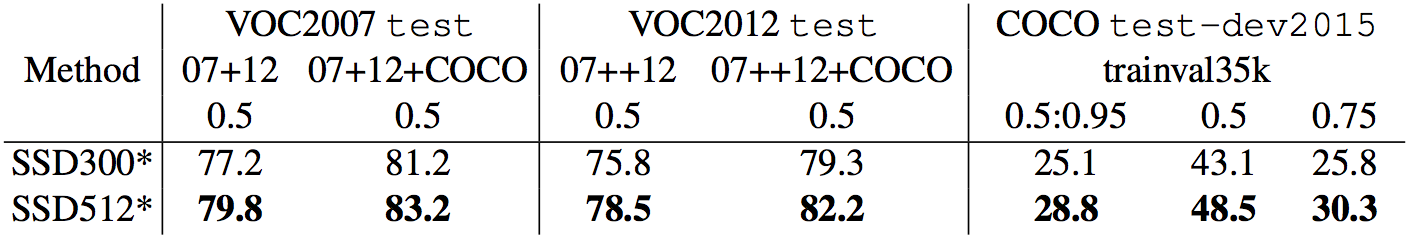

- Our goal is to reproduce this table from the original paper

- Make sure you have jupyter notebook installed.

- To install jupyter notebook with pip:

# make sure pip is upgraded

pip3 install --upgrade pip

# install jupyter notebook

pip install jupyter

# Run this inside ssd.pytorch

jupyter notebook

- Now navigate to demo/demo.ipynb at http://localhost:8888 (by default) and have at it!

- Works on CPU (may have to tweak cv2.waitKey for optimal fps) or on an NVIDIA GPU

- This demo currently requires opencv2+ w/ python bindings and an onboard webcam

- You can change the default webcam in demo/live.py

- Install the imutils package to leverage multi-threading on CPU:

pip install imutils

- Running python -m demo.live opens the webcam and begins detecting!

We have accumulated the following to-do list, which we hope to complete in the near future:

- Still to come:

- Support for the MS COCO dataset

- Support for SSD512 training and testing

- Support for training on custom datasets

Note: Unfortunately, this is just a hobby of ours and not a full-time job, so we'll do our best to keep things up to date, but no guarantees. That being said, thanks to everyone for your continued help and feedback as it is really appreciated. We will try to address everything as soon as possible.

- Wei Liu, et al. "SSD: Single Shot MultiBox Detector." ECCV2016.

- Original Implementation (CAFFE)

- A huge thank you to Alex Koltun and his team at Webyclip for their help in finishing the data augmentation portion.

- A list of other great SSD ports that were sources of inspiration (especially the Chainer repo):