WebVectors is a toolkit to serve vector semantic models (particularly, prediction-based word embeddings, as in word2vec or ELMo) over the web, making it easy to demonstrate their abilities to the general public. It requires Python >= 3.6, and uses Flask, Gensim and simple_elmo under the hood.

Working demos:

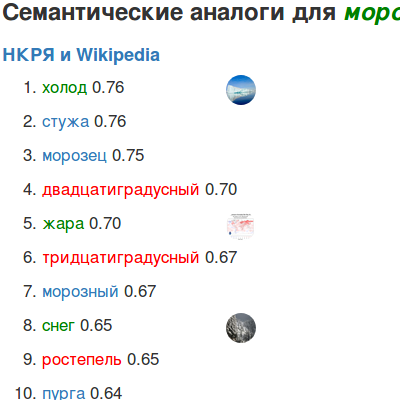

- https://rusvectores.org (for Russian)

- http://vectors.nlpl.eu/explore/embeddings/ (for English and Norwegian)

The service can either be integrated into the Apache web server as a WSGI application or run as a standalone server using Gunicorn (we recommend the latter option).

- Clone the WebVectors git repository (`git clone https://github.com/akutuzov/webvectors.git`) into a directory accessible by your web server.

- Install Apache for Apache integration or Gunicorn for a standalone server.

- Install all the Python requirements (pip3 install -r requirements.txt)

- If you want to use PoS tagging for user queries, install UDPipe, Stanford CoreNLP, Freeling or another PoS tagger of your choice.

- Configure the files:

Add the following line to the Apache configuration file:

WSGIScriptAlias /WEBNAME "PATH/syn.wsgi",

where WEBNAME is the alias for your service relative to the server root (webvectors for http://example.com/webvectors), and PATH is your filesystem path to the WebVectors directory.

In all *.wsgi and *.py files in your WebVectors directory, replace webvectors.cfg in the string

config.read('webvectors.cfg')

with the absolute path to the webvectors.cfg file.
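The absolute path matters because a WSGI application is typically not started from the project directory, so a relative `webvectors.cfg` may not resolve. The sketch below shows one way to make a missing or relative path fail loudly; the helper name `load_config` is hypothetical and is not part of WebVectors.

```python
import configparser
import os


def load_config(cfg_path):
    """Read a config file, insisting on an absolute path (hypothetical helper)."""
    if not os.path.isabs(cfg_path):
        raise ValueError(f"Expected an absolute path, got: {cfg_path}")
    config = configparser.RawConfigParser()
    # config.read() silently skips files it cannot open and returns the list
    # of files it actually parsed, so an empty list means nothing was loaded.
    loaded = config.read(cfg_path)
    if not loaded:
        raise FileNotFoundError(f"Could not read config file: {cfg_path}")
    return config
```

Calling `load_config('webvectors.cfg')` raises `ValueError`, which is easier to diagnose than an empty configuration at request time.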

Set up your service using the configuration file webvectors.cfg.

The most important settings are:

- `root` - absolute path to your _WebVectors_ directory (**NB: end it with a slash!**)

- `temp` - absolute path to your temporary files directory

- `font` - absolute path to a TTF font you want to use for plots (otherwise, the default system font will be used)

- `detect_tag` - whether to use automatic PoS tagging

- `default_search` - URL of search engine to use on individual word pages (for example, https://duckduckgo.com/?q=)
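Since a missing trailing slash in `root` is easy to overlook, a quick sanity check can help before starting the service. This is only a sketch: the section name `Files and directories` and the sample paths are assumptions made for illustration, so adjust them to match your actual `webvectors.cfg`.

```python
import configparser

# Hypothetical sample; section name and paths are illustrative assumptions.
SAMPLE_CFG = """
[Files and directories]
root = /var/www/webvectors/
temp = /tmp/webvectors/
"""


def check_root(config, section="Files and directories"):
    """Return a list of problems found with the `root` setting."""
    root = config.get(section, "root")
    problems = []
    if not root.startswith("/"):
        problems.append("root is not an absolute path")
    if not root.endswith("/"):
        problems.append("root does not end with a slash")
    return problems


config = configparser.RawConfigParser()
config.read_string(SAMPLE_CFG)
print(check_root(config))  # prints [] when both checks pass
```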

Tags

Models can use arbitrary tags assigned to words (for example, part-of-speech tags, as in boot_NOUN). If your models are trained on words with tags, you should switch this on in webvectors.cfg (use_tags variable).

Then, WebVectors will allow users to filter their queries by tags. You should also specify the list of allowed tags (tags_list variable in webvectors.cfg) and the list of tags which will be shown to the user (tags.tsv file).
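To illustrate the `word_TAG` convention, here is a minimal sketch of splitting a tagged query and checking the tag against an allowed list. The function name and the tag set are hypothetical; WebVectors' own query handling may differ.

```python
def split_tagged(token, allowed_tags):
    """Split 'word_TAG' into (word, tag); tag is None if absent or not allowed."""
    # rpartition splits on the LAST underscore, so words that themselves
    # contain underscores (e.g. 'new_york_NOUN') keep their full form.
    word, sep, tag = token.rpartition("_")
    if sep and word and tag in allowed_tags:
        return word, tag
    return token, None


ALLOWED = {"NOUN", "VERB", "ADJ"}  # hypothetical stand-in for tags_list

print(split_tagged("boot_NOUN", ALLOWED))      # ('boot', 'NOUN')
print(split_tagged("new_york_NOUN", ALLOWED))  # ('new_york', 'NOUN')
print(split_tagged("boot", ALLOWED))           # ('boot', None)
```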

Models daemon

WebVectors uses a daemon, which runs in the background and actually processes all embedding-related tasks. It can also run on a different machine, if you want. Thus, in webvectors.cfg you should specify the host and port on which this daemon will listen.

After that, start the actual daemon script word2vec_server.py. It will load the models and open a listening socket. This daemon must be active permanently, so you may want to launch it using screen or a similar tool.
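Because the web front end depends on the daemon being reachable, it can be handy to verify that something is listening on the configured host and port. The sketch below only tests TCP connectivity and does not speak the daemon's protocol; the function name is hypothetical.

```python
import socket


def daemon_is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError (e.g. connection refused, timeout)
        # when nothing accepts connections at that address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `daemon_is_listening("localhost", 12666)` would report whether a process accepts connections on that (illustrative) port.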

Models

The list of models you want to use is defined in the file models.tsv. It consists of tab-separated fields:

- model identifier

- model description

- path to model

- identifier of localized model name

- is the model default or not

- does the model contain PoS tags

- training algorithm of the model (word2vec/fastText/etc)

- size of the training corpus in words

- language of the model

The model identifier will be used as the name for checkboxes in the web pages, and the same identifier must be used in the strings.csv file when denoting model names. The language of the model is passed as an argument to the lemmatizer function; it is a simple string with the name of the language (e.g. "english", "russian", "french").
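The nine-field layout above can be read with Python's `csv` module. This is a sketch under assumptions: the field names in `FIELDS`, the sample row, and the absence of a header row are all illustrative, not taken from the actual WebVectors code.

```python
import csv
import io

# Hypothetical names for the nine tab-separated fields, in the order listed above.
FIELDS = ["identifier", "description", "path", "string_id", "default",
          "has_tags", "algorithm", "corpus_size", "language"]

# Illustrative row; the values are made up for this example.
SAMPLE = ("enwiki\tEnglish Wikipedia\t/models/enwiki.bin\tenwiki_name\t"
          "True\tTrue\tword2vec\t2000000000\tenglish\n")


def read_models(handle):
    """Map each model identifier to a dict of its fields."""
    models = {}
    # QUOTE_NONE keeps quote characters in descriptions as literal text.
    for row in csv.reader(handle, delimiter="\t", quoting=csv.QUOTE_NONE):
        if not row:
            continue  # skip blank lines
        if len(row) != len(FIELDS):
            raise ValueError(f"Expected {len(FIELDS)} fields, got {len(row)}: {row}")
        models[row[0]] = dict(zip(FIELDS, row))
    return models


models = read_models(io.StringIO(SAMPLE))
print(models["enwiki"]["language"])  # english
```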

Models can currently be in 4 formats:

- plain text _word2vec_ models (ends with `.vec`);

- binary _word2vec_ models (ends with `.bin`);

- Gensim format _word2vec_ models (ends with `.model`);

- Gensim format _fastText_ models (ends with `.model`).

WebVectors will automatically detect the format of each model and load all of them into memory. The users will be able to choose among the loaded models.
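Detection by file extension could look roughly like the sketch below. It is not the actual WebVectors loader: the function names are hypothetical, and it assumes `.model` files were saved from Gensim vectors via their `save()` method. Gensim is imported lazily, so `detect_format` works without it installed.

```python
def detect_format(path):
    """Guess the model format from the file extension."""
    if path.endswith(".vec"):
        return "word2vec-text"
    if path.endswith(".bin"):
        return "word2vec-binary"
    if path.endswith(".model"):
        return "gensim"
    raise ValueError(f"Unrecognized model extension: {path}")


def load_model(path):
    """Load a model with Gensim according to its detected format."""
    from gensim.models import KeyedVectors  # lazy import: needs Gensim installed

    fmt = detect_format(path)
    if fmt == "gensim":
        # Assumption: the file was produced by save() on a KeyedVectors-like object.
        return KeyedVectors.load(path)
    return KeyedVectors.load_word2vec_format(path, binary=(fmt == "word2vec-binary"))
```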

Localization

WebVectors uses the strings.csv file as the source of localized strings. It is a comma-separated file with 3 fields:

- identifier

- string in language 1

- string in language 2

By default, language 1 is English and language 2 is Russian. This can be changed in webvectors.cfg.
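As an illustration of the three-column layout, the following sketch reads such a file into one lookup table per language. The language codes, the sample rows, and the assumption that there is no header row are all hypothetical.

```python
import csv
import io

# Illustrative content: identifier, string in language 1, string in language 2.
SAMPLE = "similar,Similar words,Похожие слова\nabout,About,О проекте\n"


def read_strings(handle, languages=("en", "ru")):
    """Return {language: {identifier: localized string}}."""
    strings = {lang: {} for lang in languages}
    for row in csv.reader(handle):
        if len(row) != len(languages) + 1:
            continue  # skip blank or malformed lines
        identifier, *texts = row
        for lang, text in zip(languages, texts):
            strings[lang][identifier] = text
    return strings


strings = read_strings(io.StringIO(SAMPLE))
print(strings["en"]["about"])  # About
```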

Templates

The actual web pages shown to the user are defined in the files templates/*.html.

Tune them as you wish. The main menu is defined in base.html.

Static files

If your application does not find the static files (bootstrap and js scripts), edit the variable static_url_path in run_syn.py. Set it to the absolute path to the data folder.

Query hints

If you want query hints to work, do not forget to compile your own list of hints (JSON format). An example of such a list is given in data/example_vocab.json.

The real URL of this list should be set in data/hint.js.

Running WebVectors

Once you have modified all the settings according to your workflow, made sure the templates are OK for you, and launched the models daemon, you are ready to actually start the service. If you use Apache integration, simply restart/reload Apache. If you prefer the standalone option, execute the following command in the root directory of the project:

gunicorn run_syn:app_syn -b address:port

where address is the address on which the service should be active (can be localhost), and port is the port to listen on (for example, 9999).

Support for contextualized embeddings

You can turn on support for contextualized embedding models (currently ELMo is supported). In order to do that:

- Install the `simple_elmo` package.

- Download an ELMo model of your choice (for example, here).

- Create a type-based projection in the `word2vec` format for a limited set of words (for example, 10 000), given the ELMo model and a reference corpus. For this, use the `extract_elmo.py` script we provide:

python3 extract_elmo.py --input CORPUS --elmo PATH_TO_ELMO --outfile TYPE_EMBEDDING_FILE --vocab WORD_SET_FILE

It will run the ELMo model over the provided corpus and generate static averaged type embeddings for each word in the word set. They will be used as lexical substitutes.

- Prepare a frequency dictionary to use with the contextualized visualizations, as a plain-text tab-separated file, where the first column contains words and the second column contains their frequencies in the reference dictionary of your choice. The first line of this file should contain one integer matching the size of the corpus in word tokens.

- In the `[Token]` section of the `webvectors.cfg` configuration file, switch `use_contextualized` to True and state the paths to your `token_model` (pre-trained ELMo), `type_model` (the type-based projection you created with our script) and `freq_file`, which is your frequency dictionary.

- In the `ref_static_model` field, specify any of your static word embedding models (just its name), which you want to use as the target of hyperlinks from words in the contextualized visualization pages.

- The page with ELMo lexical substitutes will be available at http://YOUR_ROOT_URL/contextual/
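The frequency dictionary format described above (total token count on the first line, then one `word<TAB>frequency` pair per line) can be produced with a sketch like the following. The helper names are hypothetical, and tokenization is assumed to have been done already.

```python
from collections import Counter
import io


def write_freq_file(tokens, handle):
    """Write the corpus size, then tab-separated word frequencies."""
    counts = Counter(tokens)
    total = sum(counts.values())  # corpus size in word tokens
    handle.write(f"{total}\n")
    for word, freq in counts.most_common():
        handle.write(f"{word}\t{freq}\n")


def read_freq_file(handle):
    """Read the file back as (corpus_size, {word: frequency})."""
    total = int(handle.readline().strip())
    freqs = {}
    for line in handle:
        line = line.rstrip("\n")
        if not line:
            continue  # ignore blank lines
        word, freq = line.split("\t")
        freqs[word] = int(freq)
    return total, freqs


buffer = io.StringIO()
write_freq_file("the cat sat on the mat".split(), buffer)
buffer.seek(0)
total, freqs = read_freq_file(buffer)
print(total, freqs["the"])  # 6 2
```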

In case of any problems, please feel free to contact us:

- [email protected] (Andrey Kutuzov)

- [email protected] (Elizaveta Kuzmenko)