Welcome to PyDataAlgo, a comprehensive guide designed to illuminate the intricacies of data structures and algorithms in Python. Whether you're a student, software engineer, or data scientist, this resource aims to bolster your understanding of the foundational elements that are crucial in writing efficient and effective code. Dive into an exploration of primitive and complex data structures, unpack common and advanced algorithmic strategies, and master the concepts that underpin computational problem-solving.

- PyDataAlgo

- Section 1: Understanding Data Structures in Python

- Section 2: Algorithm Types and Their Uses in Python

- Section 3: Unlock the Power of Recursion in Python

- Section 4: Unlock the Power of Iterative Solution in Python

- Section 5: Unlocking the Secrets of Algorithm Efficiency

- Section 6: Space and Time Complexity

- Section 7: Exploring Arrays in Python: Part 1

- Section 8: Exploring Arrays in Python: Part 2

- Section 9: Explore the dynamic nature of lists

Data structures are fundamental components in Python programming. They allow you to organize and manage data efficiently. In Python, we have two main categories of data structures: primitive and non-primitive.

These are the basic building blocks that store single values:

- int: Stores whole numbers, positive or negative, like ages or counts.

- float: Represents floating-point numbers with decimal places, useful for measurements and calculations.

- bool: Stores binary data, either True or False, often used in decision-making.

- str: Represents strings of characters, such as names, addresses, or textual information.

These are more complex and can store collections of data. They are further categorized into two types: linear and non-linear.

Linear data structures organize data sequentially, where each element has a unique predecessor and successor. They can be further divided into two subtypes: static and dynamic.

Static structures have a fixed size that cannot change during runtime. An example of a static structure is an array.

- Arrays: Store elements of the same data type sequentially in memory.

Dynamic structures can grow or shrink during runtime. Examples include linked lists, stacks, and queues.

- Linked Lists: Consist of nodes that contain data and a reference (or link) to the next node.

- Stacks: Follow a Last-In-First-Out (LIFO) order and are often used for tasks like function call management.

- Queues: Follow a First-In-First-Out (FIFO) order, ideal for tasks like task scheduling.

Non-linear data structures do not follow a strict order and can have multiple predecessors or successors. Examples include trees and graphs.

- Trees: Consist of nodes with parent-child relationships. They are used in hierarchical structures.

- Graphs: Consist of nodes (vertices) and edges that connect them, allowing complex relationships.

Algorithms are essentially step-by-step procedures or instructions used to solve specific problems. In Python, they play a crucial role in efficient problem-solving and are widely utilized in various domains, including data analysis, machine learning, and software development.

Let's take a closer look at some common types of algorithms:

This type of algorithm breaks down a problem into smaller subproblems of a similar nature. By solving these subproblems recursively, we can eventually arrive at a solution for the main problem. It's a powerful technique that allows us to solve complex problems by dividing them into more manageable chunks.

Similar to the simple recursive approach, the divide and conquer algorithm also involves breaking down a problem into smaller subproblems. However, it differs in that it combines the solutions to these subproblems to obtain the final solution for the main problem. It's particularly useful for solving problems that can be divided into independent parts.

Two popular examples of the divide and conquer algorithm are Quick Sort and Merge Sort.

Dynamic programming algorithms rely on a technique called memorization. This means that they store the results of subproblems to avoid redundant computations. By utilizing this approach, dynamic programming algorithms solve complex problems more efficiently by breaking them down into overlapping subproblems.

Greedy algorithms aim to find the best possible solution at each step without considering the overall consequences. They are particularly useful for optimization problems where the goal is to maximize or minimize a certain objective. Greedy algorithms are often employed in scenarios where finding the globally optimal solution is not necessary.

Brute force algorithms involve exhaustively checking all possible solutions to a problem. Although they may not be the most efficient, brute-force algorithms guarantee to find the correct solution. They are commonly used when the problem size is small or when other more efficient algorithms are not available.

Randomized algorithms introduce an element of randomness into the problem-solving process. They utilize random inputs or decisions to solve problems. These algorithms are often used in scenarios where the input is uncertain or where randomness can lead to more efficient solutions.

Recursion is a programming technique where a function calls itself to solve a problem. It's like a problem-solving approach where a larger problem is broken down into smaller, similar subproblems.

Imagine Russian nesting dolls, also known as Matryoshka dolls, where each doll contains a smaller doll inside. The process of opening the dolls one by one can be thought of as a recursive operation.

The base case in the Russian doll example is finding the smallest doll with no more dolls inside. This is crucial in recursion to prevent an infinite loop.

The recursive case is the process of opening a doll to reveal a smaller one inside. This case calls the same operation (opening dolls) on a smaller version of the problem (the smaller doll).

In a recursive method, you typically have three main components:

- Base Case: This is the condition that defines when the recursion should stop. It prevents infinite recursion by providing a terminating condition.

- Recursive Case: This is where the function calls itself with a modified version of the problem, making it closer to the base case. It's the part that "solves" a smaller subproblem.

- Unintentional Case: This is the part of the problem that doesn't fall into the base case or recursive case. You should ensure that the recursive method eventually reaches the base case to avoid infinite recursion.

When a recursive function is called, a stack is used to keep track of function calls. Each function call is placed on the stack, and when a function call returns, it is removed from the stack.

Here's a step-by-step example of a recursive function and how it's stored in the stack:

def calculate_factorial(n):

assert n >= 0 and int(n) == n, 'The number must be a positive integer only!'

if n in [0, 1]:

return 1

else:

return n * calculate_factorial(n - 1)factorial(5)is called and added to the stack.factorial(4)is called and added to the stack.factorial(3)is called and added to the stack.factorial(2)is called and added to the stack.factorial(1)is called and added to the stack.factorial(0)is called and added to the stack.

Now, the base case is reached (n == 0).

The calculations start to complete:

factorial(0)returns 1.factorial(1)returns 1 * 1 = 1.factorial(2)returns 2 * 1 = 2.factorial(3)returns 3 * 2 = 6.factorial(4)returns 4 * 6 = 24.factorial(5)returns 5 * 24 = 120.

Each function call returns its result, and as it does so, it is removed from the stack.

Recursion is useful in various situations, including:

- Solving Problems with Divisibility: Recursion is often used

to solve problems that can be divided into smaller, similar subproblems, like sorting and searching algorithms.

- Tree and Graph Structures: When working with tree or graph data structures, recursive algorithms can be more intuitive.

- Mathematical Calculations: Recursion can simplify mathematical operations, such as factorials, exponentials, and Fibonacci sequences.

- Exploring All Possibilities: Recursion can be used to explore all possible solutions or paths in a problem space, like in backtracking algorithms.

Recursion should be used judiciously, and there are situations when it's better to avoid it:

- Performance Concerns: Recursive calls can lead to stack overflow errors for deep recursion. In such cases, iterative solutions or tail recursion may be more suitable.

- Complexity and Debugging: Recursive code can be harder to understand and debug compared to iterative solutions. It's essential to have a clear understanding of how recursion works.

- Limited Stack Size: In some environments, there may be a limit on stack size, which can restrict the use of recursion for deep calls.

In Python, an iterative solution involves solving a problem through a loop-based approach, where you repeatedly execute a set of instructions until a specific condition is met. Iteration is a fundamental concept in programming, and it's often used when you need to perform a task multiple times or when you can't easily express the problem in a recursive manner.

Power of Two (Iterative and Recursive):

Let's use the example of calculating the power of two (2^n) to illustrate both iterative and recursive solutions.

Iterative Solution:

def power_of_two_iterative(n):

result = 1

for _ in range(n):

result *= 2

return resultRecursive Solution:

def power_of_two_recursive(n):

if n == 0:

return 1

else:

return 2 * power_of_two_recursive(n - 1)- The iterative solution uses a for loop to repeatedly multiply the result by 2

ntimes. - The recursive solution uses a function that calls itself with a decreasing value of

nuntilnreaches 0.

Iterative solutions are often preferred in the following situations:

- Performance: Iterative solutions can be more efficient than recursive ones in many cases, as they avoid the overhead of function calls.

- Readability: Iterative code can be more straightforward and easier to read for some problems, especially those that involve looping through data structures.

- Memory Efficiency: Iterative solutions often have lower memory consumption compared to recursive ones, as they don't create a new stack frame for each recursive call.

- Complex Problems: If a problem naturally involves doing something over and over again, like solving a puzzle piece by piece, it's usually fine to use iterative solutions. But for problems that are like a maze with lots of twists and turns, trying to solve them step by step with loops can make your code messy and hard to understand.

- Too Many Steps: Imagine trying to stack a thousand pieces of paper one by one. It's a lot of work, and it might be easier to find a shortcut. Similarly, if you have to do something many, many times, an iterative solution can become inefficient or even lead to errors.

- Running Out of Space: Think of your computer's memory like a shelf with limited space. If you keep adding books (or data) to it, it might run out of space. Iterative solutions sometimes use more memory because they remember where they left off. In such cases, a recursive approach or a different strategy may be better.

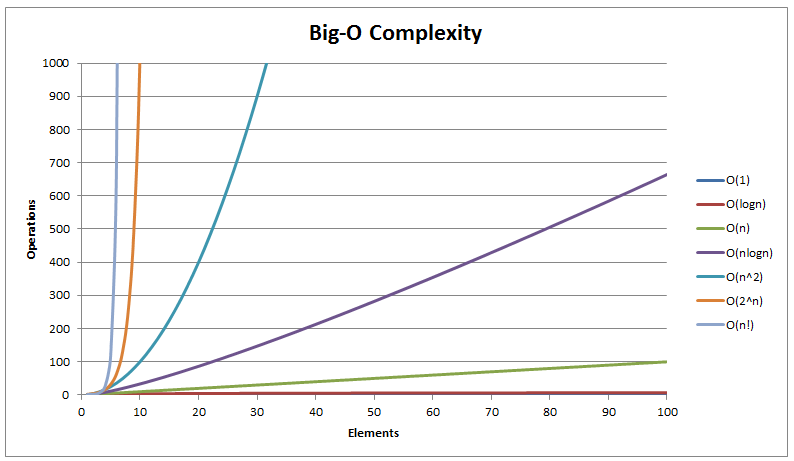

Big O notation (often referred to as time complexity) is a way to describe how the runtime of an algorithm grows as the input size increases. It provides an upper bound on the runtime, helping us understand algorithm performance in the worst-case scenario.

Example: Consider a car's performance, analogous to an algorithm. The time it takes for a car to travel depends on conditions like road, weather, traffic, and engine power.

- The car represents an algorithm.

- Travel time represents the algorithm's runtime.

- Road conditions, weather, and traffic are input conditions or sizes.

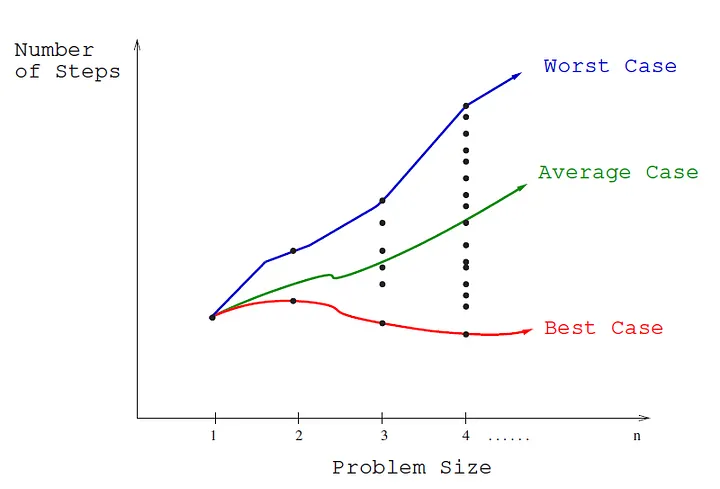

In algorithm analysis, we consider different scenarios:

-

Best Case: The scenario where the algorithm performs at its best, akin to a car on an empty road with perfect conditions.

-

Worst Case: The scenario where the algorithm performs at its worst, similar to a car in congested traffic and bad weather.

-

Average Case: The expected runtime when considering all possible inputs or scenarios.

Image Source: Algorithm Analysis

-

Big O (O-notation): Represents the upper bound or worst-case scenario for an algorithm's runtime. It guarantees that the algorithm won't run any slower than this upper limit as the input size increases.

-

Big Omega (Ω-notation): Represents the lower bound or best-case scenario for an algorithm's runtime. It ensures that the algorithm won't run faster than this lower limit as the input size increases.

-

Big Theta (Θ-notation): Represents a tight bound on an algorithm's runtime, providing both best and worst-case information.

Example: Searching for a book in a library—worst case expressed as O(n), where 'n' is the number of shelves.

Image Source: Understanding Time Complexity

In summary, Big O is the worst-case scenario, Big Omega is the best-case scenario, and Big Theta is an average or tight bound, helping us understand and communicate algorithm performance under different conditions.

Algorithm runtime complexity measures how an algorithm's performance behaves with increasing input size, especially for large datasets.

Types of Runtime Complexities:

- O(1) - Constant Time Complexity: Execution time remains constant regardless of input size (e.g., accessing an element by index).

# O(1) - Constant Time Complexity

def access_first_element(my_list):

# This function returns the first element of the list.

return my_list[0]- O(c) - Constant Time Complexity: Similar to O(1), the algorithm executes in constant time (e.g., simple arithmetic operation).

# O(c) - Constant Time Complexity

def add(a, b):

# This function adds two numbers and returns the result.

return a + b- O(2N) - Linear Time Complexity: Execution time grows linearly with the input size (e.g., simple loop iterating through the input list).

# O(2N) - Linear Time Complexity

def linear_sum(numbers):

# This function calculates the sum of a list of numbers using a loop.

total = 0

for num in numbers:

total += num

return total- O(N^2) - Quadratic Time Complexity: Execution time grows with the square of the input size (e.g., nested loops comparing all pairs of elements).

# O(N^2) - Quadratic Time Complexity

def bubble_sort(arr):

# This function sorts a list using the bubble sort algorithm.

n = len(arr)

for i in range(n):

for j in range(0, n - i - 1):

if arr[j] > arr[j + 1]:

arr[j], arr[j + 1] = arr[j + 1], arr[j]- O(log N) - Logarithmic Time Complexity: Execution time increases logarithmically with the input size (e.g., binary search in a sorted list).

# O(log N) - Logarithmic Time Complexity

def binary_search(arr, target):

# This function performs binary search to find the target element in a sorted list.

left, right = 0, len(arr) - 1

while left <= right:

mid = (left + right) // 2

if arr[mid] == target:

return mid

elif arr[mid] < target:

left = mid + 1

else:

right = mid - 1

return -1

Space complexity measures the memory space an algorithm consumes in relation to its input size.

Types of Space Complexities:

-

O(1) - Constant Space Complexity: Space requirements remain constant, regardless of input size.

-

O(N) - Linear Space Complexity: Memory usage grows linearly with the input size.

-

O(N^2) - Quadratic Space Complexity: Space usage grows with the square of the input size.

Dropping the Constant and Non-dominant Terms:

When analyzing complexities, dropping constant factors and non-dominant terms simplifies analysis, focusing on the highest-growing term.

In real-world scenarios, the dominant term usually has the most impact on an algorithm's efficiency, aiding in algorithm comparison and selection.

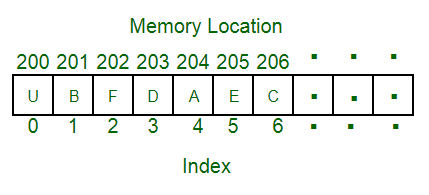

In Python, an array is a collection of elements, similar to a list. Arrays store multiple values under a single name, making it easier to manage and manipulate data. They provide a way to access and organize related values.

Relating Arrays to Cakes and Macaroons:

-

Array as a Box of Cakes: Each cake in the box represents an element in the array. Cakes are of the same type, stored sequentially, and have a unique position, similar to array elements.

-

Multi-Dimensional Array as a Box of Macaroons: A multi-dimensional array is like a box of macaroons, divided into rows and columns, representing a 2D array.

Arrays are stored sequentially in memory. Whether one-dimensional or multi-dimensional, they occupy a continuous block of memory.

Creating an Array:

To create an array, three typical steps are involved:

- Assign to a Variable: Give the array a name.

- Define the Type: Specify the data type.

- Define the Size: Indicate the number of elements (not always required in languages like Python).

Creating an Array:

- Time Complexity: O(n) space complexity (size of the array). O(1) time complexity.

# Import the 'array' class from the 'array' module

from array import array

# Create an array of signed integers ('i') and initialize it with values

# The 'i' type code represents signed integers

# The array will contain the elements: 1, 2, 3, 4, and 5

arr = array('i', [1, 2, 3, 4, 5])Insertion (Append):

- O(1) time complexity for appending an element. O(n) in some cases when resizing is needed.

# Insertion (Append)

arr.append(6) # Appending element 6 to the endDeletion (Pop):

- O(1) time complexity for removing an element from the end.

# Deletion (Pop)

last_element = arr.pop() # Removing and getting the last elementTraversing (Iterating):

- O(n) time complexity for iterating through the entire array.

# Traversing (Iterating)

for element in arr:

print(f"Element: {element}")Accessing (By Index):

- O(1) time complexity for accessing an element by index.

# Accessing (By Index)

third_element = arr[2] # Accessing the element at index 2 (3)Searching:

- O(n) time complexity in the worst case for searching an element, requiring traversal.

# Searching

value_to_find = 4

if value_to_find in arr:

index = arr.index(value_to_find)

print(f"{value_to_find} found at index {index}")Deletion (Remove by Value):

- O(n) time complexity for removing an element by value, involving finding the index and potential shifting.

# Deletion (Remove by Value)

value_to_remove = 3

if value_to_remove in arr:

arr.remove(value_to_remove) # Removing value 3- extend(): Appends elements from another iterable to the end of the array.

# extend()

arr1 = array("i",[1, 2, 3])

arr2 = array("i",[4, 5, 6])

arr1.extend(arr2) # Appends elements from arr2 to arr1- index(): Returns the index of the first occurrence of a specified value.

# index()

index = arr1.index(4) # Returns the index of value 4- reverse(): Reverses the order of elements in the array.

# reverse()

arr1.reverse() # Reverses the order of elements- buffer_info(): Returns a tuple containing the address and size of the array's buffer.

# buffer_info()

buffer_info = arr1.buffer_info() A two-dimensional array in Python is a list of lists, organizing elements in a matrix or grid format. Each element is identified by two indices, allowing representation of tables, matrices, and other 2D structures.

Why Use It:

- Structured Data: Excellent for representing matrices, tables, and grids.

- Efficient Storage: Provides a tabular structure for efficient data storage.

- Matrix Operations: Useful for linear algebra and numerical computing.

- Image Processing: Commonly employed for pixel data in image processing.

Creation:

- Time Complexity: O(m * n) where m is the number of rows and n is the number of columns. Space Complexity: O(m * n).

# Creating a 2D array using lists

matrix = [

[1, 2, 3],

[4, 5, 6],

[7, 8, 9]

]Accessing an Element:

- Time Complexity: O(1) at the end; O(m * n) at the beginning or middle (may involve shifting elements). Space Complexity: O(1) for each element.

# Accessing an element

element = matrix[1][2]

print(f"Accessed element: {element}")Searching:

- Time Complexity: O(m * n) in the worst case; O(m + n) in the average case if the target is in the last row or column. Space Complexity: O(1).

# Searching for an element

def search_2d_array(arr, target):

for i, row in enumerate(arr):

if target in row:

return i, row.index(target)

return -1, -1

target_element = 5

index_i, index_j = search_2d_array(matrix, target_element)

print(f"Element {target_element} found at index ({index_i}, {index_j})") Traversing:

- Time Complexity: O(m * n) as every element needs to be visited. Space Complexity: O(1).

# Traversing the 2D array

print("Traversing the 2D array:")

for row in matrix:

for element in row:

print(element, end=' ')

print()Deletion:

- Time Complexity: O(m * n) for a specific element (may involve shifting elements); O(m * n) for a complete row or column (involves copying the remaining elements). Space Complexity: O(1) for each element.

# Deleting a row

del matrix[1]

print("2nd row deleted:", matrix)

# Deleting an element

matrix[0].pop(1)

print("Element at row 0, column 1 deleted:", matrix)NumPy provides a powerful array class for working with multi-dimensional arrays.

For Creation:

- Time Complexity: O(m * n) for initializing each element. Space Complexity: O(m * n).

import numpy as np

# Using NumPy to create a 2D array

np_matrix = np.array([

[1, 2, 3],

[4, 5, 6],

[7, 8, 9]

])For Deletion:

- Time Complexity: O(m * n) for a specific element (may involve shifting elements); O(m * n) for a complete row or column (involves copying the remaining elements). Space Complexity: O(m * n) as it creates a new array.

Use Arrays When:

- Random Access is Important: O(1) time complexity.

- Fixed Size is Acceptable: Efficient storage with O(1) access.

Avoid Arrays When:

- Dynamic Resizing is Needed: O(n) time complexity for resizing.

- Key-Value Pairs are Required: For scenarios where data retrieval is based on keys rather than indices.

- Complex Operations are Frequent: If operations involve more than basic access or modification.

In programming, a list is an ordered collection of items which can be of varying data types. Lists are mutable, allowing for the modification of their contents.

Lists can store multiple data types:

- Integers:

[1, 2, 3] - Floats:

[1.1, 2.2, 3.3] - Strings:

["apple", "banana", "cherry"] - Mixed:

[1, "apple", 3.14, [2, "banana"]]

Each item in a list is paired with an index, which is used to access the element:

- Indexing starts at 0 for the first element.

- Negative indexing starts at -1 for the last element.

Example:

my_list = ['apple', 'banana', 'cherry']

print(my_list[1]) # Outputs 'banana'- Accessing: Elements in a list are accessed using their index. List indexing is zero-based.

- Example:

my_list[2]accesses the third element in the list.

- Example:

- Traversing: Iterating through a list can be done using loops or list comprehensions.

- Append: Adds an element to the end of the list.

- Time Complexity: O(1)

- Space Complexity: O(1)

- Example:

my_list.append(10)

- Insert: Inserts an element at a specified index, shifting other elements.

- Time Complexity: O(n)

- Space Complexity: O(1)

- Example:

my_list.insert(2, 7)

- Slice: Extracts a portion of the list.

- Time Complexity: O(k) where k is the size of the slice.

- Space Complexity: O(k)

- Example:

sublist = my_list[1:4]

- Delete: Removes an element or a slice from the list.

- Time Complexity: O(n) for a single element, O(k) for a slice.

- Space Complexity: O(1)

- Example:

del my_list[2]ordel my_list[1:4]

- Search: Looks for the presence of an element in the list.

- Time Complexity: O(n)

- Space Complexity: O(1)

- Example:

element_index = my_list.index(7)

Certainly! Let's add examples for each of the List Operations and Functions:

Concatenation is the process of combining two or more lists into a single list.

list1 = [1, 2, 3]

list2 = [4, 5, 6]

result = list1 + list2

print(result)Output:

[1, 2, 3, 4, 5, 6]

Repetition involves duplicating a list multiple times.

original_list = [1, 2, 3]

repeated_list = original_list * 3

print(repeated_list)Output:

[1, 2, 3, 1, 2, 3, 1, 2, 3]

The len() function returns the number of elements in a list.

my_list = [10, 20, 30, 40, 50]

length = len(my_list)

print(length)Output:

5

The max() function returns the maximum value in a list.

numbers = [5, 8, 2, 10, 3]

maximum_value = max(numbers)

print(maximum_value)Output:

10

The min() function returns the minimum value in a list.

numbers = [5, 8, 2, 10, 3]

minimum_value = min(numbers)

print(minimum_value)Output:

2

The sum() function calculates the sum of all elements in a list.

my_list = [1, 2, 3, 4, 5]

sum_of_elements = sum(my_list)

print(sum_of_elements)Output:

15

To convert a string into a list, you can use the list() constructor or the split() method.

my_string = "Hello, World!"

string_list = list(my_string)

print(string_list)Output:

['H', 'e', 'l', 'l', 'o', ',', ' ', 'W', 'o', 'r', 'l', 'd', '!']

my_string = "Hello, World!"

string_list = my_string.split()

print(string_list)Output:

['Hello,', 'World!']

A delimiter is a character used to separate elements in a string or list. Common delimiters include spaces, commas, or any character of choice.

csv_data = "John,Doe,30,Developer"

csv_list = csv_data.split(',')

print(csv_list)Output:

['John', 'Doe', '30', 'Developer']

The join() method is used to concatenate elements of a list into a single string. It takes a delimiter as an argument.

my_list = ['apple', 'banana', 'orange']

list_to_string = ', '.join(my_list)

print(list_to_string)Output:

apple, banana, orange

Similarities and Differences:

Both lists and arrays are used to store collections of data in Python, but they have some key differences in terms of flexibility and functionality.

Lists:

-

Dynamic Size:

- Lists in Python are dynamic, meaning they can grow or shrink in size during runtime.

- You can easily add or remove elements from a list without specifying the size beforehand.

-

Homogeneous or Heterogeneous:

- Lists can store elements of different data types within the same list.

- For example:

my_list = [1, 'hello', 3.14, True]

-

Built-in Functions:

- Python provides a variety of built-in functions for manipulating lists, such as

append(),pop(),remove(), and more.

- Python provides a variety of built-in functions for manipulating lists, such as

-

More Memory Overhead:

- Lists come with additional memory overhead because they store not only the data but also additional information about the list structure.

Arrays:

-

Static Size:

- Arrays have a fixed size determined at the time of creation.

- The size is defined when you declare the array, and it cannot be changed during runtime.

-

Homogeneous:

- Arrays typically store elements of the same data type.

- For example:

import array my_array = array.array('i', [1, 2, 3, 4])

-

Efficient Memory Usage:

- Arrays have less memory overhead compared to lists because they store only the raw data without additional information about the array structure.

Similarities:

-

Indexing:

- Both lists and arrays use zero-based indexing to access elements.

-

Iterating:

- You can iterate through both lists and arrays using loops.

-

Common Operations:

- Many common operations, such as slicing, can be performed on both lists and arrays.

Example:

# List Example

my_list = [1, 2, 3, 4, 5]

print(my_list[2]) # Output: 3

my_list.append(6)

print(my_list) # Output: [1, 2, 3, 4, 5, 6]

# Array Example

import array

my_array = array.array('i', [1, 2, 3, 4, 5])

print(my_array[2]) # Output: 3

# Arrays have a fixed size, so you can't directly append. You need to create a new array.

new_array = array.array('i', my_array + array.array('i', [6]))

print(new_array) # Output: array('i', [1, 2, 3, 4, 5, 6])In summary, lists provide more flexibility and functionality at the cost of increased memory overhead, while arrays offer efficient memory usage but come with a fixed size. The choice between them depends on the specific requirements of your program.

| Operation | Time Complexity | Space Complexity |

|---|---|---|

| Access (by index) | O(1) | O(1) |

| Append | O(1) (amortized) | O(1) |

| Insert (at a specific index) | O(n) | O(1) |

| Pop (from the end) | O(1) | O(1) |

| Pop (from a specific index) | O(n) | O(1) |

| Delete (by value) | O(n) | O(1) |

| Search (for a value) | O(n) | O(1) |

| Iteration | O(n) | O(1) |

| Size (n) | - | O(n) |