databrickslabs / jupyterlab-integration

DEPRECATED: Integrating Jupyter with Databricks via SSH

License: Other


As mentioned in the documentation, either macOS or Linux is required; Windows is currently not supported. What about Windows Subsystem for Linux?

I can see this being useful when running from JupyterHub, which is a multi-tenant Jupyter service. For example, a user runs a local notebook and sends a job to a remote cluster. I would like the Databricks cluster to start when the job is sent, rather than running continuously, and then shut down after the cluster's inactivity timeout setting.

In our case, we run JupyterHub in AKS and Azure Databricks in its own VNet.
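On the start-on-demand point: Databricks exposes a Clusters API endpoint, POST /api/2.0/clusters/start, that starts a terminated cluster, and shutdown after inactivity is governed by the cluster's autotermination_minutes setting. A minimal sketch, assuming a JupyterHub-side hook (not part of this package) that knows the workspace host, a personal access token and the cluster ID, could build that request with only the standard library. The function name build_start_request is hypothetical.

```python
import json
import urllib.request


def build_start_request(host: str, token: str, cluster_id: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the Clusters API 2.0 'start' endpoint.

    Hypothetical helper: host is the workspace URL, token a personal access token.
    """
    url = host.rstrip("/") + "/api/2.0/clusters/start"
    body = json.dumps({"cluster_id": cluster_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Sending it is then urllib.request.urlopen(req); building and sending are kept separate so the request can be inspected or logged first.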

I tried creating a Docker image as an extension of one of the default Jupyter images:

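```dockerfile
FROM jupyter/datascience-notebook:latest

# ENV does not expand "~", so point BASH_ENV at the notebook user's home explicitly
ENV BASH_ENV /home/$NB_USER/.bashrc

# -y avoids the interactive prompt during the build; the env needs its own python/pip
# (the Python version here is an assumption)
RUN conda create -y -n db-jlab python=3.7

RUN echo "source activate db-jlab" > ~/.bashrc

# The env is named db-jlab, so its bin directory is /opt/conda/envs/db-jlab/bin
ENV PATH /opt/conda/envs/db-jlab/bin:$PATH

RUN pip install --upgrade databrickslabs-jupyterlab

RUN databrickslabs-jupyterlab -b

RUN pip install databricks-cli

USER $NB_USER
```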

I am still testing

First off, I just want to say I think this tool is great and generally works flawlessly. I'm running into an issue where I sporadically receive a "Cluster Unreachable" exception that prompts me to restart the cluster. For long-running jobs this can be annoying, since it forces me to restart the cluster and then re-run the job. Any ideas why this is happening? It happens even in the middle of interactive work, when my local machine is active and (in theory) the SSH tunnel is stable (although I haven't tested network disruptions, etc.).

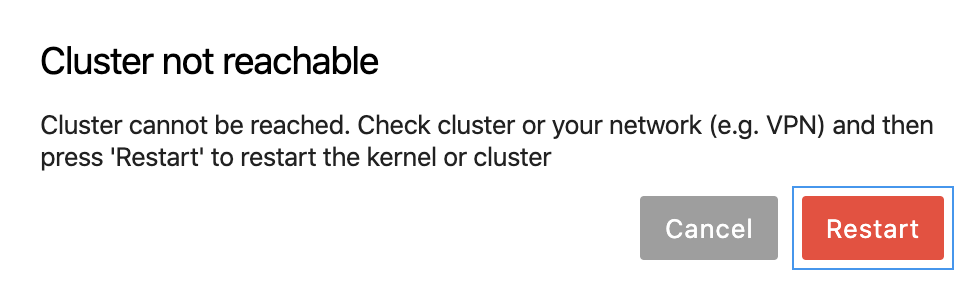

Here's the pop-up that appears:

Any help is much appreciated.

If jupyter_notebook_config.py contains multiline dicts such as

```python
c.NotebookApp.tornado_settings={
    'headers': {
        'SOME_HEADER': 'SOME_VALUE'
    }
}
```

then databrickslabs_jupyterlab.local.write_config splits the first line on "=", which results in

```python
['c.NotebookApp.tornado_settings', '{']
```

The following lines are ignored because they contain no "=". As a result, the correct c.NotebookApp.tornado_settings value is overwritten by c.NotebookApp.tornado_settings={, which breaks the config because only the opening brace remains.

A workaround is to flatten each setting onto a single line, but that can make the config unreadable when there are many lines. So it may make sense to change write_config so that it can handle such cases.

I guess I can fix it and create a pull request.
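One way write_config could handle multi-line values is to let Python's own parser find statement boundaries instead of splitting lines on "=". The sketch below is hypothetical (parse_config and merge_config are illustrative names, not this package's API) and needs Python 3.9+ for ast.unparse. It keeps every top-level statement whole and replaces only the assignments being updated; comments are lost, because ast discards them.

```python
import ast


def parse_config(source: str) -> dict:
    """Map each top-level statement to its full source text.

    Assignments are keyed by their target (e.g. 'c.NotebookApp.tornado_settings'),
    so values spanning several lines stay intact. Other statements (imports,
    calls) get a positional key and are kept verbatim.
    """
    entries = {}
    tree = ast.parse(source)
    for i, node in enumerate(tree.body):
        segment = ast.get_source_segment(source, node)
        if isinstance(node, ast.Assign) and len(node.targets) == 1:
            key = ast.unparse(node.targets[0])
        else:
            key = f"<statement {i}>"
        entries[key] = segment
    return entries


def merge_config(source: str, updates: dict) -> str:
    """Overwrite (or append) `key = repr(value)` for each update; keep the rest."""
    entries = parse_config(source)
    for key, value in updates.items():
        entries[key] = f"{key} = {value!r}"
    return "\n".join(entries.values()) + "\n"
```

Because existing keys keep their position in the dict, an updated setting stays where it was in the file and new settings are appended at the end.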

The package relies on conda, but conda is very heavyweight and not everyone is a huge conda fan.

I believe this is a lower-priority issue, but it would be great if we could use any virtual environment manager we want, such as Poetry.

Hello all, I'm trying to set this up on Azure Databricks following the instructions, and I get this error:

```
databrickslabs-jupyterlab eastus -k -i 0426-155413-ring996

Traceback (most recent call last):
  File "/data/anaconda/envs/db-jlab/bin/databrickslabs-jupyterlab", line 171, in <module>
    version = conda_version()
  File "/data/anaconda/envs/db-jlab/lib/python3.6/site-packages/databrickslabs_jupyterlab/local.py", line 125, in conda_version
    return result["stdout"].strip().split(" ")[1]
IndexError: list index out of range
```

I am able to SSH into master no problem.

The -p option also lists the profile:

```
databrickslabs-jupyterlab -p

PROFILE  HOST                                                     SSH KEY
eastus   https://eastus.azuredatabricks.net/?o=3573392022285404  OK
```

The Databricks CLI connects to the cluster successfully:

```
databricks clusters list --profile eastus

0426-155413-ring996   gpu  RUNNING
0404-233454-navel281  std  TERMINATED
```

Your help is much appreciated.
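The failing line, result["stdout"].strip().split(" ")[1], raises IndexError whenever stdout has no second space-separated token, for example when it is empty. Why stdout was empty on this machine isn't clear from the report. A more defensive parse, sketched here with a hypothetical parse_conda_version helper, searches for the version pattern and returns None instead of crashing.

```python
import re
from typing import Optional

# Matches e.g. "conda 4.8.0" and captures "4.8.0"
_CONDA_VERSION = re.compile(r"conda\s+(\d+(?:\.\d+)+)")


def parse_conda_version(output: str) -> Optional[str]:
    """Extract 'X.Y.Z' from `conda --version` output, or None if it is absent."""
    match = _CONDA_VERSION.search(output)
    return match.group(1) if match else None
```

A caller could feed it both streams, e.g. proc = subprocess.run(["conda", "--version"], capture_output=True, text=True) followed by parse_conda_version(proc.stdout + proc.stderr), and report a clear "could not determine conda version" message on None.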

The README mentions support for DBR 7.0 beta. Is 7.3 LTS ML also supported?

Is it possible to upgrade notebook to the latest version, 6.2.0?

The version of jupyter/notebook in use (notebook==6.0.3) has a problem that was addressed by the "Ensure that cell ids persist after save" PR discussed in jupyter/notebook, and the fix is included in the latest version, 6.2.0. Without it, every save creates a new ID for each cell, which makes reviewing notebooks difficult.

I was checking whether it is possible to upgrade notebook to the latest version, 6.2.0. Your help would be appreciated!

Can you please clarify how the notebook experience would work if I used scala?

I've read the following, and had follow-up questions...

https://github.com/databrickslabs/jupyterlab-integration/blob/master/docs/v2/how-it-works.md

Based on my understanding of that article, the Scala kernel, a JVM, would never run locally on my workstation. Is that correct? It sounds like everything I'm doing in each cell is proxied to the remote cluster, including any logic that would otherwise be executed on the Spark driver.

I am pretty excited by your demo, that I saw here:

https://github.com/databrickslabs/jupyterlab-integration/blob/master/docs/v2/news/scala-magic.md

I guess my concern is that if the Scala kernel never runs on the local machine, it will be difficult to achieve a rich Scala development experience within Jupyter. I think you highlighted some of the limitations already. As of now I've been using almond-sh ( https://almond.sh/ ) as my Scala kernel in Jupyter, and it sounds like the jupyterlab-integration experience would be very different.

Please let me know. I'm very eager to develop scala notebooks in Jupyterlab that will interact with a remote databricks cluster (via db-connect). It seems like a good combination to use jupyterlab for development, along with a remote cluster that I don't need to manage myself.

My conda version is the latest, i.e. newer than 4.7.5, but databrickslabs-jupyterlab still complains that it is too old:

```
(dbconnect) ~ λ databrickslabs-jupyterlab $PROFILE -s -i $CLUSTER_ID
Too old conda version:
Please update conda to at least 4.7.5

(dbconnect) ~ λ conda --version
conda 4.8.0
```
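How the tool compares versions internally isn't visible here. Note that a plain string comparison happens to give the right answer for 4.8.0 against 4.7.5, so it would not by itself explain this report, but it does go wrong once a component reaches two digits ("4.10.3" sorts before "4.7.5"). A minimal sketch of a numeric comparison, with hypothetical helper names:

```python
def version_tuple(version: str) -> tuple:
    """'4.8.0' -> (4, 8, 0). Suffixes such as '4.8.0rc1' are not handled."""
    return tuple(int(part) for part in version.strip().split("."))


def conda_is_recent_enough(version: str, minimum: str = "4.7.5") -> bool:
    # Tuples compare element by element, so 10 > 7 numerically, unlike strings
    return version_tuple(version) >= version_tuple(minimum)
```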

Hi,

Are there any plans to add support for the dbutils.library module? Right now, even a simple dbutils.library.help("install") produces an error:

```
---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-19-28186010f9f4> in <module>
----> 1 dbutils.library.help("install")

AttributeError: 'DbjlUtils' object has no attribute 'library'
```

The ML runtime also has a great %pip magic: https://docs.databricks.com/notebooks/notebooks-python-libraries.html#enable-pip-and-conda-magic-commands

It installs libraries on both the driver and the executor nodes.

In contrast, running %pip install inside a JupyterLab notebook connected to a Databricks cluster installs libraries only on the driver node. This makes it unusable for UDFs, because the executors need the same libraries.

Could you suggest a workaround? Or are there plans to bring such support to jupyterlab-integration?

Is there any way to install notebook-scoped libraries interactively without init scripts?

Thanks in advance
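A possible workaround for UDFs, assuming the library is pure Python: PySpark's SparkContext.addPyFile ships a .py or .zip file to the executors and adds it to their import path. The hypothetical helper below zips a package directory on the driver (where this kernel runs) into the layout zipimport expects. Packages with compiled extensions generally cannot be imported from a zip, so this does not replace %pip for those.

```python
import os
import shutil
import tempfile


def zip_package(package_dir: str) -> str:
    """Zip a pure-Python package directory for SparkContext.addPyFile.

    The archive holds the package folder at its root (e.g. mypkg/__init__.py),
    which is the layout Python's zipimport needs to find `import mypkg`.
    """
    package_dir = os.path.abspath(package_dir)
    parent, name = os.path.split(package_dir)
    out_base = os.path.join(tempfile.mkdtemp(), name)
    # make_archive appends ".zip" and returns the full path of the archive
    return shutil.make_archive(out_base, "zip", root_dir=parent, base_dir=name)
```

For example, after running %pip install for a hypothetical package mypkg on the driver: import mypkg, then spark.sparkContext.addPyFile(zip_package(os.path.dirname(mypkg.__file__))).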

Hello,

I was wondering whether there is any way for notebooks connected to Databricks via SSH to read files on the local machine.

I have a YAML file and a notebook on my local machine. I opened the notebook in JupyterLab connected to Databricks via SSH, and the notebook tried to read the YAML file, but this did not work because the YAML file does not exist on Databricks. The only workaround I could find is uploading the YAML file to DBFS so that the notebook can read it from there. Is there a better way to do this?

Thanks.

Hi all, thanks for this nice effort and great work! However, I miss the ability to reverse the direction of the connection (e.g. connecting from my Kubernetes cluster to the Databricks cluster).

So, how is this different from Jupyter Gateway or Jupyter Enterprise Gateway?

Since Anaconda's licensing is incompatible with some corporate structures, it is important to support the open-source pip package manager instead.

I will investigate and see what can be done. There is a conda version of the databricks runtime docker images, but they are ostensibly less up-to-date than the pip versions.

Hi Databricks,

After running pip install --upgrade databrickslabs-jupyterlab==2.2.1, I encounter ModuleNotFoundError: No module named 'version_parser'.

Where can I find version_parser to install it?

Best,

We have a setup using VPN to connect to our databricks clusters.

I want to be able to connect to the cluster using its private IP.

I've tried just changing the IP in the ssh config, but that gets overridden.

Any ideas?

Hi!

First, thank you for your work!

I'm wondering if I can somehow specify custom environment variables for my SSH kernel.

I have some custom libraries for which I need to set PYTHONPATH, LD_LIBRARY_PATH and some other environment variables. When I use plain Databricks notebooks, I have my own Docker image and init scripts where I set these variables and add them to .bashrc. But when I run JupyterLab with the Databricks integration, I don't see those variables. The only workaround I've found so far is to edit local.py in this library and set up my environment there.

Obviously, this is a rather dirty hack.
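As a less invasive alternative to editing local.py, the variables could be applied from inside the notebook, assuming the init script also writes them to a file on the cluster as export KEY=VALUE lines (for example a hypothetical /dbfs/env/custom_env.sh). The sketch updates os.environ and prepends PYTHONPATH entries to sys.path. It cannot fix LD_LIBRARY_PATH for the already-running kernel, because the dynamic loader reads that variable when a process starts; the new value only reaches subprocesses launched afterwards.

```python
import os
import shlex
import sys


def parse_exports(text: str) -> dict:
    """Collect KEY=VALUE pairs from lines of the form 'export KEY=VALUE'.

    Quotes are removed, but references such as $PATH are NOT expanded.
    """
    env = {}
    for line in text.splitlines():
        try:
            tokens = shlex.split(line, comments=True)
        except ValueError:  # e.g. unbalanced quotes; skip lines we cannot tokenize
            continue
        if len(tokens) == 2 and tokens[0] == "export" and "=" in tokens[1]:
            key, value = tokens[1].split("=", 1)
            env[key] = value
    return env


def apply_env(env: dict) -> None:
    """Set the variables in this kernel process and extend sys.path."""
    os.environ.update(env)
    # Insert in reverse so the PYTHONPATH order is preserved at the front of sys.path
    for path in reversed(env.get("PYTHONPATH", "").split(os.pathsep)):
        if path and path not in sys.path:
            sys.path.insert(0, path)
```

Usage in the first cell would then be apply_env(parse_exports(open("/dbfs/env/custom_env.sh").read())), with the path adjusted to wherever the init script writes the file.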

In Azure databricks environments we

```
(db-jlab) C02Y77B9JG5H:~ gobinath$ databrickslabs-jupyterlab $PROFILE -k -o 4116859307136712 -i 0520-162211-ilk548
Valid version of conda detected: 4.7.12
* Getting host and token from .databrickscfg
* Select remote cluster
Token for profile 'jupyterssh' is invalid
=> Exiting
(db-jlab) C02Y77B9JG5H:~ gobinath$

(db-jlab) C02Y77B9JG5H:~ gobinath$ databrickslabs-jupyterlab $PROFILE -s -i 0520-162211-ilk548
Valid version of conda detected: 4.7.12
* Getting host and token from .databrickscfg
=> ssh key '/Users/gobinath/.ssh/id_jupyterssh' does not exist
=> Shall it be created (y/n)? (default = n): y
=> Creating ssh key /Users/gobinath/.ssh/id_jupyterssh
=> OK
Token for profile 'jupyterssh' is invalid
=> Exiting
(db-jlab) C02Y77B9JG5H:~ gobinath$
```

I know the token is valid because I have verified it repeatedly with a direct CLI command:

```
(db-jlab) C02Y77B9JG5H:~ gobinath$ databricks clusters list --profile jupyterssh
0520-162211-ilk548  test_jupyter  RUNNING
(db-jlab) C02Y77B9JG5H:~ gobinath$
```
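When a tool rejects a token that the CLI accepts, one thing worth ruling out is that the profile holds values the tool handles differently. The hypothetical check_profile helper below reads a .databrickscfg (an INI file with host and token per profile) and flags missing values and a host carrying a query string, as in the ?o= host listed earlier in this thread. Whether databrickslabs-jupyterlab accepts such a host is not confirmed here; this only surfaces what the profile contains.

```python
import configparser
from urllib.parse import urlparse


def check_profile(cfg_text: str, profile: str) -> list:
    """Return human-readable problems found in one .databrickscfg profile."""
    parser = configparser.ConfigParser(interpolation=None)  # tokens may contain '%'
    parser.read_string(cfg_text)
    if profile != configparser.DEFAULTSECT and not parser.has_section(profile):
        return [f"profile '{profile}' not found"]
    section = parser[profile]
    problems = []
    host = section.get("host", "")
    token = section.get("token", "")
    if not host:
        problems.append("host is missing")
    elif urlparse(host).query:
        problems.append(f"host contains a query string: {host}")
    if not token:
        problems.append("token is missing")
    return problems
```

Run it locally with the file's contents, e.g. check_profile(open(os.path.expanduser("~/.databrickscfg")).read(), "jupyterssh").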

After connecting successfully, I get a missing-argument error for 'pinned_mode' when I try to get the Spark context:

```
---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
<ipython-input-2-376bcb4d28bb> in <module>
      1 from databrickslabs_jupyterlab.connect import dbcontext, is_remote
----> 2 dbcontext()

/databricks/python/lib/python3.7/site-packages/databrickslabs_jupyterlab/connect.py in dbcontext(progressbar)
    179         # ... and connect to this gateway
    180         #
--> 181         gateway = get_existing_gateway(port, True, auth_token)
    182         print(". connected")
    183         # print("Python interpreter: %s" % interpreter)

TypeError: get_existing_gateway() missing 1 required positional argument: 'pinned_mode'
```

I found that databricks-connect supports Jupyter, and I confirmed that JupyterLab works with databricks-connect by following this guide:

https://docs.databricks.com/dev-tools/databricks-connect.html#jupyter

Which one should I use for JupyterLab with Databricks: this library or databricks-connect? Is development on this repo continuing? I'm wondering where the Databricks team will focus its efforts for JupyterLab integration.

Hi!

Do you have plans to support the new JupyterLab 3? If so, are there any approximate dates, and can I help somehow?

Dataframes render fine in local Jupyter notebook, but matplotlib and plotly plots do not.

For matplotlib, the plot simply does not render:

For plotly, the error when using the default plotly renderer is:

When I change the default renderer to "notebook", nothing is displayed, just as with matplotlib. I can make plotly work with a very hacky workaround: saving the plot as HTML and then displaying the HTML.
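The same HTML route can be applied to matplotlib, assuming (as the plotly workaround suggests) that HTML output reaches the local frontend while image outputs do not. This sketch renders the figure to PNG in memory with the headless Agg backend and embeds it as a base64 data URI; figure_to_img_tag is a hypothetical helper name.

```python
import base64
import io

import matplotlib

matplotlib.use("Agg")  # headless backend: render to memory, no GUI needed on the driver
import matplotlib.pyplot as plt


def figure_to_img_tag(fig) -> str:
    """Render a matplotlib figure to PNG and wrap it in an <img> data-URI tag."""
    buf = io.BytesIO()
    fig.savefig(buf, format="png")
    plt.close(fig)  # the figure has been rendered; free it
    encoded = base64.b64encode(buf.getvalue()).decode("ascii")
    return f'<img src="data:image/png;base64,{encoded}"/>'
```

In the notebook, display it with from IPython.display import HTML, display and then display(HTML(figure_to_img_tag(fig))). For plotly, the equivalent without writing a file is display(HTML(fig.to_html(include_plotlyjs="cdn", full_html=False))).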
