Comments (33)

Hi @bitprophet

We use Paramiko to move large CSV files between machines over long distance WAN connections (could be from a 1Mbps to 1Gbps link depending on location). We've noticed the long transfer times noted here in #60, in our use case the issue was when the transport was using compression. I know this might not apply to all the folks here with #60 issues but if you have compression read on.

Our test case is a 1.3Gb CSV file, to be moved between two machines connected by a 100Mbps lan segment. We're running RHEL 7.3, paramiko-1.15.2 and Python 3.4.1 Sending machine is using Paramiko and receiving end is running RHEL sshd.

After getting poor reports from our production instances using Paramiko with compression we did some tests looking at multiple ways to move the file between the same servers, see results below

| Transfer type | Avg time secs (3 runs) | Effective data rate |

|---|---|---|

| regular scp | 116 | 93 Mbps |

| scp with compression | 54 | 201 Mbps |

| gzip, scp, gunzip combo | 69 | 158 Mbps |

| Paramiko no compression | 117 | 93 Mbps |

| Paramiko with compression | 278 | 39 Mbps |

This showed that Paramiko with compression was by far the slowest option, so we went digging...

The root cause of the issue in the choice of compression level in paramiko/paramiko/compress.py

import zlib

class ZlibCompressor (object):

def __init__(self):

self.z = zlib.compressobj(9)

Its hard coded to 9 which is Zlib's max compression (its default is the same as gzip = 6)

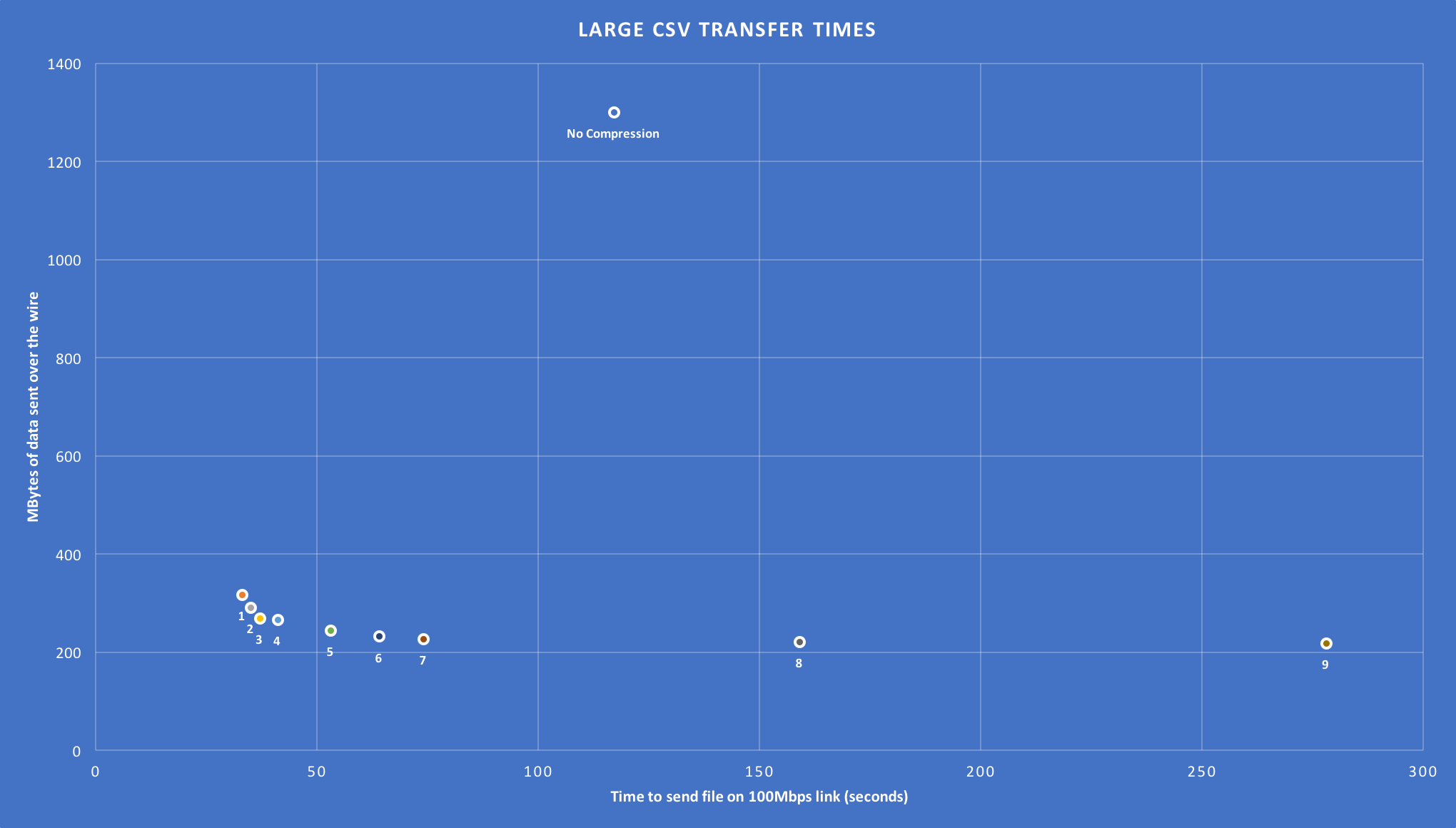

To prove this out, we did the same test setting the zlib.compressobj level to 0 through 9, shown below. We used sshd set to DEBUG level logging on the far end to record exactly how many bytes passed over the WAN/LAN.

| lib Compression level | Time to send (secs) | Data over WAN MBytes | Compression % |

|---|---|---|---|

| 0 | 117 | 1,301 | 0% |

| 1 | 33 | 317 | 76% |

| 2 | 35 | 291 | 78% |

| 3 | 37 | 269 | 79% |

| 4 | 41 | 266 | 80% |

| 5 | 53 | 245 | 81% |

| 6 | 64 | 232 | 82% |

| 7 | 74 | 227 | 83% |

| 8 | 159 | 221 | 83% |

| 9 | 278 | 219 | 83% |

Clearly this data is only valid for our test case of a very big CSV file, but in general we find that compression set to 9 is a bad trade off in CPU and time vs the actual gain on compression. From our data setting the level to 8 and 9 is a terrible choice while the levels 1-4 give you a pretty good bang for the buck, a lot of this will depend just how constrained each end users WAN or LAN connection really is.

The simple fix for many people will be to alter the call to zlib.compressobj() and let zlib default to 6 or a more elegant solution would be to allow the API to pass the users requested level of compression. We will be happy to submit a PR for this change.

from paramiko.

Just one more note to say that the compression slowness I commented on above may also explain why a lot of people have uni-directional slowness.

The compression level is chosen by the sending end, so for example if we are doing Paramiko -> sshd put of a file with compression, we would see slow times, high CPU. But getting exactly the same file from sshd -> Paramiko will be good performance as its the end running sshd thats doing the compression.

It could also explain the issue noted above by @Dreamsorcerer where txt files are quick and .png's are slow. This is due to the fact the trying to re-compress an already compressed file, especially at level 9 will take much longer than squishing a txt file.

Pull Request is in for this #1037

from paramiko.

commented on September 24, 2024

commented on September 24, 2024

I also experienced similar problems - let me know if I can help to debug the issue!

from paramiko.

I am seeing this as well, and it is even worse on windows machines. So far I was unable to find the root cause from profiling.

from paramiko.

The speed problems were a blocker for me. I have tested paramiko and the fork ssh (https://github.com/bitprophet/ssh)

I now have moved to pylibssh2 (https://github.com/wallix/pylibssh2).

from paramiko.

I have seen this issue as well, and have attempted to trace the problem with the profiler.

During lengthy 'put' transfers, there is significant time spent reading the response packets from the connection. My suspicion is that self.sftp.sock.recv_ready() in SFTPFile._write() is returning true (since there is at least 1 byte available), but the response has not yet been fully received, so the calls below self.sftp._read_response(req) (also in SFTPFile._write() block waiting for the complete response. This makes the transfer behave similar to the non-pipelined mode since there is still a blocking read for each packet written.

My klugey workaround -- placing a timer around the 'if not self.pipelined...' block that limits it to once per second -- increases the same-host put speed rate from 11 MB/sec to 23 MB/sec (vs. command-line sftp at 74 MB/sec).

from paramiko.

@wwh Indeed, there is a loop with no delay that's likely doing nothing but consuming cycles while checking if there's data as fast as possible. So in fact it's reading in chunks as small as possible. Clients like lftp implement something smarter, lftp itself only reads out the buffer when the data in the buffer is larger than the size of the read (which is 64k) or it suspends the connection. (Beware that lftp is multithreaded though)

https://github.com/lavv17/lftp/blob/89487eb2d7ff3d320ee8441ad3e7f4eec0d28fc5/src/SFtp.cc#L237

from paramiko.

I've definitely noticed that SFTP is pretty slow, and have complaints about that from Fabric users as well. Experienced it myself just the other night, even.

Fabric itself recently fixed a potentially similar problem in its command execution stuff, where it was using Paramiko's channel read methods to read byte-by-byte -- super inefficient. If Paramiko itself is doing something similar in the SFTP lib, fixing that would be pretty great. I'll try to take a look soon.

from paramiko.

I am wondering that is this problem solved?

from paramiko.

Unfortunately I still haven't had a chance to dig into this and it hasn't been a pain point for me recently. Totally willing to field a pull request if somebody has more time to dig & figure it out, though.

from paramiko.

I have managed to push it up to 33MB/sec localhost -> localhost (which seems to be the limit) whitout compression and bulk checking the responses every 32MB transfered (i.e every 100 _write call in SFTPFile.py). To me this is an okay compromise since you are not ignoring to check the ACKs from the server, you are also making sure to not get the error too late in a large transfer. This works for smaller files too, since there is a cleanup routine being called when the file has been transfered.

from paramiko.

Is this bug still open? I noticed a very heavy CPU usage during a fabric put file. It's not even a big file, just some 11kb, but it takes 7 seconds and full CPU usage just to send the file. The same transfer with scp takes only 0.2 seconds.

System: Fedora 20

Fabric 1.7.0

Paramiko 1.15.1

from paramiko.

Could swear I saw recent chatter about this, yes, but I cannot find any other open tickets matching appropriate keywords. @dioni21 if you're able to do some quick bisecting with versions (eg try 1.10, 1.13, etc, narrow down whether this got worse recently or if it's constant always) that might help give us a clue. Thanks!

from paramiko.

Hi @bitprophet ,

My bysecting found no difference at all. But I managed to "locate" the problem.

Using a test file with 11583 bytes, I my results were:

Fabric==1.5.0

ecdsa==0.11

paramiko==1.15.1

pycrypto==2.6.1

=> Elapsed time: 7.620926 seconds

Fabric==1.4.4

pycrypto==2.6.1

ssh==1.8.0

=> Elapsed time: 0.423845 seconds

This elapsed time is just around the put operation:

t1 = time.time()

put(tmpfile,tmpfile)

print 'Elapsed: %f seconds' % (time.time() - t1)

Apparently, this problem has always been around ever since Fabric migrated from ssh to Paramiko.

from paramiko.

FYI,

I managed to do some profiling with these versions (latest):

Fabric==1.10.0

ecdsa==0.11

paramiko==1.15.1

pycrypto==2.6.1

The code used:

pr = cProfile.Profile()

pr.enable()

put(tmpfile,tmpfile)

pr.disable()

pr.dump_stats("profile.stats")

And the top results, sorted by cumulative time:

Ordered by: cumulative time

ncalls tottime percall cumtime percall filename:lineno(function)

1 0.000 0.000 13.042 13.042 network.py:640(host_prompting_wrapper)

1 0.000 0.000 13.042 13.042 operations.py:253(put)

1 0.000 0.000 13.024 13.024 sftp.py:29(__init__)

1 0.000 0.000 12.995 12.995 network.py:153(__getitem__)

1 0.000 0.000 12.994 12.994 network.py:138(connect)

1 0.000 0.000 12.994 12.994 network.py:381(connect)

1 0.000 0.000 12.765 12.765 client.py:75(load_system_host_keys)

1 0.017 0.017 12.765 12.765 hostkeys.py:79(load)

2831 0.006 0.000 12.322 0.004 hostkeys.py:188(check)

2833 1.755 0.001 12.287 0.004 hostkeys.py:127(lookup)

119315 1.233 0.000 5.465 0.000 hostkeys.py:258(hash_host)

119315 3.101 0.000 4.158 0.000 util.py:315(constant_time_bytes_eq)

119331 0.799 0.000 1.417 0.000 hmac.py:27(__init__)

14323543 1.013 0.000 1.013 0.000 {ord}

238630 0.543 0.000 0.903 0.000 base64.py:310(encodestring)

240293 0.186 0.000 0.830 0.000 py3compat.py:48(u)

4101533 0.718 0.000 0.718 0.000 {method 'startswith' of 'str' objects}

240293 0.240 0.000 0.603 0.000 {method 'decode' of 'str' objects}

...

Apparently, something in hostkeys is taking about 7 seconds (without profiling). Not much in a single run, but after hundreds of runs a day, it gets boring.

from paramiko.

Yea, the hostkeys related stuff is a known thing (#437 I believe). So this may just be another facet of that same issue.

from paramiko.

I'm encountering serious performance issues too. Downloading a directory of 313 items totalling 4.2 MB through my file browser takes me about 10 seconds, with paramiko it takes in the region of an hour.

from paramiko.

Printing out the files as they are downloaded, I can see that it has downloaded a bunch of text files in the region of 60KB each without a problem, but when it gets to a .png that is only 5.5KB it hangs for atleast several minutes.

from paramiko.

Oh, there are other .png's before that which it has also downloaded fine. But, it always gets stuck at the same file.

from paramiko.

Hi - I am experiencing similiar issues of performance using Paramiko and PySFTP libraries for SFTP downloads of files in the 30-40MB range. Using the package 6.6p1-2ubuntu2 for SFTP client in Ubuntu 14.04 is giving me about 4x better download speed.

I have tried to actively debug this issue and tune some parameters but I cannot figure it out. Curious if anyone has solved this issue or knows of some resources I may be able to use to debug this performance problem.

from paramiko.

Is this thread related to this other one marked as closed?

#175

from paramiko.

There are (were) two different problems. #175 solved a transfer speed problem, but I still have speed problems during key exchange.

from paramiko.

We are facing similar problem. 500 MB file SFTP is taking 90+ minutes. Tried changing window_size to 2147483647 but it did not help. Is there any other parameter which needs to be changed?

8 MB file is taking 50 seconds

8 KB file is taking 0.3 seconds

from paramiko.

@mukpat, on large files sending data in chunks may help, see #892

from paramiko.

Gonna be real ecstatic if that change fixes it in some/many/most cases. (FTR the hardcoded level 9 compression looks to be an arbitrary choice from literally the start of the project, 12 years ago. 😮) Thanks for doing the detailed digging, @DrNeutron!

from paramiko.

Quick note about this: while testing #1037, it took me an embarrassingly long time to notice that no compression occurs by default - even though debug logs imply negotiation is occurring, Paramiko disables compression (by negotiating with just an option list of ["none"]) unless you explicitly say Client.connect(compress=True) (or manually call Transport.use_compression(True), if not using Client.)

So while I'm still hoping #1037 fixes the issue for folks egressing files and explicitly using compression, I wonder if that actually covers all the various "SFTP is slow" reports we've gotten over time - presumably the average user isn't remembering (or wanting) to enable compression.

from paramiko.

I came across this here after I did a speed test in my implementation and was very surprised: Via loopback I only got just about 1.1 MByte/s of data transfer rate. This is a show stopper. As this obviously has not yet been resolved till now I need to reject the use of paramiko :-(

from paramiko.

does any has a solution for slow paramiko performance. i am transferring 15 GB of data which takes around 45 minutes. normal scp takes only around 7 minutes.

any welcome would be great. thanks

from paramiko.

Same here - is this a recent regression?

from paramiko.

same problem

paramiko only 4MB/s

but command scp, sftp nearly 100MB/s

I try to change window_size, max_package_size and compress, no change.

I set window_size, max_package_size as 4MB and get the log

DEB 2020-02-24 13:53:14,571 paramiko.transport: starting thread (client mode): 0xe47d510L

DEB 2020-02-24 13:53:14,572 paramiko.transport: Local version/idstring: SSH-2.0-paramiko_2.6.0

DEB 2020-02-24 13:53:14,588 paramiko.transport: Remote version/idstring: SSH-2.0-OpenSSH_7.4

INF 2020-02-24 13:53:14,588 paramiko.transport: Connected (version 2.0, client OpenSSH_7.4)

DEB 2020-02-24 13:53:14,594 paramiko.transport: kex algos:[u'curve25519-sha256', u'[email protected]', u'ecdh-sha2-nistp256', u'ecdh-sha2-nistp384', u'ecdh-sha2-nistp521', u'diffie-hellman-group-exchange-sha256', u'diffie-hellman-group16-sha512', u'diffie-hellman-group18-sha512', u'diffie-hellman-group-exchange-sha1', u'diffie-hellman-group14-sha256', u'diffie-hellman-group14-sha1', u'diffie-hellman-group1-sha1'] server key:[u'ssh-rsa', u'rsa-sha2-512', u'rsa-sha2-256', u'ecdsa-sha2-nistp256', u'ssh-ed25519'] client encrypt:[u'[email protected]', u'aes128-ctr', u'aes192-ctr', u'aes256-ctr', u'[email protected]', u'[email protected]', u'aes128-cbc', u'aes192-cbc', u'aes256-cbc', u'blowfish-cbc', u'cast128-cbc', u'3des-cbc'] server encrypt:[u'[email protected]', u'aes128-ctr', u'aes192-ctr', u'aes256-ctr', u'[email protected]', u'[email protected]', u'aes128-cbc', u'aes192-cbc', u'aes256-cbc', u'blowfish-cbc', u'cast128-cbc', u'3des-cbc'] client mac:[u'[email protected]', u'[email protected]', u'[email protected]', u'[email protected]', u'[email protected]', u'[email protected]', u'[email protected]', u'hmac-sha2-256', u'hmac-sha2-512', u'hmac-sha1'] server mac:[u'[email protected]', u'[email protected]', u'[email protected]', u'[email protected]', u'[email protected]', u'[email protected]', u'[email protected]', u'hmac-sha2-256', u'hmac-sha2-512', u'hmac-sha1'] client compress:[u'none', u'[email protected]'] server compress:[u'none', u'[email protected]'] client lang:[u''] server lang:[u''] kex follows?False

DEB 2020-02-24 13:53:14,594 paramiko.transport: Kex agreed: [email protected]

DEB 2020-02-24 13:53:14,594 paramiko.transport: HostKey agreed: ssh-ed25519

DEB 2020-02-24 13:53:14,595 paramiko.transport: Cipher agreed: aes128-ctr

DEB 2020-02-24 13:53:14,595 paramiko.transport: MAC agreed: hmac-sha2-256

DEB 2020-02-24 13:53:14,595 paramiko.transport: Compression agreed: none

DEB 2020-02-24 13:53:14,612 paramiko.transport: kex engine KexCurve25519 specified hash_algo <built-in function openssl_sha256>

DEB 2020-02-24 13:53:14,613 paramiko.transport: Switch to new keys ...

DEB 2020-02-24 13:53:14,635 paramiko.transport: Attempting password auth...

DEB 2020-02-24 13:53:14,653 paramiko.transport: userauth is OK

INF 2020-02-24 13:53:14,688 paramiko.transport: Authentication (password) successful!

DEB 2020-02-24 13:53:14,700 paramiko.transport: [chan 0] Max packet in: 4194304 bytes

DEB 2020-02-24 13:53:15,002 paramiko.transport: Received global request "[email protected]"

DEB 2020-02-24 13:53:15,002 paramiko.transport: Rejecting "[email protected]" global request from server.

DEB 2020-02-24 13:53:15,042 paramiko.transport: [chan 0] Max packet out: 32768 bytes

DEB 2020-02-24 13:53:15,042 paramiko.transport: Secsh channel 0 opened.

DEB 2020-02-24 13:53:15,071 paramiko.transport: [chan 0] Sesch channel 0 request ok

INF 2020-02-24 13:53:15,095 paramiko.transport.sftp: [chan 0] Opened sftp connection (server version 3)

DEB 2020-02-24 13:53:15,096 paramiko.transport.sftp: [chan 0] open('/usr/local/bin/calicoctl', 'wb')

DEB 2020-02-24 13:53:15,103 paramiko.transport.sftp: [chan 0] open('/usr/local/bin/calicoctl', 'wb') -> 00000000

DEB 2020-02-24 13:53:25,195 paramiko.transport.sftp: [chan 0] close(00000000)

DEB 2020-02-24 13:53:25,201 paramiko.transport.sftp: [chan 0] stat('/usr/local/bin/calicoctl')

INF 2020-02-24 13:53:25,203 paramiko.transport.sftp: [chan 0] sftp session closed.

DEB 2020-02-24 13:53:25,204 paramiko.transport: [chan 0] EOF sent (0)

DEB 2020-02-24 13:53:25,206 paramiko.transport: EOF in transport thread

I notice that max packet in is 4MB, but Max packet out is still 32KB. Is it the reason?

from paramiko.

We had the same problem and we solved it with these two changes:

- We used the

set_pipelinedto reduce the time waiting to hear back from the server - We changed our data to

memoryviewobjects to take care of this bottleneck here:Line 523 in 5cf145f

Locally, for a 100MB file this reduced the time to transfer from 254s to 3.4s.

from paramiko.

FWIW to any future reader, it looks like this was fixed here (haven't tested it myself):

#892

from paramiko.

Also, more recently, I've found this issue too: pyinvoke/invoke#819

Which also causes severe speed drops, if you end up hitting it in your code.

from paramiko.

Related Issues (20)

- [BUG] - SFTP disables further prefetching on single prefetching failure

- [SUPPORT] - Deprecation Warning for TripleDES Algorithm in Paramiko HOT 39

- [BUG] - Attempting to resize window while using Fabric + Paramiko to stream cloudinit logs

- [SUPPORT] - <Paramiko rekey related support required>

- [SUPPORT] - Handle Tailscale SSH authentication HOT 3

- [BUG] CryptographyDeprecationWarning: TripleDES has been moved to cryptography HOT 1

- [BUG] - <paramiko.ssh_exception.NoValidConnectionsError: [Errno None] Unable to connect to port 22 on 10.0.89.23> HOT 1

- Anaconda release not up to date HOT 1

- [FEAT] - Allow selecting SSH-Agent (OpenSSH vs Pageant)

- [SUPPORT] - Print Statements Missing

- [BUG] - paramiko.ssh_exception.SSHException: not a valid OPENSSH private key file when connecting to RHEL 9.X

- [FEAT] - Publickey authentication should default to the algorithm of the private key

- [BUG] - Actual Error message overwrite during auth_publickey failure

- [SUPPORT] - Cannot connect to the SFTP server with error paramiko.ssh_exception.ChannelException: ChannelException(1, 'Administratively prohibited')

- [BUG] - SSH Certificates authentication and and ssh-agent

- [BUG] - Writing more than 32675 bytes decreases performance by 99% HOT 4

- [BUG] - BufferedFile.read can fail to return buffered when timeouts occur

- [BUG] - paramiko crashes on Ubuntu Jammy (22.04) in FIPS mode

- [BUG] - Sometimes loading an ECDSAKey or DSSKey with a comment of certain lengths results in "Invalid key". HOT 1

- [SUPPORT] - Allowing running CVE-2018-15473 again

Recommend Projects

-

React

React

A declarative, efficient, and flexible JavaScript library for building user interfaces.

-

Vue.js

🖖 Vue.js is a progressive, incrementally-adoptable JavaScript framework for building UI on the web.

-

Typescript

Typescript

TypeScript is a superset of JavaScript that compiles to clean JavaScript output.

-

TensorFlow

An Open Source Machine Learning Framework for Everyone

-

Django

The Web framework for perfectionists with deadlines.

-

Laravel

A PHP framework for web artisans

-

D3

Bring data to life with SVG, Canvas and HTML. 📊📈🎉

-

Recommend Topics

-

javascript

JavaScript (JS) is a lightweight interpreted programming language with first-class functions.

-

web

Some thing interesting about web. New door for the world.

-

server

A server is a program made to process requests and deliver data to clients.

-

Machine learning

Machine learning is a way of modeling and interpreting data that allows a piece of software to respond intelligently.

-

Visualization

Some thing interesting about visualization, use data art

-

Game

Some thing interesting about game, make everyone happy.

Recommend Org

-

Facebook

We are working to build community through open source technology. NB: members must have two-factor auth.

-

Microsoft

Open source projects and samples from Microsoft.

-

Google

Google ❤️ Open Source for everyone.

-

Alibaba

Alibaba Open Source for everyone

-

D3

Data-Driven Documents codes.

-

Tencent

China tencent open source team.

from paramiko.