D3 (or D3.js) is a free, open-source JavaScript library for visualizing data. Its low-level approach built on web standards offers unparalleled flexibility in authoring dynamic, data-driven graphics. For more than a decade D3 has powered groundbreaking and award-winning visualizations, become a foundational building block of higher-level chart libraries, and fostered a vibrant community of data practitioners around the world.

d3 / d3-contour

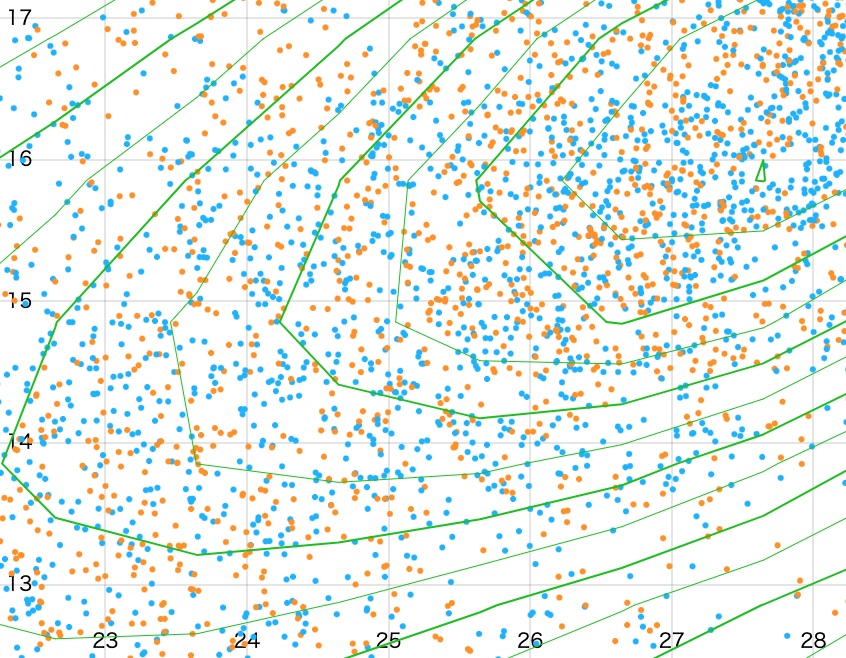

Compute contour polygons using marching squares.
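
To give a feel for what marching squares does, here is a minimal plain-JavaScript sketch. It is not d3-contour's source code or API: the function name `marchingSquaresSegments`, the midpoint placement of segment endpoints (no interpolation), and the way the two ambiguous saddle cases are resolved are all assumptions made for illustration. It only emits loose line segments, whereas the library assembles full polygons.

```javascript
// Illustrative marching-squares sketch (hypothetical helper, not d3-contour itself).
// values: row-major array of width * height samples; threshold: the iso-value.
function marchingSquaresSegments(values, width, height, threshold) {
  // 1 if the grid sample at (x, y) is at or above the threshold, else 0.
  const inside = (x, y) => (values[y * width + x] >= threshold ? 1 : 0);

  // Midpoints of the four edges of a unit cell, relative to its top-left corner.
  const T = [0.5, 0], R = [1, 0.5], B = [0.5, 1], L = [0, 0.5];

  // Segments for each of the 16 corner configurations.
  // Bit weights: top-left = 8, top-right = 4, bottom-right = 2, bottom-left = 1.
  // Cases 5 and 10 are saddles; this sketch arbitrarily keeps the "inside" corners connected.
  const table = [
    [], [[L, B]], [[B, R]], [[L, R]],
    [[T, R]], [[T, L], [B, R]], [[T, B]], [[T, L]],
    [[T, L]], [[T, B]], [[T, R], [L, B]], [[T, R]],
    [[L, R]], [[B, R]], [[L, B]], []
  ];

  const segments = [];
  for (let y = 0; y < height - 1; ++y) {
    for (let x = 0; x < width - 1; ++x) {
      const index =
        (inside(x, y) << 3) |
        (inside(x + 1, y) << 2) |
        (inside(x + 1, y + 1) << 1) |
        inside(x, y + 1);
      for (const [a, b] of table[index]) {
        // Translate the cell-local endpoints into grid coordinates.
        segments.push([[x + a[0], y + a[1]], [x + b[0], y + b[1]]]);
      }
    }
  }
  return segments;
}

// A 3×3 grid with a single peak in the middle yields a small diamond around it.
const grid = [0, 0, 0, 0, 1, 0, 0, 0, 0];
const diamond = marchingSquaresSegments(grid, 3, 3, 0.5);
console.log(diamond.length); // 4
```

Each cell is classified by which of its four corners lie at or above the threshold, and the resulting 4-bit index selects the segments from a lookup table. A production implementation would typically also interpolate the endpoints along each edge and stitch the segments into closed rings.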

Home Page: https://d3js.org/d3-contour

License: ISC License